Kathy Lüdge

Efficient Optimisation of Physical Reservoir Computers using only a Delayed Input

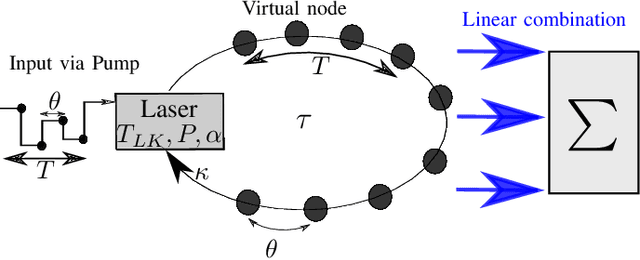

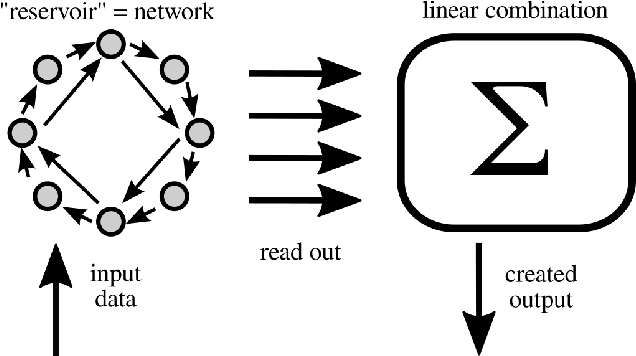

Jan 25, 2024

Abstract: We present an experimental validation of a recently proposed optimization technique for reservoir computing, using an optoelectronic setup. Reservoir computing is a robust framework for signal processing applications, and the development of efficient optimization approaches remains a key challenge. The technique we address leverages solely a delayed version of the input signal to identify the optimal operational region of the reservoir, simplifying the traditionally time-consuming task of hyperparameter tuning. We verify the effectiveness of this approach on different benchmark tasks and reservoir operating conditions.

Reducing hyperparameter dependence by external timescale tailoring

Jul 17, 2023

Abstract: Task-specific hyperparameter tuning in reservoir computing is an open issue and is of particular relevance for hardware-implemented reservoirs. We investigate the influence of directly including externally controllable task-specific timescales on the performance and hyperparameter sensitivity of reservoir computing approaches. We show that the need for hyperparameter optimisation can be reduced if timescales of the reservoir are tailored to the specific task. Our results are mainly relevant for temporal tasks requiring memory of past inputs, for example chaotic time-series prediction. We consider various methods of including task-specific timescales in the reservoir computing approach and demonstrate the universality of our message by looking at both time-multiplexed and spatially multiplexed reservoir computing.

Master memory function for delay-based reservoir computers with single-variable dynamics

Aug 28, 2021

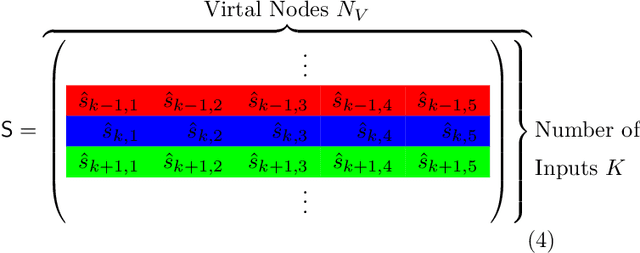

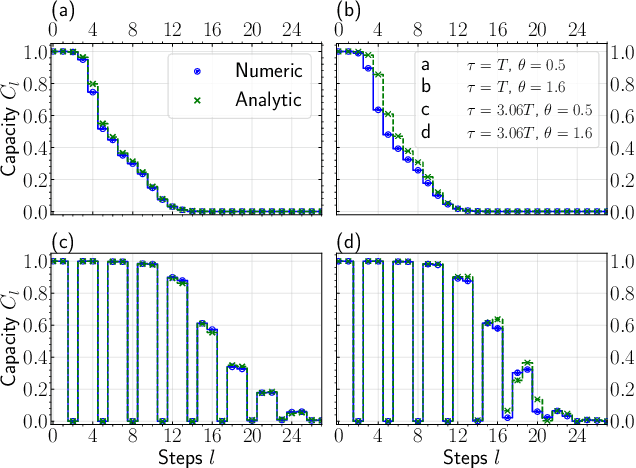

Abstract: We show that many delay-based reservoir computers considered in the literature can be characterized by a universal master memory function (MMF). Once computed for two independent parameters, this function provides linear memory capacity for any delay-based single-variable reservoir with small inputs. Moreover, we propose an analytical description of the MMF that enables its efficient and fast computation. Our approach can be applied not only to reservoirs governed by known dynamical rules such as Mackey-Glass or Ikeda-like systems but also to reservoirs whose dynamical model is not available. We also present results comparing the performance of the reservoir computer and the memory capacity given by the MMF.
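For orientation, the linear memory capacity mentioned in this abstract is commonly defined in the reservoir computing literature as shown below. This is the standard textbook form, not a formula quoted from the paper. The symbols are assumptions made for illustration: $u(t)$ is an i.i.d. random input, and $o_k(t)$ is the output of a linear readout trained to reconstruct the input from $k$ steps earlier.

```latex
\mathrm{MC}_k = \frac{\operatorname{cov}^2\bigl(u(t-k),\, o_k(t)\bigr)}
                     {\operatorname{var}\bigl(u(t)\bigr)\,\operatorname{var}\bigl(o_k(t)\bigr)},
\qquad
\mathrm{MC} = \sum_{k=1}^{\infty} \mathrm{MC}_k .
```

Each $\mathrm{MC}_k$ is a squared correlation coefficient and therefore lies between 0 and 1. The total is a measure of how many past input steps the reservoir can recall linearly.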

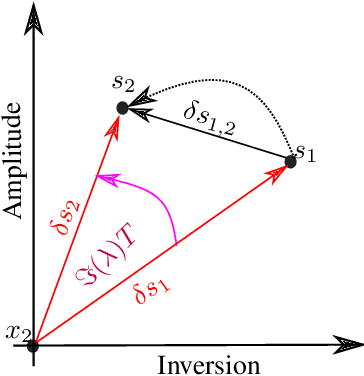

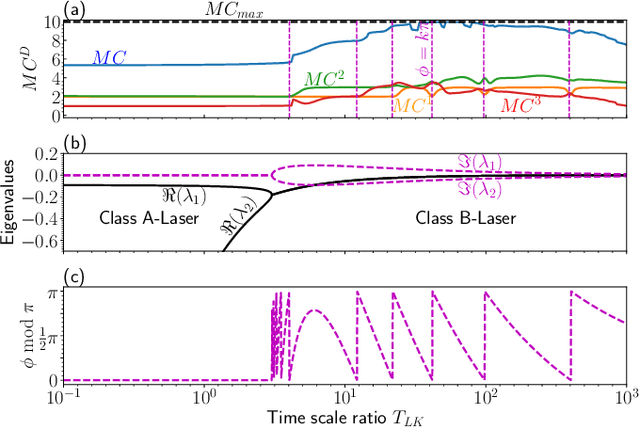

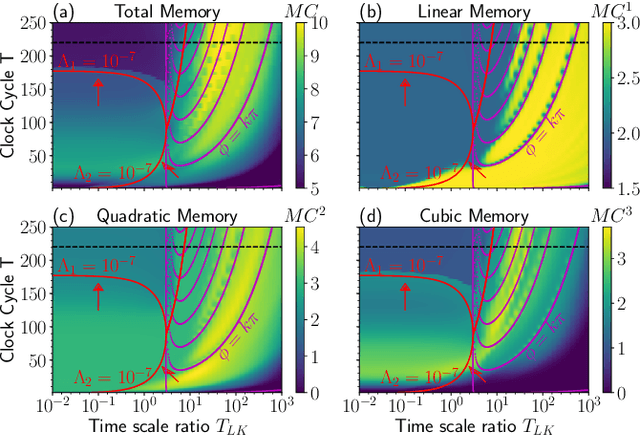

Improving Delay Based Reservoir Computing via Eigenvalue Analysis

Sep 16, 2020

Abstract: We analyze the reservoir computation capability of the Lang-Kobayashi system by comparing the numerically computed recall capabilities and the eigenvalue spectrum. We show that these two quantities are deeply connected, and thus the reservoir computing performance is predictable by analyzing the eigenvalue spectrum. Our results suggest that any dynamical system used as a reservoir can be analyzed in this way as long as the reservoir perturbations are sufficiently small. Optimal performance is found for a system with the eigenvalues having real parts close to zero and off-resonant imaginary parts.

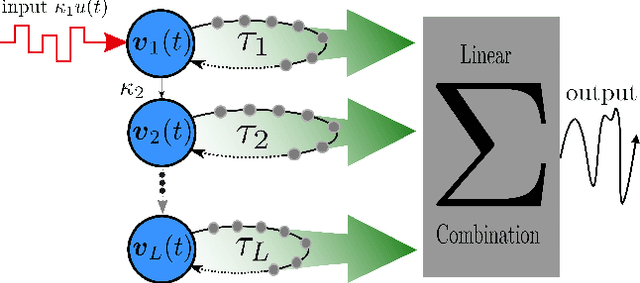

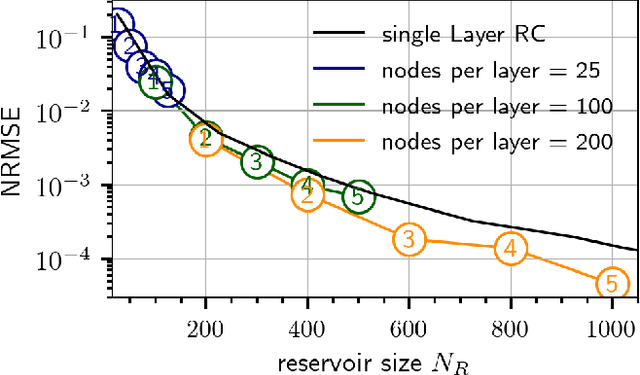

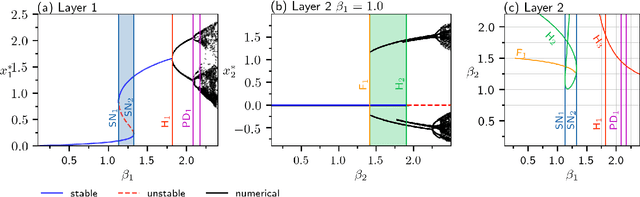

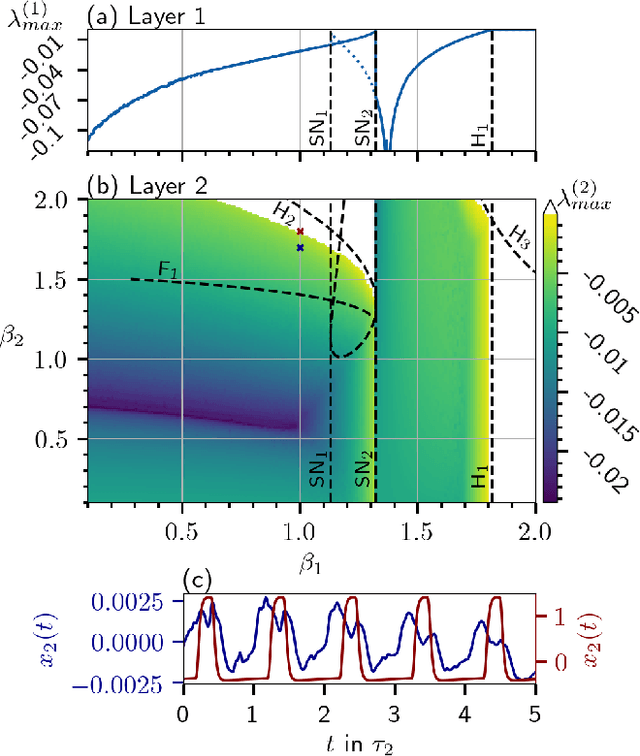

Deep Time-Delay Reservoir Computing: Dynamics and Memory Capacity

Jun 11, 2020

Abstract: The Deep Time-Delay Reservoir Computing concept utilizes unidirectionally connected systems with time-delays for supervised learning. We present how the dynamical properties of a deep Ikeda-based reservoir are related to its memory capacity (MC) and how that can be used for optimization. In particular, we analyze bifurcations of the corresponding autonomous system and compute conditional Lyapunov exponents, which measure the generalized synchronization between the input and the layer dynamics. We show how the MC is related to the system's distance to bifurcations or the magnitude of the conditional Lyapunov exponent. The interplay of different dynamical regimes leads to an adjustable distribution between linear and nonlinear MC. Furthermore, numerical simulations show resonances between the clock cycle and the delays of the layers in all degrees of the MC. Contrary to MC losses in single-layer reservoirs, these resonances can boost separate degrees of the MC and can be used, e.g., to design a system with maximum linear MC. Accordingly, we present two configurations that empower either high nonlinear MC or long-time linear MC.

Performance boost of time-delay reservoir computing by non-resonant clock cycle

May 07, 2019

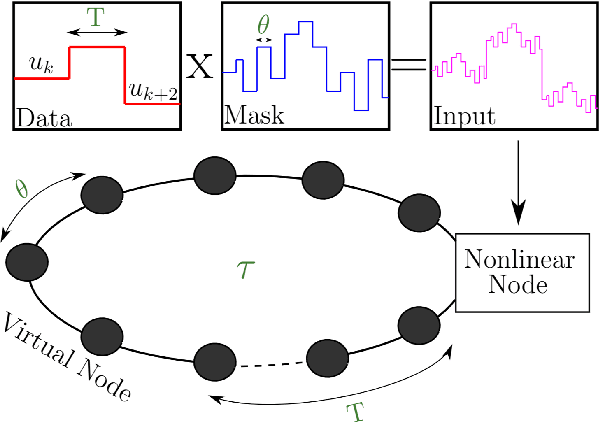

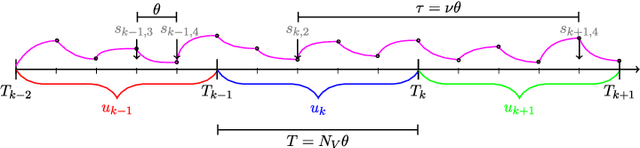

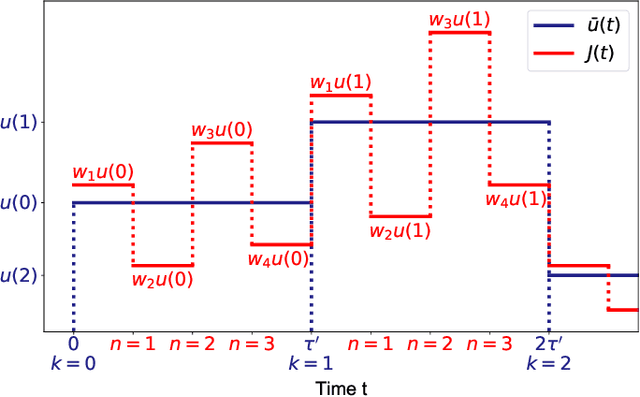

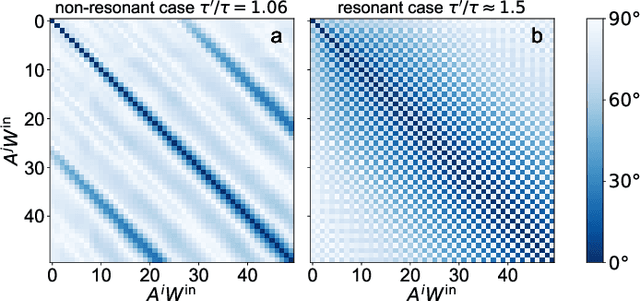

Abstract: The time-delay-based reservoir computing setup has seen tremendous success in both experiment and simulation. It allows for the construction of large neuromorphic computing systems with only a few components. However, until now the interplay of the different timescales has not been investigated thoroughly. In this manuscript, we investigate the effects of a mismatch between the time-delay and the clock cycle for a general model. Typically, these two timescales are considered to be equal. Here we show that the case of equal or rationally related time-delay and clock cycle can be actively detrimental and leads to an increase in the approximation error of the reservoir. In particular, we show that non-resonant ratios of these timescales have maximal memory capacities. We achieve this by translating the periodically driven delay-dynamical system into an equivalent network. Networks that originate from a system with resonant delay-times and clock cycles fail to utilize all of their degrees of freedom, which causes the degradation of their performance.
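One way to build intuition for the resonance effect described in this abstract is a toy index calculation. It is a simplified picture and not the paper's derivation. It assumes, hypothetically, that a clock cycle holds `n_nodes` equally spaced virtual nodes and that the delay exceeds the clock cycle by `shift` node spacings, so that delayed feedback links node `n` to node `(n + shift) % n_nodes`. It ignores any coupling between neighbouring nodes caused by a finite response time. Counting the cycles of that index shift shows how many mutually disconnected groups the delay coupling alone would produce.

```python
from math import gcd


def shift_cycles(n_nodes, shift):
    """Split indices 0..n_nodes-1 into cycles of n -> (n + shift) % n_nodes."""
    seen = [False] * n_nodes
    cycles = []
    for start in range(n_nodes):
        if seen[start]:
            continue
        # Follow the shift map from this node until it closes on itself.
        cycle = []
        node = start
        while not seen[node]:
            seen[node] = True
            cycle.append(node)
            node = (node + shift) % n_nodes
        cycles.append(cycle)
    return cycles


if __name__ == "__main__":
    n_nodes = 12  # hypothetical number of virtual nodes per clock cycle
    for shift in (0, 4, 5):
        groups = shift_cycles(n_nodes, shift)
        # The number of groups equals gcd(n_nodes, shift).
        print(shift, len(groups), gcd(n_nodes, shift))
```

In this toy picture a shift of 0 (delay equal to the clock cycle) leaves every node coupled only to itself, while a shift that is coprime to the node count links all nodes into a single ring.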

Reservoir computing with simple oscillators: Virtual and real networks

Feb 23, 2018

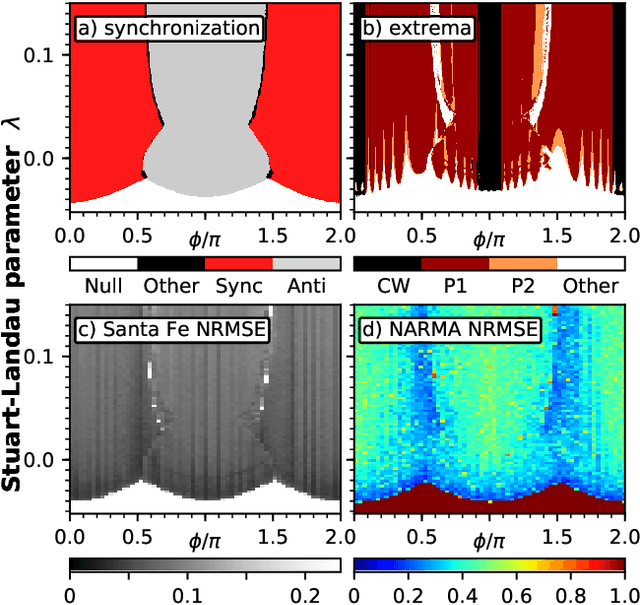

Abstract: The reservoir computing scheme is a machine learning mechanism that utilizes the naturally occurring computational capabilities of dynamical systems. One important subset of systems that has proven powerful in both experiment and theory is delay systems. In this work, we systematically investigate the reservoir computing performance of hybrid network-delay systems by evaluating the NARMA10 and the Santa Fe tasks. We construct 'multiplexed networks' that can be seen as intermediate steps on the scale from classical networks to the 'virtual networks' of delay systems. We find that the delay approach can be extended to the network case without loss of computational power, enabling the construction of faster reservoir computing substrates.
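For readers unfamiliar with the NARMA10 benchmark named in this abstract, the sketch below generates an input/target pair using the recurrence in the form commonly quoted in the literature. The coefficients, the uniform input range [0, 0.5], and the function name `narma10` are the usual conventions or illustrative choices. They are not taken from the abstract, and the paper may use a different variant.

```python
import random


def narma10(length, seed=0):
    """Return (u, y): a random input and its NARMA10 target sequence."""
    rng = random.Random(seed)
    # Input drawn i.i.d. from the uniform distribution on [0, 0.5].
    u = [rng.uniform(0.0, 0.5) for _ in range(length)]
    y = [0.0] * length  # the first ten targets stay at zero
    for t in range(9, length - 1):
        window = sum(y[t - 9:t + 1])  # y(t) + y(t-1) + ... + y(t-9)
        y[t + 1] = (0.3 * y[t]
                    + 0.05 * y[t] * window
                    + 1.5 * u[t - 9] * u[t]
                    + 0.1)
    return u, y


if __name__ == "__main__":
    u, y = narma10(200)
    print(len(u), len(y))
```

A reservoir is then trained to predict `y[t + 1]` from the input sequence alone. The task requires both nonlinearity and a memory of at least ten past inputs.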
