Seongho Bak

Learning to Place Unseen Objects Stably using a Large-scale Simulation

Mar 15, 2023

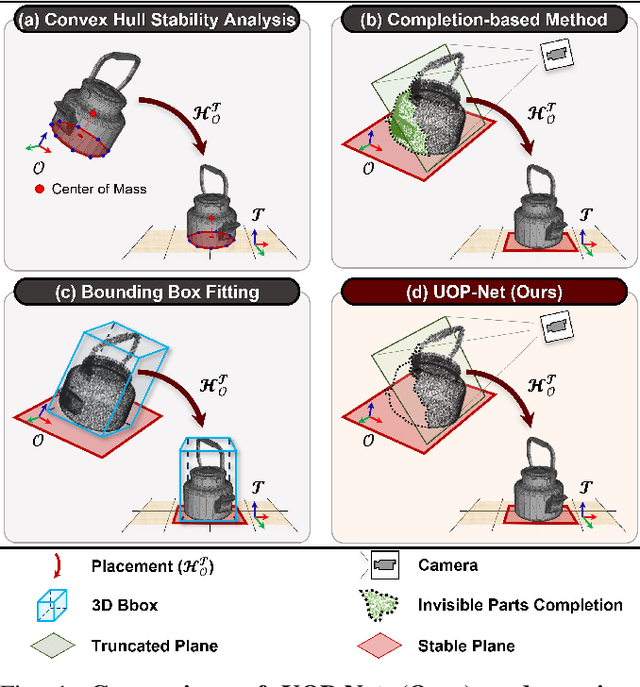

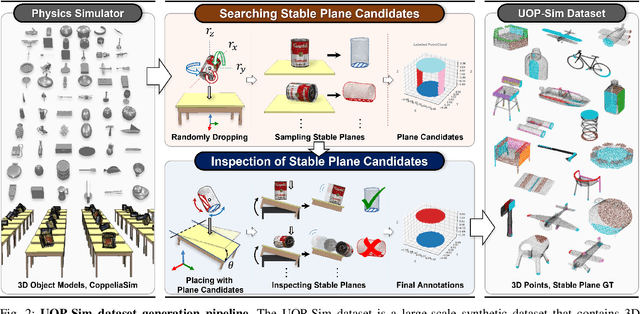

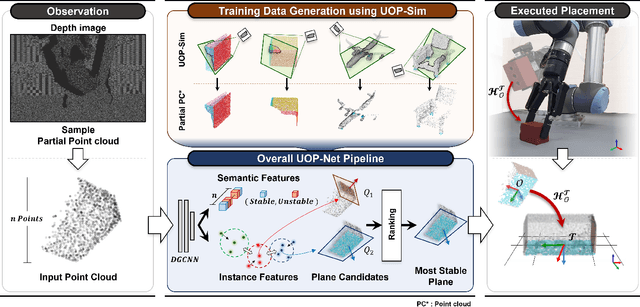

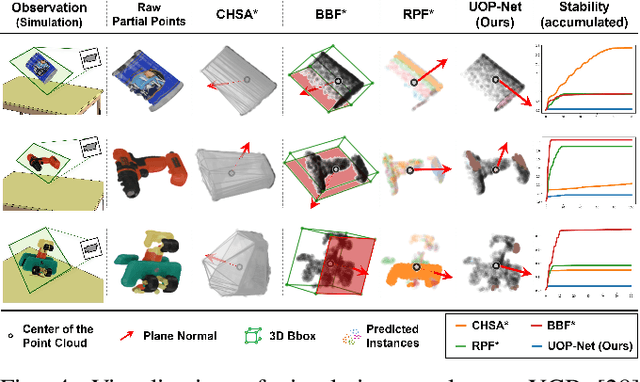

Abstract: Object placement is a crucial task for robots in unstructured environments, as it enables them to manipulate and arrange objects safely and efficiently. However, existing methods for object placement have limitations, such as requiring a complete 3D model of the object or being unable to handle complex object shapes, which restrict the applicability of robots in unstructured scenarios. In this paper, we propose an Unseen Object Placement (UOP) method that directly detects stable planes of unseen objects from a single-view, partial point cloud. We trained our model on large-scale simulation data so that it generalizes over the relationship between an object's shape and the properties of its stable planes, given a 3D point cloud. We verify our approach through simulation and real-world robot experiments, demonstrating state-of-the-art performance for placing objects observed only from a single, partial view. Our UOP approach enables robots to place objects stably even when the object's shape and properties are not fully known, providing a promising solution for object placement in unstructured environments. Our research has potential applications in domains such as manufacturing, logistics, and home automation. Additional results can be viewed at https://sites.google.com/uop-net, and we will release our code and dataset upon publication.

Automatic Detection of Injection and Press Mold Parts on 2D Drawing Using Deep Neural Network

Oct 22, 2021

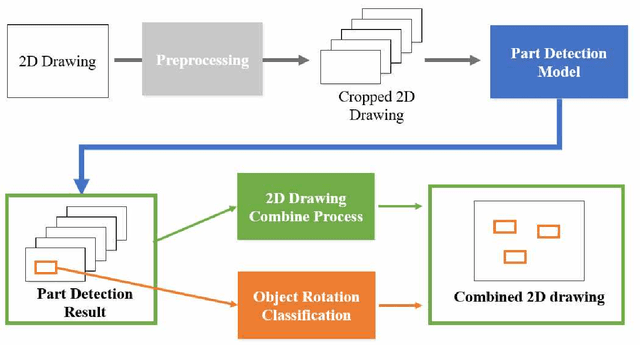

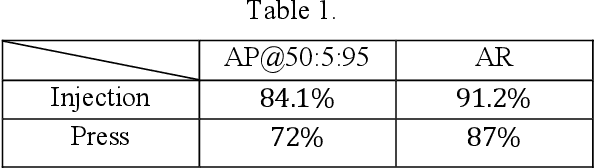

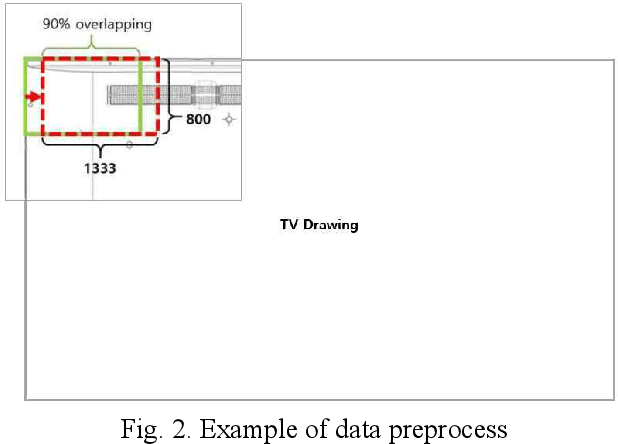

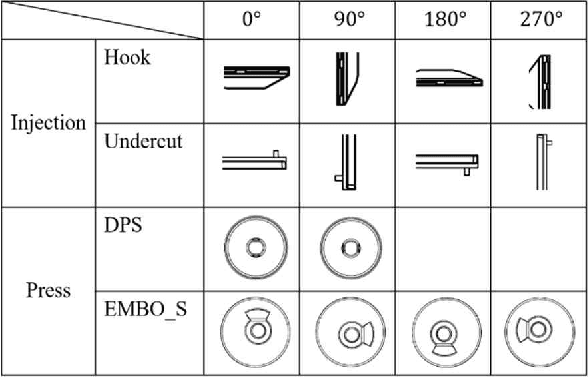

Abstract: This paper proposes a method to automatically detect the key feature parts in CAD drawings of commercial TVs and monitors using a deep neural network. We developed a deep learning pipeline that detects injection parts such as hook, boss, and undercut, and press parts such as DPS, Embo-Screwless, Embo-Burring, and EMBO, in 2D CAD drawing images. We first crop the drawing to a specific size to make training of the deep neural network efficient. Then, we use Cascade R-CNN to find the positions of the injection and press parts and ResNet-50 to predict their orientation. Finally, we convert the part positions found in the cropped images back to positions in the original image. As a result, we obtained detection accuracy of 84.1% AP (Average Precision) and 91.2% AR (Average Recall) for injection parts and 72.0% AP and 87.0% AR for press parts, and orientation accuracy of 94.4% and 92.0% for injection and press parts, respectively, which can facilitate faster industrial product design.
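The crop-then-map-back step described in the abstract can be sketched as follows. This is a minimal illustration, not the authors' code: the tile size, the stride, and the helper names `tile_origins` and `to_original` are all assumptions, and the detector itself (Cascade R-CNN in the paper) is left out. It only shows how a box found in a crop is shifted back into the coordinate frame of the full drawing.

```python
from typing import Iterator, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def tile_origins(width: int, height: int, tile: int, stride: int) -> Iterator[Tuple[int, int]]:
    """Yield top-left corners of fixed-size crops that cover the drawing.

    `tile` and `stride` are hypothetical parameters; the abstract only says
    the drawing is cropped "to a specific size".
    """
    xs = list(range(0, max(width - tile, 0) + 1, stride))
    ys = list(range(0, max(height - tile, 0) + 1, stride))
    # Add a last crop flush with the right/bottom edge so no region is missed.
    if xs[-1] + tile < width:
        xs.append(width - tile)
    if ys[-1] + tile < height:
        ys.append(height - tile)
    for y in ys:
        for x in xs:
            yield x, y


def to_original(box: Box, origin: Tuple[int, int]) -> Box:
    """Shift a box detected inside a crop back into full-drawing coordinates."""
    ox, oy = origin
    x0, y0, x1, y1 = box
    return (x0 + ox, y0 + oy, x1 + ox, y1 + oy)


origins = list(tile_origins(width=1000, height=600, tile=512, stride=256))
# A box found at (10, 20)-(30, 40) inside the crop whose corner is (256, 88).
mapped = to_original((10, 20, 30, 40), (256, 88))
```

With overlapping crops the same part can be detected more than once, so a real pipeline would typically merge duplicates (for example with non-maximum suppression) after mapping the boxes back; the abstract does not say how this is handled.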

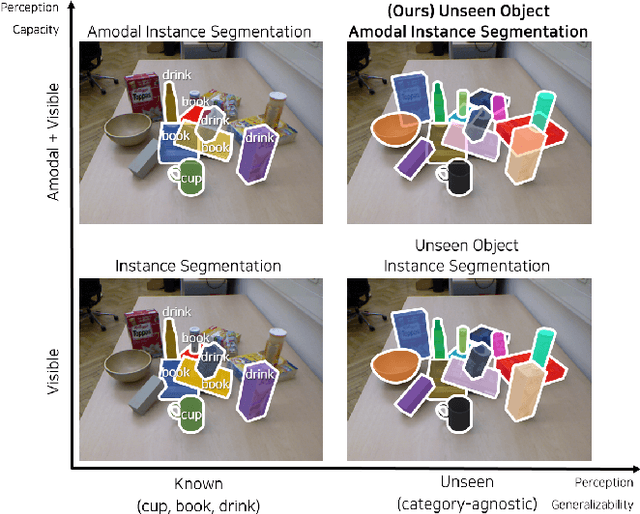

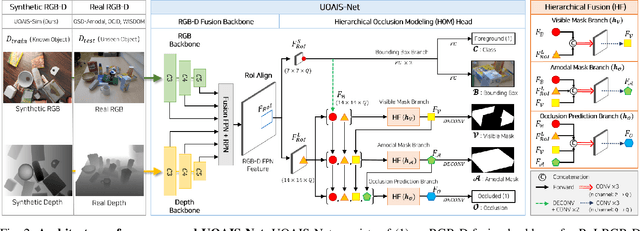

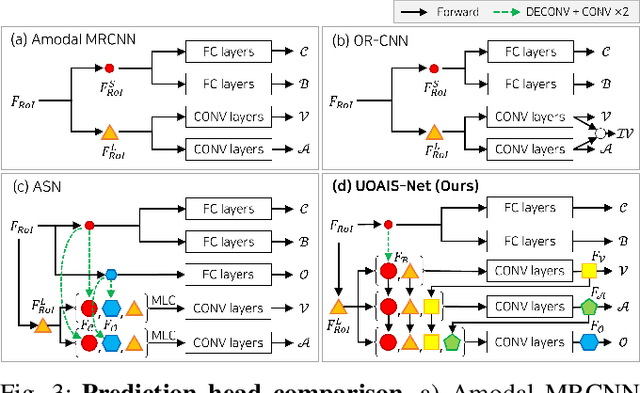

Unseen Object Amodal Instance Segmentation via Hierarchical Occlusion Modeling

Sep 23, 2021

Abstract: Instance-aware segmentation of unseen objects is essential for a robotic system in an unstructured environment. Although previous works achieved encouraging results, they were limited to segmenting only the visible regions of unseen objects. For robotic manipulation in a cluttered scene, amodal perception is required to handle objects that are occluded by others. This paper addresses Unseen Object Amodal Instance Segmentation (UOAIS) to detect 1) visible masks, 2) amodal masks, and 3) occlusions on unseen object instances. For this, we propose a Hierarchical Occlusion Modeling (HOM) scheme designed to reason about occlusion by assigning a hierarchy to feature fusion and prediction order. We evaluated our method on three benchmarks (tabletop, indoors, and bin environments) and achieved state-of-the-art (SOTA) performance. Robot demos for picking up occluded objects, code, and datasets are available at https://sites.google.com/view/uoais
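To make the relationship between the three outputs concrete, here is a small sketch of how a visible mask, an amodal mask, and an occlusion flag relate for a single instance. It is not the HOM scheme, which predicts occlusion with a learned network; the helper names and the `threshold` value are assumptions chosen for illustration.

```python
from typing import List

Mask = List[List[int]]  # binary mask, 1 = pixel belongs to the object


def occluded_region(amodal: Mask, visible: Mask) -> Mask:
    """Pixels inside the amodal (full-extent) mask that are not visible."""
    return [
        [a & (1 - v) for a, v in zip(amodal_row, visible_row)]
        for amodal_row, visible_row in zip(amodal, visible)
    ]


def occlusion_ratio(amodal: Mask, visible: Mask) -> float:
    """Fraction of the object's full extent that is hidden."""
    area = sum(map(sum, amodal))
    hidden = sum(map(sum, occluded_region(amodal, visible)))
    return hidden / area if area else 0.0


def is_occluded(amodal: Mask, visible: Mask, threshold: float = 0.05) -> bool:
    """Flag the instance as occluded; `threshold` is a hypothetical cutoff."""
    return occlusion_ratio(amodal, visible) > threshold


amodal = [[1, 1, 1, 1],
          [1, 1, 1, 1]]
visible = [[1, 1, 0, 0],
           [1, 1, 1, 0]]
hidden = occluded_region(amodal, visible)
ratio = occlusion_ratio(amodal, visible)
```

In a picking scenario, a flag like this could be used to decide which objects must be moved first before an occluded one can be grasped.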
