Sean C. Epstein

Rician likelihood loss for quantitative MRI using self-supervised deep learning

Jul 13, 2023

Abstract: Purpose: Previous quantitative MR imaging studies using self-supervised deep learning have reported biased parameter estimates at low SNR. Such systematic errors arise from the choice of Mean Squared Error (MSE) loss function for network training, which is incompatible with Rician-distributed MR magnitude signals. To address this issue, we introduce the negative log Rician likelihood (NLR) loss. Methods: A numerically stable and accurate implementation of the NLR loss was developed to estimate quantitative parameters of the apparent diffusion coefficient (ADC) model and intra-voxel incoherent motion (IVIM) model. Parameter estimation accuracy, precision and overall error were evaluated in terms of bias, variance and root mean squared error and compared against the MSE loss over a range of SNRs (5 - 30). Results: Networks trained with NLR loss show higher estimation accuracy than MSE for the ADC and IVIM diffusion coefficients as SNR decreases, with minimal loss of precision or total error. At high effective SNR (high SNR and small diffusion coefficients), both losses show comparable accuracy and precision for all parameters of both models. Conclusion: The proposed NLR loss is numerically stable and accurate across the full range of tested SNRs and improves parameter estimation accuracy of diffusion coefficients using self-supervised deep learning. We expect the development to benefit quantitative MR imaging techniques broadly, enabling more accurate parameter estimation from noisy data.
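To make the idea of a negative log Rician likelihood concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: the function names, the `SERIES_CUTOFF` switch point and the two-branch evaluation of log I0 are assumptions chosen for illustration. It uses the standard Rician density p(x | v, sigma) = (x / sigma^2) exp(-(x^2 + v^2) / (2 sigma^2)) I0(x v / sigma^2), and evaluates log I0 directly so that large arguments do not overflow.

```python
import math

SERIES_CUTOFF = 30.0  # hypothetical switch point between the two branches


def log_i0(z):
    """Log of the modified Bessel function I0(z) for z >= 0, without overflow."""
    if z < SERIES_CUTOFF:
        # Power series: I0(z) = sum_k ((z/2)^2)^k / (k!)^2
        q = 0.25 * z * z
        term, total, k = 1.0, 1.0, 0
        while term > 1e-17 * total:
            k += 1
            term *= q / (k * k)
            total += term
        return math.log(total)
    # Large-argument expansion:
    # I0(z) ~ exp(z) / sqrt(2*pi*z) * (1 + 1/(8z) + 9/(128z^2) + 225/(3072z^3))
    corr = 1.0 + 1.0 / (8 * z) + 9.0 / (128 * z**2) + 225.0 / (3072 * z**3)
    return z - 0.5 * math.log(2 * math.pi * z) + math.log(corr)


def rician_nll(signal, predicted, sigma):
    """Negative log Rician likelihood of one measured magnitude signal (> 0)
    given the noise-free model prediction and the noise level sigma."""
    s2 = sigma * sigma
    return (-math.log(signal) + 2.0 * math.log(sigma)
            + (signal * signal + predicted * predicted) / (2.0 * s2)
            - log_i0(signal * predicted / s2))


def mean_nlr_loss(signals, predictions, sigma):
    """Average the per-measurement negative log likelihood over a voxel."""
    pairs = list(zip(signals, predictions))
    return sum(rician_nll(x, v, sigma) for x, v in pairs) / len(pairs)


if __name__ == "__main__":
    # Illustrative numbers only: three noisy magnitudes and model predictions.
    print(mean_nlr_loss([1.0, 0.7, 0.4], [0.95, 0.65, 0.35], sigma=0.1))
```

In a self-supervised setting the predictions would come from a signal model (for example ADC or IVIM) evaluated at the network's parameter estimates, and an automatic-differentiation framework would replace the plain Python arithmetic used here.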

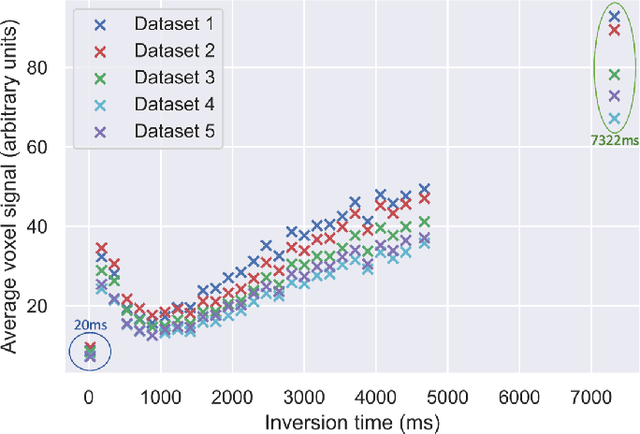

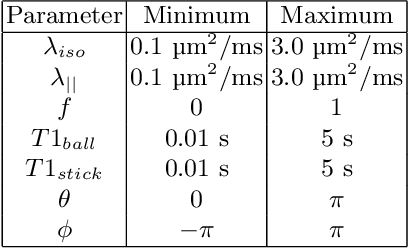

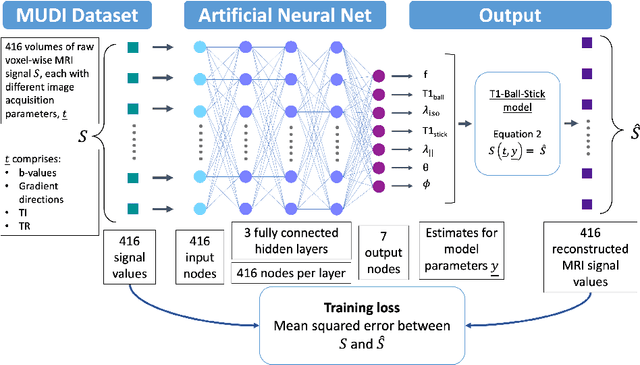

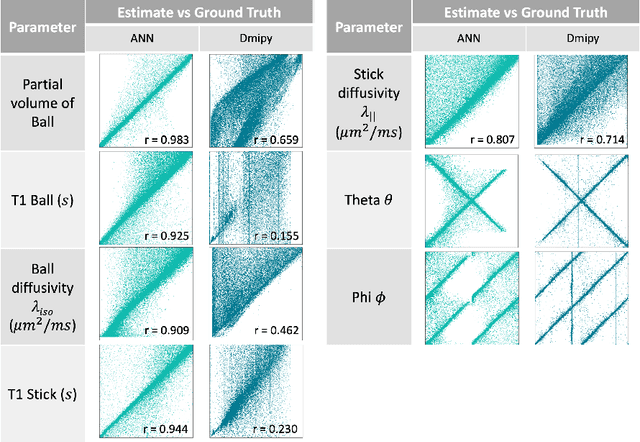

Fitting a Directional Microstructure Model to Diffusion-Relaxation MRI Data with Self-Supervised Machine Learning

Oct 05, 2022

Abstract: Machine learning is a powerful approach for fitting microstructural models to diffusion MRI data. Early machine learning microstructure imaging implementations trained regressors to estimate model parameters in a supervised way, using synthetic training data with known ground truth. However, a drawback of this approach is that the choice of training data impacts fitted parameter values. Self-supervised learning is emerging as an attractive alternative to supervised learning in this context. Thus far, both supervised and self-supervised learning have typically been applied to isotropic models, such as intravoxel incoherent motion (IVIM), as opposed to models where the directionality of anisotropic structures is also estimated. In this paper, we demonstrate self-supervised machine learning model fitting for a directional microstructural model. In particular, we fit a combined T1-ball-stick model to the multidimensional diffusion (MUDI) challenge diffusion-relaxation dataset. Our self-supervised approach shows clear improvements in parameter estimation and computational time, for both simulated and in-vivo brain data, compared to standard non-linear least squares fitting. Code for the artificial neural net constructed for this study is available for public use from the following GitHub repository: https://github.com/jplte/deep-T1-ball-stick

Choice of training label matters: how to best use deep learning for quantitative MRI parameter estimation

May 11, 2022

Abstract: Deep learning (DL) is gaining popularity as a parameter estimation method for quantitative MRI. A range of competing implementations have been proposed, relying on either supervised or self-supervised learning. Self-supervised approaches, sometimes referred to as unsupervised, have been loosely based on auto-encoders, whereas supervised methods have, to date, been trained on groundtruth labels. These two learning paradigms have been shown to have distinct strengths. Notably, self-supervised approaches have offered lower-bias parameter estimates than their supervised alternatives. This result is counterintuitive - incorporating prior knowledge with supervised labels should, in theory, lead to improved accuracy. In this work, we show that this apparent limitation of supervised approaches stems from the naive choice of groundtruth training labels. By training on labels which are deliberately not groundtruth, we show that the low-bias parameter estimation previously associated with self-supervised methods can be replicated - and improved on - within a supervised learning framework. This approach sets the stage for a single, unifying, deep learning parameter estimation framework, based on supervised learning, where trade-offs between bias and variance are made by careful adjustment of training label.

Task-driven assessment of experimental designs in diffusion MRI: a computational framework

Mar 15, 2021

Abstract: Purpose: To propose a task-driven computational framework for assessing diffusion MRI experimental designs which, rather than relying on parameter-estimation metrics, directly measures quantitative task performance. Theory: Traditional computational experimental design (CED) methods may be ill-suited to tasks, such as clinical classification, where outcome does not depend on parameter-estimation accuracy or precision alone. Current assessment metrics evaluate experiments' ability to faithfully recover microstructure parameters rather than their associated task performance. This work proposes a novel CED assessment method that addresses this shortcoming. For a given experimental design (protocol, parameter-estimation method, model, etc.), experiments are simulated start-to-finish and task performance is computed from receiver operating characteristic (ROC) curves and summary metrics such as area under the curve (AUC). Methods: Two experiments were performed: first a validation of the pipeline's task performance predictions in two clinical datasets, comparing in-silico predictions to real-world ROC/AUC; and second, a demonstration of the pipeline's advantages over traditional CED approaches, using two simulated clinical classification tasks. Results: Our computational method accurately predicts (a) the qualitative form of ROC curves, (b) the relative performance of different experimental designs, and (c) the absolute performance (AUC) of each experimental design. Furthermore, our method is shown to outperform traditional task-agnostic assessment methods. Conclusions: The proposed pipeline produces accurate, quantitative predictions of real-world task performance. Compared to current approaches, such task-driven assessment is more likely to identify experimental designs that perform well in practice. It provides the foundation for developing future task-driven CED frameworks.
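As an illustration of the AUC summary metric mentioned in this abstract, the sketch below computes the area under the ROC curve through its pairwise (Mann-Whitney) interpretation: the probability that a randomly chosen positive case scores higher than a randomly chosen negative case, with ties counted as one half. The function name and the example scores are hypothetical and are not taken from the paper's pipeline.

```python
def auc(positive_scores, negative_scores):
    """Area under the ROC curve via pairwise comparison of scores.

    Counts the fraction of (positive, negative) pairs in which the positive
    case has the higher score; ties contribute one half.
    """
    wins = 0.0
    for p in positive_scores:
        for n in negative_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positive_scores) * len(negative_scores))


if __name__ == "__main__":
    # Hypothetical classifier scores, e.g. an estimated parameter per subject.
    patients = [0.9, 0.8, 0.6]
    controls = [0.7, 0.3, 0.2]
    print(auc(patients, controls))
```

The double loop is quadratic in the number of cases, which is acceptable for a sketch; a rank-based formulation gives the same value more efficiently for large cohorts.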
