Sean A. P. Clouston

Inferring Effects of Major Events through Discontinuity Forecasting of Population Anxiety

Aug 29, 2025

Abstract: Estimating community-specific mental health effects of local events is vital for public health policy. While forecasting mental health scores alone offers limited insights into the impact of events on community well-being, quasi-experimental designs like the Longitudinal Regression Discontinuity Design (LRDD) from econometrics help researchers derive effects that are more likely to be causal from observational data. LRDDs aim to extrapolate the size of changes in an outcome (e.g. a discontinuity in running scores for anxiety) due to a time-specific event. Here, we propose adapting LRDDs beyond traditional forecasting into a statistical learning framework whereby future discontinuities (i.e. time-specific shifts) and changes in slope (i.e. linear trajectories) are estimated given a location's history of the score, dynamic covariates (other running assessments), and exogenous variables (static representations). Applying our framework to predict discontinuities in the anxiety of US counties from COVID-19 events, we found the task was difficult but more achievable as the sophistication of models was increased, with the best results coming from integrating exogenous and dynamic covariates. Our approach shows strong improvement ($r=+.46$ for discontinuity and $r = +.65$ for slope) over traditional static community representations. Discontinuity forecasting raises new possibilities for estimating the idiosyncratic effects of potential future or hypothetical events on specific communities.

Robust language-based mental health assessments in time and space through social media

Feb 25, 2023

Abstract: Compared to physical health, population mental health measurement in the U.S. is very coarse-grained. Currently, in the largest population surveys, such as those carried out by the Centers for Disease Control or Gallup, mental health is only broadly captured through "mentally unhealthy days" or "sadness", and limited to relatively infrequent state or metropolitan estimates. Through the large-scale analysis of social media data, robust estimation of population mental health is feasible at much higher resolutions, up to weekly estimates for counties. In the present work, we validate a pipeline that uses a sample of 1.2 billion Tweets from 2 million geo-located users to estimate mental health changes for the two leading mental health conditions, depression and anxiety. We find moderate to large associations between the language-based mental health assessments and survey scores from Gallup for multiple levels of granularity, down to the county-week (fixed effects $\beta = .25$ to $1.58$; $p<.001$). Language-based assessment allows for the cost-effective and scalable monitoring of population mental health at weekly time scales. Such spatially fine-grained time series are well suited to monitor effects of societal events and policies as well as enable quasi-experimental study designs in population health and other disciplines. Beyond mental health in the U.S., this method generalizes to a broad set of psychological outcomes and allows for community measurement in under-resourced settings where no traditional survey measures - but social media data - are available.

World Trade Center responders in their own words: Predicting PTSD symptom trajectories with AI-based language analyses of interviews

Nov 12, 2020

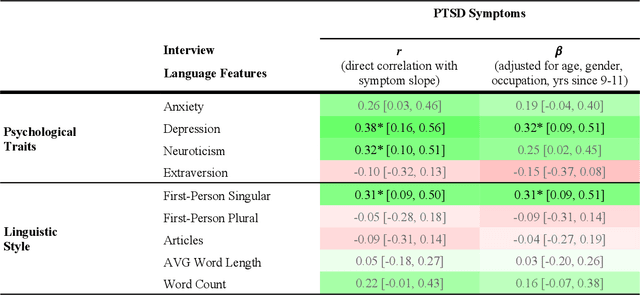

Abstract: Background: Oral histories from 9/11 responders to the World Trade Center (WTC) attacks provide rich narratives about distress and resilience. Artificial Intelligence (AI) models promise to detect psychopathology in natural language, but they have been evaluated primarily in non-clinical settings using social media. This study sought to test the ability of AI-based language assessments to predict PTSD symptom trajectories among responders. Methods: Participants were 124 responders whose health was monitored at the Stony Brook WTC Health and Wellness Program who completed oral history interviews about their initial WTC experiences. PTSD symptom severity was measured longitudinally using the PTSD Checklist (PCL) for up to 7 years post-interview. AI-based indicators were computed for depression, anxiety, neuroticism, and extraversion along with dictionary-based measures of linguistic and interpersonal style. Linear regression and multilevel models estimated associations of AI indicators with concurrent and subsequent PTSD symptom severity (significance adjusted by false discovery rate). Results: Cross-sectionally, greater depressive language (beta=0.32; p=0.043) and first-person singular usage (beta=0.31; p=0.044) were associated with increased symptom severity. Longitudinally, anxious language predicted future worsening in PCL scores (beta=0.31; p=0.031), whereas first-person plural usage (beta=-0.37; p=0.007) and use of longer words (beta=-0.36; p=0.007) predicted improvement. Conclusions: This is the first study to demonstrate the value of AI in understanding PTSD in a vulnerable population. Future studies should extend this application to other trauma exposures and to other demographic groups, especially under-represented minorities.
