Satya Gautam Vadlamudi

Explicability as Minimizing Distance from Expected Behavior

Mar 13, 2019

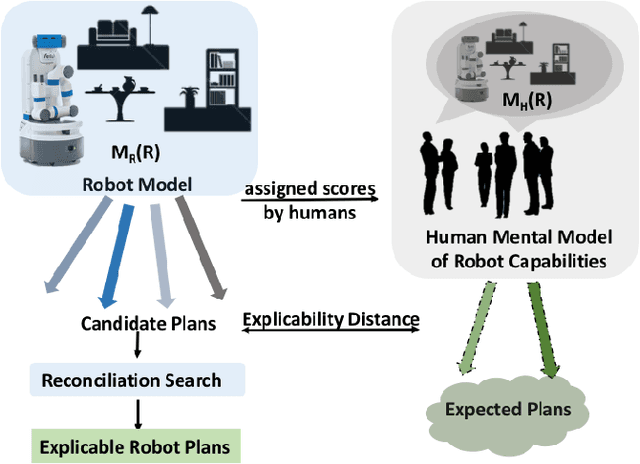

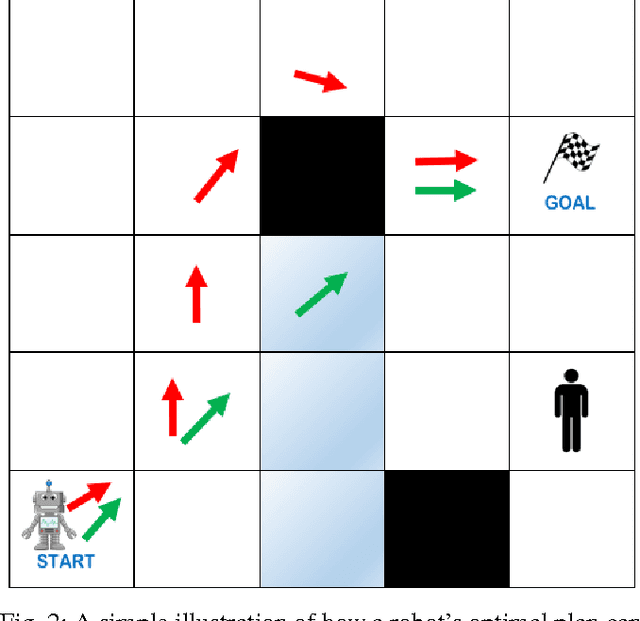

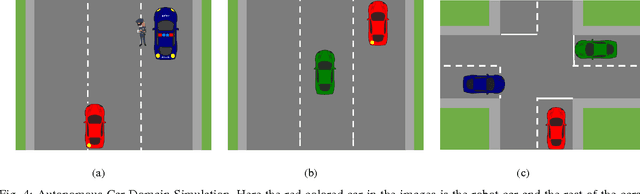

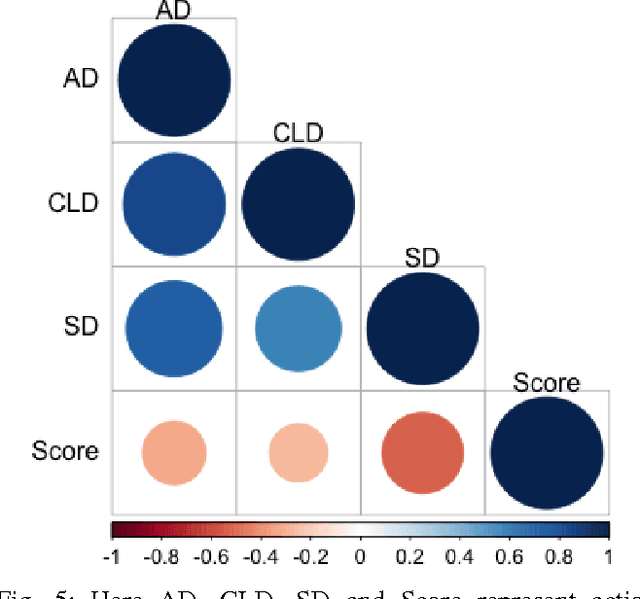

Abstract: In order to have effective human-AI collaboration, it is necessary to address how the AI agent's behavior is perceived by the humans-in-the-loop. When the agent's task plans are generated without such considerations, they may often exhibit behavior that is inexplicable from the human's point of view. This problem may arise from the human's partial or inaccurate understanding of the agent's planning model. Its implications range from increased cognitive load to more serious safety concerns around a physical agent. In this paper, we address this issue by modeling plan explicability as a function of the distance between the plan that the agent makes and the plan that the human expects it to make. We learn a regression model that maps plan distances to explicability scores, and we develop an anytime search algorithm that uses this model as a heuristic to produce progressively more explicable plans. We evaluate the effectiveness of our approach in a simulated autonomous car domain and a physical robot domain.
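The distance-then-score idea can be illustrated with a small Python sketch. It is not the paper's method and rests on several assumptions: plans are sequences of action names, a single Jaccard distance over action sets stands in for whatever plan-distance measures the learned model actually uses, the regression is a one-feature least-squares line, and the "anytime" behavior simply yields each improvement found in a stream of candidate plans rather than running the paper's heuristic search. All function names, driving actions, and training scores below are hypothetical.

```python
# Hypothetical sketch: plan distance -> learned explicability score -> anytime selection.
from typing import Callable, Iterable, Iterator, Sequence, Tuple

Plan = Sequence[str]  # assumption: a plan is a sequence of ground action names


def action_distance(plan: Plan, expected: Plan) -> float:
    """Jaccard distance between the action sets of two plans (0.0 = same actions)."""
    a, b = set(plan), set(expected)
    if not a and not b:
        return 0.0
    return 1.0 - len(a & b) / len(a | b)


def fit_linear(distances: Sequence[float], scores: Sequence[float]) -> Callable[[float], float]:
    """Ordinary least-squares fit of score = w0 + w1 * distance (one feature)."""
    n = len(distances)
    mean_d = sum(distances) / n
    mean_s = sum(scores) / n
    var = sum((d - mean_d) ** 2 for d in distances)
    cov = sum((d - mean_d) * (s - mean_s) for d, s in zip(distances, scores))
    w1 = cov / var  # requires at least two distinct training distances
    w0 = mean_s - w1 * mean_d
    return lambda d: w0 + w1 * d


def anytime_most_explicable(
    candidates: Iterable[Plan], expected: Plan, score: Callable[[float], float]
) -> Iterator[Tuple[Plan, float]]:
    """Yield each new best (plan, predicted score); the caller may stop at any time."""
    best = float("-inf")
    for plan in candidates:
        s = score(action_distance(plan, expected))
        if s > best:
            best = s
            yield plan, s


# Invented training data: a larger distance gets a lower human-rated explicability.
score_fn = fit_linear([0.0, 0.5, 1.0], [1.0, 0.5, 0.0])
expected_plan = ["merge_left", "accelerate"]
candidate_plans = [["brake", "wait"], ["merge_left", "wait"], ["merge_left", "accelerate"]]
improvements = list(anytime_most_explicable(candidate_plans, expected_plan, score_fn))
print(improvements[-1])  # best plan found so far and its predicted score
```

Because the generator only yields strict improvements, a caller with a time budget can stop consuming it at any point and keep the last plan it received.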

Moving Target Defense for Web Applications using Bayesian Stackelberg Games

Nov 17, 2016

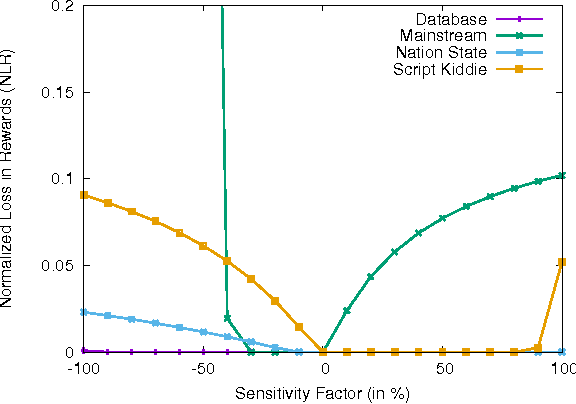

Abstract: The current complexity of designing web applications makes software security a difficult goal to achieve. An attacker can explore a deployed service on the web and attack at his/her own leisure. Moving Target Defense (MTD) in web applications is an effective mechanism for nullifying the advantage attackers gain from this reconnaissance, but the framework requires a good strategy for switching between the multiple configurations of its web stack. To address this issue, we propose modeling a real-world MTD web application as a repeated Bayesian game. We then formulate an optimization problem that generates an effective switching strategy while considering the cost of switching between different web-stack configurations. To incorporate this model into an MTD system, we develop an automated system for generating attack sets of Common Vulnerabilities and Exposures (CVEs) for input attacker types with predefined capabilities. Our framework obtains realistic reward values for the players (defenders and attackers) in this game by using security domain expertise on CVEs obtained from the National Vulnerability Database (NVD). We also address the issue of prioritizing vulnerabilities that, when fixed, improve the security of the MTD system. Lastly, we demonstrate the robustness of our proposed model by evaluating its performance when there is uncertainty about the input attacker information.
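The switching-strategy optimization can be illustrated with a toy Bayesian Stackelberg game. This hypothetical sketch makes simplifying assumptions: the defender commits to a mixed strategy over web-stack configurations, each attacker type (weighted by its prior) best-responds with ties broken in the defender's favor, and an expected switching cost, computed as if consecutive configurations were drawn independently from the mixed strategy and weighted by an assumed trade-off parameter `alpha`, is subtracted from the defender's reward. Instead of an exact mathematical-programming solver, it brute-forces a grid over the probability simplex, which is only practical for a handful of configurations. The payoff matrices are invented; in the paper, rewards are derived from CVE data in the NVD.

```python
# Hypothetical sketch: defender switching strategy for MTD via grid search.
from typing import Iterator, List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]
# One attacker type: (prior, attacker payoff[config][attack], defender payoff[config][attack])
AttackerType = Tuple[float, Matrix, Matrix]


def compositions(n: int, total: int) -> Iterator[Tuple[int, ...]]:
    """All n-tuples of non-negative integers that sum to total."""
    if n == 1:
        yield (total,)
        return
    for i in range(total + 1):
        for rest in compositions(n - 1, total - i):
            yield (i,) + rest


def expected(x: Sequence[float], payoff: Matrix, attack: int) -> float:
    """Expected payoff of one attack against the defender's mixed strategy x."""
    return sum(x[c] * payoff[c][attack] for c in range(len(x)))


def best_response(x: Sequence[float], att: Matrix, dfn: Matrix) -> int:
    """Attacker's best attack against x; ties are broken in the defender's favor."""
    attacks = range(len(att[0]))
    top = max(expected(x, att, a) for a in attacks)
    tied = [a for a in attacks if expected(x, att, a) >= top - 1e-9]
    return max(tied, key=lambda a: expected(x, dfn, a))


def defender_utility(x, types: List[AttackerType], switch_cost: Matrix, alpha: float) -> float:
    gain = sum(p * expected(x, dfn, best_response(x, att, dfn)) for p, att, dfn in types)
    # Expected cost of moving from config i to config j when both are drawn from x.
    n = len(x)
    cost = sum(x[i] * x[j] * switch_cost[i][j] for i in range(n) for j in range(n))
    return gain - alpha * cost


def solve(n_configs, types, switch_cost, alpha=1.0, steps=20):
    """Best mixed strategy on a grid with resolution 1/steps."""
    best_x, best_u = None, float("-inf")
    for counts in compositions(n_configs, steps):
        x = tuple(k / steps for k in counts)
        u = defender_utility(x, types, switch_cost, alpha)
        if u > best_u:
            best_x, best_u = x, u
    return best_x, best_u


# Invented game: two web-stack configs, one attacker type, and
# attack a succeeds only against config a.
att = [[10, 0], [0, 10]]
dfn = [[-10, 0], [0, -10]]
types = [(1.0, att, dfn)]
print(solve(2, types, [[0, 0], [0, 0]]))    # free switching: randomize evenly
print(solve(2, types, [[0, 20], [20, 0]]))  # costly switching: a pure strategy wins
```

The two calls show the trade-off the abstract describes: with free switching the defender randomizes evenly to keep the attacker guessing, while a high switching cost makes staying on one configuration preferable.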

Proactive Decision Support using Automated Planning

Jun 24, 2016

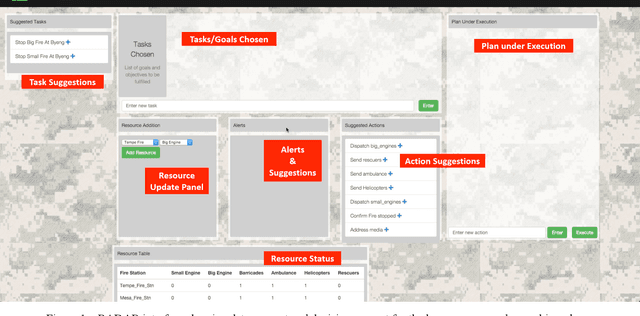

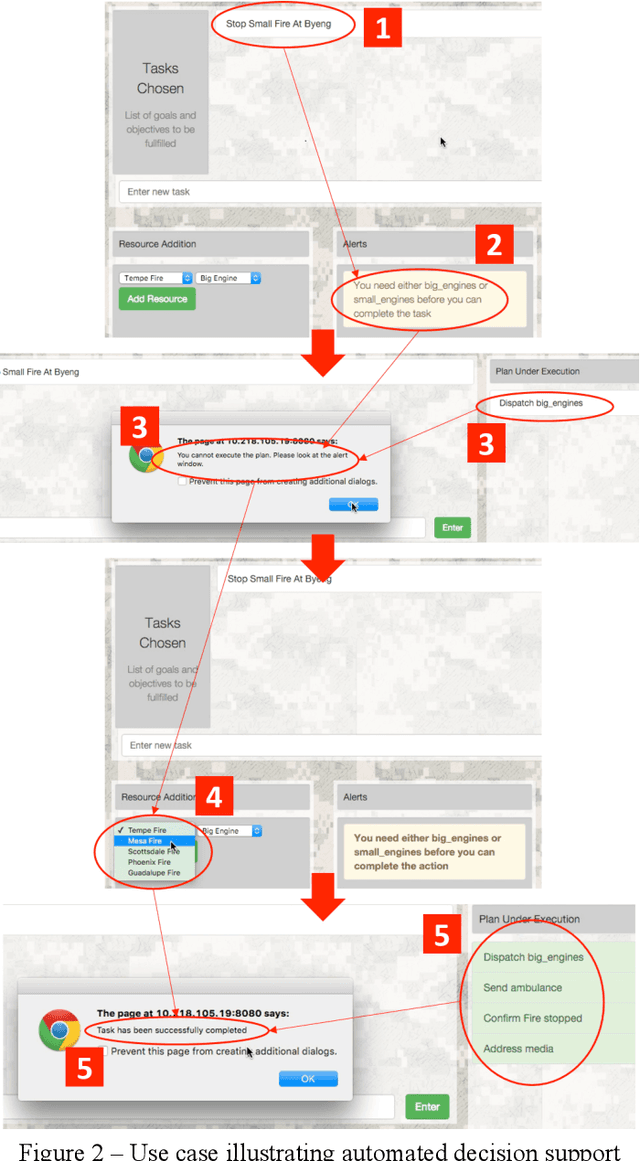

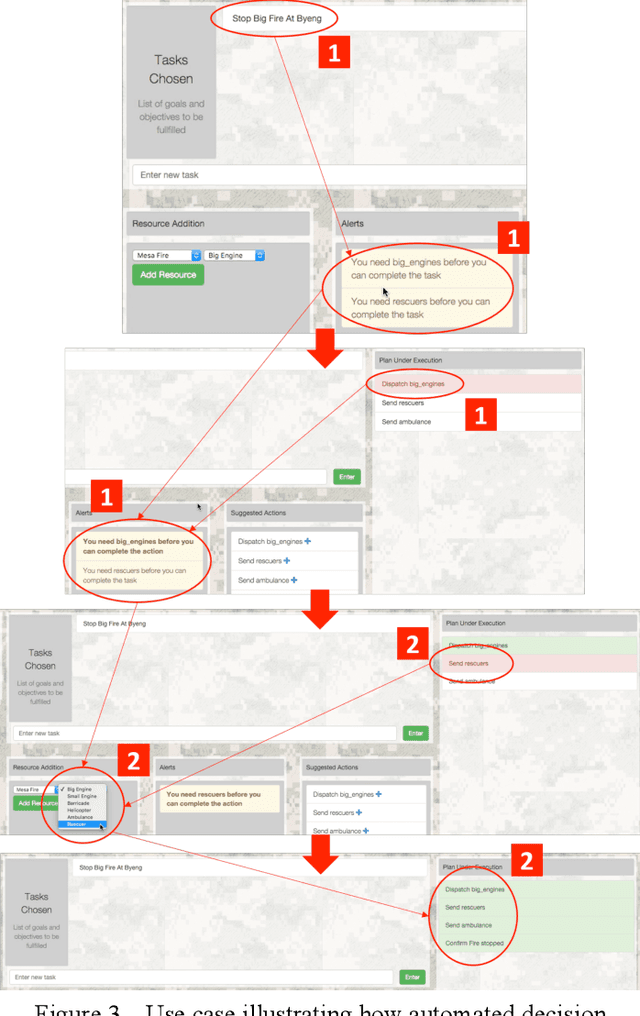

Abstract: Proactive decision support (PDS) helps improve the decision-making experience of human decision makers in human-in-the-loop planning environments, enhancing both the quality of the decisions and the ease of making them. In this regard, we propose a PDS framework, named RADAR, based on research in Automated Planning in AI, that aids the human decision maker with her plan to achieve her goals by providing alerts on whether such a plan can succeed at all, whether there exist any resource constraints that may foil her plan, etc. This is achieved by generating and analyzing the landmarks that must be accomplished by any successful plan on the way to achieving the goals. Note that this approach also supports naturalistic decision making, which is being acknowledged as a necessary element in proactive decision support, since it only aids the human decision maker through suggestions and alerts rather than enforcing fixed plans or decisions. We demonstrate the utility of the proposed framework through search-and-rescue examples in a fire-fighting domain.
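Landmark-based alerts of this kind can be sketched with a standard delete-relaxation test: a fact is a landmark if the goal becomes unreachable, even when delete effects are ignored, once every action that adds that fact is removed. The test is sound but incomplete (it can miss landmarks), and it is not necessarily how RADAR computes landmarks. The STRIPS-style encoding, the function names, and the fire-fighting actions below are all hypothetical.

```python
# Hypothetical sketch of landmark-based alerts in the spirit of RADAR.
from typing import FrozenSet, List, Set, Tuple

# A STRIPS-style action: (name, preconditions, add effects); deletes are ignored.
Action = Tuple[str, FrozenSet[str], FrozenSet[str]]


def relaxed_reachable(init: Set[str], actions: List[Action], goal: Set[str]) -> bool:
    """True if the goal is reachable when delete effects are ignored."""
    facts = set(init)
    changed = True
    while changed:
        changed = False
        for _, pre, add in actions:
            if pre <= facts and not add <= facts:
                facts |= add
                changed = True
    return goal <= facts


def fact_landmarks(init: Set[str], actions: List[Action], goal: Set[str]) -> Set[str]:
    """Facts that every successful plan must achieve (sound, not complete)."""
    candidates = set().union(*(add for _, _, add in actions)) - set(init)
    return {
        p for p in candidates
        if not relaxed_reachable(init, [a for a in actions if p not in a[2]], goal)
    }


def alerts(init: Set[str], actions: List[Action], goal: Set[str]) -> List[str]:
    # If even the relaxed problem is unsolvable, no real plan can succeed.
    if not relaxed_reachable(init, actions, goal):
        return ["ALERT: no plan can achieve the goal from the current state"]
    return [f"LANDMARK: every successful plan must achieve {p!r}"
            for p in sorted(fact_landmarks(init, actions, goal))]


actions = [
    ("dispatch_engine", frozenset({"at_station", "engine_available"}), frozenset({"engine_at_fire"})),
    ("connect_hydrant", frozenset({"engine_at_fire"}), frozenset({"water_supply"})),
    ("extinguish", frozenset({"engine_at_fire", "water_supply"}), frozenset({"fire_out"})),
]
goal = {"fire_out"}
for msg in alerts({"at_station", "engine_available"}, actions, goal):
    print(msg)
print(alerts({"at_station"}, actions, goal))  # no engine available, so the goal is unreachable
```

Adding an alternative route to the goal (for example, a hypothetical helicopter water drop) would shrink the landmark set, since facts achieved only along one of several routes are no longer required by every plan.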
