Saturnino Maldonado-Bascón

Live Video Captioning

Jun 20, 2024

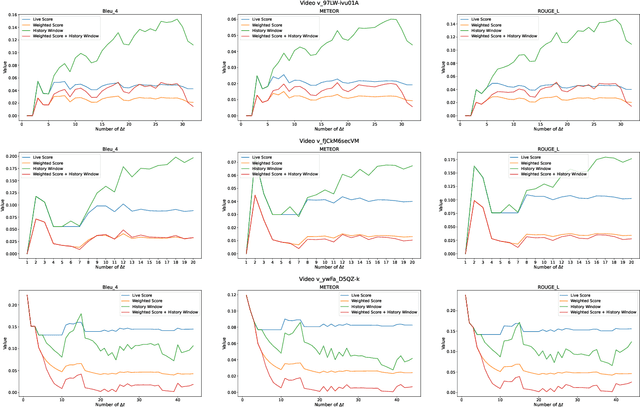

Abstract: Dense video captioning is the task of detecting and describing events within video sequences. While traditional approaches focus on offline solutions, where the entire video under analysis is available to the captioning model, in this work we introduce a paradigm shift towards Live Video Captioning (LVC). In LVC, dense video captioning models must generate captions for video streams in an online manner, facing important constraints such as having to work with partial observations of the video, the need for temporal anticipation and, ideally, a real-time response. In this work we formally introduce the novel problem of LVC and propose new evaluation metrics tailored to the online scenario, demonstrating their superiority over traditional metrics. We also propose an LVC model integrating deformable transformers and temporal filtering to address the new challenges of LVC. Experimental evaluations on the ActivityNet Captions dataset validate the effectiveness of our approach, highlighting its performance in LVC compared to state-of-the-art offline methods. The results of our model, as well as an evaluation kit integrating the novel metrics, are publicly available to encourage further research on LVC.
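The partial-observation constraint of LVC can be made concrete with a small driver loop. This is a minimal sketch, not the authors' model or evaluation kit: `caption_fn` is a hypothetical placeholder for any dense captioning model, and `stride` (how often captions are emitted) is an assumed parameter. At each emission time the model receives only a copy of the frames observed so far, never the future part of the stream.

```python
from typing import Callable, Iterable, List, Sequence, Tuple

# (start_time, end_time, sentence) for one captioned event
Caption = Tuple[float, float, str]


def live_captioning(
    frames: Iterable,
    fps: float,
    caption_fn: Callable[[Sequence], List[Caption]],  # hypothetical model
    stride: int = 16,  # assumed: emit captions every `stride` frames
) -> List[Tuple[float, List[Caption]]]:
    """Run a dense captioning model online over a frame stream.

    Returns a list of (time_in_seconds, captions) pairs, one per emission.
    """
    observed: list = []
    outputs: List[Tuple[float, List[Caption]]] = []
    for frame in frames:
        observed.append(frame)
        if len(observed) % stride == 0:
            t_now = len(observed) / fps
            # The model only sees a snapshot of the prefix up to t_now.
            captions = caption_fn(list(observed))
            outputs.append((t_now, captions))
    return outputs


# Usage with a dummy model that "describes" how much it has seen.
dummy = lambda obs: [(0.0, len(obs) / 32, f"{len(obs)} frames")]
result = live_captioning(range(64), fps=32, caption_fn=dummy, stride=16)
```

An offline captioner corresponds to calling `caption_fn` once on the full video; the online loop instead forces the model to commit to captions at every emission step.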

Realistic Continual Learning Approach using Pre-trained Models

Apr 11, 2024

Abstract: Continual learning (CL) is crucial for evaluating how well learning solutions adapt while retaining knowledge. Our research addresses the challenge of catastrophic forgetting, where models lose proficiency in previously learned tasks as they acquire new ones. While numerous solutions have been proposed, existing experimental setups often rely on idealized class-incremental learning scenarios. We introduce Realistic Continual Learning (RealCL), a novel CL paradigm in which class distributions across tasks are random, departing from structured setups. We also present CLARE (Continual Learning Approach with pRE-trained models for RealCL scenarios), a solution based on pre-trained models and designed to integrate new knowledge while preserving past learning. Our contributions include pioneering RealCL as a generalization of traditional CL setups, proposing CLARE as an adaptable approach for RealCL tasks, and conducting extensive experiments demonstrating its effectiveness across various RealCL scenarios. Notably, CLARE outperforms existing models on RealCL benchmarks, highlighting its versatility and robustness in unpredictable learning environments.
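The difference between a structured class-incremental setup and a setup with random class distributions across tasks can be illustrated by how a labelled dataset is split into tasks. This is a minimal sketch under stated assumptions: `random_split` is one hypothetical way of randomizing class distributions (each class gets its own random weights over tasks) and is not claimed to be the exact RealCL protocol.

```python
import random
from collections import defaultdict
from typing import List, Sequence


def class_incremental_split(labels: Sequence[int], num_tasks: int) -> List[List[int]]:
    """Structured setup: each class belongs to exactly one task, and the
    sorted classes are partitioned into equally sized groups."""
    classes = sorted(set(labels))
    per_task = -(-len(classes) // num_tasks)  # ceiling division
    class_to_task = {c: i // per_task for i, c in enumerate(classes)}
    tasks = defaultdict(list)
    for idx, y in enumerate(labels):
        tasks[class_to_task[y]].append(idx)
    return [tasks[t] for t in range(num_tasks)]


def random_split(labels: Sequence[int], num_tasks: int, seed: int = 0) -> List[List[int]]:
    """Hypothetical randomized setup: every sample goes to a task drawn from
    a class-specific random distribution, so one class may be spread
    unevenly over several tasks."""
    rng = random.Random(seed)
    classes = sorted(set(labels))
    # Small epsilon keeps every weight strictly positive.
    weights = {c: [rng.random() + 1e-9 for _ in range(num_tasks)] for c in classes}
    tasks: List[List[int]] = [[] for _ in range(num_tasks)]
    for idx, y in enumerate(labels):
        t = rng.choices(range(num_tasks), weights=weights[y])[0]
        tasks[t].append(idx)
    return tasks


labels = [i % 10 for i in range(1000)]  # toy dataset: 10 balanced classes
structured = class_incremental_split(labels, num_tasks=5)
randomized = random_split(labels, num_tasks=5, seed=1)
```

In the structured split each task sees a disjoint pair of classes; in the randomized split the same class can reappear in later tasks with different frequencies. The latter is the kind of less idealized scenario the abstract contrasts with class-incremental learning.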

The Instantaneous Accuracy: a Novel Metric for the Problem of Online Human Behaviour Recognition in Untrimmed Videos

Mar 25, 2020

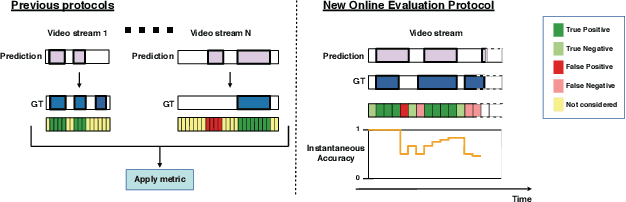

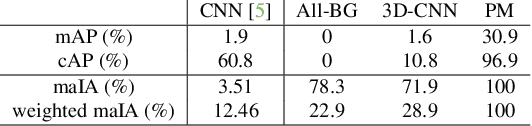

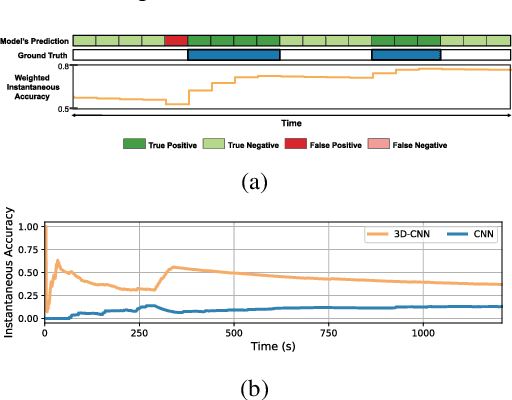

Abstract: The problem of Online Human Behaviour Recognition in untrimmed videos, also known as Online Action Detection (OAD), needs to be revisited. Unlike traditional offline action detection, where the evaluation metrics are clear and well established, the OAD setting has few works and no consensus on the evaluation protocols to be used. In this paper we introduce a novel metric, the Instantaneous Accuracy (IA), which is online by nature and solves most of the limitations of the previous (offline) metrics. We conduct a thorough experimental evaluation on the TVSeries dataset, comparing the performance of various baseline methods to the state of the art. Our results confirm the problems of previous evaluation protocols and suggest that an IA-based protocol is better suited to the online scenario for human behaviour understanding. Code for the metric is available at https://github.com/gramuah/ia

Embarrassingly Simple Model for Early Action Proposal

Oct 18, 2018

Abstract: Early action proposal consists of generating high-quality candidate temporal segments that are likely to contain an action in a video stream, as soon as the actions happen. Many sophisticated approaches have been proposed for the action proposal problem, but only from the offline perspective. In contrast, we focus on the online version of the problem, proposing a simple classifier-based model built on standard 3D CNNs that performs significantly better than the state of the art.
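To show what "online" means for proposals, the sketch below turns a stream of per-clip action scores into segments without looking at future clips. It is a generic thresholding scheme, assumed for illustration and not necessarily the paper's model: the scores are hypothetically produced by a 3D CNN classifier over consecutive clips of `clip_len` frames, and `threshold` is an assumed parameter.

```python
from typing import Iterable, Iterator, Tuple


def online_proposals(
    clip_scores: Iterable[float],  # hypothetical per-clip action probabilities
    threshold: float = 0.5,        # assumed decision threshold
    clip_len: int = 16,            # assumed frames per clip
) -> Iterator[Tuple[int, ...]]:
    """Yield ("start", frame) as soon as an action is detected and
    ("end", start_frame, end_frame) when it finishes. Only past and current
    clips are used, so events are emitted as the stream arrives. A segment
    still open when the stream ends is never closed."""
    start = None
    for i, p in enumerate(clip_scores):
        if p >= threshold and start is None:
            start = i * clip_len
            yield ("start", start)
        elif p < threshold and start is not None:
            yield ("end", start, i * clip_len)
            start = None


events = list(online_proposals([0.1, 0.7, 0.9, 0.2, 0.6]))
# events == [("start", 16), ("end", 16, 48), ("start", 64)]
```

The "start" event is what makes the proposal early: a segment is announced at the first clip whose score crosses the threshold, before its end is known.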
