Saptarshi Debroy

Detection and Recovery of Adversarial Slow-Pose Drift in Offloaded Visual-Inertial Odometry

Sep 08, 2025

Abstract: Visual-Inertial Odometry (VIO) supports immersive Virtual Reality (VR) by fusing camera and Inertial Measurement Unit (IMU) data for real-time pose estimation. However, the current trend of offloading VIO to edge servers can lead to a server-side threat surface where subtle pose spoofing can accumulate into substantial drift while evading heuristic checks. In this paper, we study this threat and present an unsupervised, label-free detection and recovery mechanism. The proposed model is trained on attack-free sessions to learn temporal regularities of motion, which it uses to detect runtime deviations and initiate recovery to restore pose consistency. We evaluate the approach in a realistic offloaded-VIO environment using the ILLIXR testbed across multiple spoofing intensities. Experimental results in terms of well-known performance metrics show substantial reductions in trajectory and pose error compared to a no-defense baseline.

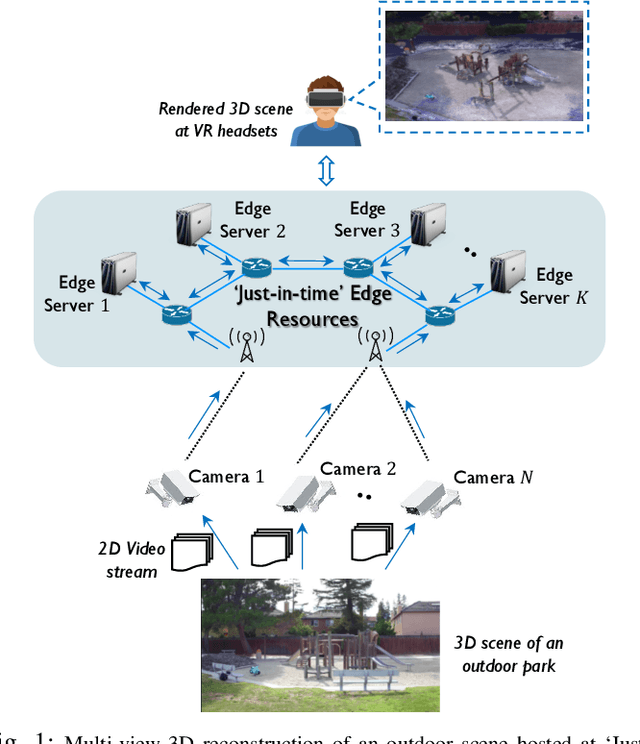

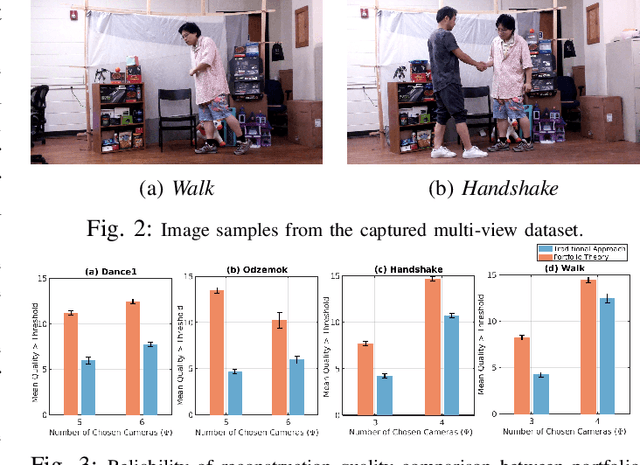

Reliable Multi-view 3D Reconstruction for `Just-in-time' Edge Environments

Aug 21, 2025

Abstract: Multi-view 3D reconstruction applications are revolutionizing critical use cases that require rapid situational awareness, such as emergency response, tactical scenarios, and public safety. In many cases, their near-real-time latency requirements and ad-hoc needs for compute resources necessitate the adoption of `Just-in-time' edge environments, where the system is set up on the fly to support the applications during the mission lifetime. However, reliability issues can arise from the inherent dynamism and operational adversities of such edge environments, resulting in spatiotemporally correlated disruptions that impact camera operations and can lead to sustained degradation of reconstruction quality. In this paper, we propose a novel portfolio theory-inspired edge resource management strategy for reliable multi-view 3D reconstruction against possible system disruptions. Our proposed methodology can guarantee reconstruction quality satisfaction even when the cameras are prone to spatiotemporally correlated disruptions. The portfolio-theoretic optimization problem is solved using a genetic algorithm that converges quickly for realistic system settings. Using publicly available and customized 3D datasets, we demonstrate the benefits of the proposed camera selection strategy over traditional baseline strategies in guaranteeing reliable 3D reconstruction under spatiotemporal disruptions.

Poster: Reliable 3D Reconstruction for Ad-hoc Edge Implementations

Nov 16, 2024

Abstract: Ad-hoc edge deployments that support complex real-time video processing applications, such as multi-view 3D reconstruction, often suffer from spatio-temporal system disruptions that greatly impact reconstruction quality. In this poster paper, we present a novel portfolio theory-inspired edge resource management strategy to ensure reliable multi-view 3D reconstruction by accounting for possible system disruptions.

EdgeRL: Reinforcement Learning-driven Deep Learning Model Inference Optimization at Edge

Oct 16, 2024

Abstract: Balancing mutually diverging performance metrics, such as processing latency, outcome accuracy, and end device energy consumption, is a challenging undertaking for deep learning model inference in ad-hoc edge environments. In this paper, we propose the EdgeRL framework, which seeks to strike such a balance by using an Advantage Actor-Critic (A2C) Reinforcement Learning (RL) approach that chooses optimal run-time DNN inference parameters and aligns the performance metrics with the application requirements. Using a real-world deep learning model and a hardware testbed, we evaluate the benefits of the EdgeRL framework in terms of end device energy savings, inference accuracy improvement, and end-to-end inference latency reduction.

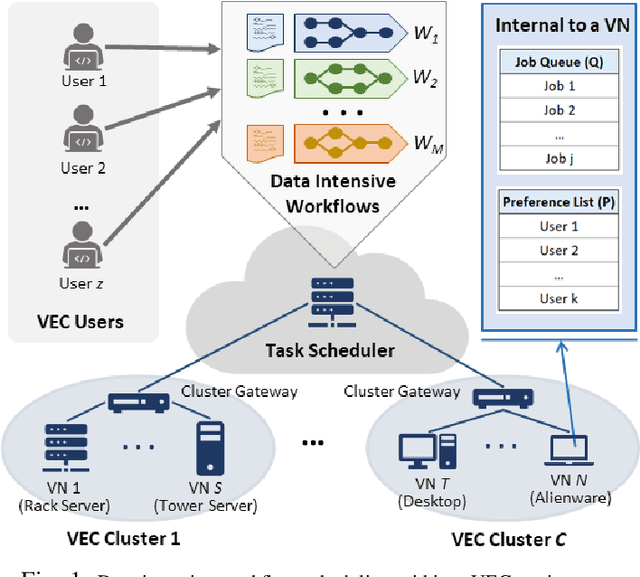

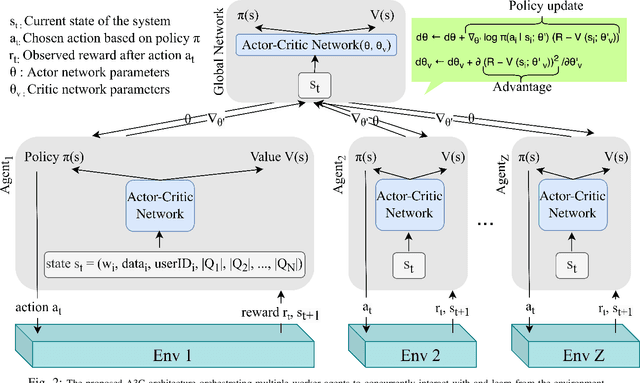

Reinforcement Learning-driven Data-intensive Workflow Scheduling for Volunteer Edge-Cloud

Jul 01, 2024

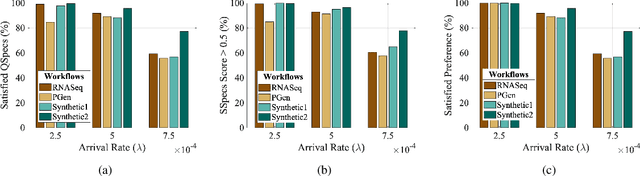

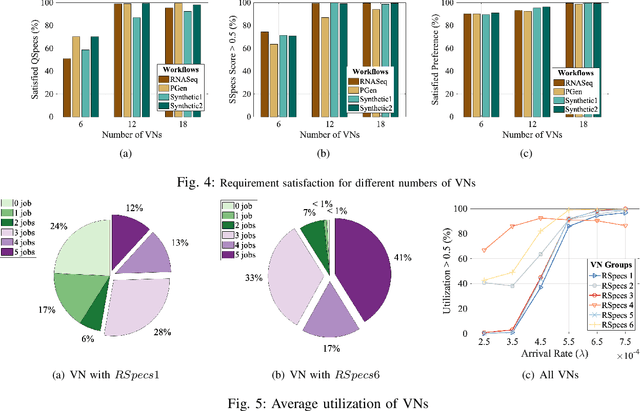

Abstract: In recent times, Volunteer Edge-Cloud (VEC) has gained traction as a cost-effective, community computing paradigm to support data-intensive scientific workflows. However, due to the highly distributed and heterogeneous nature of VEC resources, centralized workflow task scheduling remains a challenge. In this paper, we propose a Reinforcement Learning (RL)-driven data-intensive scientific workflow scheduling approach that takes into consideration: i) workflow requirements, ii) VEC resources' preference on workflows, and iii) diverse VEC resource policies, to ensure robust resource allocation. We formulate the long-term average performance optimization problem as a Markov Decision Process, which is solved using an event-based Asynchronous Advantage Actor-Critic RL approach. Our extensive simulations and testbed implementations demonstrate our approach's benefits over popular baseline strategies in terms of workflow requirement satisfaction, VEC preference satisfaction, and available VEC resource utilization.

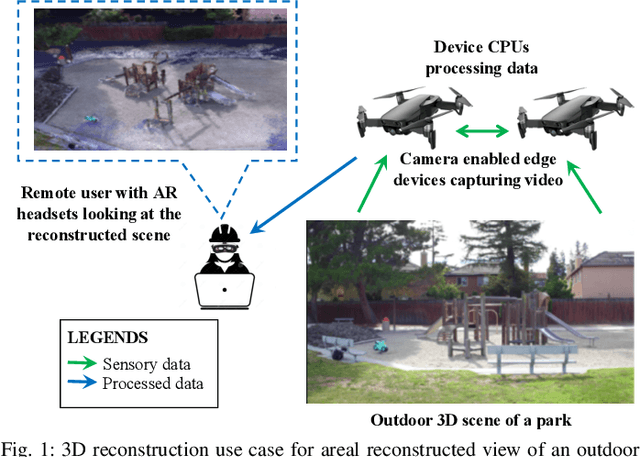

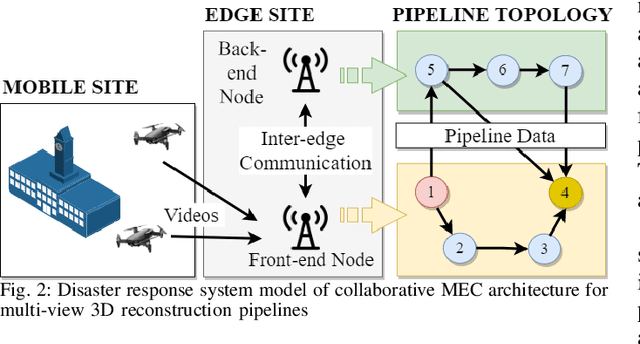

End-to-End Latency Optimization of Multi-view 3D Reconstruction for Disaster Response

Apr 04, 2023

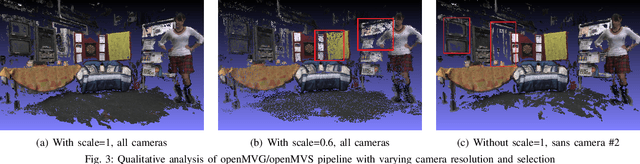

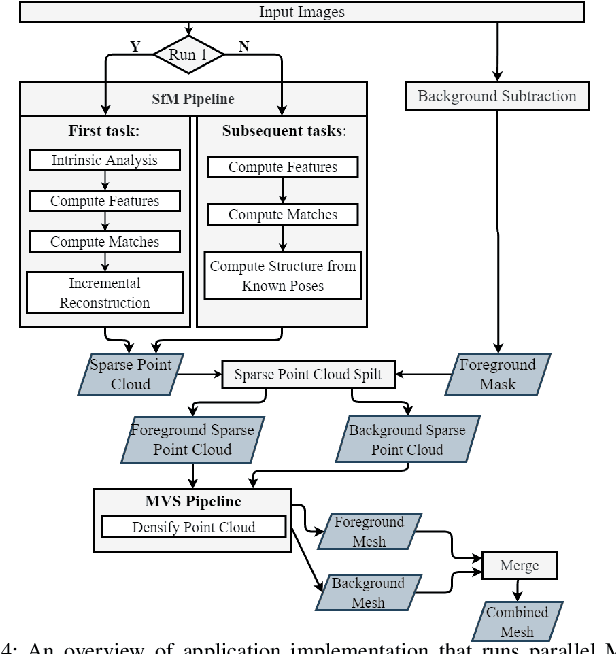

Abstract: In order to plan rapid response during disasters, first responder agencies often adopt a `bring your own device' (BYOD) model with inexpensive mobile edge devices (e.g., drones, robots, tablets) for complex video analytics applications, e.g., 3D reconstruction of a disaster scene. Unlike simpler video applications, widely used Multi-view Stereo (MVS) based 3D reconstruction applications (e.g., openMVG/openMVS) are exceedingly time consuming, especially when run on such computationally constrained mobile edge devices. Additionally, reducing the reconstruction latency of such inherently sequential algorithms is challenging, as unintelligent, application-agnostic strategies can drastically degrade the reconstruction (i.e., application outcome) quality, making the results useless. In this paper, we aim to design a latency-optimized MVS algorithm pipeline, with the objective of best balancing end-to-end latency and reconstruction quality by running the pipeline on a collaborative mobile edge environment. The overall optimization approach is two-pronged: (a) application optimizations introduce data-level parallelism by splitting the pipeline into high-frequency and low-frequency reconstruction components, and (b) system optimizations add task-level parallelism to the pipelines by running them opportunistically on available resources with online quality control in order to balance both latency and quality. Our evaluation on a hardware testbed using publicly available datasets shows up to ~54% reduction in latency with negligible loss (~4-7%) in reconstruction quality.
