Amitangshu Pal

End-to-End Latency Optimization of Multi-view 3D Reconstruction for Disaster Response

Apr 04, 2023

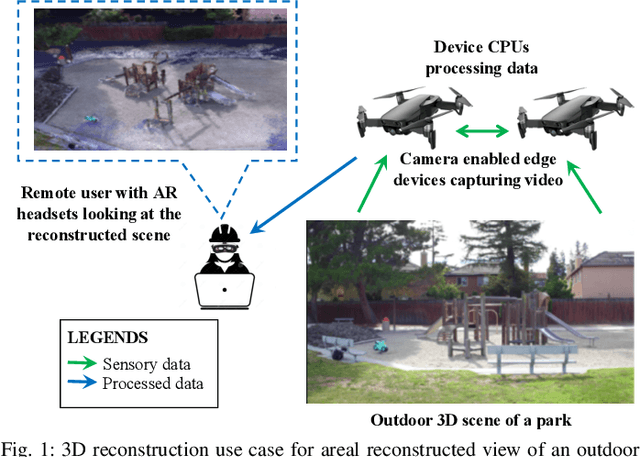

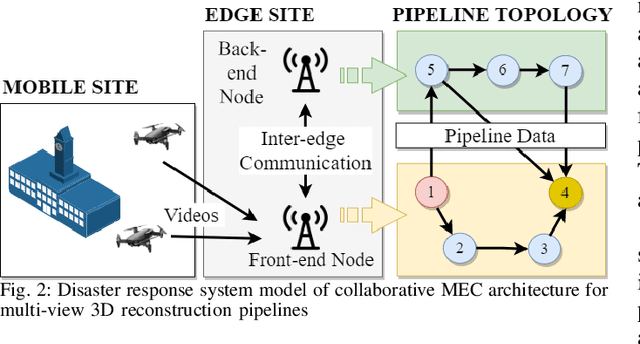

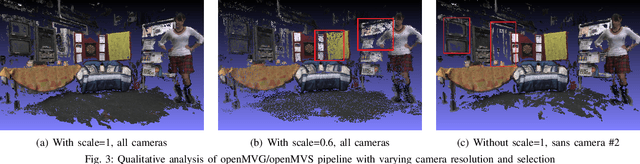

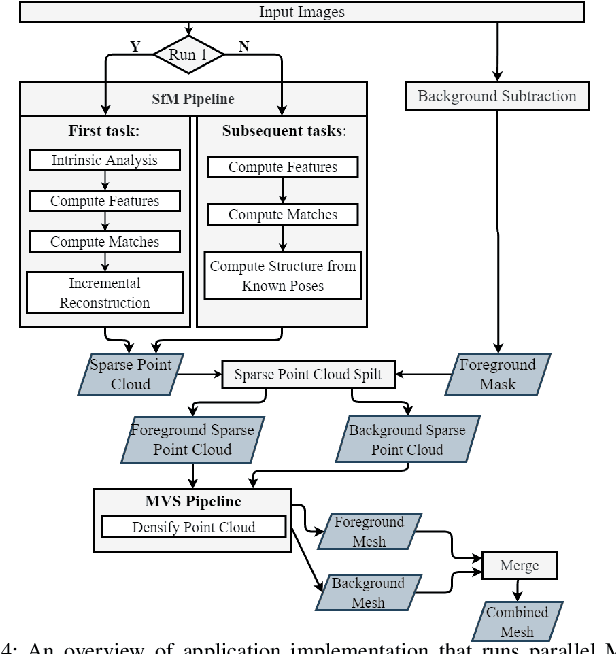

Abstract: To plan rapid response during disasters, first responder agencies often adopt a "bring your own device" (BYOD) model with inexpensive mobile edge devices (e.g., drones, robots, tablets) for complex video analytics applications such as 3D reconstruction of a disaster scene. Unlike simpler video applications, widely used Multi-view Stereo (MVS) based 3D reconstruction applications (e.g., openMVG/openMVS) are exceedingly time consuming, especially when run on such computationally constrained mobile edge devices. Additionally, reducing the reconstruction latency of such inherently sequential algorithms is challenging, as unintelligent, application-agnostic strategies can drastically degrade the reconstruction quality (i.e., the application outcome), making the results useless. In this paper, we aim to design a latency-optimized MVS algorithm pipeline, with the objective of best balancing end-to-end latency and reconstruction quality by running the pipeline in a collaborative mobile edge environment. The overall optimization approach is two-pronged: (a) application optimizations introduce data-level parallelism by splitting the pipeline into high-frequency and low-frequency reconstruction components, and (b) system optimizations incorporate task-level parallelism into the pipelines by running them opportunistically on available resources, with online quality control to balance latency and quality. Our evaluation on a hardware testbed using publicly available datasets shows up to ~54% reduction in latency with negligible loss (~4-7%) in reconstruction quality.

Non-Intrusive Driver Behavior Characterization From Road-Side Cameras

Feb 25, 2023

Abstract: In this paper, we demonstrate a proof of concept for characterizing vehicular behavior using only the roadside cameras of an intelligent transportation system (ITS). The essential advantage of this method is that it can be implemented in the roadside infrastructure transparently and inexpensively, and it can maintain a global view of each vehicle's behavior without any involvement of or awareness by the individual vehicles or drivers. Using a setup that includes programmatically controlled robot cars (to simulate different types of vehicular behavior) and an external video camera that captures and analyzes that behavior, we show that driver classification based on external video analytics yields accuracies within 1-2% of those of direct vehicle-based characterization. We also show that the residual errors primarily relate to gaps in correct object identification and tracking, and thus can be further reduced with a more sophisticated setup. The characterization can be used to enhance both the safety and performance of traffic flow, particularly in the mixed manual and automated vehicle scenarios that are expected to be common soon.
