Sandeep Nallan Chakravarthula

An analysis of observation length requirements for machine understanding of human behaviors in spoken language

Nov 29, 2019

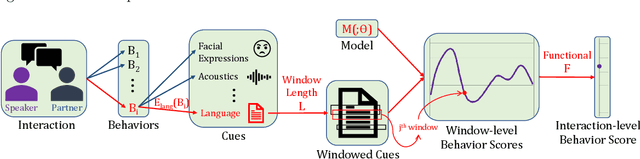

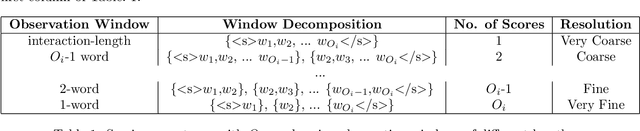

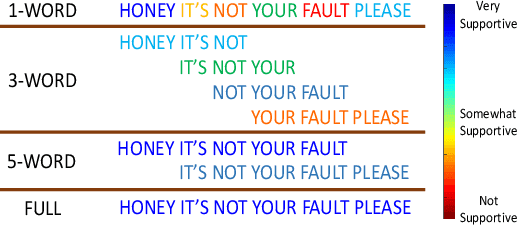

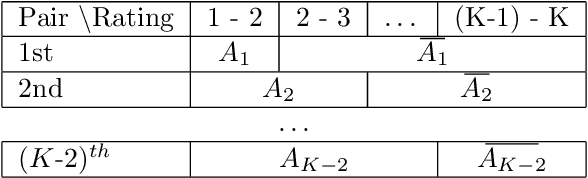

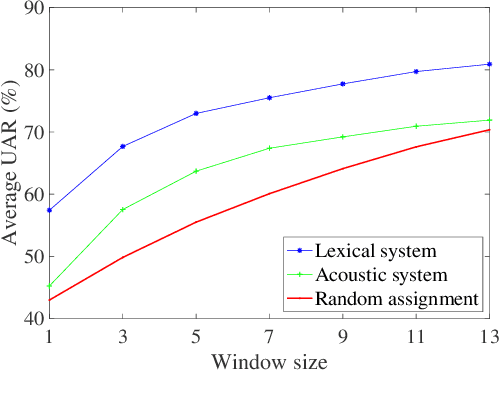

Abstract: Machine learning-based human behavior modeling, often at the level of characterizing an entire clinical encounter such as a therapy session, has been shown to be useful across a range of domains in psychological research and practice from relationship and family studies to cancer care. Existing approaches typically first quantify the target behavior construct based on cues in an observation window, such as a fixed number of words, and then aggregate it over all the windows in that session. During this process, a sufficiently long window is employed so that adequate information is gathered to accurately estimate the construct. The link between behavior modeling and the observation length, however, has not been well studied, especially for spoken language. In this paper, we analyze the effect of observation window length on the quality of behavior quantification and present a framework for determining appropriate windows for a wide range of behaviors. Our analysis method employs two levels of evaluations: (a) extrinsic similarity between machine predictions and human expert annotations, and (b) intrinsic consistency between intra-machine and intra-human behavior relations. We apply our analysis on a dataset of real-life married couple interactions that are annotated for a large and diverse set of behavior codes and test the robustness of our findings to different machine learning models. We find that negative constructs such as blame can be accurately identified from short expressions while those pertaining to positive affect such as satisfaction tend to require slightly longer observation windows. Behaviors that describe more complex personality traits such as negotiation and avoidance are found to require very long observations and are difficult to quantify from language alone. Our findings are in agreement with similar work on acoustic cues, thin slices and human emotion perception.
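The window-then-aggregate pipeline described in this abstract can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the function names, the non-overlapping windows, the cue-word scorer (a stand-in for a trained model), and the mean as the aggregation step are not taken from the paper.

```python
from statistics import mean


def split_into_windows(words, window_len):
    """Split a word list into consecutive, non-overlapping windows.

    The final window may be shorter than window_len.
    """
    if window_len <= 0:
        raise ValueError("window_len must be positive")
    return [words[i:i + window_len] for i in range(0, len(words), window_len)]


def score_window(window, cue_words):
    """Hypothetical scorer: fraction of words in the window that are cue words.

    A real system would use a trained model here; this is only a placeholder.
    """
    if not window:
        return 0.0
    return sum(w.lower() in cue_words for w in window) / len(window)


def session_score(words, window_len, cue_words):
    """Score each window, then aggregate over the session with an unweighted mean."""
    windows = split_into_windows(words, window_len)
    return mean(score_window(w, cue_words) for w in windows)
```

Varying `window_len` on the same transcript shows how the observation length changes the session-level estimate, which is the quantity the analysis in the paper is concerned with.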

Predicting Behavior in Cancer-Afflicted Patient and Spouse Interactions using Speech and Language

Aug 02, 2019

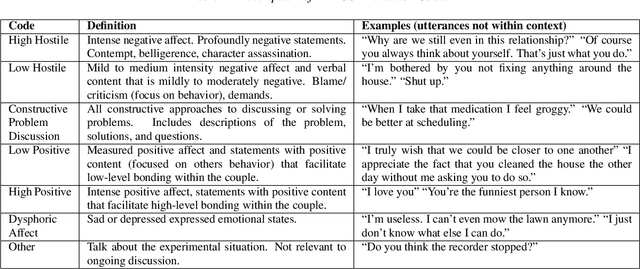

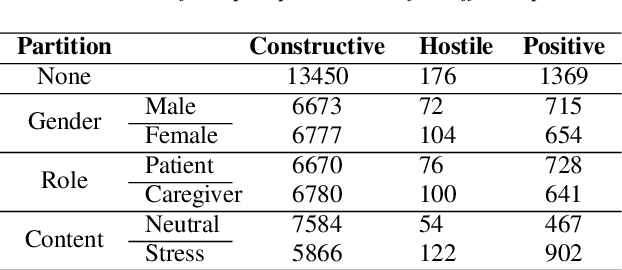

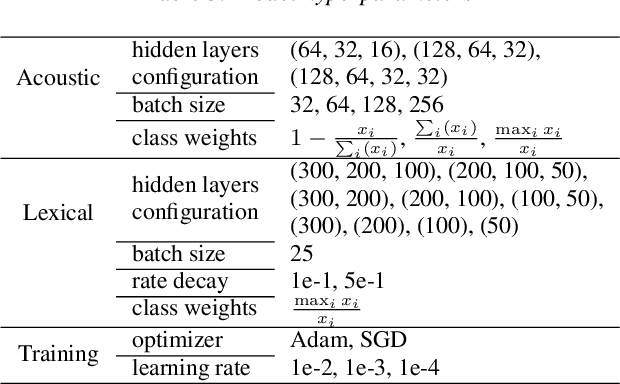

Abstract: Cancer impacts the quality of life of those diagnosed as well as their spouse caregivers, in addition to potentially influencing their day-to-day behaviors. There is evidence that effective communication between spouses can improve well-being related to cancer but it is difficult to efficiently evaluate the quality of daily life interactions using manual annotation frameworks. Automated recognition of behaviors based on the interaction cues of speakers can help analyze interactions in such couples and identify behaviors which are beneficial for effective communication. In this paper, we present and detail a dataset of dyadic interactions in 85 real-life cancer-afflicted couples and a set of observational behavior codes pertaining to interpersonal communication attributes. We describe and employ neural network-based systems for classifying these behaviors based on turn-level acoustic and lexical speech patterns. Furthermore, we investigate the effect of controlling for factors such as gender, patient/caregiver role and conversation content on behavior classification. Analysis of our preliminary results indicates the challenges in this task due to the nature of the targeted behaviors and suggests that techniques incorporating contextual processing might be better suited to tackle this problem.

Modeling Interpersonal Linguistic Coordination in Conversations using Word Mover's Distance

Apr 12, 2019

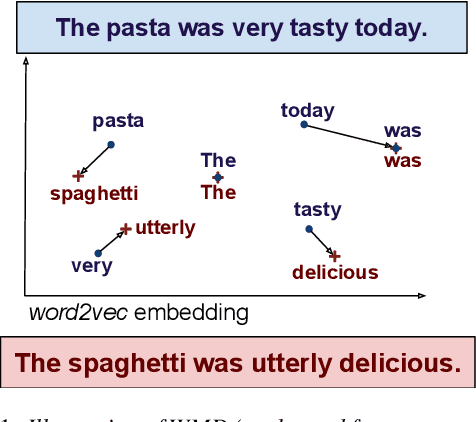

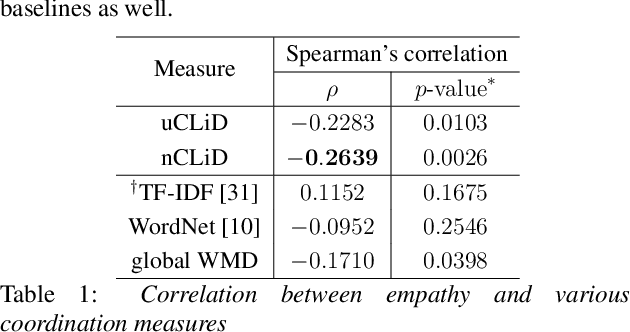

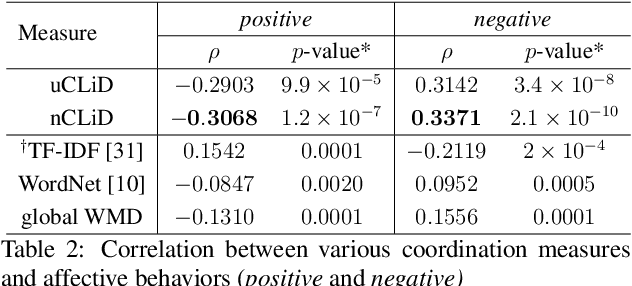

Abstract: Linguistic coordination is a well-established phenomenon in spoken conversations and often associated with positive social behaviors and outcomes. While there have been many attempts to measure lexical coordination or entrainment in the literature, only a few have explored coordination in syntactic or semantic space. In this work, we attempt to combine these different aspects of coordination into a single measure by leveraging distances in a neural word representation space. In particular, we adopt the recently proposed Word Mover's Distance with word2vec embeddings and extend it to measure the dissimilarity in language used in multiple consecutive speaker turns. To validate our approach, we apply this measure to two case studies in the clinical psychology domain. We find that our proposed measure is correlated with the therapist's empathy towards their patient in Motivational Interviewing and with affective behaviors in Couples Therapy. In both case studies, our proposed metric exhibits higher correlation than previously proposed measures. When applied to the couples with relationship improvement, we also notice a significant decrease in the proposed measure over the course of therapy, indicating higher linguistic coordination.
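As a rough illustration of measuring dissimilarity between two speaker turns in an embedding space, the sketch below computes the relaxed Word Mover's Distance, a known lower bound on the full Word Mover's Distance that avoids solving the optimal transport problem. It is not the paper's measure: the two-dimensional `TOY_EMBEDDINGS` table stands in for word2vec vectors, and the function names are hypothetical.

```python
import math
from collections import Counter

# Hypothetical 2-D embeddings standing in for word2vec vectors.
TOY_EMBEDDINGS = {
    "happy": (1.0, 0.0),
    "glad": (0.9, 0.1),
    "sad": (-1.0, 0.0),
    "angry": (-0.8, 0.6),
}


def _nbow(words):
    """Normalized bag-of-words weights; words without an embedding are dropped."""
    counts = Counter(w for w in words if w in TOY_EMBEDDINGS)
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}


def _one_sided(src, dst):
    """Move each source word's weight entirely to its nearest destination word."""
    return sum(
        weight * min(math.dist(TOY_EMBEDDINGS[w], TOY_EMBEDDINGS[v]) for v in dst)
        for w, weight in src.items()
    )


def relaxed_wmd(turn_a, turn_b):
    """Relaxed WMD: the larger of the two one-sided nearest-neighbor costs."""
    a, b = _nbow(turn_a), _nbow(turn_b)
    if not a or not b:
        raise ValueError("each turn needs at least one word with an embedding")
    return max(_one_sided(a, b), _one_sided(b, a))
```

Under this sketch, a lower value between consecutive turns of two speakers would indicate more similar language, matching the abstract's reading of a decreasing distance as higher coordination.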

Modeling Interpersonal Influence of Verbal Behavior in Couples Therapy Dyadic Interactions

May 23, 2018

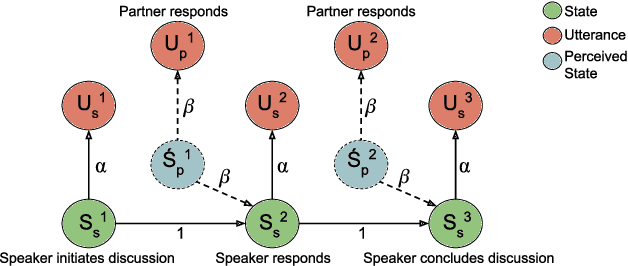

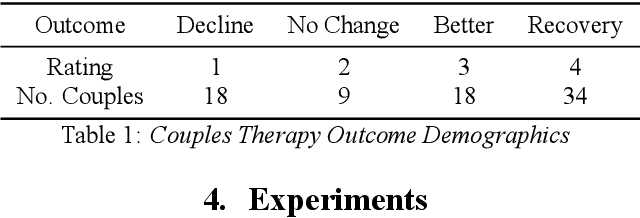

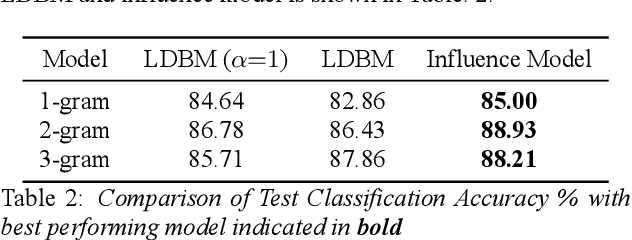

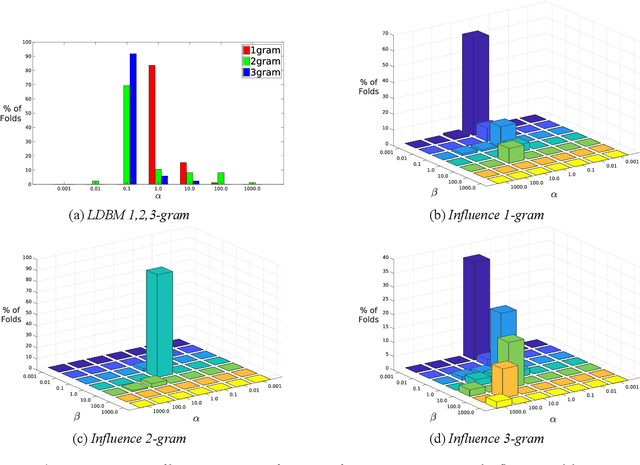

Abstract: Dyadic interactions among humans are marked by speakers continuously influencing and reacting to each other in terms of responses and behaviors, among others. Understanding how interpersonal dynamics affect behavior is important for successful treatment in psychotherapy domains. Traditional schemes that automatically identify behavior for this purpose have often looked at only the target speaker. In this work, we propose a Markov model of how a target speaker's behavior is influenced by their own past behavior as well as their perception of their partner's behavior, based on lexical features. Apart from incorporating additional potentially useful information, our model can also control the degree to which the partner affects the target speaker. We evaluate our proposed model on the task of classifying Negative behavior in Couples Therapy and show that it is more accurate than the single-speaker model. Furthermore, we investigate the degree to which the optimal influence relates to how well a couple does in the long term, by relating it to relationship outcomes.
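One simple way to picture a Markov model with a controllable degree of partner influence is a convex mixture of two transition tables, weighted by a single parameter. The sketch below is an assumption made for illustration and is not the formulation in the paper: the state names, the parameter `alpha`, and the mixture form are all hypothetical, and it omits the lexical features entirely.

```python
STATES = ("negative", "not_negative")


def next_state_distribution(own_prev, partner_prev, self_trans, partner_trans, alpha):
    """Distribution over the target speaker's next behavior state.

    own_prev      -- target speaker's previous state
    partner_prev  -- partner's previous state
    self_trans    -- self_trans[prev][nxt]: transition driven by own past behavior
    partner_trans -- partner_trans[prev][nxt]: transition driven by partner behavior
    alpha         -- hypothetical influence weight in [0, 1]; 0 ignores the partner
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return {
        s: (1.0 - alpha) * self_trans[own_prev][s]
        + alpha * partner_trans[partner_prev][s]
        for s in STATES
    }
```

Setting `alpha` to 0 recovers a single-speaker chain, which mirrors the baseline comparison mentioned in the abstract; intermediate values blend the two sources.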
