Samah Khawaled

A self-attention model for robust rigid slice-to-volume registration of functional MRI

Apr 06, 2024

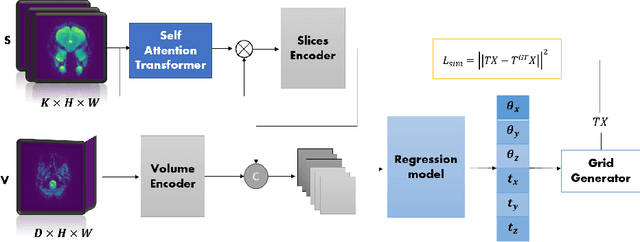

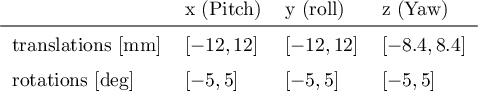

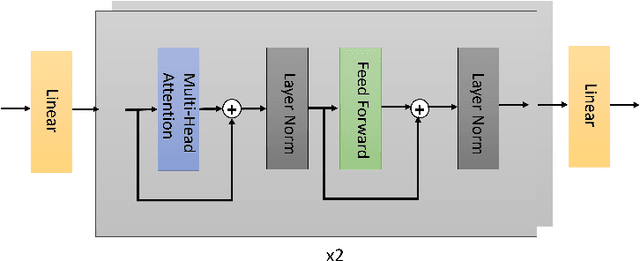

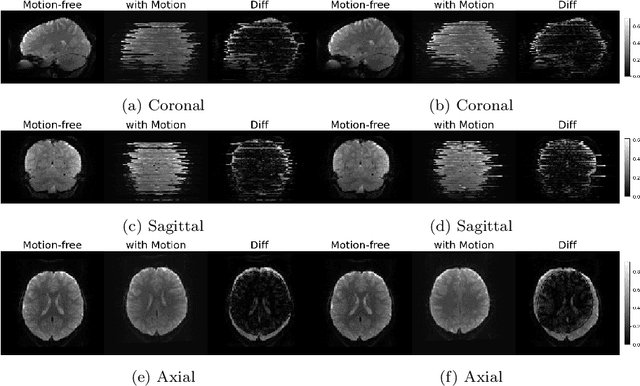

Abstract: Functional Magnetic Resonance Imaging (fMRI) is vital in neuroscience, enabling investigations into brain disorders, treatment monitoring, and brain function mapping. However, head motion during fMRI scans, occurring between shots of slice acquisition, can result in distortion, biased analyses, and increased costs due to the need for scan repetitions. Therefore, retrospective slice-level motion correction through slice-to-volume registration (SVR) is crucial. Previous studies have utilized deep learning (DL) based models to address the SVR task; however, they overlooked the uncertainty stemming from the input stack of slices and did not assign weighting or scoring to each slice. In this work, we introduce an end-to-end SVR model for aligning 2D fMRI slices with a 3D reference volume, incorporating a self-attention mechanism to enhance robustness against input data variations and uncertainties. It utilizes independent slice and volume encoders and a self-attention module to assign pixel-wise scores for each slice. We conducted evaluation experiments on 200 images involving synthetic rigid motion generated from 27 subjects belonging to the test set, from the publicly available Healthy Brain Network (HBN) dataset. Our experimental results demonstrate that our model achieves competitive performance in terms of alignment accuracy compared to state-of-the-art deep learning-based methods (Euclidean distance of $0.93$ [mm] vs. $1.86$ [mm]). Furthermore, our approach exhibits significantly faster registration speed compared to conventional iterative methods ($0.096$ sec. vs. $1.17$ sec.). Our end-to-end SVR model facilitates real-time head motion tracking during fMRI acquisition, ensuring reliability and robustness against uncertainties in inputs. Source code, which includes the training and evaluations, will be available soon.

NPB-REC: A Non-parametric Bayesian Deep-learning Approach for Undersampled MRI Reconstruction with Uncertainty Estimation

Apr 06, 2024

Abstract: The ability to reconstruct high-quality images from undersampled MRI data is vital in improving MRI temporal resolution and reducing acquisition times. Deep learning methods have been proposed for this task, but the lack of verified methods to quantify the uncertainty in the reconstructed images has hampered clinical applicability. We introduce "NPB-REC", a non-parametric fully Bayesian framework for MRI reconstruction from undersampled data with uncertainty estimation. We use Stochastic Gradient Langevin Dynamics during training to characterize the posterior distribution of the network parameters. This enables us to both improve the quality of the reconstructed images and quantify the uncertainty in the reconstructed images. We demonstrate the efficacy of our approach on a multi-coil MRI dataset from the fastMRI challenge and compare it to the baseline End-to-End Variational Network (E2E-VarNet). Our approach outperforms the baseline in terms of reconstruction accuracy by means of PSNR and SSIM ($34.55$, $0.908$ vs. $33.08$, $0.897$, $p<0.01$, acceleration rate $R=8$) and provides uncertainty measures that correlate better with the reconstruction error (Pearson correlation, $R=0.94$ vs. $R=0.91$). Additionally, our approach exhibits better generalization capabilities against anatomical distribution shifts (PSNR and SSIM of $32.38$, $0.849$ vs. $31.63$, $0.836$, $p<0.01$, training on brain data, inference on knee data, acceleration rate $R=8$). NPB-REC has the potential to facilitate the safe utilization of deep learning-based methods for MRI reconstruction from undersampled data. Code and trained models are available at https://github.com/samahkh/NPB-REC.
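To make the role of Stochastic Gradient Langevin Dynamics more concrete, the following is a minimal sketch of the SGLD update on a toy problem, not the NPB-REC training code. It assumes a single scalar parameter (the mean of Gaussian data with known noise and a flat prior), and the function name, step size, and batch size are illustrative choices. Each step takes half a gradient step on a minibatch estimate of the negative log-posterior and adds Gaussian noise with variance equal to the step size; the iterates kept after burn-in then act as approximate posterior samples.

```python
import math
import random


def sgld_posterior_samples(data, noise_std, batch_size, step_size,
                           n_steps, burn_in, seed=0):
    """Sample the posterior of a Gaussian mean (flat prior) with SGLD."""
    rng = random.Random(seed)
    n = len(data)
    theta = 0.0
    samples = []
    for step in range(n_steps):
        batch = rng.sample(data, batch_size)
        # Minibatch estimate of the gradient of the negative log-posterior,
        # rescaled by n / batch_size so it is unbiased for the full gradient.
        grad = (n / batch_size) * sum((theta - x) / noise_std ** 2 for x in batch)
        # Langevin update: half a gradient step plus Gaussian noise whose
        # variance equals the step size.
        theta += -0.5 * step_size * grad + rng.gauss(0.0, math.sqrt(step_size))
        if step >= burn_in:
            samples.append(theta)
    return samples


# Toy data (assumed for illustration): 100 draws with true mean 1.5, unit noise.
data_rng = random.Random(1)
data = [data_rng.gauss(1.5, 1.0) for _ in range(100)]

samples = sgld_posterior_samples(data, noise_std=1.0, batch_size=10,
                                 step_size=1e-4, n_steps=20000, burn_in=2000)
posterior_mean = sum(samples) / len(samples)
posterior_std = math.sqrt(
    sum((s - posterior_mean) ** 2 for s in samples) / len(samples)
)
print(posterior_mean, posterior_std)
```

For this toy model the exact posterior is Gaussian, centered on the sample mean with standard deviation `noise_std / sqrt(len(data))`, so the spread of the retained samples can be checked against a known answer. In a reconstruction network the same spread, computed per pixel over outputs of sampled weights, would serve as the uncertainty map.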

NPB-REC: Non-parametric Assessment of Uncertainty in Deep-learning-based MRI Reconstruction from Undersampled Data

Aug 08, 2022

Abstract: Uncertainty quantification in deep-learning (DL) based image reconstruction models is critical for reliable clinical decision making based on the reconstructed images. We introduce "NPB-REC", a non-parametric fully Bayesian framework for uncertainty assessment in MRI reconstruction from undersampled "k-space" data. We use stochastic gradient Langevin dynamics (SGLD) during the training phase to characterize the posterior distribution of the network weights. We demonstrated the added value of our approach on the multi-coil brain MRI dataset from the fastMRI challenge, in comparison to the baseline E2E-VarNet with and without inference-time dropout. Our experiments show that NPB-REC outperforms the baseline in terms of reconstruction accuracy (PSNR and SSIM of $34.55$, $0.908$ vs. $33.08$, $0.897$, $p<0.01$) at high acceleration rates ($R=8$). This is also measured in regions of clinical annotations. More significantly, it provides a more accurate estimate of the uncertainty that correlates with the reconstruction error, compared to the Monte-Carlo inference-time dropout method (Pearson correlation coefficient of $R=0.94$ vs. $R=0.91$). The proposed approach has the potential to facilitate safe utilization of DL-based methods for MRI reconstruction from undersampled data. Code and trained models are available at https://github.com/samahkh/NPB-REC.

Deep-Learning based Motion Correction for Myocardial T1 Mapping

Sep 21, 2021

Abstract: Myocardial T1 mapping is a cardiac MRI technique used to assess myocardial fibrosis. In this technique, a series of T1-weighted MRI images are acquired with different saturation or inversion times. These images are fitted to the T1 model to estimate the model parameters and construct the desired T1 maps. In the presence of motion, the different T1-weighted images are not aligned. This, in turn, causes errors and inaccuracies in the final estimation of the T1 maps. Therefore, motion correction is a necessary process for myocardial T1 mapping. We present a deep-learning (DL) based system for motion correction of cardiac T1-weighted MRI images. When applying our DL-based motion correction system, we achieve a statistically significant improvement in the $R^2$ of the model-fitting regression compared to the model-fitting regression without motion correction (0.52 vs 0.29, p<0.05).
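The link between misalignment and a lower $R^2$ can be illustrated with a small per-pixel fit. The abstract does not state which T1 model is used, so this sketch assumes a two-parameter saturation-recovery curve $S(t) = A(1 - e^{-t/T_1})$; the function name, the grid search, and the sample times are all hypothetical. For each candidate T1 the amplitude has a closed-form least-squares solution, and $R^2$ is computed from the best fit.

```python
import math


def fit_saturation_recovery(times_ms, signals, t1_grid_ms):
    """Fit S(t) = A * (1 - exp(-t / T1)) by grid search over T1.

    Returns (t1_ms, amplitude, r_squared) for the best candidate.
    """
    best = None
    for t1 in t1_grid_ms:
        basis = [1.0 - math.exp(-t / t1) for t in times_ms]
        # For a fixed T1, the amplitude A has a closed-form least-squares fit.
        amp = sum(b * s for b, s in zip(basis, signals)) / sum(b * b for b in basis)
        sse = sum((s - amp * b) ** 2 for b, s in zip(basis, signals))
        if best is None or sse < best[2]:
            best = (t1, amp, sse)
    t1, amp, sse = best
    mean_signal = sum(signals) / len(signals)
    sst = sum((s - mean_signal) ** 2 for s in signals)
    return t1, amp, 1.0 - sse / sst


# Illustrative saturation times and a noise-free pixel with T1 = 1200 ms.
times_ms = [100, 200, 400, 800, 1600, 3200]
aligned = [1.0 - math.exp(-t / 1200.0) for t in times_ms]
# Crude stand-in for motion: the pixel samples a different tissue in some frames.
misaligned = [s + (0.15 if i % 2 else -0.15) for i, s in enumerate(aligned)]

t1_grid_ms = range(200, 3001, 10)
t1_aligned, _, r2_aligned = fit_saturation_recovery(times_ms, aligned, t1_grid_ms)
t1_moved, _, r2_moved = fit_saturation_recovery(times_ms, misaligned, t1_grid_ms)
print(t1_aligned, r2_aligned, t1_moved, r2_moved)
```

When the frames are aligned, the pixel follows the recovery curve and the fit explains nearly all of the variance. Frame-to-frame intensity jumps caused by motion cannot be absorbed by the two model parameters, so the residual grows and $R^2$ drops.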

NPBDREG: A Non-parametric Bayesian Deep-Learning Based Approach for Diffeomorphic Brain MRI Registration

Aug 29, 2021

Abstract: Quantification of uncertainty in deep-neural-network (DNN) based image registration algorithms plays an important role in the safe deployment of real-world medical applications and research-oriented processing pipelines, and in improving generalization capabilities. Currently available approaches for uncertainty estimation, including the variational encoder-decoder architecture and the inference-time dropout approach, require specific network architectures and assume a parametric distribution of the latent space, which may result in sub-optimal characterization of the posterior distribution for the predicted deformation fields. We introduce NPBDREG, a fully non-parametric Bayesian framework for unsupervised DNN-based deformable image registration that combines an Adam optimizer with stochastic gradient Langevin dynamics (SGLD) to characterize the true posterior distribution through posterior sampling. NPBDREG provides a principled non-parametric way to characterize the true posterior distribution, thus providing improved uncertainty estimates and confidence measures in a theoretically well-founded and computationally efficient way. We demonstrated the added value of NPBDREG, compared to the baseline probabilistic VoxelMorph unsupervised model (PrVXM), on brain MRI image registration using $390$ image pairs from four publicly available databases: MGH10, CUMC12, IBSR18 and LPBA40. NPBDREG shows a slight improvement in registration accuracy compared to PrVXM (Dice score of $0.73$ vs. $0.68$, $p \ll 0.01$), a better generalization capability for data corrupted by mixed-structure noise (e.g., Dice score of $0.729$ vs. $0.686$ for $\alpha=0.2$) and, most importantly, a significantly better correlation of the predicted uncertainty with out-of-distribution data ($r>0.95$ vs. $r<0.5$).

Unsupervised Deep-Learning Based Deformable Image Registration: A Bayesian Framework

Aug 10, 2020

Abstract: Unsupervised deep-learning (DL) models were recently proposed for deformable image registration tasks. In such models, a neural network is trained to predict the best deformation field by minimizing some dissimilarity function between the moving and the target images. After training on a dataset without reference deformation fields available, such a model can be used to rapidly predict the deformation field between newly seen moving and target images. Currently, the training process effectively provides a point estimate of the network weights rather than characterizing their entire posterior distribution. This may result in over-fitting, which may yield sub-optimal results at the inference phase, especially for small datasets, which are frequent in the medical imaging domain. We introduce a fully Bayesian framework for unsupervised DL-based deformable image registration. Our method provides a principled way to characterize the true posterior distribution, thus avoiding potential over-fitting. We used stochastic gradient Langevin dynamics (SGLD) to conduct the posterior sampling, which is both theoretically well-founded and computationally efficient. We demonstrated the added value of our Bayesian unsupervised DL-based registration framework on the MNIST and brain MRI (MGH10) datasets in comparison to the VoxelMorph unsupervised DL-based image registration framework. Our experiments show that our approach provided better estimates of the deformation field by means of improved mean-squared-error ($0.0063$ vs. $0.0065$) and Dice coefficient ($0.73$ vs. $0.71$) for the MNIST and the MGH10 datasets, respectively. Further, our approach provides an estimate of the uncertainty in the deformation field by characterizing the true posterior distribution.
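The two metrics quoted above have standard definitions, shown here as a minimal sketch on flattened arrays. The function names and the toy masks are illustrative and are not taken from the authors' evaluation code. Dice measures the overlap between a warped segmentation and the target segmentation, and mean-squared-error compares intensities element by element.

```python
def dice_coefficient(mask_a, mask_b):
    """Dice overlap between two binary masks given as flat 0/1 sequences."""
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must have the same number of elements")
    intersection = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    total = sum(1 for a in mask_a if a) + sum(1 for b in mask_b if b)
    # Two empty masks are treated as a perfect match by convention.
    return 1.0 if total == 0 else 2.0 * intersection / total


def mean_squared_error(values_a, values_b):
    """Mean of squared element-wise differences between two flat sequences."""
    if len(values_a) != len(values_b):
        raise ValueError("inputs must have the same number of elements")
    return sum((a - b) ** 2 for a, b in zip(values_a, values_b)) / len(values_a)


# Toy example: a warped label mask that is shifted by one voxel from the target.
warped_mask = [0, 1, 1, 1, 0, 0]
target_mask = [0, 0, 1, 1, 1, 0]
dice = dice_coefficient(warped_mask, target_mask)
mse = mean_squared_error(warped_mask, target_mask)
print(dice, mse)
```

Here the masks share two of their three foreground voxels, so the Dice coefficient is $2 \cdot 2 / (3 + 3) = 2/3$, and two of six elements differ, so the mean-squared-error is $1/3$.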

Texture and Structure Two-view Classification of Images

Aug 25, 2019

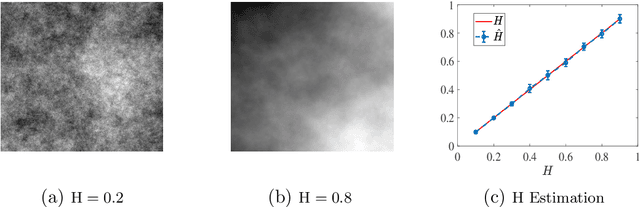

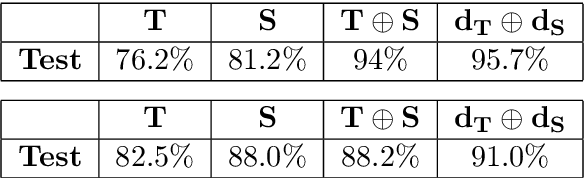

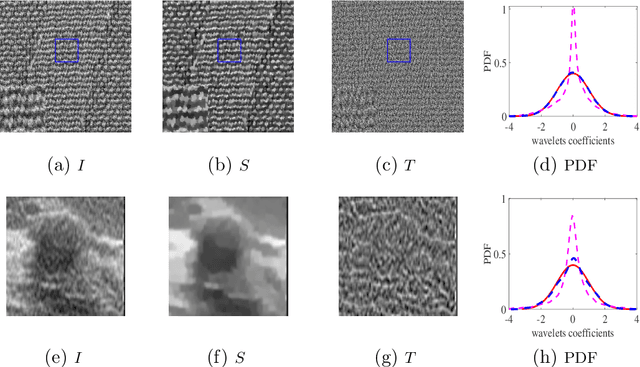

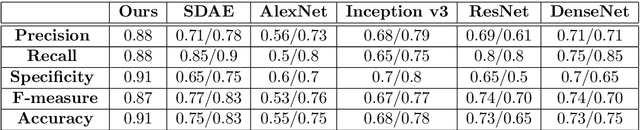

Abstract: Textural and structural features can be regarded as "two-view" feature sets. Inspired by the recent progress in multi-view learning, we propose a novel two-view classification method that models each feature set and optimizes the process of merging these views efficiently. Examples of implementation of this approach in classification of real-world data are presented, with special emphasis on medical images. We first decompose fully-textured images into two layers of representation, corresponding to natural stochastic textures (NST) and a structural layer, respectively. The structural, edge-and-curve-type information is mostly represented by the local spatial phase, whereas the pure NST has random phase and is characterized by Gaussianity and self-similarity. Therefore, the NST is modeled by the 2D self-similar process, fractional Brownian motion (fBm). The Hurst parameter, characteristic of fBm, specifies the roughness or irregularity of the texture. This leads us to its estimation and implementation along with other features extracted from the structure layer, to build the "two-view" feature sets used in our classification scheme. A shallow neural net (NN) is exploited to execute the process of merging these feature sets in a straightforward and efficient manner.

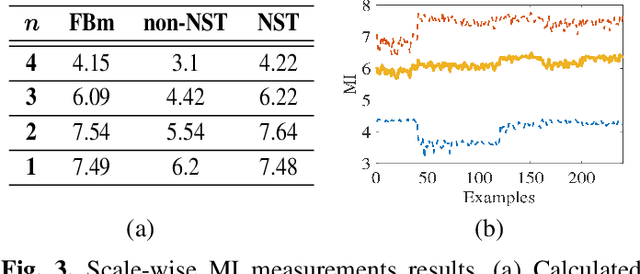

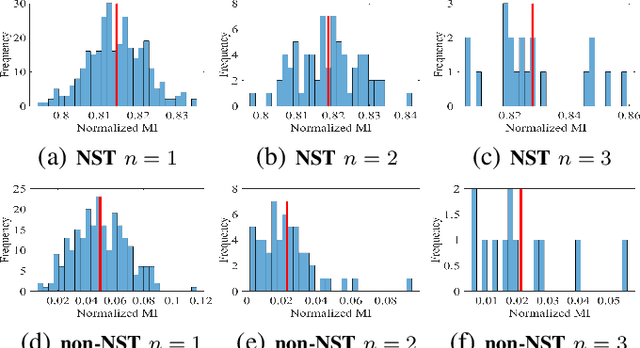

On the Self-Similarity of Natural Stochastic Textures

Jun 16, 2019

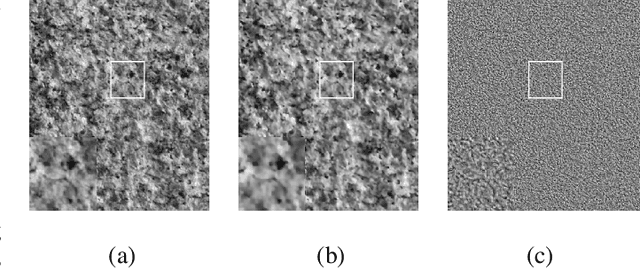

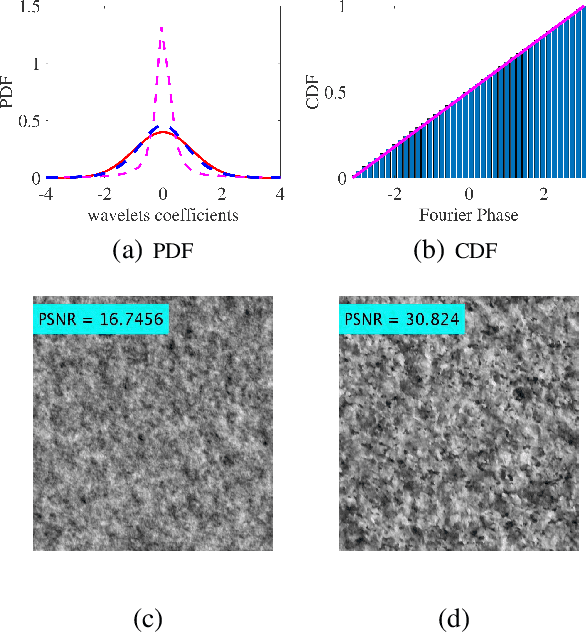

Abstract: Self-similarity is the essence of fractal images and, as such, characterizes natural stochastic textures. This paper is concerned with the property of self-similarity in the statistical sense in the case of fully-textured images that contain both stochastic texture and structural (mostly deterministic) information. We first decompose a textured image into two layers corresponding to its texture and structure, and show that the layer representing the stochastic texture is characterized by random phase of uniform distribution, unlike the phase of the structured information, which is coherent. The uniform distribution of the random phase is verified by using a suitable hypothesis testing framework. We proceed by proposing two approaches to the assessment of self-similarity. The first is based on patch-wise calculation of the mutual information, while the second measures the mutual information that exists across scales. Quantifying the extent of self-similarity by means of mutual information is of paramount importance in the analysis of natural stochastic textures that are encountered in medical imaging, geology, agriculture and in computer vision algorithms that are designed for application on fully-textured images.
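As a rough illustration of the patch-wise measure, mutual information between two patches can be estimated from their joint histogram. The abstract does not say which estimator the paper uses, so the plug-in histogram estimator below, the function name, and the toy patches of pre-quantized intensities are assumptions made for this sketch.

```python
import math
from collections import Counter


def mutual_information(patch_a, patch_b):
    """Plug-in estimate of mutual information (in bits) between two patches.

    Both patches are flat sequences of quantized intensities of equal length;
    values at the same index are treated as one joint observation.
    """
    if len(patch_a) != len(patch_b):
        raise ValueError("patches must have the same number of pixels")
    n = len(patch_a)
    joint = Counter(zip(patch_a, patch_b))
    marginal_a = Counter(patch_a)
    marginal_b = Counter(patch_b)
    mi = 0.0
    for (a, b), count in joint.items():
        p_ab = count / n
        # p(a, b) / (p(a) * p(b)) written in terms of raw counts.
        mi += p_ab * math.log2(p_ab * n * n / (marginal_a[a] * marginal_b[b]))
    return mi


# Toy patches with four gray levels.
patch = [0, 0, 1, 1, 2, 2, 3, 3]
unrelated = [0, 1, 0, 1, 0, 1, 0, 1]

mi_identical = mutual_information(patch, patch)
mi_unrelated = mutual_information(patch, unrelated)
print(mi_identical, mi_unrelated)
```

A patch compared with itself gives its own entropy (2 bits for four equally likely gray levels), while a patch whose values are statistically independent of the first gives zero. Real patches require choosing a quantization, and the plug-in estimator is biased for small patches, which this sketch does not address.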
