Salvatore Vitabile

Rad4XCNN: a new agnostic method for post-hoc global explanation of CNN-derived features by means of radiomics

Apr 26, 2024

Abstract: In recent years, artificial intelligence (AI) in clinical decision support systems (CDSS) has played a key role in harnessing machine learning and deep learning architectures. Despite their promising capabilities, the lack of transparency and explainability of AI models poses significant challenges, particularly in medical contexts where reliability is mandatory. Achieving transparency without compromising predictive accuracy remains a key challenge. This paper presents a novel method, namely Rad4XCNN, to augment the predictive power of CNN-derived features with the interpretability inherent in radiomic features. Rad4XCNN diverges from conventional methods based on saliency maps by associating intelligible meaning with CNN-derived features by means of radiomics, offering new perspectives on explanation methods beyond visualization maps. Using a breast cancer classification task as a case study, we evaluated Rad4XCNN on ultrasound imaging datasets, including an online dataset and two in-house datasets for internal and external validation. Some key results are: i) CNN-derived features guarantee more robust accuracy when compared against ViT-derived and radiomic features; ii) conventional visualization map methods for explanation present several pitfalls; iii) Rad4XCNN does not sacrifice model accuracy for explainability; iv) Rad4XCNN provides global explanation insights, enabling the physician to analyze the model outputs and findings. In addition, we highlight the importance of integrating interpretability into AI models for enhanced trust and adoption in clinical practice, emphasizing how our method can mitigate some concerns related to explainable AI methods.

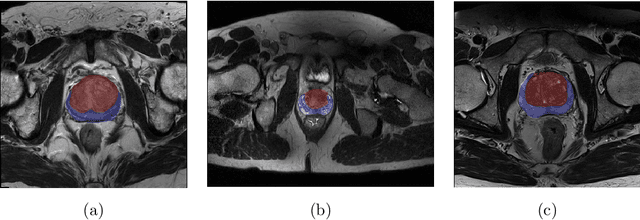

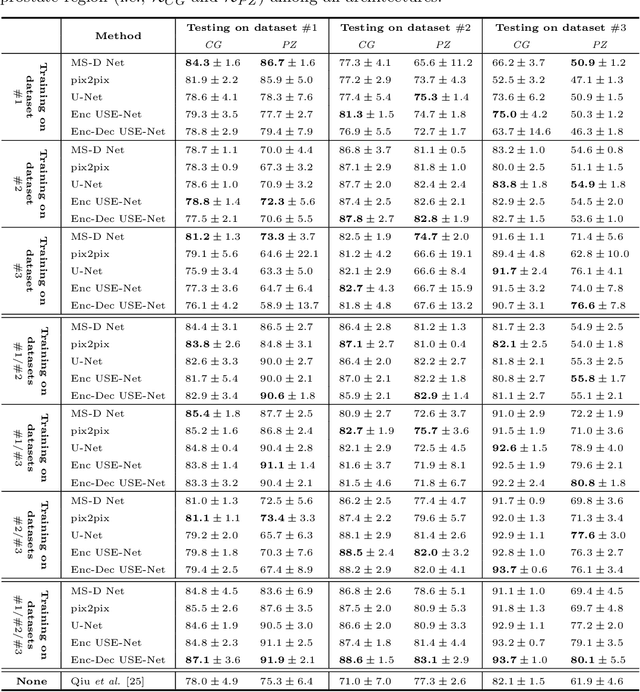

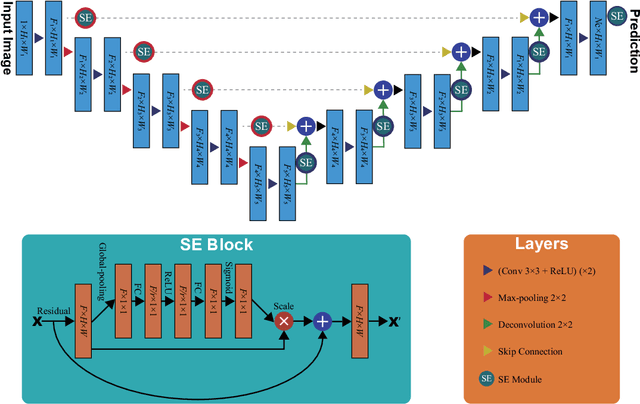

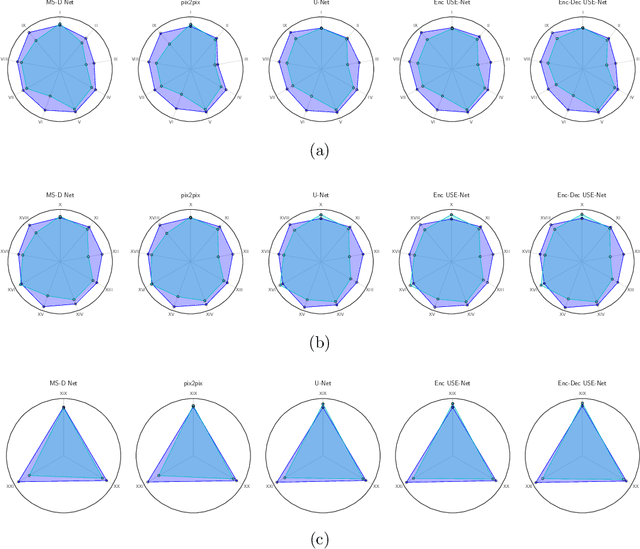

USE-Net: incorporating Squeeze-and-Excitation blocks into U-Net for prostate zonal segmentation of multi-institutional MRI datasets

Apr 17, 2019

Abstract: Prostate cancer is the most common malignant tumor in men, but prostate Magnetic Resonance Imaging (MRI) analysis remains challenging. Besides whole prostate gland segmentation, the capability to differentiate between the Central Gland (CG) and Peripheral Zone (PZ), whose boundary is blurry, can lead to differential diagnosis, since tumor frequency and severity differ in these regions. To tackle the prostate zonal segmentation task, we propose a novel Convolutional Neural Network (CNN), called USE-Net, which incorporates Squeeze-and-Excitation (SE) blocks into U-Net. Specifically, the SE blocks are added after every Encoder block (Enc USE-Net) or every Encoder-Decoder block (Enc-Dec USE-Net). This study evaluates the generalization ability of CNN-based architectures on three T2-weighted MRI datasets, each comprising a different number of patients and heterogeneous image characteristics, collected by different institutions. The following mixed scheme is used for training/testing: (i) training on either each individual dataset or multiple prostate MRI datasets and (ii) testing on all three datasets with all possible training/testing combinations. USE-Net is compared against three state-of-the-art CNN-based architectures (i.e., U-Net, pix2pix, and Mixed-Scale Dense Network), along with a semi-automatic continuous max-flow model. The results show that training on the union of the datasets generally outperforms training on each dataset separately, allowing for both intra-/cross-dataset generalization. Enc USE-Net shows good overall generalization under any training condition, while Enc-Dec USE-Net remarkably outperforms the other methods when trained on all datasets. These findings reveal that the SE blocks' adaptive feature recalibration provides excellent cross-dataset generalization when testing is performed on samples of the datasets used during training.
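
For readers unfamiliar with the "adaptive feature recalibration" mentioned above, the following is a minimal sketch of a generic Squeeze-and-Excitation block in plain Python. It is not the USE-Net implementation: the function name `squeeze_excite`, the toy sizes, the random weights, and the omission of bias terms are all assumptions made for illustration only.

```python
import math
import random

def squeeze_excite(features, w1, w2):
    """Rescale each channel of a C x H x W feature map by a gate in (0, 1)."""
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in features]
    # Excitation, step 1: bottleneck fully connected layer followed by ReLU.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w1]
    # Excitation, step 2: expand back to C channels and apply a sigmoid.
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w2]
    # Recalibration: multiply every value in a channel by that channel's gate.
    scaled = [[[g * v for v in r] for r in ch] for g, ch in zip(gates, features)]
    return scaled, gates

# Hypothetical toy sizes: channels, height, width, reduction ratio.
random.seed(0)
C, H, W, R = 4, 3, 3, 2
features = [[[random.gauss(0, 1) for _ in range(W)] for _ in range(H)]
            for _ in range(C)]
w1 = [[random.gauss(0, 1) for _ in range(C)] for _ in range(C // R)]  # C -> C/R
w2 = [[random.gauss(0, 1) for _ in range(C // R)] for _ in range(C)]  # C/R -> C
recalibrated, gates = squeeze_excite(features, w1, w2)
```

In a trained network the two weight matrices would be learned, so the gates would emphasize informative channels and suppress less useful ones; here they are random and only demonstrate the data flow.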

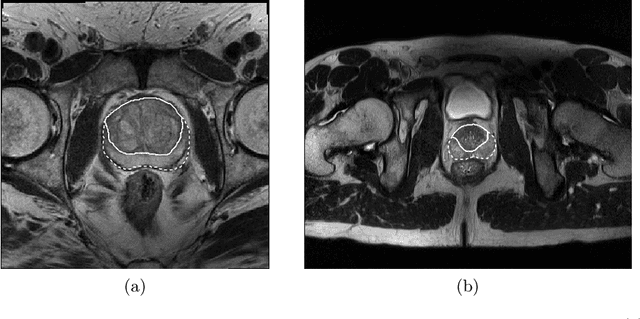

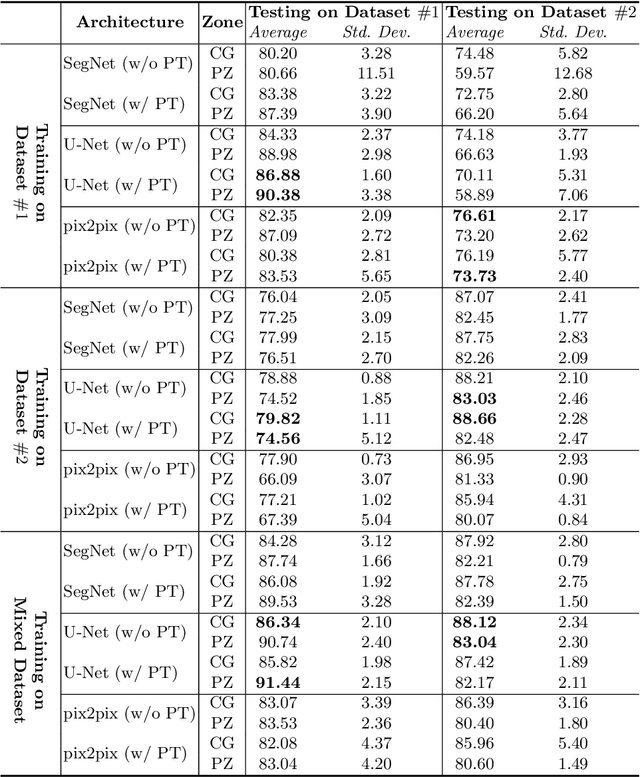

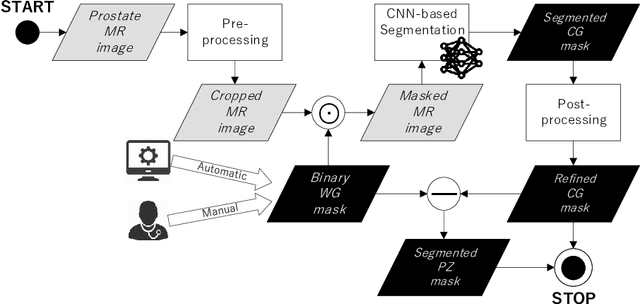

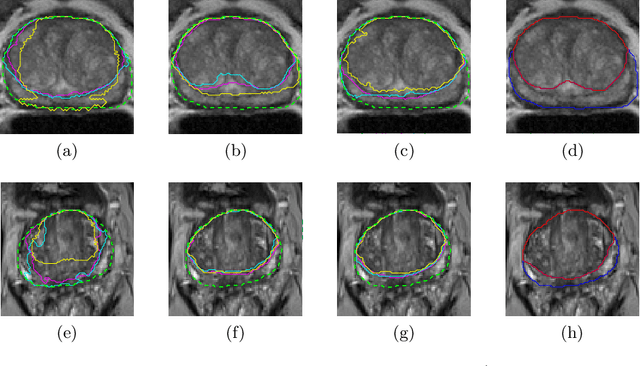

CNN-based Prostate Zonal Segmentation on T2-weighted MR Images: A Cross-dataset Study

Mar 29, 2019

Abstract: Prostate cancer is the most common cancer among US men. However, prostate imaging is still challenging despite the advances in multi-parametric Magnetic Resonance Imaging (MRI), which provides both morphologic and functional information pertaining to the pathological regions. Along with whole prostate gland segmentation, distinguishing between the Central Gland (CG) and Peripheral Zone (PZ) can guide differential diagnosis, since the frequency and severity of tumors differ in these regions; however, their boundary is often weak and fuzzy. This work presents a preliminary study on Deep Learning to automatically delineate the CG and PZ, aiming to evaluate the generalization ability of Convolutional Neural Networks (CNNs) on two multi-centric MRI prostate datasets. Specifically, we compared three CNN-based architectures: SegNet, U-Net, and pix2pix. In this context, the segmentation performance achieved with and without pre-training was compared in 4-fold cross-validation. In general, U-Net outperforms the other methods, especially when training and testing are performed on multiple datasets.
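
As a rough illustration of the 4-fold cross-validation protocol mentioned above, the sketch below partitions item indices into four folds and yields one train/test split per fold. The helper name `k_fold_splits`, the choice of contiguous unshuffled folds, and the example size of 10 items are assumptions; the abstract does not state how the folds were actually built.

```python
def k_fold_splits(n_items, k=4):
    """Yield (train_indices, test_indices) pairs for k contiguous folds."""
    indices = list(range(n_items))
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_items // k + (1 if i < n_items % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size

# Hypothetical example: 10 items (e.g. patients) split into 4 folds.
splits = list(k_fold_splits(10, k=4))
for fold, (train, test) in enumerate(splits):
    print(f"fold {fold}: train={train} test={test}")
```

Each item appears in exactly one test fold, so every sample is used for testing once and for training in the remaining folds.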
