Saish Jaiswal

Using Signal Processing in Tandem With Adapted Mixture Models for Classifying Genomic Signals

Nov 03, 2022

Abstract: Genomic signal processing has been used successfully in bioinformatics to analyze biomolecular sequences and gain varied insights into DNA structure, gene organization, protein binding, sequence evolution, etc. But challenges remain in finding the appropriate spectral representation of a biomolecular sequence, especially when multiple variable-length sequences need to be handled consistently. In this study, we address this challenge in the context of the well-studied problem of classifying genomic sequences into different taxonomic units (strain, phylum, order, etc.). We propose a novel technique that employs signal processing in tandem with Gaussian mixture models to improve the spectral representation of a sequence and, subsequently, the taxonomic classification accuracy. The sequences are first transformed into spectra and then projected onto a subspace in which sequences belonging to different taxa are more easily distinguished. Our method outperforms a similar state-of-the-art method on established benchmark datasets by an absolute margin of 6.06% in accuracy.
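The abstract does not specify how sequences are turned into spectra, so the following is only a minimal sketch of the general idea of giving variable-length sequences a fixed-length spectral representation. The nucleotide-to-number mapping `NUCLEOTIDE_VALUES`, the function name `magnitude_spectrum`, and the choice of 16 frequency bins are all assumptions made for illustration; they are not taken from the paper, and the Gaussian mixture model and subspace projection steps are not shown.

```python
import cmath

# Hypothetical numeric encoding of nucleotides (an assumption, not the paper's).
NUCLEOTIDE_VALUES = {"A": 1.0, "C": -1.0, "G": 2.0, "T": -2.0}


def magnitude_spectrum(sequence, n_bins=16):
    """Return a fixed-length magnitude spectrum for a DNA string.

    The spectrum is sampled at n_bins normalized frequencies in [0, 0.5),
    so sequences of different lengths yield vectors of the same size.
    """
    # Unknown symbols (e.g. "N") are mapped to 0.0 in this sketch.
    signal = [NUCLEOTIDE_VALUES.get(base, 0.0) for base in sequence.upper()]
    n = len(signal)
    if n == 0:
        return [0.0] * n_bins

    spectrum = []
    for k in range(n_bins):
        freq = 0.5 * k / n_bins  # normalized frequency in cycles per sample
        total = sum(
            x * cmath.exp(-2j * cmath.pi * freq * t)
            for t, x in enumerate(signal)
        )
        # Divide by the length so longer sequences do not dominate in scale.
        spectrum.append(abs(total) / n)
    return spectrum


short_spec = magnitude_spectrum("ACGTACGTAC")
long_spec = magnitude_spectrum("ACGTACGTACGTTTGACCA")
print(len(short_spec), len(long_spec))
```

Sampling the spectrum on a fixed frequency grid is one simple way to compare sequences of different lengths; the resulting vectors could then be fed to whatever model is used downstream.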

Dual Script E2E framework for Multilingual and Code-Switching ASR

Jun 02, 2021

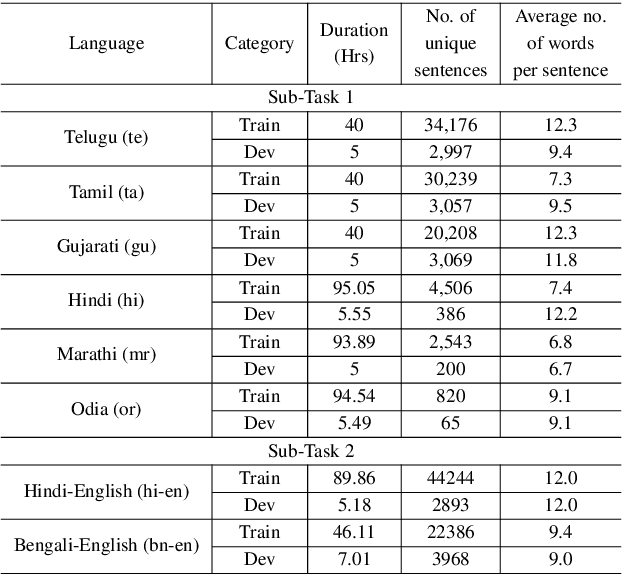

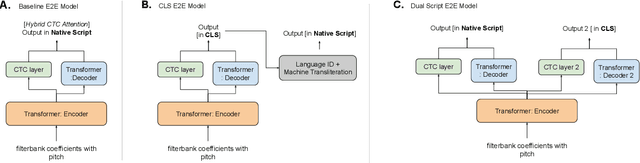

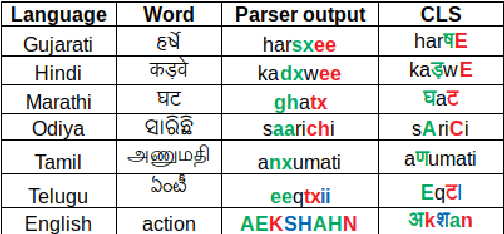

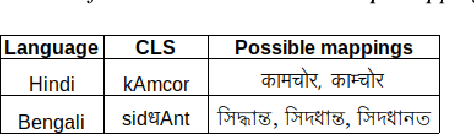

Abstract: India is home to multiple languages, and training automatic speech recognition (ASR) systems for these languages is challenging. Over time, each language has adopted words from other languages, such as English, leading to code-mixing. Most Indian languages also have their own unique scripts, which poses a major limitation in training multilingual and code-switching ASR systems. Inspired by results in text-to-speech synthesis, in this work, we use an in-house rule-based phoneme-level common label set (CLS) representation to train multilingual and code-switching ASR for Indian languages. We propose two end-to-end (E2E) ASR systems. In the first system, the E2E model is trained on the CLS representation, and we use a novel data-driven back-end to recover the native language script. In the second system, we propose a modification to the E2E model, wherein the CLS representation and the native language characters are used simultaneously for training. We show our results on the multilingual and code-switching tasks of the Indic ASR Challenge 2021. Our best results achieve approximately 6% and 5% improvement in word error rate over the baseline system for the multilingual and code-switching tasks, respectively, on the challenge development data.
