Saad Masrur

Thomas

Collection: Datasets from AFAR Challenge

May 11, 2025

Abstract:This paper presents a comprehensive real-world and Digital Twin (DT) dataset collected as part of the Find A Rover (AFAR) Challenge, organized by the NSF Aerial Experimentation and Research Platform for Advanced Wireless (AERPAW) testbed and hosted at the Lake Wheeler Field in Raleigh, North Carolina. The AFAR Challenge was a competition involving five finalist university teams, focused on promoting innovation in UAV-assisted radio frequency (RF) source localization. Participating teams were tasked with designing UAV flight trajectories and localization algorithms to detect the position of a hidden unmanned ground vehicle (UGV), also referred to as a rover, emitting wireless probe signals generated by GNU Radio. The competition was structured to evaluate solutions in a DT environment first, followed by deployment and testing in AERPAW's outdoor wireless testbed. For each team, the UGV was placed at three different positions, resulting in a total of 30 datasets, 15 collected in a DT simulation environment and 15 in a physical outdoor testbed. Each dataset contains time-synchronized measurements of received signal strength (RSS), received signal quality (RSQ), GPS coordinates, UAV velocity, and UAV orientation (roll, pitch, and yaw). Data is organized into structured folders by team, environment (DT and real-world), and UGV location. The dataset supports research in UAV-assisted RF source localization, air-to-ground (A2G) wireless propagation modeling, trajectory optimization, signal prediction, autonomous navigation, and DT validation. With approximately 300k time-synchronized samples collected from real-world experiments, the dataset provides a substantial foundation for training and evaluating deep learning (DL) models. Overall, the AFAR dataset serves as a valuable resource for advancing robust, real-world solutions in UAV-enabled wireless communications and sensing systems.

Impact of Altitude, Bandwidth, and NLOS Bias on TDOA-Based 3D UAV Localization: Experimental Results and CRLB Analysis

Feb 03, 2025

Abstract:This paper investigates unmanned aerial vehicle (UAV) localization using time difference of arrival (TDOA) measurements under mixed line-of-sight (LOS) and non-line-of-sight (NLOS) conditions. A 3D TDOA Cramér-Rao lower bound (CRLB) model is developed accounting for varying altitudes and signal bandwidths. The model is compared to five real-world UAV flight experiments conducted at different altitudes (40 m, 70 m, 100 m) and bandwidths (1.25 MHz, 2.5 MHz, 5 MHz) using Keysight N6841A radio frequency (RF) sensors of the NSF AERPAW platform. Results show that altitude, bandwidth, and NLOS obstructions significantly impact localization accuracy. Higher bandwidths enhance signal time resolution, while increased altitudes mitigate multipath and NLOS biases, both contributing to improved performance. However, hovering close to RF sensors degrades accuracy due to antenna pattern misalignment and geometric dilution of precision. These findings emphasize the inadequacy of traditional LOS-based models in NLOS environments and highlight the importance of adaptive approaches for accurate localization in challenging scenarios.
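
For orientation, the textbook form of a TDOA position CRLB under LOS-only, zero-mean Gaussian errors can be written as below. This is a generic sketch and not necessarily the exact model developed in the paper: the symbols $\mathbf{p}$ (UAV position), $\mathbf{s}_i$ (sensor positions, with sensor 1 as the reference), $\boldsymbol{\Sigma}$ (range-difference error covariance), $\beta$ (effective bandwidth), and $\mathrm{SNR}$ are assumptions introduced here, and NLOS bias is not modeled.

```latex
% Range difference between sensor i and the reference sensor 1
d_{i1}(\mathbf{p}) = \lVert \mathbf{p} - \mathbf{s}_i \rVert - \lVert \mathbf{p} - \mathbf{s}_1 \rVert,
\qquad i = 2, \dots, N

% Row of the Jacobian H: difference of unit vectors pointing from each sensor to the UAV
\mathbf{h}_i^{\top} =
  \frac{(\mathbf{p} - \mathbf{s}_i)^{\top}}{\lVert \mathbf{p} - \mathbf{s}_i \rVert}
  - \frac{(\mathbf{p} - \mathbf{s}_1)^{\top}}{\lVert \mathbf{p} - \mathbf{s}_1 \rVert}

% Fisher information matrix and the resulting bound on the mean squared position error
\mathbf{J}(\mathbf{p}) = \mathbf{H}^{\top} \boldsymbol{\Sigma}^{-1} \mathbf{H},
\qquad
\mathbb{E}\,\lVert \hat{\mathbf{p}} - \mathbf{p} \rVert^{2} \ge \operatorname{tr}\!\left( \mathbf{J}(\mathbf{p})^{-1} \right)

% Classical single-link delay bound: timing variance shrinks as bandwidth grows
\sigma_{\tau}^{2} \ge \frac{1}{8 \pi^{2} \beta^{2}\, \mathrm{SNR}}
```

In this form, altitude enters through the geometry encoded in $\mathbf{H}$, and bandwidth enters through the timing variance that scales $\boldsymbol{\Sigma}$, which matches the qualitative trends the abstract reports.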

Transforming Indoor Localization: Advanced Transformer Architecture for NLOS Dominated Wireless Environments with Distributed Sensors

Jan 14, 2025

Abstract:Indoor localization in challenging non-line-of-sight (NLOS) environments often leads to mediocre accuracy with traditional approaches. Deep learning (DL) has been applied to tackle these challenges; however, many DL approaches overlook computational complexity, especially for floating-point operations (FLOPs), making them unsuitable for resource-limited devices. Transformer-based models have achieved remarkable success in natural language processing (NLP) and computer vision (CV) tasks, motivating their use in wireless applications. However, their use in indoor localization remains nascent, and directly applying Transformers for indoor localization can be both computationally intensive and exhibit limitations in accuracy. To address these challenges, in this work, we introduce a novel tokenization approach, referred to as Sensor Snapshot Tokenization (SST), which preserves variable-specific representations of power delay profile (PDP) and enhances attention mechanisms by effectively capturing multi-variate correlation. Complementing this, we propose a lightweight Swish-Gated Linear Unit-based Transformer (L-SwiGLU Transformer) model, designed to reduce computational complexity without compromising localization accuracy. Together, these contributions mitigate the computational burden and dependency on large datasets, making Transformer models more efficient and suitable for resource-constrained scenarios. The proposed tokenization method enables the Vanilla Transformer to achieve a 90th percentile positioning error of 0.388 m in a highly NLOS indoor factory, surpassing conventional tokenization methods. The L-SwiGLU ViT further reduces the error to 0.355 m, achieving an 8.51% improvement. Additionally, the proposed model outperforms a 14.1 times larger model with a 46.13% improvement, underscoring its computational efficiency.
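
As a rough illustration of the Swish-gated linear unit mentioned above, the following is a minimal pure-Python sketch of a generic SwiGLU feed-forward layer. It is not the authors' L-SwiGLU Transformer: the function names and the weight names `W_gate`, `W_up`, and `W_down` are assumptions made for this example, and biases, normalization, and attention are omitted.

```python
import math


def swish(z):
    """Swish (SiLU) activation: z * sigmoid(z)."""
    return z / (1.0 + math.exp(-z))


def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def swiglu_ffn(x, W_gate, W_up, W_down):
    """Generic SwiGLU feed-forward: W_down @ (swish(W_gate @ x) * (W_up @ x))."""
    gate = [swish(g) for g in matvec(W_gate, x)]   # gating branch
    up = matvec(W_up, x)                           # linear branch
    hidden = [g * u for g, u in zip(gate, up)]     # element-wise gating
    return matvec(W_down, hidden)                  # project back down


# Toy usage with hypothetical 2 -> 2 -> 1 weights.
identity = [[1.0, 0.0], [0.0, 1.0]]
y = swiglu_ffn([1.0, 2.0], identity, identity, [[1.0, 1.0]])
```

The gate lets the layer scale each hidden unit by a smooth, input-dependent factor, which is the property gated units are usually chosen for.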

Energy-Efficient Sleep Mode Optimization of 5G mmWave Networks Using Deep Contextual MAB

May 15, 2024

Abstract:Millimeter-wave (mmWave) networks, integral to 5G communication, offer a vast spectrum that addresses the issue of spectrum scarcity and enhances peak rate and capacity. However, their dense deployment, necessary to counteract propagation losses, leads to high power consumption. An effective strategy to reduce this energy consumption in mobile networks is the sleep mode optimization (SMO) of base stations (BSs). In this paper, we propose a novel SMO approach for mmWave BSs in a 3D urban environment. This approach, which incorporates a neural network (NN) based contextual multi-armed bandit (C-MAB) with an epsilon decay algorithm, accommodates the dynamic and diverse traffic of user equipment (UE) by clustering the UEs in their respective tracking areas (TAs). Our strategy includes beamforming, which helps reduce energy consumption from the UE side, while SMO minimizes energy use from the BS perspective. We extended our investigation to include Random, Epsilon Greedy, Upper Confidence Bound (UCB), and Load Based sleep mode (SM) strategies. We compared the performance of our proposed C-MAB based SM algorithm with those of All On and other alternative approaches. Simulation results show that our proposed method outperforms all other SM strategies in terms of the $10^{th}$ percentile of user rate and average throughput while demonstrating comparable average throughput to the All On approach. Importantly, it outperforms all approaches in terms of energy efficiency (EE).
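
To make the idea of a contextual bandit with epsilon decay concrete, here is a minimal sketch under simplifying assumptions. It replaces the paper's neural network with a per-context table of running mean rewards, and the class name, the context labels (`low_load`, `high_load`), the two arms (0 = sleep, 1 = on), and the reward values are all hypothetical.

```python
import random


class EpsilonDecayBandit:
    """Tabular contextual bandit with a multiplicatively decaying epsilon.

    Simplification: a lookup table of mean rewards stands in for the
    NN-based reward estimator described in the abstract.
    """

    def __init__(self, n_arms, eps_start=1.0, eps_min=0.05, decay=0.99, seed=0):
        self.n_arms = n_arms
        self.eps = eps_start
        self.eps_min = eps_min
        self.decay = decay
        self.rng = random.Random(seed)
        self.counts = {}   # context -> number of pulls per arm
        self.values = {}   # context -> running mean reward per arm

    def _init_context(self, context):
        if context not in self.counts:
            self.counts[context] = [0] * self.n_arms
            self.values[context] = [0.0] * self.n_arms

    def greedy(self, context):
        """Arm with the highest estimated reward for this context."""
        self._init_context(context)
        vals = self.values[context]
        return max(range(self.n_arms), key=vals.__getitem__)

    def select(self, context):
        """Explore with probability eps, otherwise exploit."""
        if self.rng.random() < self.eps:
            return self.rng.randrange(self.n_arms)
        return self.greedy(context)

    def update(self, context, arm, reward):
        """Update the running mean, then decay eps toward its floor."""
        self._init_context(context)
        self.counts[context][arm] += 1
        n = self.counts[context][arm]
        self.values[context][arm] += (reward - self.values[context][arm]) / n
        self.eps = max(self.eps_min, self.eps * self.decay)


# Toy environment with hypothetical rewards: sleeping pays off under low
# load, staying on pays off under high load.
REWARD = {("low_load", 0): 1.0, ("low_load", 1): 0.2,
          ("high_load", 0): 0.0, ("high_load", 1): 1.0}

bandit = EpsilonDecayBandit(n_arms=2)
for t in range(500):
    context = ("low_load", "high_load")[t % 2]
    arm = bandit.select(context)
    bandit.update(context, arm, REWARD[(context, arm)])
```

Starting with a large epsilon and shrinking it over time favors exploration while the reward estimates are still poor and exploitation once they have stabilized.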

Digital Twins for Supporting AI Research with Autonomous Vehicle Networks

Apr 01, 2024

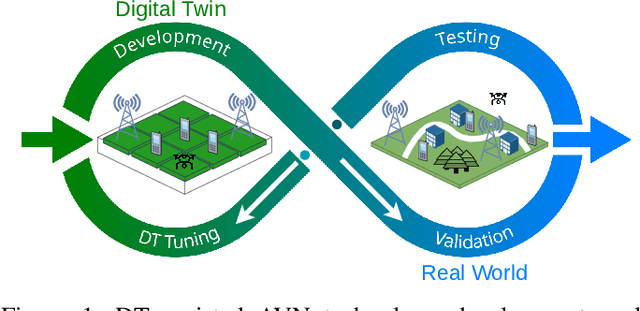

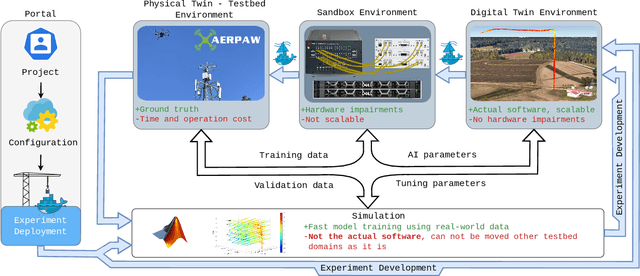

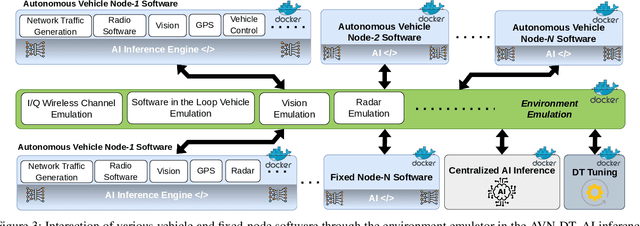

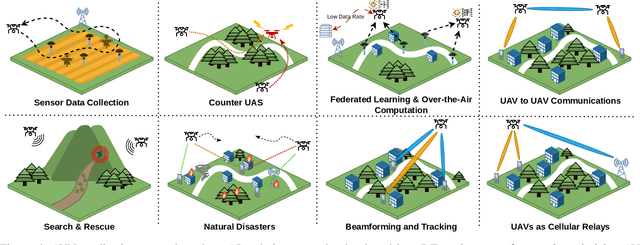

Abstract:Digital twins (DTs), which are virtual environments that simulate, predict, and optimize the performance of their physical counterparts, are envisioned to be essential technologies for advancing next-generation wireless networks. While DTs have been studied extensively for wireless networks, their use in conjunction with autonomous vehicles with programmable mobility remains relatively under-explored. In this paper, we study DTs used as a development environment to design, deploy, and test artificial intelligence (AI) techniques that use real-time observations, e.g., radio key performance indicators, for vehicle trajectory and network optimization decisions in an autonomous vehicle network (AVN). We first compare and contrast the use of simulation, digital twin (software-in-the-loop (SITL)), sandbox (hardware-in-the-loop (HITL)), and physical testbed environments for their suitability in developing and testing AI algorithms for AVNs. We then review various representative use cases of DTs for AVN scenarios. Finally, we provide an example from the NSF AERPAW platform where a DT is used to develop and test AI-aided solutions for autonomous unmanned aerial vehicles for localizing a signal source based solely on link quality measurements. Our results in the physical testbed show that SITL DTs, when supplemented with data from real-world (RW) measurements and simulations, can serve as an ideal environment for developing and testing innovative AI solutions for AVNs.
