Sérgio Pequito

Logarithmically Quantized Distributed Optimization over Dynamic Multi-Agent Networks

Oct 27, 2024

Abstract: Distributed optimization finds many applications in machine learning, signal processing, and control systems. In these real-world applications, the constraints of communication networks, particularly limited bandwidth, necessitate implementing quantization techniques. In this paper, we propose distributed optimization dynamics over multi-agent networks subject to logarithmically quantized data transmission. Under this condition, data exchange benefits from representing smaller values with more bits and larger values with fewer bits. Compared to uniform quantization, this allows higher precision in representing near-optimal values and improves the accuracy of the distributed optimization algorithm. The proposed optimization dynamics comprise a primary state variable converging to the optimizer and an auxiliary variable tracking the objective function's gradient. Our setting accommodates dynamic network topologies, resulting in a hybrid system requiring convergence analysis using matrix perturbation theory and eigenspectrum analysis.
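The contrast between logarithmic and uniform quantization can be illustrated with a minimal sketch. The abstract does not give the quantizer's exact form, so the function below assumes one common definition, $q(x) = \mathrm{sign}(x)\,e^{\rho\,\mathrm{round}(\ln|x| / \rho)}$; the names `log_quantize`, `uniform_quantize`, `rho`, and `delta` are hypothetical and chosen only for illustration.

```python
import math

def log_quantize(x: float, rho: float = 0.125) -> float:
    """Assumed logarithmic quantizer: round ln|x| to a multiple of rho.

    The levels are spaced geometrically, so the relative error is bounded
    by exp(rho / 2) - 1 regardless of the magnitude of x.
    """
    if x == 0.0:
        return 0.0
    level = round(math.log(abs(x)) / rho)
    return math.copysign(math.exp(rho * level), x)

def uniform_quantize(x: float, delta: float = 0.125) -> float:
    """Uniform quantizer: round x to a multiple of delta.

    The absolute error is bounded by delta / 2, so values much smaller
    than delta collapse to zero.
    """
    return delta * round(x / delta)

if __name__ == "__main__":
    for x in (1e-4, 0.03, 1.7, 250.0):
        print(x, log_quantize(x), uniform_quantize(x))
```

Under this assumed form, a small value such as `1e-4` keeps a bounded relative error after logarithmic quantization, while the uniform quantizer with the same step maps it to zero. This is the sense in which values near the optimizer (where the exchanged quantities become small) are represented more precisely.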

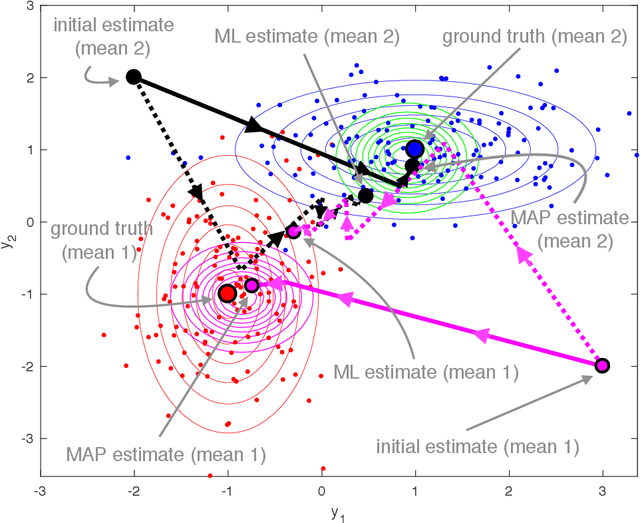

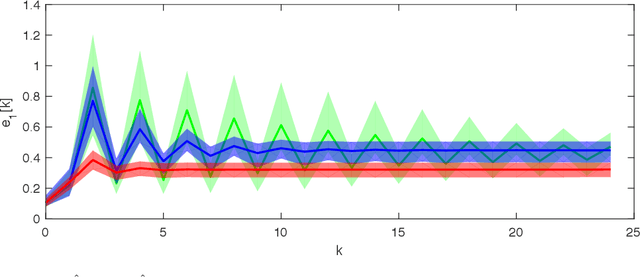

A Dynamical Systems Approach for Convergence of the Bayesian EM Algorithm

Jun 23, 2020

Abstract: Out of the recent advances in systems and control (S&C)-based analysis of optimization algorithms, not enough work has been specifically dedicated to machine learning (ML) algorithms and their applications. This paper addresses this gap by illustrating how (discrete-time) Lyapunov stability theory can serve as a powerful tool to aid, or even lead, in the analysis (and potential design) of optimization algorithms that are not necessarily gradient-based. The particular ML problem that this paper focuses on is that of parameter estimation in an incomplete-data Bayesian framework via the popular optimization algorithm known as maximum a posteriori expectation-maximization (MAP-EM). Following first principles from dynamical systems stability theory, conditions for convergence of MAP-EM are developed. Furthermore, if additional assumptions are met, we show that fast convergence (linear or quadratic) is achieved, which could have been difficult to unveil without our adopted S&C approach. The convergence guarantees in this paper effectively expand the set of sufficient conditions for EM applications, thereby demonstrating the potential of similar S&C-based convergence analysis of other ML algorithms.

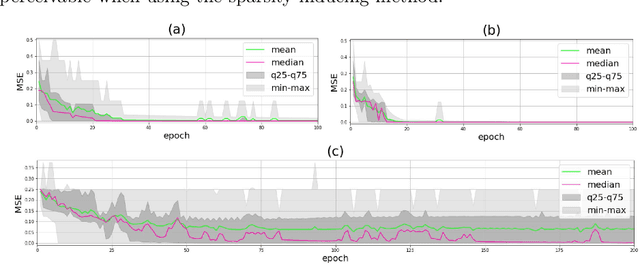

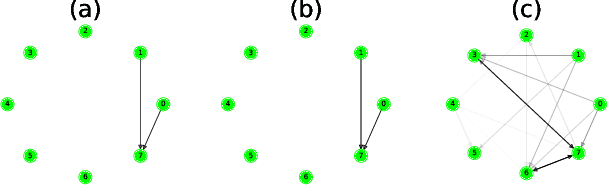

Equilibrium Propagation for Complete Directed Neural Networks

Jun 17, 2020

Abstract: Artificial neural networks, one of the most successful approaches to supervised learning, were originally inspired by their biological counterparts. However, the most successful learning algorithm for artificial neural networks, backpropagation, is considered biologically implausible. We contribute to the topic of biologically plausible neuronal learning by building upon and extending the equilibrium propagation learning framework. Specifically, we introduce: new neuronal dynamics and a learning rule for arbitrary network architectures; a sparsity-inducing method able to prune irrelevant connections; and a dynamical-systems characterization of the models, using Lyapunov theory.

Analysis of Gradient-Based Expectation-Maximization-Like Algorithms via Integral Quadratic Constraints

Mar 03, 2019

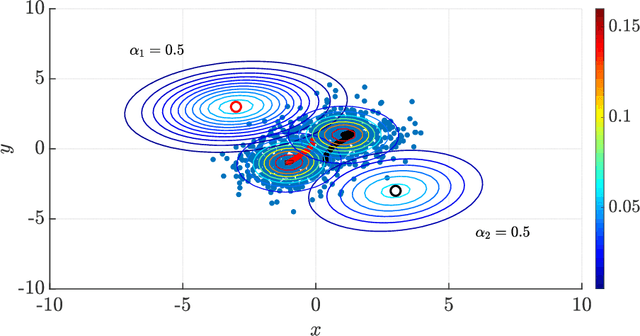

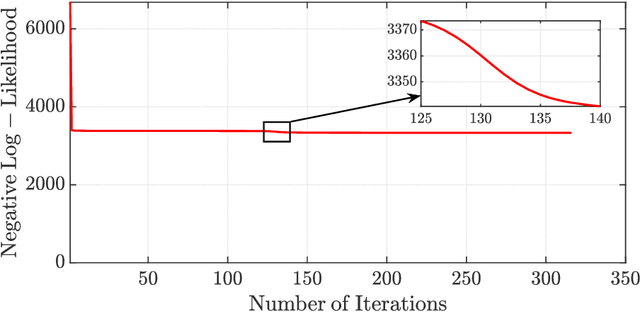

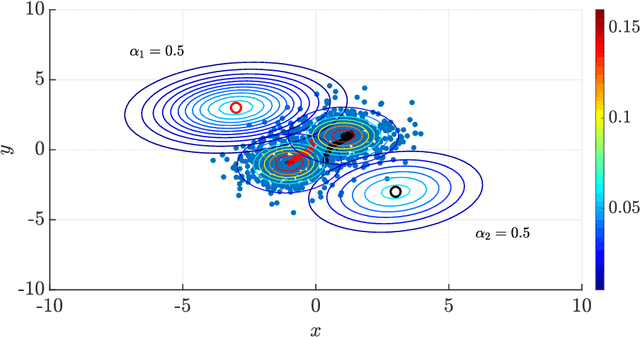

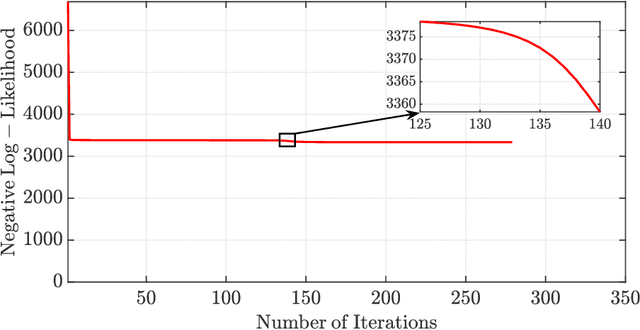

Abstract: The Expectation-Maximization (EM) algorithm is one of the most popular methods used to solve the problem of distribution-based clustering in unsupervised learning. In this paper, we analyze a generalized EM (GEM) algorithm and a designed EM-like algorithm as linear time-invariant (LTI) systems with a feedback nonlinearity, leveraging tools from robust control theory, particularly integral quadratic constraints (IQCs). Towards this goal, we investigate the absolute stability of dynamical systems of the above form with a sector-bounded feedback nonlinearity that represent the aforementioned algorithms. This analysis allows us to craft a strongly convex objective function, which leads to the design of the aforementioned novel EM-like algorithm for Gaussian mixture models (GMMs). Furthermore, it allows us to establish bounds on the convergence rates of the studied algorithms. In particular, the derived bounds for our proposed EM-like algorithm generalize bounds found in the literature for the EM algorithm on GMMs, and our analysis of an existing gradient ascent GEM algorithm based on the $Q$-function allows us to approximately recover bounds found in the literature.
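For readers unfamiliar with the setup, the standard form of an LTI system in feedback with a sector-bounded nonlinearity can be written as follows. This is the textbook formulation, not necessarily the exact model used in the paper; the symbols $A$, $B$, $C$, $\xi_k$, $\phi$, $\alpha$, and $\beta$ are assumptions introduced here for illustration.

$$
\xi_{k+1} = A\,\xi_k + B\,u_k, \qquad y_k = C\,\xi_k, \qquad u_k = \phi(y_k),
$$

$$
\big(\phi(y) - \alpha y\big)^{\top}\big(\beta y - \phi(y)\big) \ge 0 \quad \text{for all } y, \qquad \alpha \le \beta .
$$

The second condition says that $\phi$ lies in the sector $[\alpha, \beta]$. As a familiar instance, gradient descent with step size $\eta$ on a function $f$ fits this template with $A = I$, $B = -\eta I$, $C = I$, and $\phi = \nabla f$.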

Convergence of the Expectation-Maximization Algorithm Through Discrete-Time Lyapunov Stability Theory

Oct 04, 2018

Abstract: In this paper, we propose a dynamical systems perspective of the Expectation-Maximization (EM) algorithm. More precisely, we can analyze the EM algorithm as a nonlinear state-space dynamical system. The EM algorithm is widely adopted for data clustering and density estimation in statistics, control systems, and machine learning. This algorithm belongs to a large class of iterative algorithms known as proximal point methods. In particular, we re-interpret limit points of the EM algorithm and other local maximizers of the likelihood function it seeks to optimize as equilibria in its dynamical system representation. Furthermore, we propose to assess its convergence as asymptotic stability in the sense of Lyapunov. As a consequence, we proceed by leveraging recent results regarding discrete-time Lyapunov stability theory in order to establish asymptotic stability (and thus, convergence) in the dynamical system representation of the EM algorithm.
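The idea of viewing EM as a state-space system $\theta_{k+1} = F(\theta_k)$ can be sketched on a toy problem. The example below is not taken from the paper: it assumes a symmetric two-component mixture $0.5\,\mathcal{N}(\mu, 1) + 0.5\,\mathcal{N}(-\mu, 1)$ with unknown $\mu$, for which the EM update has the closed form $\mu_{k+1} = \frac{1}{n}\sum_i \tanh(\mu_k x_i)\,x_i$. The negative log-likelihood is used as an illustrative Lyapunov candidate; the names `em_map` and `neg_log_lik` are hypothetical.

```python
import math
import random

def em_map(mu: float, xs: list[float]) -> float:
    """One EM step, i.e. the state update F(mu), for the assumed mixture
    0.5*N(mu, 1) + 0.5*N(-mu, 1)."""
    return sum(math.tanh(mu * x) * x for x in xs) / len(xs)

def neg_log_lik(mu: float, xs: list[float]) -> float:
    """Negative log-likelihood up to a constant that does not depend on mu.

    Serves here as a Lyapunov candidate: EM never increases it.
    """
    return -sum(-0.5 * mu * mu + math.log(math.cosh(mu * x)) for x in xs)

# Synthetic data from the assumed model with true mean 2.0.
rng = random.Random(0)
xs = [rng.choice((-1.0, 1.0)) * 2.0 + rng.gauss(0.0, 1.0) for _ in range(500)]

# Simulate the discrete-time system mu_{k+1} = F(mu_k).
trajectory = [0.3]
for _ in range(50):
    trajectory.append(em_map(trajectory[-1], xs))

# Evaluate the Lyapunov candidate along the trajectory.
values = [neg_log_lik(mu, xs) for mu in trajectory]

if __name__ == "__main__":
    print("final estimate:", trajectory[-1])
    print("fixed-point residual:", abs(em_map(trajectory[-1], xs) - trajectory[-1]))
```

In this sketch the limit of `trajectory` is an equilibrium of the map `em_map`, and `values` is non-increasing along the iterates, which is the discrete-time Lyapunov-style behavior the abstract refers to. Note that $\mu = 0$ is also an equilibrium of this map, so the starting point is chosen away from it.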
