Ryan Sander

Neighborhood Mixup Experience Replay: Local Convex Interpolation for Improved Sample Efficiency in Continuous Control Tasks

May 18, 2022

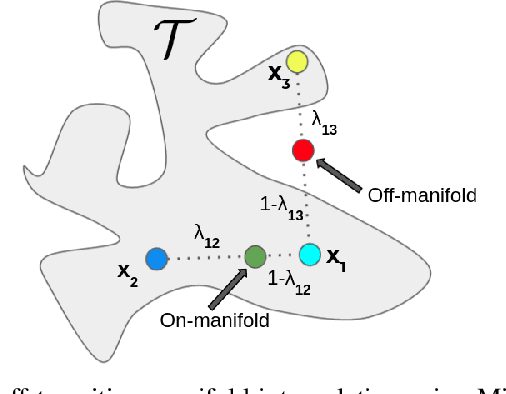

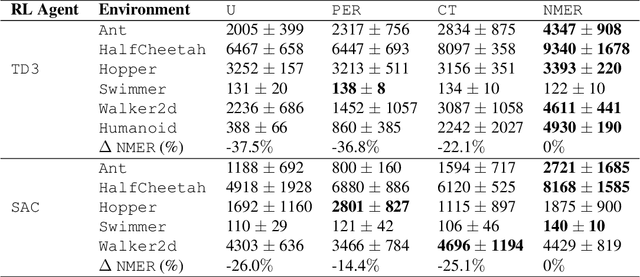

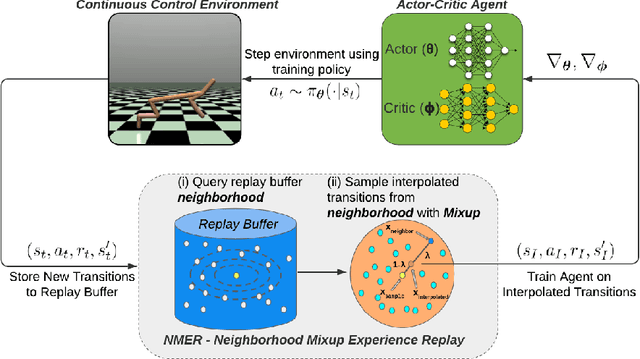

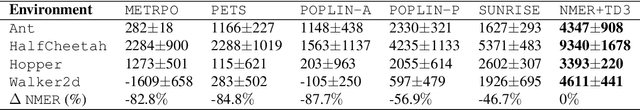

Abstract: Experience replay plays a crucial role in improving the sample efficiency of deep reinforcement learning agents. Recent advances in experience replay propose using Mixup (Zhang et al., 2018) to further improve sample efficiency via synthetic sample generation. We build upon this technique with Neighborhood Mixup Experience Replay (NMER), a geometrically-grounded replay buffer that interpolates transitions with their closest neighbors in state-action space. NMER preserves a locally linear approximation of the transition manifold by only applying Mixup between transitions with vicinal state-action features. Under NMER, a given transition's set of state-action neighbors is dynamic and episode agnostic, in turn encouraging greater policy generalizability via inter-episode interpolation. We combine our approach with recent off-policy deep reinforcement learning algorithms and evaluate on continuous control environments. We observe that NMER improves sample efficiency by an average of 94% (TD3) and 29% (SAC) over baseline replay buffers, enabling agents to effectively recombine previous experiences and learn from limited data.
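The neighborhood interpolation described in this abstract can be illustrated with a minimal NumPy sketch. This is not the paper's implementation: the function name `nmer_sample`, the brute-force nearest-neighbor search, the z-score feature scaling, and the defaults `k=10` and `alpha=1.0` are all assumptions made for illustration. The sketch samples transitions, finds each one's nearest neighbors in standardized state-action space, and returns a Mixup-style convex combination of each transition with one randomly chosen neighbor.

```python
import numpy as np


def nmer_sample(states, actions, rewards, next_states,
                batch_size, k=10, alpha=1.0, rng=None):
    """Hypothetical sketch of neighborhood Mixup sampling.

    All arrays are 2-D with one transition per row, e.g. rewards has
    shape (n, 1). Requires k <= n - 1 so a transition never pairs with itself.
    """
    rng = np.random.default_rng() if rng is None else rng
    n = states.shape[0]
    if not 1 <= k <= n - 1:
        raise ValueError("k must satisfy 1 <= k <= n - 1")

    # Assumption: neighbors are found in z-scored, concatenated (state, action) space.
    feats = np.concatenate([states, actions], axis=1)
    feats = (feats - feats.mean(axis=0)) / (feats.std(axis=0) + 1e-8)

    # Uniformly sample the "anchor" transitions.
    idx = rng.integers(0, n, size=batch_size)

    # Brute-force Euclidean distances from each anchor to every stored transition.
    dists = np.linalg.norm(feats[idx, None, :] - feats[None, :, :], axis=-1)
    dists[np.arange(batch_size), idx] = np.inf  # exclude the anchor itself

    # Indices of the k nearest neighbors, then one random neighbor per anchor.
    neighbors = np.argpartition(dists, k - 1, axis=1)[:, :k]
    pick = neighbors[np.arange(batch_size), rng.integers(0, k, size=batch_size)]

    # Mixup coefficient drawn from Beta(alpha, alpha), one per sampled pair.
    lam = rng.beta(alpha, alpha, size=(batch_size, 1))

    def mix(x):
        # Convex combination of anchor and neighbor rows.
        return lam * x[idx] + (1.0 - lam) * x[pick]

    return mix(states), mix(actions), mix(rewards), mix(next_states)
```

Because each output is a convex combination of two stored transitions, every interpolated value stays within the range spanned by the buffer. A practical replay buffer would likely replace the brute-force search with a spatial index and refresh it as new transitions arrive.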

On the Importance of 3D Surface Information for Remote Sensing Classification Tasks

Apr 26, 2021

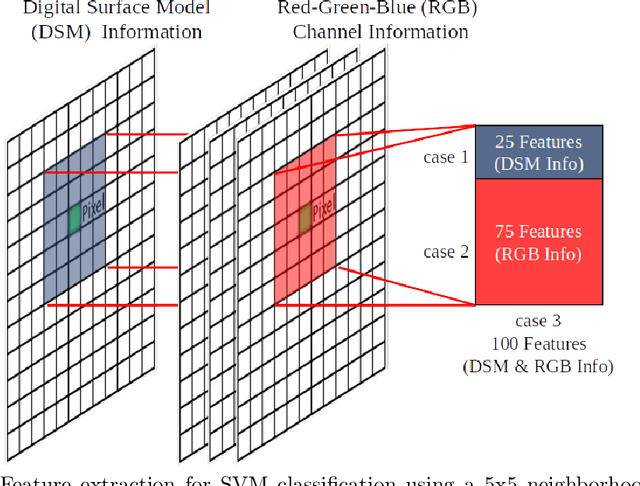

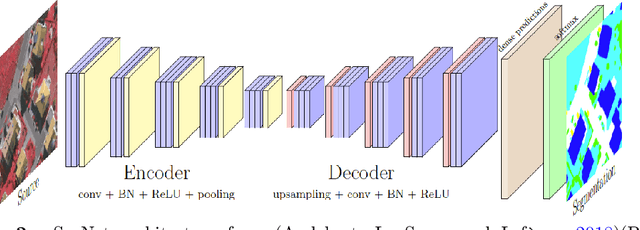

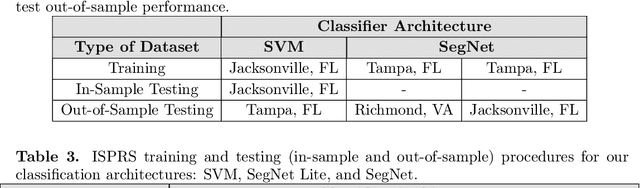

Abstract: There has been a surge in remote sensing machine learning applications that operate on data from active or passive sensors as well as multi-sensor combinations (Ma et al., 2019). Despite this surge, however, there has been relatively little study on the comparative value of 3D surface information for machine learning classification tasks. Adding 3D surface information to RGB imagery can provide crucial geometric information for semantic classes such as buildings, and can thus improve out-of-sample predictive performance. In this paper, we examine in-sample and out-of-sample classification performance of Fully Convolutional Neural Networks (FCNNs) and Support Vector Machines (SVMs) trained with and without 3D normalized digital surface model (nDSM) information. We assess classification performance using multispectral imagery from the International Society for Photogrammetry and Remote Sensing (ISPRS) 2D Semantic Labeling contest and the United States Special Operations Command (USSOCOM) Urban 3D Challenge. We find that providing RGB classifiers with additional 3D nDSM information results in little increase in in-sample classification performance, suggesting that spectral information alone may be sufficient for the given classification tasks. However, we observe that providing these RGB classifiers with additional nDSM information leads to significant gains in out-of-sample predictive performance. Specifically, we observe an average improvement in out-of-sample all-class accuracy of 14.4% on the ISPRS dataset and an average improvement in out-of-sample F1 score of 8.6% on the USSOCOM dataset. In addition, the experiments establish that nDSM information is critical in machine learning and classification settings that face training sample scarcity.
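One simple way to give an RGB classifier access to nDSM information is to append the height raster as a fourth input channel. The sketch below illustrates that idea only; the function name `stack_rgb_ndsm`, the `max_height` parameter, and the scaling choices (RGB divided by 255, heights clipped at zero and scaled to [0, 1]) are assumptions for illustration, not the preprocessing used in the paper.

```python
import numpy as np


def stack_rgb_ndsm(rgb, ndsm, max_height=None):
    """Hypothetical helper: build an (H, W, 4) input from RGB and nDSM rasters.

    rgb  : (H, W, 3) uint8 image.
    ndsm : (H, W) array of above-ground heights.
    max_height : optional height used for scaling; defaults to the tile maximum.
    """
    if rgb.shape[:2] != ndsm.shape:
        raise ValueError("rgb and ndsm must share the same height and width")

    # Scale colour channels to [0, 1].
    rgb_f = rgb.astype(np.float32) / 255.0

    # Assumption: negative heights are noise, so clip them to zero.
    height = np.clip(ndsm.astype(np.float32), 0.0, None)
    scale = float(height.max()) if max_height is None else float(max_height)
    if scale > 0:
        height = np.clip(height / scale, 0.0, 1.0)

    # Append the height raster as a fourth channel.
    return np.concatenate([rgb_f, height[..., None]], axis=-1)
```

Training the same model once on the first three channels and once on all four gives the kind of with/without nDSM comparison the abstract describes.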

Deep Latent Competition: Learning to Race Using Visual Control Policies in Latent Space

Feb 19, 2021

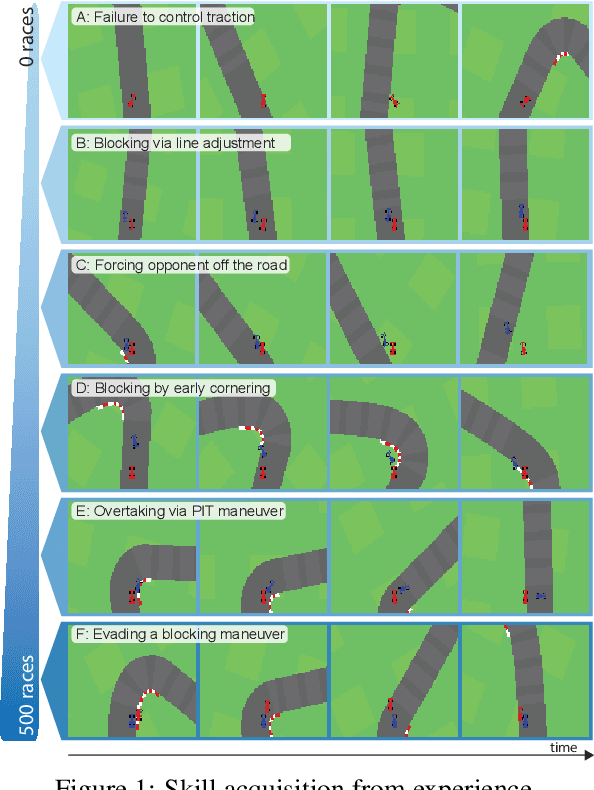

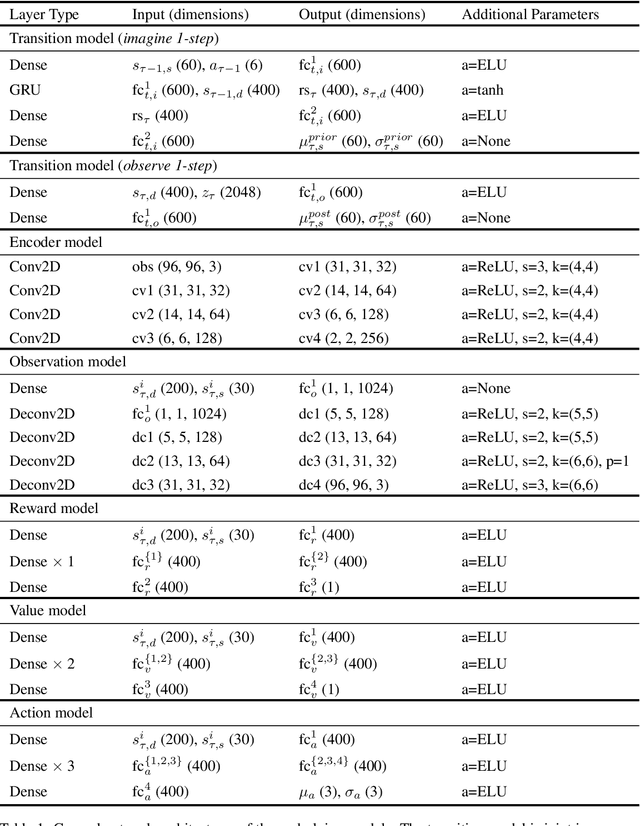

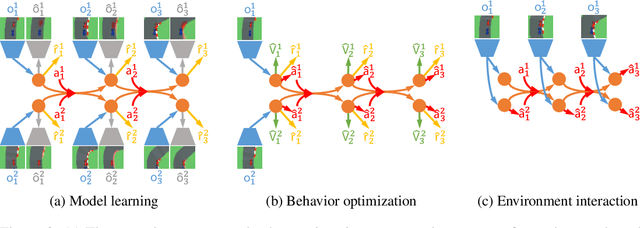

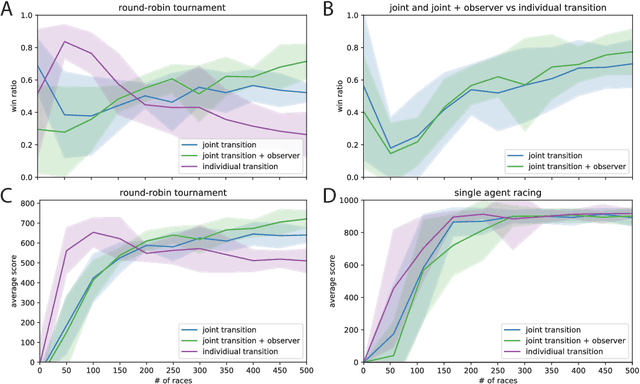

Abstract: Learning competitive behaviors in multi-agent settings such as racing requires long-term reasoning about potential adversarial interactions. This paper presents Deep Latent Competition (DLC), a novel reinforcement learning algorithm that learns competitive visual control policies through self-play in imagination. The DLC agent imagines multi-agent interaction sequences in the compact latent space of a learned world model that combines a joint transition function with opponent viewpoint prediction. Imagined self-play reduces costly sample generation in the real world, while the latent representation enables planning to scale gracefully with observation dimensionality. We demonstrate the effectiveness of our algorithm in learning competitive behaviors on a novel multi-agent racing benchmark that requires planning from image observations. Code and videos are available at https://sites.google.com/view/deep-latent-competition.
