Ruying Liu

Exploring Emotion-Sensitive LLM-Based Conversational AI

Feb 13, 2025

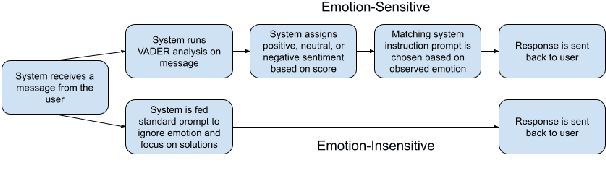

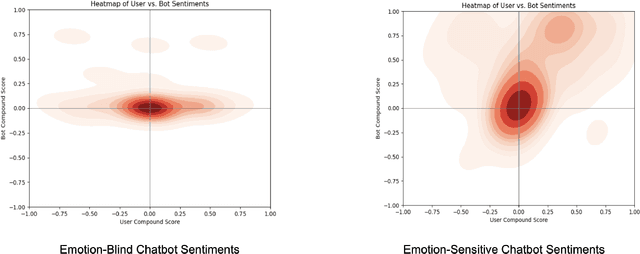

Abstract: Conversational AI chatbots have become increasingly common within the customer service industry. Despite improvements in their emotional development, they often lack the authenticity of real customer service interactions or the competence of service providers. By comparing emotion-sensitive and emotion-insensitive LLM-based chatbots across 30 participants, we aim to explore how emotional sensitivity in chatbots influences perceived competence and overall customer satisfaction in service interactions. Additionally, we employ sentiment analysis techniques to analyze and interpret the emotional content of user inputs. We highlight that perceptions of chatbot trustworthiness and competence were higher in the case of the emotion-sensitive chatbot, even if issue resolution rates were not affected. We discuss implications of improved user satisfaction from emotion-sensitive chatbots and potential applications in support services.

Enhancing Building Safety Design for Active Shooter Incidents: Exploration of Building Exit Parameters using Reinforcement Learning-Based Simulations

Jul 15, 2024

Abstract: With the alarming rise in active shooter incidents (ASIs) in the United States, enhancing public safety through building design has become a pressing need. This study proposes a reinforcement learning-based simulation approach addressing gaps in existing research that has neglected the dynamic behaviours of shooters. We developed an autonomous agent to simulate an active shooter within a realistic office environment, aiming to offer insights into the interactions between building design parameters and ASI outcomes. A case study is conducted to quantitatively investigate the impact of building exit numbers (total count of accessible exits) and configuration (arrangement of which exits are available or not) on evacuation and harm rates. Findings demonstrate that greater exit availability significantly improves evacuation outcomes and reduces harm. Exits nearer to the shooter's initial position hold greater importance for accessibility than those farther away. By encompassing dynamic shooter behaviours, this study offers preliminary insights into effective building safety design against evolving threats.

Creating a Lens of Chinese Culture: A Multimodal Dataset for Chinese Pun Rebus Art Understanding

Jun 14, 2024

Abstract: Large vision-language models (VLMs) have demonstrated remarkable abilities in understanding everyday content. However, their performance in the domain of art, particularly culturally rich art forms, remains less explored. As a pearl of human wisdom and creativity, art encapsulates complex cultural narratives and symbolism. In this paper, we offer the Pun Rebus Art Dataset, a multimodal dataset for art understanding deeply rooted in traditional Chinese culture. We focus on three primary tasks: identifying salient visual elements, matching elements with their symbolic meanings, and explaining the conveyed messages. Our evaluation reveals that state-of-the-art VLMs struggle with these tasks, often providing biased and hallucinated explanations and showing limited improvement through in-context learning. By releasing the Pun Rebus Art Dataset, we aim to facilitate the development of VLMs that can better understand and interpret culturally specific content, promoting greater inclusiveness beyond English-based corpora.
