Ruisheng Diao

TVNet: A Novel Time Series Analysis Method Based on Dynamic Convolution and 3D-Variation

Mar 10, 2025

Abstract: With the recent development and advancement of Transformer and MLP architectures, significant strides have been made in time series analysis. Conversely, the performance of Convolutional Neural Networks (CNNs) in time series analysis has fallen short of expectations, diminishing their potential for future applications. Our research aims to enhance the representational capacity of CNNs in time series analysis by introducing novel perspectives and design innovations. Specifically, we introduce a novel time series reshaping technique that considers the inter-patch, intra-patch, and cross-variable dimensions. Building on this, we propose TVNet, a dynamic convolutional network that leverages a 3D perspective to perform time series analysis. TVNet retains the computational efficiency of CNNs and achieves state-of-the-art results in five key time series analysis tasks, offering a superior balance of efficiency and performance over the state-of-the-art Transformer-based and MLP-based models. Additionally, our findings suggest that TVNet exhibits enhanced transferability and robustness. It therefore provides a new perspective for applying CNNs to advanced time series analysis tasks.

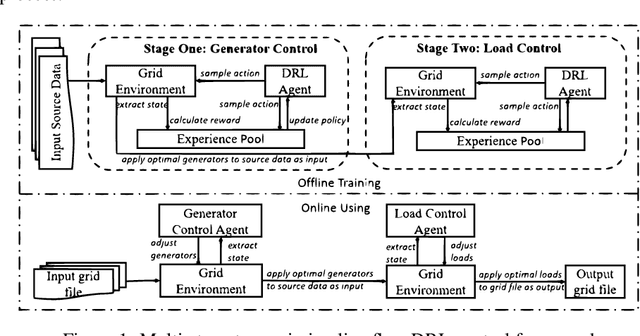

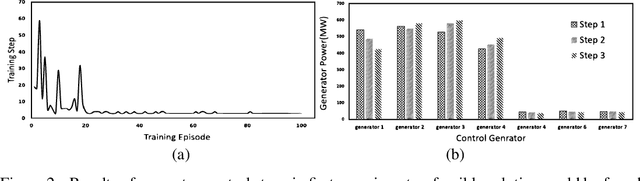

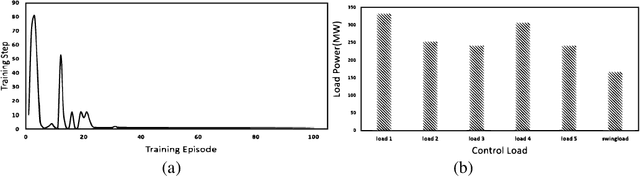

Multi-Stage Transmission Line Flow Control Using Centralized and Decentralized Reinforcement Learning Agents

Feb 16, 2021

Abstract: Planning future operational scenarios of bulk power systems that meet security and economic constraints typically requires labor-intensive effort to run massive simulations. To automate this process and relieve engineers' burden, this paper presents a novel multi-stage control approach that trains centralized and decentralized reinforcement learning agents to automatically adjust grid controllers, regulating transmission line flows under normal conditions and under contingencies. The power grid flow control problem is formulated as a Markov Decision Process (MDP). At stage one, a centralized soft actor-critic (SAC) agent is trained to adjust generator active power outputs across a wide area so that transmission line flows stay within specified security limits. If line overloading issues remain unresolved, stage two trains a decentralized SAC agent to apply load throw-over at local substations. The effectiveness of the proposed approach is verified on a series of actual planning cases used for operating the power grid of SGCC Zhejiang Electric Power Company.

Rethink AI-based Power Grid Control: Diving Into Algorithm Design

Dec 23, 2020

Abstract: Recently, deep reinforcement learning (DRL)-based approaches have shown promise in solving complex decision and control problems in the power engineering domain. In this paper, we present an in-depth analysis of DRL-based voltage control from the aspects of algorithm selection, state space representation, and reward engineering. To resolve observed issues, we propose a novel imitation learning-based approach to directly map power grid operating points to effective actions without any interim reinforcement learning process. The performance results demonstrate that the proposed approach has strong generalization ability with much less training time. The agent trained by imitation learning is effective and robust in solving the voltage control problem and outperforms the former RL agents.

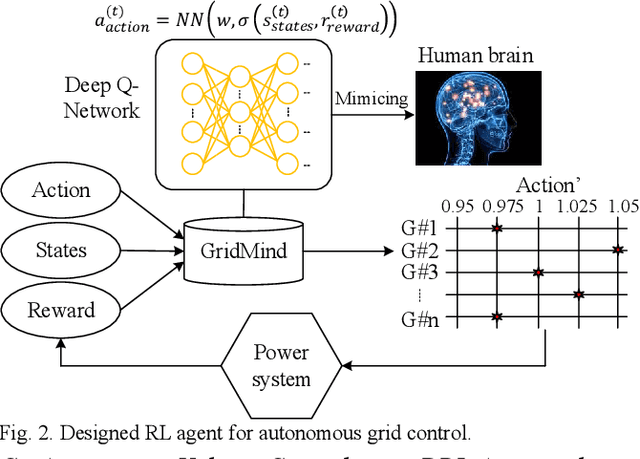

Autonomous Voltage Control for Grid Operation Using Deep Reinforcement Learning

Apr 24, 2019

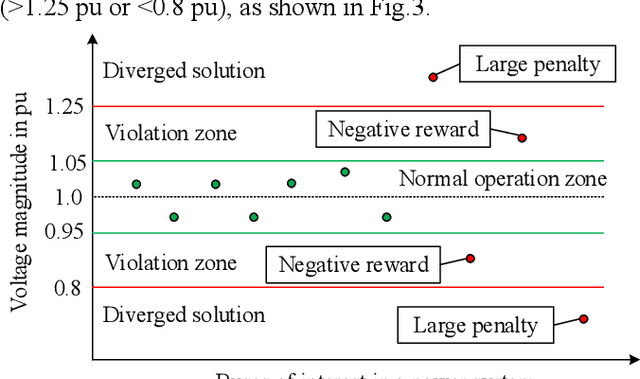

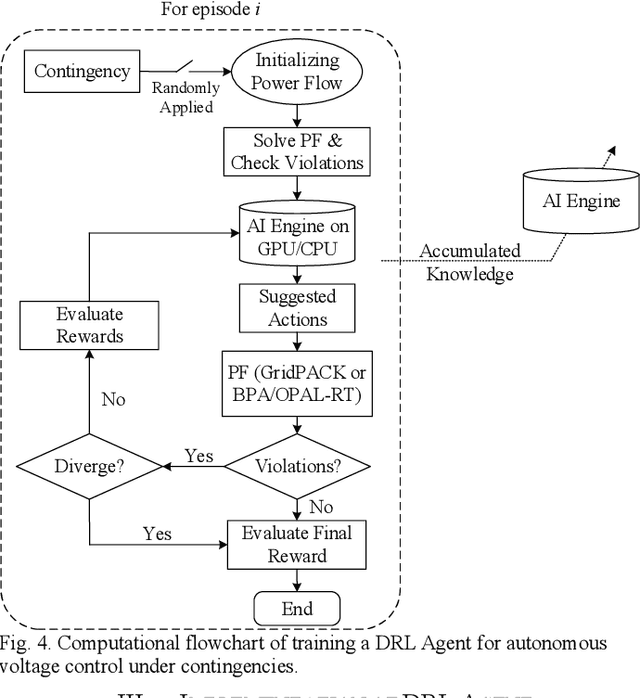

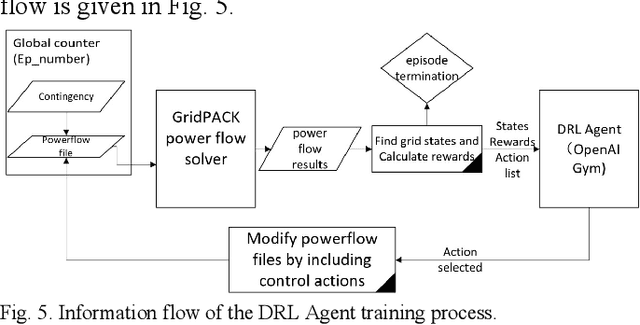

Abstract: Modern power grids are experiencing grand challenges caused by the stochastic and dynamic nature of growing renewable energy and demand response. Traditional theoretical assumptions and operational rules may be violated, and existing control systems struggle to adapt because they lack the computational power and accurate grid models needed for real-time use, leading to growing concerns about the secure and economic operation of the power grid. Existing operational control actions are typically determined offline and are therefore less optimized. This paper presents a novel paradigm, Grid Mind, for autonomous grid operational controls using deep reinforcement learning. The proposed AI agent for voltage control can learn its control policy through interactions with massive offline simulations and adapt its behavior to new changes, including not only load/generation variations but also topological changes. A properly trained agent is tested on the IEEE 14-bus system with tens of thousands of scenarios, and promising performance is demonstrated in applying autonomous voltage controls for secure grid operation.
