Rong Pan

Measuring Dataset Diversity from a Geometric Perspective

Feb 10, 2026

Abstract:Diversity can be broadly defined as the presence of meaningful variation across elements, which can be viewed from multiple perspectives, including statistical variation and geometric structural richness in the dataset. Existing diversity metrics, such as feature-space dispersion and metric-space magnitude, primarily capture distributional variation or entropy, while largely neglecting the geometric structure of datasets. To address this gap, we introduce a framework based on topological data analysis (TDA) and persistence landscapes (PLs) to extract and quantify geometric features from data. This approach provides a theoretically grounded means of measuring diversity beyond entropy, capturing the rich geometric and structural properties of datasets. Through extensive experiments across diverse modalities, we demonstrate that our proposed PLs-based diversity metric (PLDiv) is powerful, reliable, and interpretable, directly linking data diversity to its underlying geometry and offering a foundational tool for dataset construction, augmentation, and evaluation.

Active Learning for Multiple Change Point Detection in Non-stationary Time Series with Deep Gaussian Processes

May 26, 2025

Abstract:Multiple change point (MCP) detection in non-stationary time series is challenging due to the variety of underlying patterns. To address these challenges, we propose a novel algorithm that integrates Active Learning (AL) with Deep Gaussian Processes (DGPs) for robust MCP detection. Our method leverages spectral analysis to identify potential changes and employs AL to strategically select new sampling points for improved efficiency. By incorporating the modeling flexibility of DGPs with the change-identification capabilities of spectral methods, our approach adapts to diverse spectral change behaviors and effectively localizes multiple change points. Experiments on both simulated and real-world data demonstrate that our method outperforms existing techniques in terms of detection accuracy and sampling efficiency for non-stationary time series.

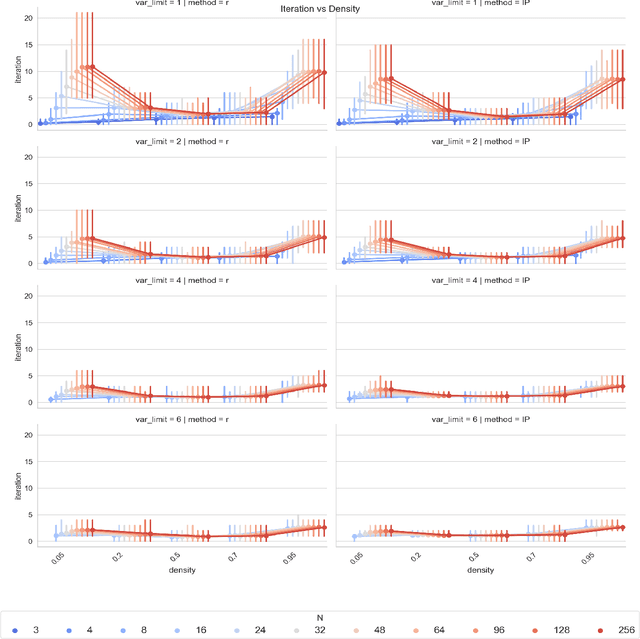

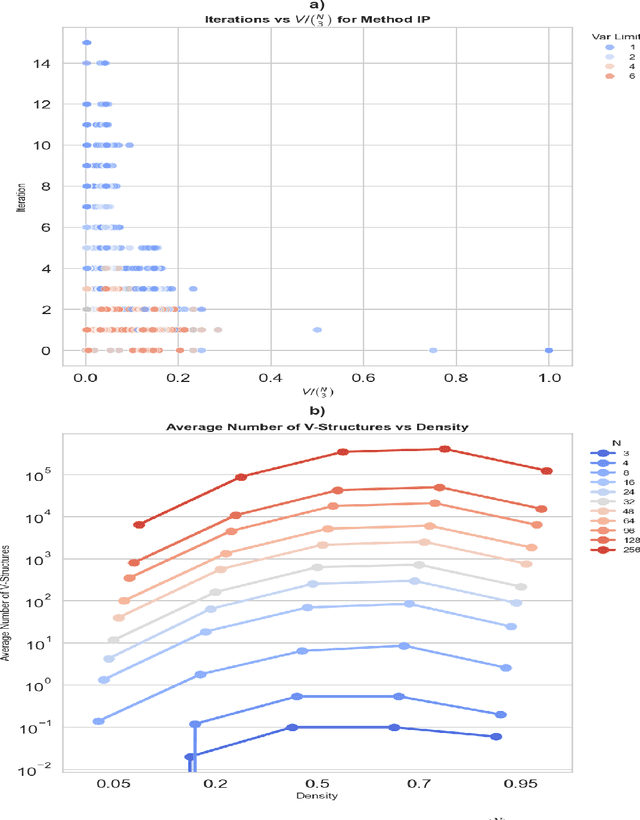

From Observation to Orientation: an Adaptive Integer Programming Approach to Intervention Design

Apr 10, 2025

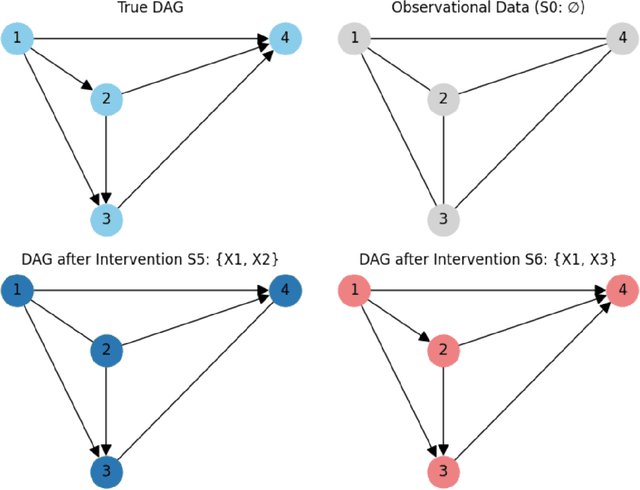

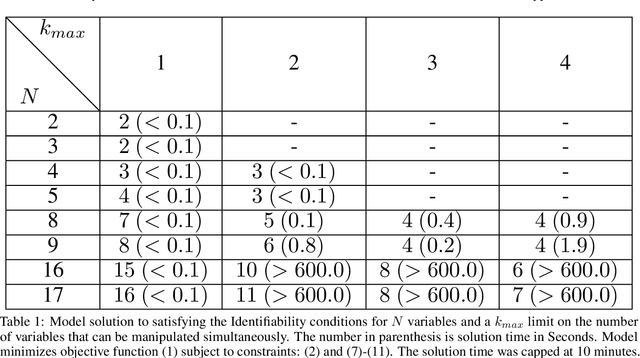

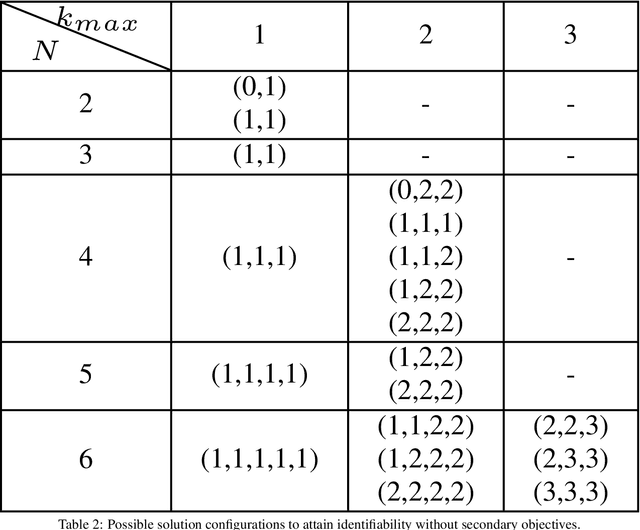

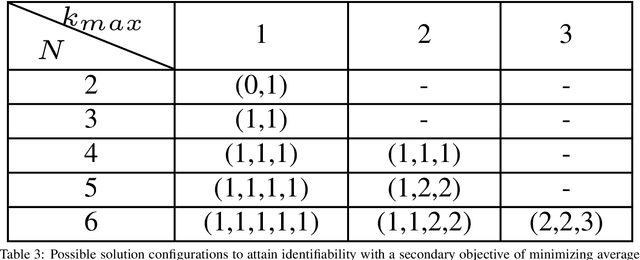

Abstract:Using both observational and experimental data, a causal discovery process can identify the causal relationships between variables. A unique adaptive intervention design paradigm is presented in this work, where causal directed acyclic graphs (DAGs) are effectively recovered under practical budgetary considerations. In order to choose treatments that optimize information gain under these considerations, an iterative integer programming (IP) approach is proposed, which drastically reduces the number of experiments required. Simulations over a broad range of graph sizes and edge densities are used to assess the effectiveness of the suggested approach. Results show that the proposed adaptive IP approach achieves full causal graph recovery with fewer intervention iterations and variable manipulations than random intervention baselines, and it is also flexible enough to accommodate a variety of practical constraints.

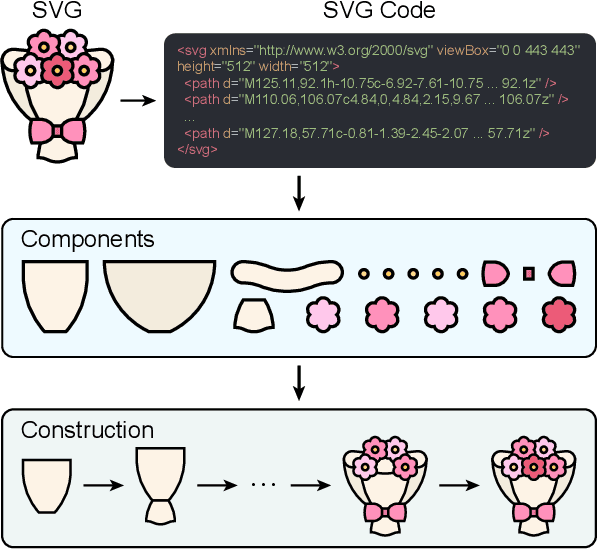

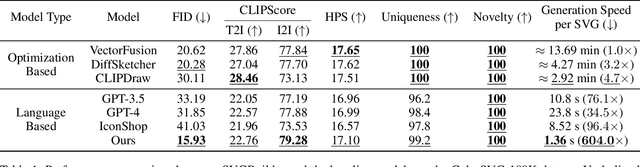

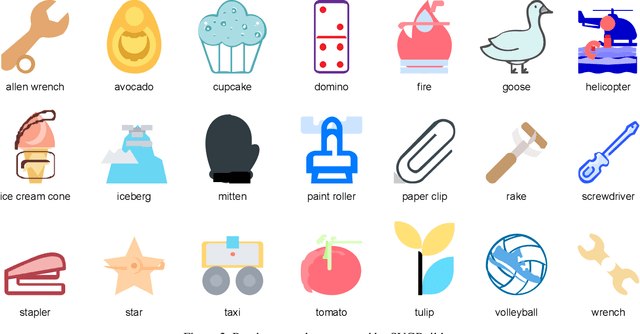

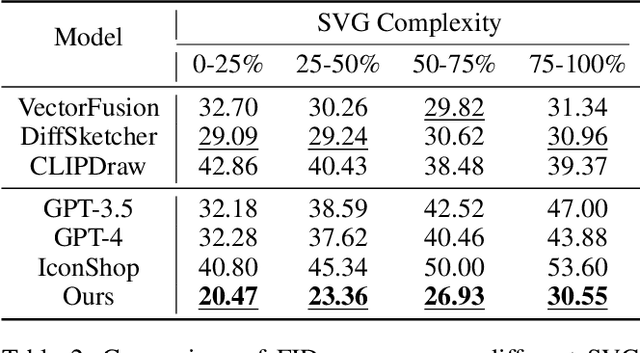

SVGBuilder: Component-Based Colored SVG Generation with Text-Guided Autoregressive Transformers

Dec 17, 2024

Abstract:Scalable Vector Graphics (SVG) are essential XML-based formats for versatile graphics, offering resolution independence and scalability. Unlike raster images, SVGs use geometric shapes and support interactivity, animation, and manipulation via CSS and JavaScript. Current SVG generation methods face challenges related to high computational costs and complexity. In contrast, human designers use component-based tools for efficient SVG creation. Inspired by this, SVGBuilder introduces a component-based, autoregressive model for generating high-quality colored SVGs from textual input. It significantly reduces computational overhead and improves efficiency compared to traditional methods. Our model generates SVGs up to 604 times faster than optimization-based approaches. To address the limitations of existing SVG datasets and support our research, we introduce ColorSVG-100K, the first large-scale dataset of colored SVGs, comprising 100,000 graphics. This dataset fills the gap in color information for SVG generation models and enhances diversity in model training. Evaluation against state-of-the-art models demonstrates SVGBuilder's superior performance in practical applications, highlighting its efficiency and quality in generating complex SVG graphics.

Leveraging LLM for Automated Ontology Extraction and Knowledge Graph Generation

Dec 03, 2024

Abstract:Extracting relevant and structured knowledge from large, complex technical documents within the Reliability and Maintainability (RAM) domain is labor-intensive and prone to errors. Our work addresses this challenge by presenting OntoKGen, a genuine pipeline for ontology extraction and Knowledge Graph (KG) generation. OntoKGen leverages Large Language Models (LLMs) through an interactive user interface guided by our adaptive iterative Chain of Thought (CoT) algorithm to ensure that the ontology extraction process and, thus, KG generation align with user-specific requirements. Although KG generation follows a clear, structured path based on the confirmed ontology, there is no universally correct ontology as it is inherently based on the user's preferences. OntoKGen recommends an ontology grounded in best practices, minimizing user effort and providing valuable insights that may have been overlooked, all while giving the user complete control over the final ontology. Having generated the KG based on the confirmed ontology, OntoKGen enables seamless integration into schemaless, non-relational databases like Neo4j. This integration allows for flexible storage and retrieval of knowledge from diverse, unstructured sources, facilitating advanced querying, analysis, and decision-making. Moreover, the generated KG serves as a robust foundation for future integration into Retrieval Augmented Generation (RAG) systems, offering enhanced capabilities for developing domain-specific intelligent applications.

Causal Discovery by Interventions via Integer Programming

Dec 02, 2024

Abstract:Causal discovery is essential across various scientific fields to uncover causal structures within data. Traditional methods relying on observational data have limitations due to confounding variables. This paper presents an optimization-based approach using integer programming (IP) to design minimal intervention sets that ensure causal structure identifiability. Our method provides exact and modular solutions that can be adjusted to different experimental settings and constraints. Comparative analysis across different settings demonstrates its effectiveness, applicability, and robustness.

Gaussian Derivative Change-point Detection for Early Warnings of Industrial System Failures

Oct 29, 2024

Abstract:An early warning of future system failure is essential for conducting predictive maintenance and enhancing system availability. This paper introduces a three-step framework for assessing system health to predict imminent system breakdowns. First, the Gaussian Derivative Change-Point Detection (GDCPD) algorithm is proposed for detecting changes in the high-dimensional feature space. GDCPD conducts multivariate Change-Point Detection (CPD) by implementing Gaussian derivative processes to identify change locations on critical system features, as these changes will eventually lead to system failure. To assess the significance of these changes, Weighted Mahalanobis Distance (WMD) is applied in both offline and online analyses. In the offline setting, WMD helps establish a threshold that determines significant system variations, while in the online setting, it facilitates real-time monitoring, issuing alarms for potential future system breakdowns. Utilizing the insights gained from the GDCPD and monitoring scheme, a Long Short-Term Memory (LSTM) network is then employed to estimate the Remaining Useful Life (RUL) of the system. The experimental study of a real-world system demonstrates the effectiveness of the proposed methodology in accurately forecasting system failures well before they occur. By integrating CPD with real-time monitoring and RUL prediction, this methodology significantly advances system health monitoring and early warning capabilities.
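The offline-threshold and online-alarm use of a weighted Mahalanobis distance described in this abstract can be illustrated with a minimal sketch. This is not the paper's formulation: it assumes a diagonal covariance (per-feature variances), hand-picked feature weights, and a hypothetical threshold rule (the largest distance seen in healthy offline data). The names `fit_baseline`, `weighted_mahalanobis`, and `is_alarm` are illustrative only.

```python
import math


def fit_baseline(samples):
    """Per-feature mean and population variance from healthy offline data."""
    n = len(samples)
    dims = len(samples[0])
    mean = [sum(s[j] for s in samples) / n for j in range(dims)]
    variances = [
        sum((s[j] - mean[j]) ** 2 for s in samples) / n for j in range(dims)
    ]
    return mean, variances


def weighted_mahalanobis(x, mean, variances, weights):
    """Weighted distance of x from mean.

    Assumption: the covariance is diagonal, so each squared deviation is
    scaled by its feature variance and then by a non-negative weight.
    """
    return math.sqrt(
        sum(
            w * (xi - mi) ** 2 / vi
            for xi, mi, vi, w in zip(x, mean, variances, weights)
        )
    )


# Offline phase: hypothetical healthy readings for two features.
offline = [[1.0, 10.0], [1.2, 9.0], [0.8, 11.0], [1.0, 10.0]]
weights = [0.5, 0.5]  # illustrative weights, not taken from the paper
mean, variances = fit_baseline(offline)

# Hypothetical threshold rule: the largest distance among healthy samples.
threshold = max(
    weighted_mahalanobis(s, mean, variances, weights) for s in offline
)


def is_alarm(x):
    """Online phase: flag a reading whose distance exceeds the threshold."""
    return weighted_mahalanobis(x, mean, variances, weights) > threshold
```

In practice the threshold would more likely come from a quantile or a control-chart rule than from the maximum, and a full covariance matrix would capture correlations between features that this diagonal sketch ignores.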

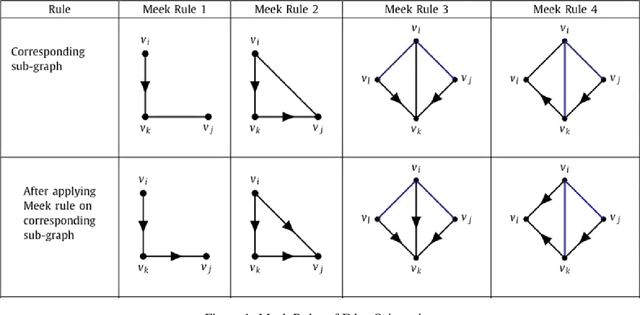

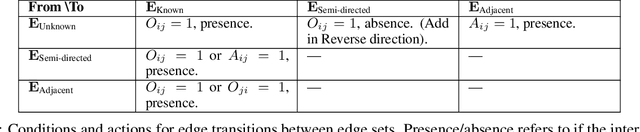

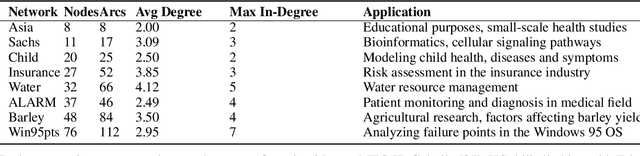

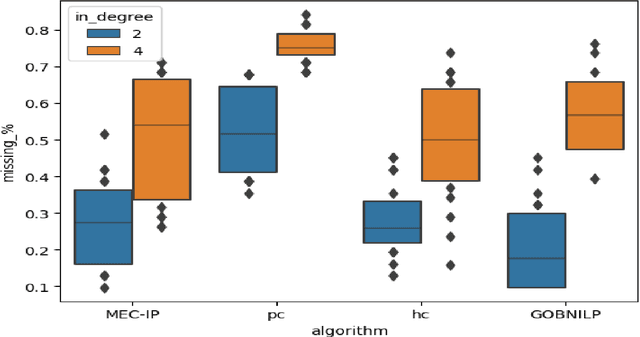

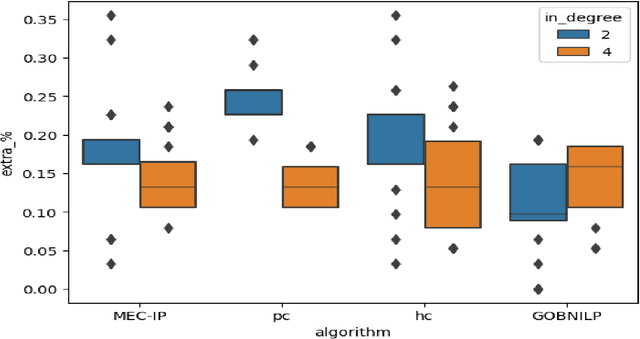

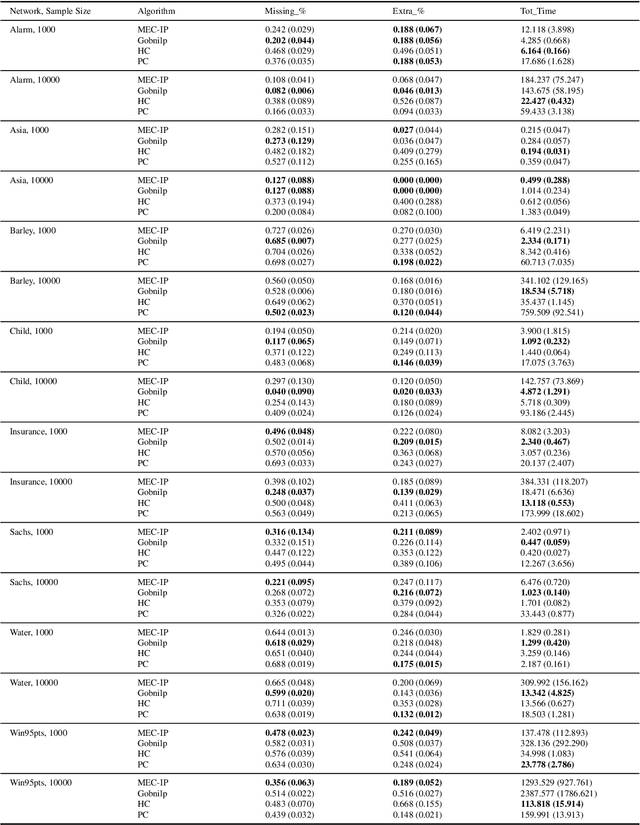

MEC-IP: Efficient Discovery of Markov Equivalent Classes via Integer Programming

Oct 22, 2024

Abstract:This paper presents a novel Integer Programming (IP) approach for discovering the Markov Equivalent Class (MEC) of Bayesian Networks (BNs) through observational data. The MEC-IP algorithm utilizes a unique clique-focusing strategy and Extended Maximal Spanning Graphs (EMSG) to streamline the search for MEC, thus overcoming the computational limitations inherent in other existing algorithms. Our numerical results show that the algorithm not only achieves a remarkable reduction in computational time but also improves causal discovery accuracy across diverse datasets. These findings underscore this new algorithm's potential as a powerful tool for researchers and practitioners in causal discovery and Bayesian network structure learning (BNSL), offering a significant leap forward toward the efficient and accurate analysis of complex data structures.

How Does Data Diversity Shape the Weight Landscape of Neural Networks?

Oct 18, 2024

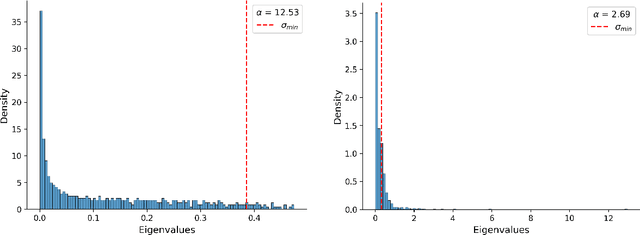

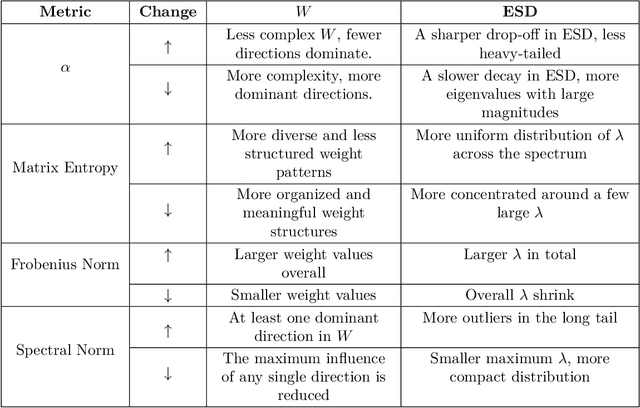

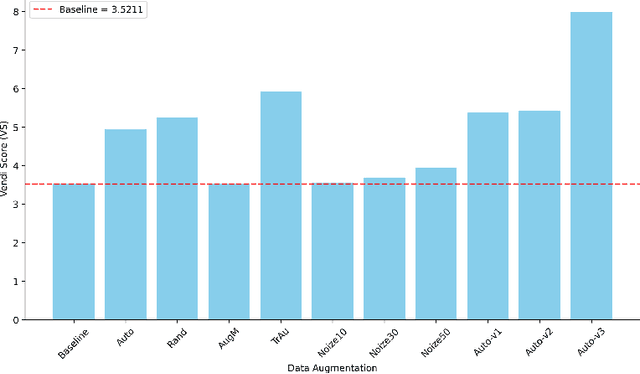

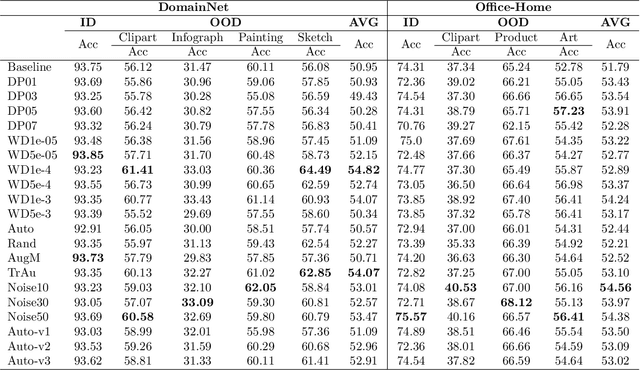

Abstract:To enhance the generalization of machine learning models to unseen data, techniques such as dropout, weight decay ($L_2$ regularization), and noise augmentation are commonly employed. While regularization methods (i.e., dropout and weight decay) are geared toward adjusting model parameters to prevent overfitting, data augmentation increases the diversity of the input training set, a method purported to improve accuracy and calibration error. In this paper, we investigate the impact of each of these techniques on the parameter space of neural networks, with the goal of understanding how they alter the weight landscape in transfer learning scenarios. To accomplish this, we employ Random Matrix Theory to analyze the eigenvalue distributions of pre-trained models that are fine-tuned with these techniques, at different levels of data diversity, for the same downstream tasks. We observe that diverse data influences the weight landscape in a similar fashion to dropout. Additionally, we compare commonly used data augmentation methods with synthetic data created by generative models. We conclude that synthetic data can bring more diversity into real input data, resulting in better performance on out-of-distribution test instances.

Fill In The Gaps: Model Calibration and Generalization with Synthetic Data

Oct 07, 2024

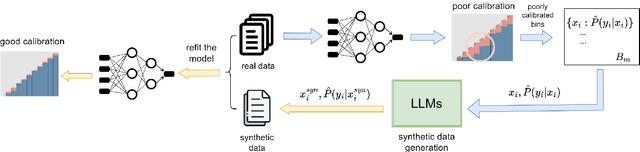

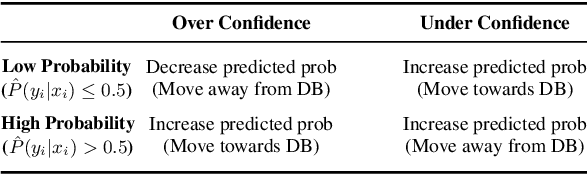

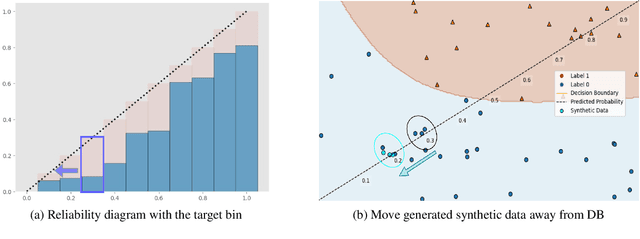

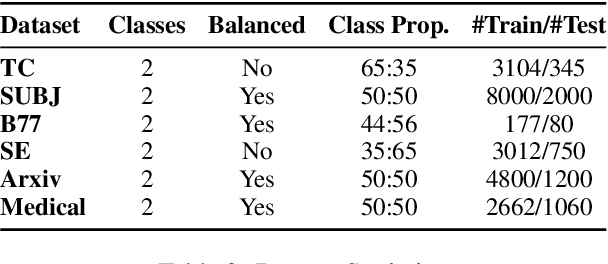

Abstract:As machine learning models continue to swiftly advance, calibrating their performance has become a major concern prior to practical and widespread implementation. Most existing calibration methods negatively impact model accuracy due to the lack of diversity in validation data, resulting in reduced generalizability. To address this, we propose a calibration method that incorporates synthetic data without compromising accuracy. We derive the expected calibration error (ECE) bound using the Probably Approximately Correct (PAC) learning framework. Large language models (LLMs), known for their ability to mimic real data and generate text with mixed class labels, are utilized as a synthetic data generation strategy to lower the ECE bound and improve model accuracy on real test data. Additionally, we propose data generation mechanisms for efficient calibration. Testing our method on four different natural language processing tasks, we observed, on average, up to a 34\% increase in accuracy and a 33\% decrease in ECE.
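For readers unfamiliar with the metric, the expected calibration error mentioned in this abstract is commonly estimated by binning predictions by confidence and averaging the gap between accuracy and mean confidence in each bin, weighted by bin size. The sketch below shows that standard binned estimator only; the equal-width binning, the default of 10 bins, and the function name are assumptions and are not taken from the paper.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Binned ECE: size-weighted mean of |accuracy - mean confidence| per bin.

    confidences: predicted probability of the chosen class, each in [0, 1].
    correct: 1 (or True) if the prediction was right, else 0 (or False).
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    n = len(confidences)
    if n == 0:
        raise ValueError("at least one prediction is required")

    # Equal-width bins; bin k holds confidences in [k/n_bins, (k+1)/n_bins),
    # and a confidence of exactly 1.0 is placed in the last bin.
    bins = [[] for _ in range(n_bins)]
    for conf, hit in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, hit))

    ece = 0.0
    for members in bins:
        if not members:
            continue  # empty bins contribute nothing
        mean_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, h in members if h) / len(members)
        ece += (len(members) / n) * abs(accuracy - mean_conf)
    return ece
```

A model that predicts with 0.9 confidence but is right only three times out of four has a gap of 0.15 in that bin; a perfectly calibrated model has an ECE of 0.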
