Robert Kraut

Multi-Level Feedback Generation with Large Language Models for Empowering Novice Peer Counselors

Mar 21, 2024

Abstract: Realistic practice and tailored feedback are key processes for training peer counselors with clinical skills. However, existing mechanisms of providing feedback largely rely on human supervision. Peer counselors often lack mechanisms to receive detailed feedback from experienced mentors, making it difficult for them to support the large number of people with mental health issues who use peer counseling. Our work aims to leverage large language models to provide contextualized and multi-level feedback to empower peer counselors, especially novices, at scale. To achieve this, we co-design with a group of senior psychotherapy supervisors to develop a multi-level feedback taxonomy, and then construct a publicly available dataset with comprehensive feedback annotations of 400 emotional support conversations. We further design a self-improvement method on top of large language models to enhance the automatic generation of feedback. Via qualitative and quantitative evaluation with domain experts, we demonstrate that our method minimizes the risk of potentially harmful and low-quality feedback generation, which is desirable in such high-stakes scenarios.

Agent-based Simulation for Online Mental Health Matching

Mar 20, 2023

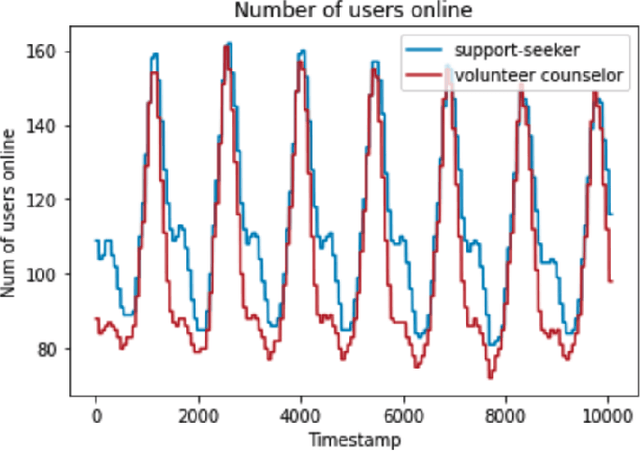

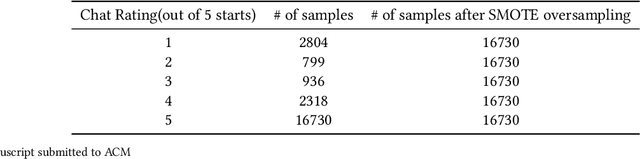

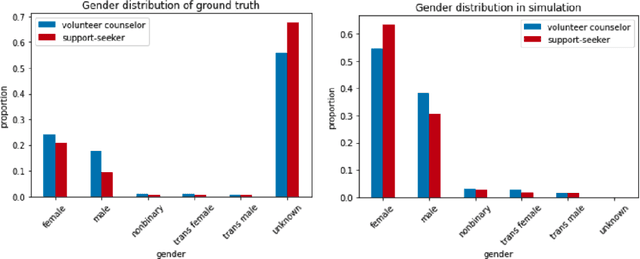

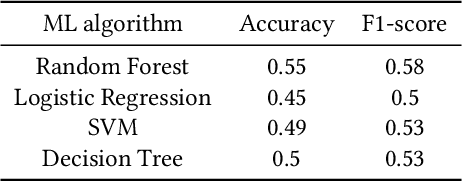

Abstract: Online mental health communities (OMHCs) are an effective and accessible channel to give and receive social support for individuals with mental and emotional issues. However, a key challenge on these platforms is finding suitable partners to interact with, given that mechanisms to match users are currently underdeveloped. In this paper, we collaborate with one of the world's largest OMHCs to develop an agent-based simulation framework and explore the trade-offs in different matching algorithms. The simulation framework allows us to compare current mechanisms and new algorithmic matching policies on the platform, and observe their differing effects on a variety of outcome metrics. Our findings include that usage of the deferred-acceptance algorithm can significantly improve the experiences of support-seekers in one-on-one chats while maintaining low waiting time. We note key design considerations that agent-based modeling reveals in the OMHC context, including the potential benefits of algorithmic matching on marginalized communities.
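For readers unfamiliar with the deferred-acceptance algorithm named in the abstract, the following is a minimal sketch of the textbook one-to-one version (Gale-Shapley), with support-seekers proposing to support-providers. It is not the paper's implementation: the function name `deferred_acceptance`, the preference-list inputs, and the one-to-one setting are all assumptions made for illustration, and the abstract does not say how preferences are derived on the platform.

```python
from collections import deque


def deferred_acceptance(seeker_prefs, provider_prefs):
    """Return a stable one-to-one matching as {seeker: provider}.

    Both arguments are hypothetical inputs: each maps a participant to a
    list of acceptable partners, most preferred first.
    """
    # rank[p][s] = position of seeker s in provider p's list (lower is better)
    rank = {
        p: {s: i for i, s in enumerate(prefs)}
        for p, prefs in provider_prefs.items()
    }
    next_choice = {s: 0 for s in seeker_prefs}  # next provider index to try
    held = {}                                   # provider -> tentatively held seeker
    free = deque(seeker_prefs)                  # seekers without a tentative match

    while free:
        s = free.popleft()
        prefs = seeker_prefs[s]
        if next_choice[s] >= len(prefs):
            continue  # s has exhausted the list and stays unmatched
        p = prefs[next_choice[s]]
        next_choice[s] += 1

        if s not in rank.get(p, {}):
            free.append(s)            # p finds s unacceptable; s tries the next option
        elif p not in held:
            held[p] = s               # p was free and tentatively accepts s
        elif rank[p][s] < rank[p][held[p]]:
            free.append(held[p])      # p trades up and releases the previous seeker
            held[p] = s
        else:
            free.append(s)            # p keeps the current seeker; s is rejected

    return {s: p for p, s in held.items()}


seekers = {"a": ["x", "y", "z"], "b": ["x", "z", "y"], "c": ["y", "x", "z"]}
providers = {"x": ["b", "a", "c"], "y": ["a", "c", "b"], "z": ["a", "b", "c"]}
matching = deferred_acceptance(seekers, providers)
```

Acceptances stay tentative until no seeker has proposals left to make, which is what "deferred" refers to. The resulting matching is stable: no seeker and provider both prefer each other to the partners they were assigned.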

Can You be More Social? Injecting Politeness and Positivity into Task-Oriented Conversational Agents

Dec 29, 2020

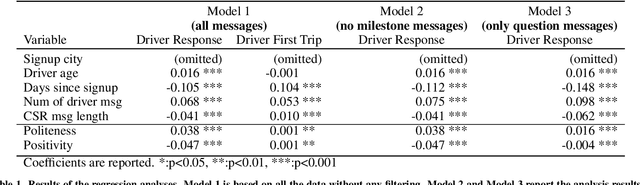

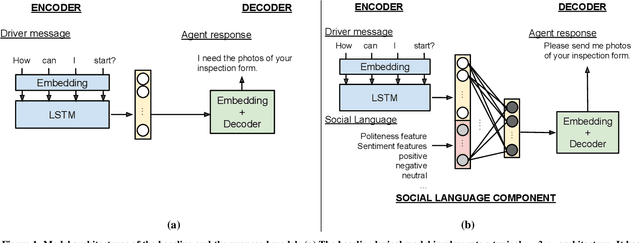

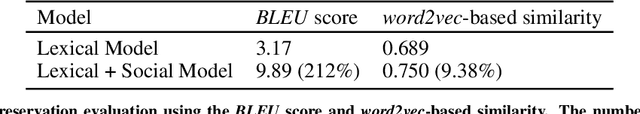

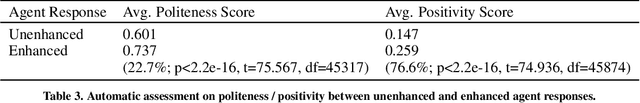

Abstract: Goal-oriented conversational agents are becoming prevalent in our daily lives. For these systems to engage users and achieve their goals, they need to exhibit appropriate social behavior as well as provide informative replies that guide users through tasks. The first component of the research in this paper applies statistical modeling techniques to understand conversations between users and human agents for customer service. Analyses show that social language used by human agents is associated with greater user responsiveness and task completion. The second component of the research is the construction of a conversational agent model capable of injecting social language into an agent's responses while still preserving content. The model uses a sequence-to-sequence deep learning architecture, extended with a social language understanding element. Evaluation in terms of content preservation and social language level, using both human judgment and automatic linguistic measures, shows that the model can generate responses that enable agents to address users' issues in a more socially appropriate way.
