Robert Frederking

Transition-Based Dependency Parsing using Perceptron Learner

Jan 28, 2020

Abstract: Syntactic parsing using dependency structures has become a standard technique in natural language processing, with many different parsing models, in particular data-driven models that can be trained on syntactically annotated corpora. In this paper, we tackle transition-based dependency parsing using a perceptron learner. Our proposed model, which adds more relevant features to the perceptron learner, outperforms a baseline arc-standard parser and achieves a higher unlabeled attachment score (UAS) than the MALT and LSTM parsers. We also suggest possible ways to handle the parsing of non-projective trees.
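
For readers unfamiliar with the arc-standard system mentioned in the abstract, the following is a minimal sketch of its three transitions together with a UAS computation. It assumes the textbook arc-standard definition with an artificial ROOT at index 0; the function names `arc_standard` and `uas` are hypothetical, and the perceptron scoring and feature set are omitted because the abstract does not describe them.

```python
def arc_standard(n_words, actions):
    """Apply arc-standard transitions to words 1..n_words (0 is ROOT).

    Returns a dict mapping each dependent index to its head index.
    """
    stack = [0]
    buffer = list(range(1, n_words + 1))
    heads = {}
    for action in actions:
        if action == "SHIFT":
            # Move the next buffer word onto the stack.
            stack.append(buffer.pop(0))
        elif action == "LEFT":
            # Second-from-top becomes a dependent of the top item.
            if len(stack) < 3:
                raise ValueError("LEFT needs two non-ROOT stack items")
            dep = stack.pop(-2)
            heads[dep] = stack[-1]
        elif action == "RIGHT":
            # Top becomes a dependent of the item below it.
            if len(stack) < 2:
                raise ValueError("RIGHT needs two stack items")
            dep = stack.pop()
            heads[dep] = stack[-1]
        else:
            raise ValueError(f"unknown action: {action}")
    return heads


def uas(predicted, gold):
    """Unlabeled attachment score: fraction of words with the correct head."""
    correct = sum(1 for word, head in gold.items() if predicted.get(word) == head)
    return correct / len(gold)


# Illustrative sentence "She eats fish": She <- eats -> fish, eats <- ROOT.
actions = ["SHIFT", "SHIFT", "LEFT", "SHIFT", "RIGHT", "RIGHT"]
heads = arc_standard(3, actions)
gold = {1: 2, 2: 0, 3: 2}
score = uas(heads, gold)
```

In a trained parser, the action sequence would be chosen step by step by the learned classifier rather than supplied by hand as it is here.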

RWR-GAE: Random Walk Regularization for Graph Auto Encoders

Aug 12, 2019

Abstract: Node embeddings have become a ubiquitous technique for representing graph data in a low-dimensional space. Graph autoencoders, one of the most widely adopted deep models, learn graph embeddings in an unsupervised way by minimizing the reconstruction error for the graph data. However, their reconstruction loss ignores the distribution of the latent representation, which leads to inferior embeddings. To mitigate this problem, we propose a random-walk-based method to regularize the representations learnt by the encoder. We show that the proposed enhancement beats the existing state-of-the-art models by a large margin (up to 7.5%) on the node clustering task, and achieves state-of-the-art accuracy on the link prediction task for three standard datasets: Cora, Citeseer and PubMed. Code available at https://github.com/MysteryVaibhav/DW-GAE.

Supervised Topical Key Phrase Extraction of News Stories using Crowdsourcing, Light Filtering and Co-reference Normalization

Jun 20, 2013

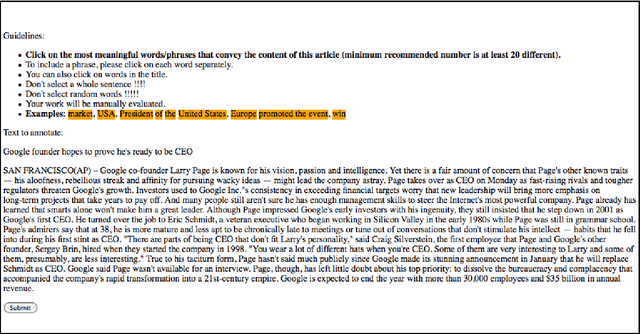

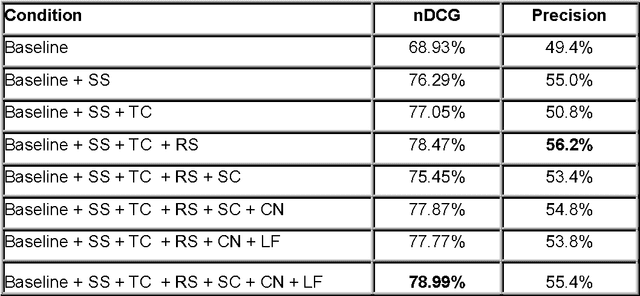

Abstract: Fast and effective automated indexing is critical for search and personalized services. Key phrases, which consist of one or more words and represent the main concepts of a document, are often used for indexing. In this paper, we investigate the use of additional semantic features and pre-processing steps to improve automatic key phrase extraction. These features include signal words and Freebase categories. Some of these features lead to significant improvements in the accuracy of the results. We also experimented with two forms of document pre-processing that we call light filtering and co-reference normalization. Light filtering removes sentences that are judged peripheral to the document's main content. Co-reference normalization unifies several written forms of the same named entity into a unique form. We also needed a "Gold Standard" - a set of labeled documents for training and evaluation. While the subjective nature of key phrase selection precludes a true "Gold Standard", we used Amazon's Mechanical Turk service to obtain a useful approximation. Our data indicate that the biggest improvements in performance were due to shallow semantic features, news categories, and rhetorical signals (nDCG 78.47% vs. 68.93%). The inclusion of deeper semantic features such as Freebase sub-categories was not beneficial by itself, but in combination with pre-processing it did yield slight improvements in the nDCG scores.
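
The nDCG metric reported above can be illustrated with a short sketch. It assumes the common formulation with a log2 rank discount and graded relevance gains; the abstract does not state which nDCG variant or relevance scale the authors used, and the function names and the example relevance values below are hypothetical.

```python
import math


def dcg(relevances):
    """Discounted cumulative gain for relevance scores listed in rank order."""
    # Rank 1 is discounted by log2(2) = 1, rank 2 by log2(3), and so on.
    return sum(rel / math.log2(rank + 2) for rank, rel in enumerate(relevances))


def ndcg(relevances):
    """DCG normalized by the DCG of the ideal (descending) ordering."""
    ideal = dcg(sorted(relevances, reverse=True))
    return dcg(relevances) / ideal if ideal > 0 else 0.0


# Hypothetical relevance grades for a ranked list of extracted key phrases,
# e.g. derived from how many annotators selected each phrase.
ranked_relevances = [1, 3]
value = ndcg(ranked_relevances)
```

A ranking that already lists phrases in descending relevance scores 1.0; placing a less relevant phrase above a more relevant one, as in the example, lowers the score.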