Robert C. Leishman

Virtual Testbed for Monocular Visual Navigation of Small Unmanned Aircraft Systems

Jul 01, 2020

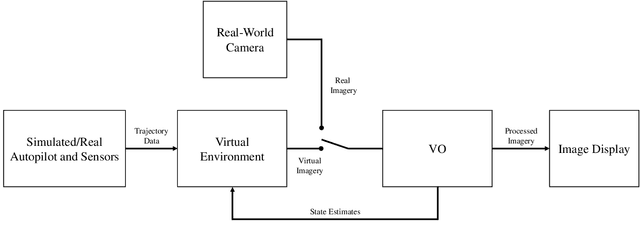

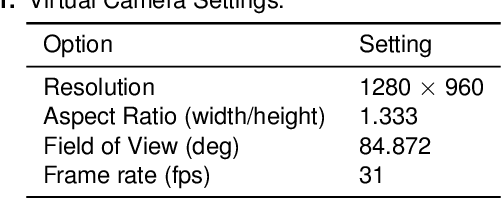

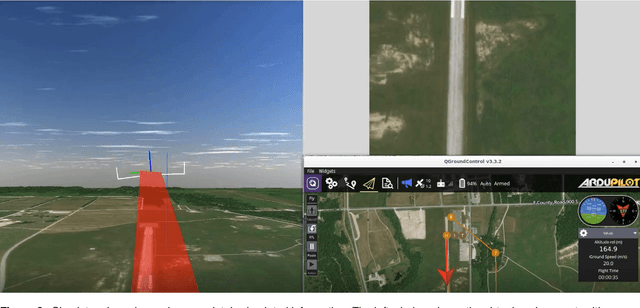

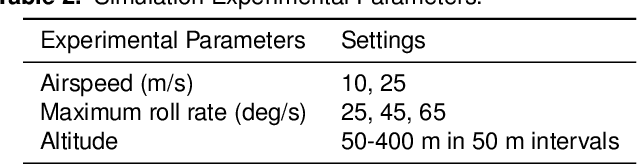

Abstract: Monocular visual navigation methods have seen significant advances in the last decade, recently producing several real-time solutions for autonomously navigating small unmanned aircraft systems without relying on GPS. This is critical for military operations, which may involve environments where GPS signals are degraded or denied. However, testing and comparing visual navigation algorithms remains a challenge, since visual data is expensive to gather. Conducting flight tests in a virtual environment is an attractive alternative before committing to outdoor testing. This work presents a virtual testbed for conducting simulated flight tests over real-world terrain and analyzing the real-time performance of visual navigation algorithms at 31 Hz. The tool was created to identify a visual odometry algorithm suitable for further GPS-denied navigation research on fixed-wing aircraft, even though all of the evaluated algorithms were designed for other modalities. The testbed was used to evaluate three current state-of-the-art, open-source monocular visual odometry algorithms on a fixed-wing platform: Direct Sparse Odometry, Semi-Direct Visual Odometry, and ORB-SLAM2 (with loop closures disabled).

Robust Incremental State Estimation through Covariance Adaptation

Oct 11, 2019

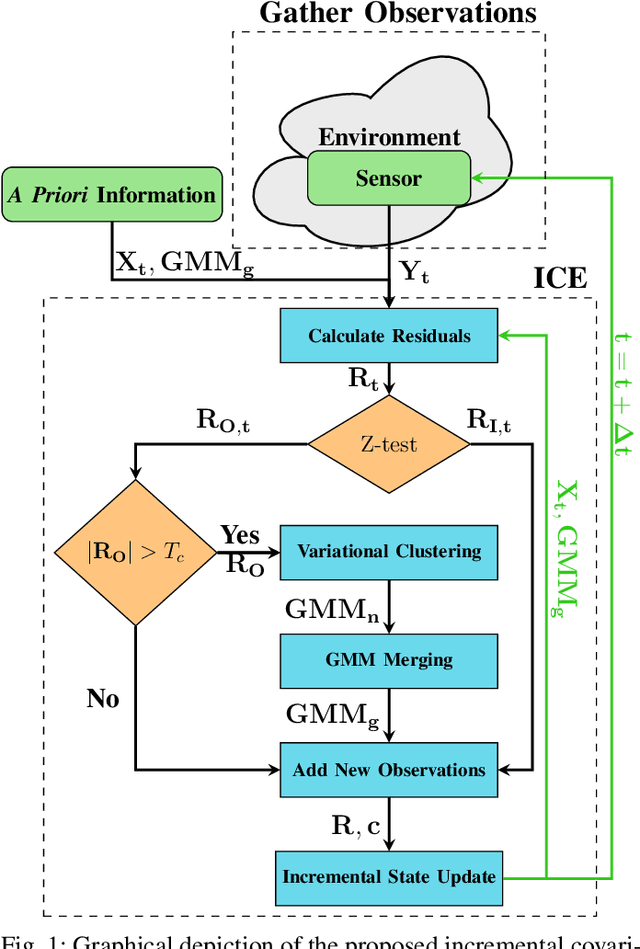

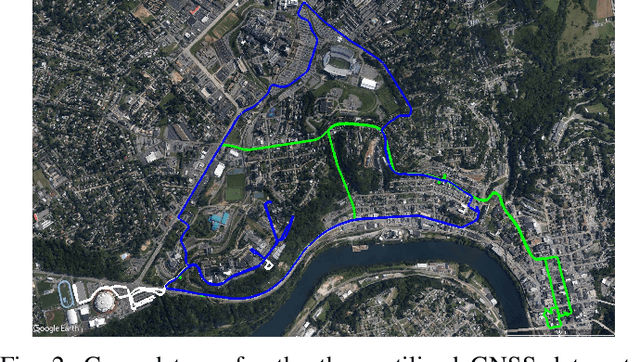

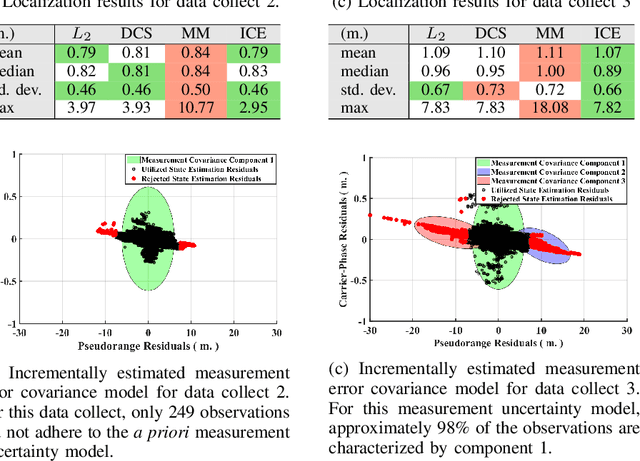

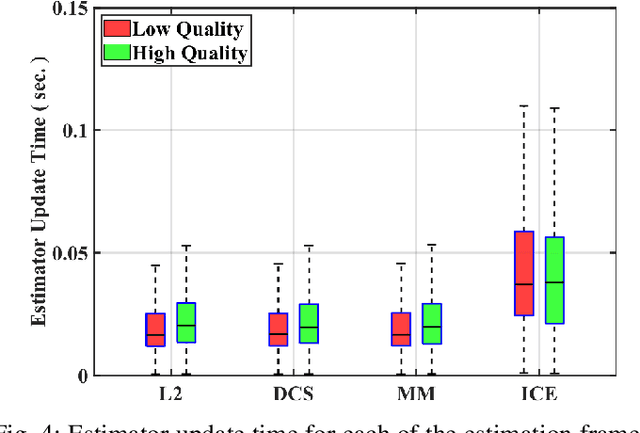

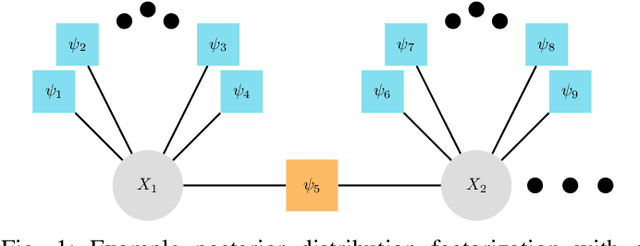

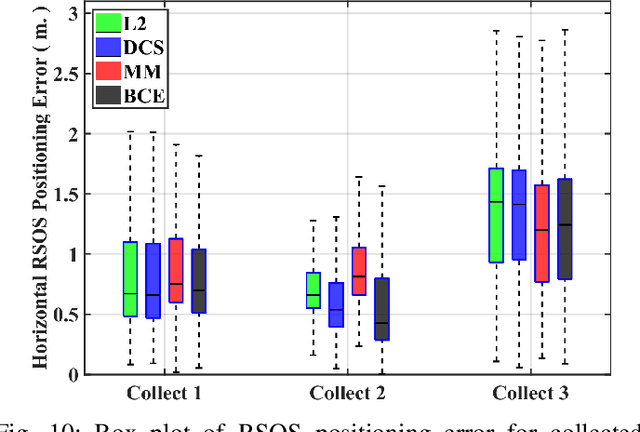

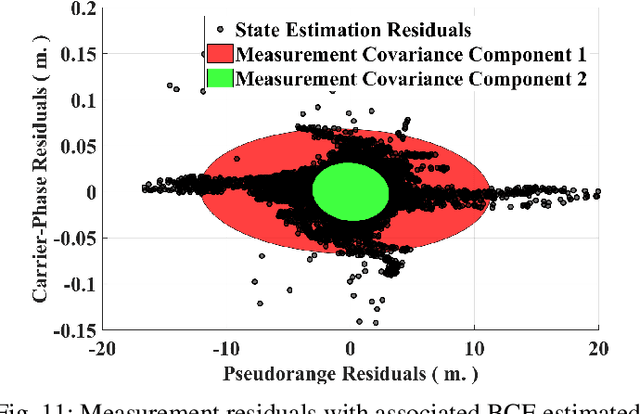

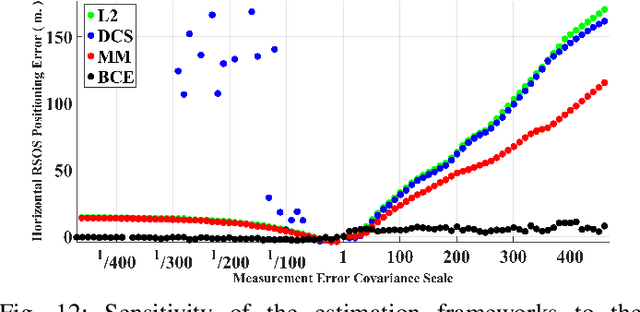

Abstract: Recent advances in robotics and automation have spurred significant interest in robust state estimation, and several methodologies have been proposed to enable it. One technique that has shown promising performance iteratively estimates a Gaussian mixture model (GMM) from the state estimation residuals to characterize the measurement uncertainty model. Through this iterative process, the measurement uncertainty model is characterized more accurately, which enables robust state estimation through the appropriate de-weighting of erroneous observations. However, this approach has traditionally required a batch estimation framework to estimate the measurement uncertainty model, which is poorly suited to robotic applications. In this paper, we propose an efficient, incremental extension to the measurement uncertainty model estimation paradigm. The incremental covariance estimation (ICE) approach detailed in this paper is evaluated on several collected data sets, where it is shown to provide a significant increase in localization accuracy compared to other state-of-the-art robust, incremental estimation algorithms.
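
The key difference from a batch framework is that residual-cluster statistics are updated as each new residual arrives, without revisiting earlier data. The abstract does not give the ICE update equations, so the following is only a minimal sketch of that general idea, not the paper's algorithm. It assumes 1-D residuals, running per-cluster statistics kept with Welford's algorithm, and a simple gating rule that opens a new cluster when a residual lies more than `gate` standard deviations from every existing one. The names `RunningComponent`, `IncrementalResidualModel`, `prior_var`, and `gate` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class RunningComponent:
    """Running sufficient statistics of one 1-D residual cluster (Welford's update)."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # sum of squared deviations from the running mean

    def add(self, r: float) -> None:
        # O(1) update: previously seen residuals are never revisited.
        self.count += 1
        delta = r - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (r - self.mean)

    def variance(self, prior_var: float, floor: float = 1e-12) -> float:
        # Hypothetical choice: use a prior variance until the cluster holds two
        # samples; the floor guards against zero variance from identical residuals.
        if self.count < 2:
            return prior_var
        return max(self.m2 / (self.count - 1), floor)


class IncrementalResidualModel:
    """Hypothetical online mixture of 1-D residual clusters."""

    def __init__(self, prior_var: float = 1.0, gate: float = 3.0) -> None:
        self.components: List[RunningComponent] = []
        self.prior_var = prior_var
        self.gate = gate  # max normalized distance for joining an existing cluster

    def update(self, r: float) -> float:
        """Assign residual r to the nearest cluster (in standard deviations) or open
        a new one, update that cluster, and return the variance to use when
        weighting this observation."""
        best: Optional[RunningComponent] = None
        best_dist = math.inf
        for comp in self.components:
            dist = abs(r - comp.mean) / math.sqrt(comp.variance(self.prior_var))
            if dist < best_dist:
                best, best_dist = comp, dist
        if best is None or best_dist > self.gate:
            best = RunningComponent()
            self.components.append(best)
        best.add(r)
        return best.variance(self.prior_var)


if __name__ == "__main__":
    model = IncrementalResidualModel()
    for r in [0.1, -0.2, 0.05, 50.0, 0.15, -0.1, 0.0]:
        print(r, model.update(r))
    print(len(model.components))  # the gross outlier ends up in its own cluster
```

For this stream, the inlier cluster's running mean and variance match the batch sample statistics of the six small residuals, while the outlier keeps its own, separately tracked cluster.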

Enabling Robust State Estimation through Measurement Error Covariance Adaptation

Jun 10, 2019

Abstract: Accurate platform localization is an integral component of most robotic systems. As these systems become more ubiquitous, it is necessary to develop robust state estimation algorithms that can withstand novel and non-cooperative environments. In such environments, little is known a priori about the measurement error uncertainty; thus, the uncertainty models of the localization algorithm must be adaptive. In this paper, we propose one such technique, which enables robust state estimation through the iterative adaptation of the measurement uncertainty model. This adaptation is achieved through non-parametric clustering of the residuals, which allows the measurement uncertainty to be characterized by a Gaussian mixture model. The resulting Gaussian mixture model can be used within any non-linear least squares optimization algorithm by approximately characterizing each observation with the sufficient statistics of its assigned cluster (i.e., each observation's uncertainty model is updated based on the assignment provided by the non-parametric clustering algorithm). The proposed algorithm is verified on several collected GNSS data sets, where it is shown to exhibit some advantages over other robust estimation techniques when confronted with degraded data quality.
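
The loop described above (cluster the residuals, give each observation its cluster's uncertainty, re-solve the least squares problem, repeat) can be illustrated on a toy problem. This is a minimal sketch under several stated assumptions, not the paper's implementation. The state is a single scalar `x` observed directly, so each weighted least squares solve reduces to an inverse-variance weighted mean. A simple gap-based 1-D clustering (`cluster_residuals`, hypothetical) stands in for the non-parametric clustering. Each cluster is summarized by its mean squared residual, a zero-mean simplification of the full sufficient statistics.

```python
from typing import Dict, List


def cluster_residuals(residuals: List[float], gap: float) -> List[int]:
    """Hypothetical stand-in for non-parametric clustering: sort the residuals and
    open a new cluster wherever neighbours differ by more than `gap`, so the number
    of clusters is not fixed in advance."""
    order = sorted(range(len(residuals)), key=lambda i: residuals[i])
    labels = [0] * len(residuals)
    cluster = 0
    for prev, cur in zip(order, order[1:]):
        if residuals[cur] - residuals[prev] > gap:
            cluster += 1
        labels[cur] = cluster
    return labels


def cluster_variances(residuals: List[float], labels: List[int],
                      floor: float = 1e-9) -> Dict[int, float]:
    """Zero-mean simplification: a cluster's variance is the mean squared residual
    of its members, floored to avoid division by zero."""
    sums: Dict[int, float] = {}
    counts: Dict[int, int] = {}
    for r, k in zip(residuals, labels):
        sums[k] = sums.get(k, 0.0) + r * r
        counts[k] = counts.get(k, 0) + 1
    return {k: max(sums[k] / counts[k], floor) for k in sums}


def robust_scalar_estimate(z: List[float], gap: float = 1.0,
                           iterations: int = 10) -> float:
    """Iteratively estimate x from measurements z_i = x + e_i. Each pass clusters the
    residuals, assigns every observation its cluster's variance, and solves the
    weighted least squares problem (an inverse-variance weighted mean here)."""
    x = sum(z) / len(z)  # ordinary least squares starting point
    for _ in range(iterations):
        residuals = [zi - x for zi in z]
        labels = cluster_residuals(residuals, gap)
        variances = cluster_variances(residuals, labels)
        weights = [1.0 / variances[k] for k in labels]
        x = sum(w * zi for w, zi in zip(weights, z)) / sum(weights)
    return x


if __name__ == "__main__":
    measurements = [10.0, 10.1, 9.9, 10.05, 9.95, 30.0]
    print(sum(measurements) / len(measurements))  # plain mean, pulled to about 13.33
    print(robust_scalar_estimate(measurements))   # close to 10.0
```

The outlier lands in its own cluster with a large variance, so it is de-weighted rather than discarded, which is the behavior the abstract attributes to the adapted uncertainty model.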

URSA: A Neural Network for Unordered Point Clouds Using Constellations

Oct 23, 2018

Abstract: This paper describes a neural network layer, named Ursa, that uses a constellation of points to learn classification information from point cloud data. Unlike other machine learning classification problems, where the task is to classify an individual high-dimensional observation, the goal in a point-cloud classification problem is to classify a set of d-dimensional observations. Because a point cloud is a set, its points have no ordering. Thus, the challenge of classifying point-cloud inputs lies in building a classifier that is agnostic to the ordering of the observations yet preserves the d-dimensional information of each point in the set. This research presents Ursa, a new layer type for an artificial neural network that achieves these two properties. Like other recent methods for this task, this architecture works directly on d-dimensional points rather than first converting the points to a d-dimensional volume. The Ursa layer is followed by a series of dense layers to classify 2D and 3D objects from point clouds. Experiments on ModelNet40 and MNIST data show classification results comparable with current methods, while reducing the number of training parameters by over 50 percent.
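
The two properties named above, order invariance and a fixed-size output that dense layers can consume, can be shown with a constellation of reference points. The abstract does not give the Ursa layer's equations, so this minimal sketch swaps in a hypothetical aggregation, a sum of Gaussian kernels of the distance from each constellation point to every cloud point, purely to illustrate those properties. `make_constellation`, `constellation_features`, and `bandwidth` are illustrative names, and the constellation is randomly initialized here rather than learned.

```python
import math
import random
from typing import List, Sequence

Point = Sequence[float]


def make_constellation(num_points: int, dim: int, seed: int = 0) -> List[List[float]]:
    """Hypothetical initialization: constellation points drawn uniformly in
    [-1, 1]^dim. In a trained network these coordinates would be learned."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(num_points)]


def constellation_features(cloud: List[Point], constellation: List[Point],
                           bandwidth: float = 0.5) -> List[float]:
    """Map a point cloud of any size and order to one feature per constellation
    point: the sum of Gaussian kernels of the squared distance from that
    constellation point to every cloud point. math.fsum is correctly rounded,
    so the result is identical for any permutation of the cloud."""
    features = []
    for c in constellation:
        kernels = []
        for p in cloud:
            sq_dist = sum((pi - ci) ** 2 for pi, ci in zip(p, c))
            kernels.append(math.exp(-sq_dist / (2.0 * bandwidth ** 2)))
        features.append(math.fsum(kernels))
    return features


if __name__ == "__main__":
    constellation = make_constellation(num_points=8, dim=2)
    cloud = [[0.1, 0.2], [-0.5, 0.4], [0.9, -0.3]]
    shuffled = [cloud[2], cloud[0], cloud[1]]
    print(constellation_features(cloud, constellation)
          == constellation_features(shuffled, constellation))  # True
```

The output length equals the number of constellation points regardless of how many points the cloud contains, which is what allows a fixed stack of dense layers to follow the layer.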
