Rishabh Khandelwal

Automatically Detecting Online Deceptive Patterns in Real-time

Nov 11, 2024

Abstract: Deceptive patterns (DPs) in digital interfaces manipulate users into making unintended decisions, exploiting cognitive biases and psychological vulnerabilities. These patterns have become ubiquitous across digital platforms. While efforts to mitigate DPs have emerged from legal and technical perspectives, a significant gap remains in usable solutions that empower users to identify DPs and make informed decisions about them in real time. In this work, we introduce AutoBot, an automated deceptive-pattern detector that analyzes websites' visual appearances using machine learning techniques to identify DPs and notify users of them in real time. AutoBot employs a two-stage pipeline that processes website screenshots, identifying interactable elements and extracting textual features without relying on HTML structure. By leveraging a custom language model, AutoBot understands the context surrounding these elements to determine the presence of deceptive patterns. We implement AutoBot as a lightweight Chrome browser extension that performs all analyses locally, minimizing latency and preserving user privacy. Through extensive evaluation, we demonstrate AutoBot's effectiveness in enhancing users' ability to navigate digital environments safely while providing a valuable tool for regulators to assess and enforce compliance with DP regulations.

PolicyLR: A Logic Representation For Privacy Policies

Aug 27, 2024

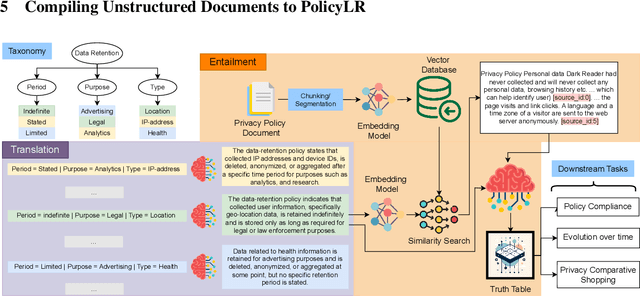

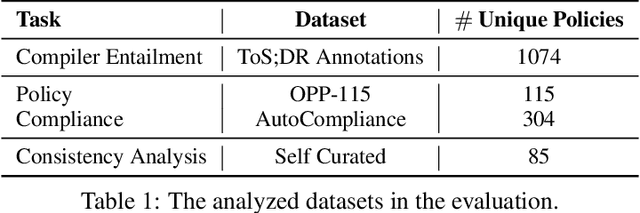

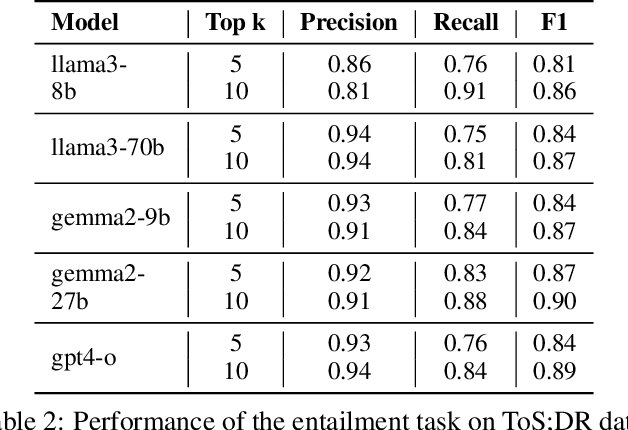

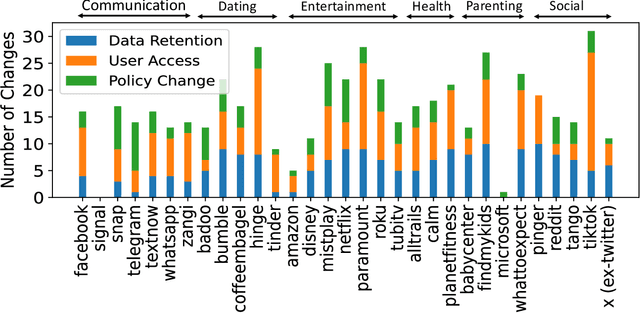

Abstract: Privacy policies are crucial in the online ecosystem, defining how services handle user data and adhere to regulations such as GDPR and CCPA. However, their complexity and frequent updates often make them difficult for stakeholders to understand and analyze. Current automated analysis methods, which utilize natural language processing, have limitations. They typically focus on individual tasks and fail to capture the full context of the policies. We propose PolicyLR, a new paradigm that offers a comprehensive machine-readable representation of privacy policies, serving as an all-in-one solution for multiple downstream tasks. PolicyLR converts privacy policies into a machine-readable format using valuations of atomic formulae, allowing for formal definitions of tasks like compliance and consistency. We have developed a compiler that transforms unstructured policy text into this format using off-the-shelf Large Language Models (LLMs). This compiler breaks down the transformation task into a two-stage translation and entailment procedure. This procedure considers the full context of the privacy policy to infer a complex formula, where each formula consists of simpler atomic formulae. The advantage of this model is that PolicyLR is interpretable by design and grounded in segments of the privacy policy. We evaluated the compiler using ToS;DR, a community-annotated privacy policy entailment dataset. Utilizing open-source LLMs, our compiler achieves precision and recall values of 0.91 and 0.88, respectively. Finally, we demonstrate the utility of PolicyLR in three privacy tasks: Policy Compliance, Inconsistency Detection, and Privacy Comparison Shopping.
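
To make the idea of "valuations of atomic formulae" concrete, here is a minimal sketch in Python. It is not the PolicyLR format or compiler. It assumes a valuation is a plain mapping from atom names to truth values, a compliance rule is a Boolean formula over those atoms, and an inconsistency is a pair of valuations that disagree on a shared atom. The atom names, the rule, and the helpers `is_compliant` and `inconsistencies` are all hypothetical and chosen only for illustration.

```python
from typing import Callable, Dict, List

# Hypothetical valuation: atomic formula name -> truth value inferred from policy text.
Valuation = Dict[str, bool]


def is_compliant(valuation: Valuation, rule: Callable[[Valuation], bool]) -> bool:
    """Evaluate a compliance rule (a Boolean formula over atoms) against a valuation."""
    return rule(valuation)


def inconsistencies(a: Valuation, b: Valuation) -> List[str]:
    """Return the atoms that both valuations define but assign opposite truth values."""
    return sorted(atom for atom in a.keys() & b.keys() if a[atom] != b[atom])


# Illustrative atoms and values; these are assumptions, not taken from the paper.
policy = {
    "collects_email": True,
    "shares_with_third_parties": True,
    "obtains_consent_before_sharing": False,
}


def consent_rule(v: Valuation) -> bool:
    """Illustrative rule: sharing implies prior consent (p -> q encoded as (not p) or q)."""
    return (not v["shares_with_third_parties"]) or v["obtains_consent_before_sharing"]


# A second valuation, e.g. inferred from a different segment of the same policy.
other_segment = {"shares_with_third_parties": False, "collects_email": True}

print(is_compliant(policy, consent_rule))
print(inconsistencies(policy, other_segment))
```

Under these assumptions, compliance checking reduces to evaluating a formula, and inconsistency detection reduces to comparing truth assignments atom by atom, which is one way to read the abstract's claim that such tasks admit formal definitions.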
