Risa Higashita

Pathology Context Recalibration Network for Ocular Disease Recognition

Dec 30, 2025

Abstract:Pathology context and expert experience play significant roles in clinical ocular disease diagnosis. Although deep neural networks (DNNs) achieve good ocular disease recognition results, they often neglect the clinical pathology context and expert experience priors that could improve recognition performance and decision-making interpretability. To this end, we first develop a novel Pathology Recalibration Module (PRM) to leverage the potential of the pathology context prior by combining a well-designed pixel-wise context compression operator with a pathology distribution concentration operator; we then apply a novel Expert Prior Guidance Adapter (EPGA) to further highlight significant pixel-wise representation regions by fully mining the expert experience prior. By incorporating PRM and EPGA into a modern DNN, we construct PCRNet for automated ocular disease recognition. Additionally, we introduce an Integrated Loss (IL) to boost the ocular disease recognition performance of PCRNet by considering the effects of sample-wise loss distributions and training label frequencies. Extensive experiments on three ocular disease datasets demonstrate the superiority of PCRNet with IL over state-of-the-art attention-based networks and advanced loss methods. Further visualization analysis explains the inherent behavior of PRM and EPGA that affects the decision-making process of DNNs.

* The article has been accepted for publication at Machine Intelligence Research (MIR)

VOIC: Visible-Occluded Decoupling for Monocular 3D Semantic Scene Completion

Dec 22, 2025

Abstract:Camera-based 3D Semantic Scene Completion (SSC) is a critical task for autonomous driving and robotic scene understanding. It aims to infer a complete 3D volumetric representation of both semantics and geometry from a single image. Existing methods typically focus on end-to-end 2D-to-3D feature lifting and voxel completion. However, they often overlook the interference between high-confidence visible-region perception and low-confidence occluded-region reasoning caused by single-image input, which can lead to feature dilution and error propagation. To address these challenges, we introduce an offline Visible Region Label Extraction (VRLE) strategy that explicitly separates and extracts voxel-level supervision for visible regions from dense 3D ground truth. This strategy purifies the supervisory space for two complementary sub-tasks: visible-region perception and occluded-region reasoning. Building on this idea, we propose the Visible-Occluded Interactive Completion Network (VOIC), a novel dual-decoder framework that explicitly decouples SSC into visible-region semantic perception and occluded-region scene completion. VOIC first constructs a base 3D voxel representation by fusing image features with depth-derived occupancy. The visible decoder focuses on generating high-fidelity geometric and semantic priors, while the occlusion decoder leverages these priors together with cross-modal interaction to perform coherent global scene reasoning. Extensive experiments on the SemanticKITTI and SSCBench-KITTI360 benchmarks demonstrate that VOIC outperforms existing monocular SSC methods in both geometric completion and semantic segmentation accuracy, achieving state-of-the-art performance.

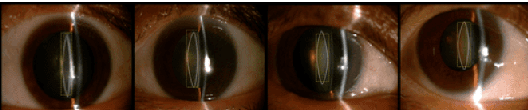

Adaptive Confidence-Wise Loss for Improved Lens Structure Segmentation in AS-OCT

Aug 12, 2025

Abstract:Precise lens structure segmentation is essential for the design of intraocular lenses (IOLs) in cataract surgery. Existing deep segmentation networks typically weight all pixels equally under cross-entropy (CE) loss, overlooking the fact that sub-regions of lens structures are inhomogeneous (e.g., some regions perform better than others) and that boundary regions often suffer from poor segmentation calibration at the pixel level. Clinically, experts annotate different sub-regions of lens structures with varying confidence levels, considering factors such as sub-region proportions, ambiguous boundaries, and lens structure shapes. Motivated by this observation, we propose an Adaptive Confidence-Wise (ACW) loss to group each lens structure sub-region into different confidence sub-regions via a confidence threshold from the unique region aspect, aiming to exploit the potential of expert annotation confidence prior. Specifically, ACW clusters each target region into low-confidence and high-confidence groups and then applies a region-weighted loss to reweigh each confidence group. Moreover, we design an adaptive confidence threshold optimization algorithm to adjust the confidence threshold of ACW dynamically. Additionally, to better quantify the miscalibration errors in boundary region segmentation, we propose a new metric, termed Boundary Expected Calibration Error (BECE). Extensive experiments on a clinical lens structure AS-OCT dataset and other multi-structure datasets demonstrate that our ACW significantly outperforms competitive segmentation loss methods across different deep segmentation networks (e.g., MedSAM). Notably, our method surpasses CE with 6.13% IoU gain, 4.33% DSC increase, and 4.79% BECE reduction in lens structure segmentation under U-Net. The code of this paper is available at https://github.com/XiaoLing12138/Adaptive-Confidence-Wise-Loss.
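As a rough illustration of the grouping-and-reweighting idea in this abstract, the sketch below splits the pixels of one target region into low- and high-confidence groups with a fixed threshold and averages the cross-entropy within each group under a different weight. The function name, the threshold of 0.7, the group weights, and the use of the predicted true-class probability as the "confidence" are all assumptions made for illustration. The adaptive threshold optimization is not modeled, and the authors' actual implementation is the one in the linked repository.

```python
import math

def confidence_wise_ce(true_class_probs, threshold=0.7, w_low=2.0, w_high=1.0):
    """Toy confidence-grouped cross-entropy for the pixels of ONE region.

    true_class_probs: predicted probability of the correct class, per pixel.
    threshold, w_low, w_high: hypothetical values, not taken from the paper.
    """
    eps = 1e-12  # guards against log(0)
    low = [p for p in true_class_probs if p < threshold]
    high = [p for p in true_class_probs if p >= threshold]

    def mean_ce(group):
        # Mean negative log-likelihood of a group; an empty group contributes 0.
        if not group:
            return 0.0
        return sum(-math.log(max(p, eps)) for p in group) / len(group)

    # Each confidence group is averaged separately, then reweighted.
    return w_low * mean_ce(low) + w_high * mean_ce(high)

probs = [0.95, 0.90, 0.55, 0.40]
loss = confidence_wise_ce(probs)
plain_ce = sum(-math.log(p) for p in probs) / len(probs)
```

With these toy weights the two low-confidence pixels dominate the loss, whereas plain CE averages all four pixels equally.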

Expert-Like Reparameterization of Heterogeneous Pyramid Receptive Fields in Efficient CNNs for Fair Medical Image Classification

May 19, 2025

Abstract:Efficient convolutional neural network (CNN) architecture designs have attracted growing research interest. However, they usually apply a single receptive field (RF), small asymmetric RFs, or pyramid RFs to learn different feature representations, and still encounter two significant challenges in medical image classification tasks: 1) They have limitations in capturing diverse lesion characteristics efficiently, e.g., tiny, coordination, small and salient, which play unique roles in the results, especially in imbalanced medical image classification. 2) The predictions generated by those CNNs are often unfair/biased, posing a high risk when they are applied to real-world medical diagnosis conditions. To tackle these issues, we develop a new concept, Expert-Like Reparameterization of Heterogeneous Pyramid Receptive Fields (ERoHPRF), to simultaneously boost medical image classification performance and fairness. This concept aims to mimic the multi-expert consultation mode by applying well-designed heterogeneous pyramid RF bags to capture different lesion characteristics effectively via convolution operations with multiple heterogeneous kernel sizes. Additionally, ERoHPRF introduces an expert-like structural reparameterization technique to merge its parameters with a two-stage strategy, ensuring computation cost and inference speed competitive with those of a single RF. To demonstrate the effectiveness and generalization ability of ERoHPRF, we incorporate it into mainstream efficient CNN architectures. Extensive experiments show that our method maintains a better trade-off than state-of-the-art methods in terms of medical image classification, fairness, and computation overhead. The codes of this paper will be released soon.
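The general principle behind structural reparameterization can be sketched in one dimension: because convolution is linear, parallel branches with different odd kernel sizes can be folded into a single kernel by zero-padding the smaller kernels to the largest size and summing. This is only a minimal sketch of that linearity argument, not the paper's two-stage merging strategy. The helper names and kernel values are hypothetical, and normalization layers and 2D kernels are ignored.

```python
def conv1d_same(x, kernel):
    """Cross-correlation with zero padding so the output length equals len(x).

    Assumes an odd kernel length.
    """
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(x) + [0.0] * pad
    return [
        sum(kernel[j] * padded[i + j] for j in range(len(kernel)))
        for i in range(len(x))
    ]

def merge_kernels(kernels):
    """Zero-pad each odd-length kernel to the largest size and sum them."""
    size = max(len(k) for k in kernels)
    merged = [0.0] * size
    for k in kernels:
        offset = (size - len(k)) // 2  # centre the smaller kernel
        for j, w in enumerate(k):
            merged[offset + j] += w
    return merged

x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
k1, k3, k5 = [0.5], [1.0, 0.0, -1.0], [0.1, 0.2, 0.3, 0.2, 0.1]

# Training-time view: three parallel branches whose outputs are summed.
branch_sum = [sum(v) for v in zip(*(conv1d_same(x, k) for k in (k1, k3, k5)))]

# Inference-time view: a single convolution with the merged kernel.
merged_out = conv1d_same(x, merge_kernels([k1, k3, k5]))
```

Up to floating-point rounding, `branch_sum` and `merged_out` agree, which is why a multi-branch block of this kind can cost the same at inference as a single convolution of the largest kernel size.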

MM-UNet: A Mixed MLP Architecture for Improved Ophthalmic Image Segmentation

Aug 16, 2024

Abstract:Ophthalmic image segmentation serves as a critical foundation for ocular disease diagnosis. Although fully convolutional neural networks (CNNs) are commonly employed for segmentation, they are constrained by inductive biases and face challenges in establishing long-range dependencies. Transformer-based models address these limitations but introduce substantial computational overhead. Recently, a simple yet efficient Multilayer Perceptron (MLP) architecture was proposed for image classification, achieving competitive performance relative to advanced transformers. However, its effectiveness for ophthalmic image segmentation remains unexplored. In this paper, we introduce MM-UNet, an efficient Mixed MLP model tailored for ophthalmic image segmentation. Within MM-UNet, we propose a multi-scale MLP (MMLP) module that facilitates the interaction of features at various depths through a grouping strategy, enabling simultaneous capture of global and local information. We conducted extensive experiments on both a private anterior segment optical coherence tomography (AS-OCT) image dataset and a public fundus image dataset. The results demonstrated the superiority of our MM-UNet model in comparison to state-of-the-art deep segmentation networks.

Elongated Physiological Structure Segmentation via Spatial and Scale Uncertainty-aware Network

May 30, 2023

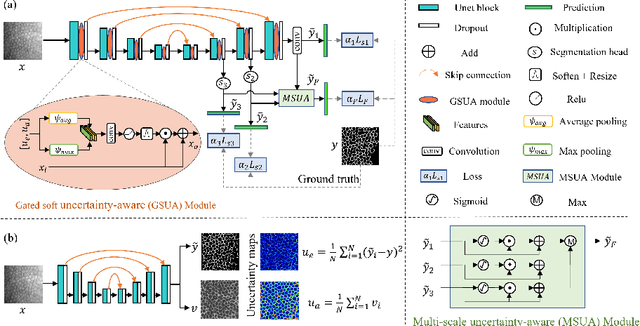

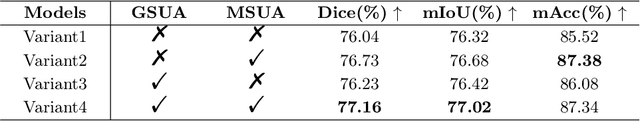

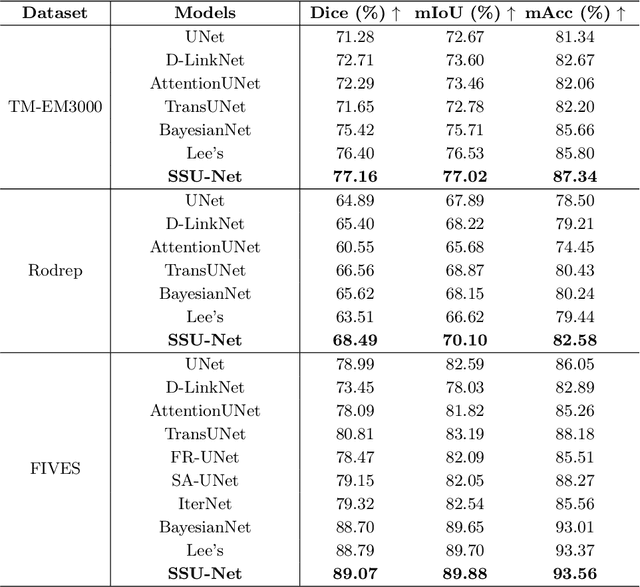

Abstract:Robust and accurate segmentation of elongated physiological structures is challenging, especially in ambiguous regions, such as corneal endothelium microscope images with uneven illumination or fundus images with disease interference. In this paper, we present a spatial and scale uncertainty-aware network (SSU-Net) that fully uses both spatial and scale uncertainty to highlight ambiguous regions and integrate hierarchical structure contexts. First, we estimate epistemic and aleatoric spatial uncertainty maps using Monte Carlo dropout to approximate Bayesian networks. Based on these spatial uncertainty maps, we propose the gated soft uncertainty-aware (GSUA) module to guide the model to focus on ambiguous regions. Second, we extract the uncertainty under different scales and propose the multi-scale uncertainty-aware (MSUA) fusion module to integrate structure contexts from hierarchical predictions, strengthening the final prediction. Finally, we visualize the uncertainty map of the final prediction, providing interpretability for segmentation results. Experimental results show that SSU-Net performs best on corneal endothelial cell and retinal vessel segmentation tasks. Moreover, compared with counterpart uncertainty-based methods, SSU-Net is more accurate and robust.
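One common way to separate epistemic from aleatoric uncertainty under Monte Carlo dropout is an entropy decomposition over the stochastic forward passes, sketched below for a single pixel of a binary segmentation. The abstract does not state which estimator SSU-Net uses, so the decomposition, the function names, and the sample values here are assumptions for illustration only.

```python
import math

def entropy(p):
    """Binary entropy in nats; defined as 0 at p = 0 or p = 1."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log(p) + (1 - p) * math.log(1 - p))

def decompose_uncertainty(samples):
    """samples: foreground probabilities for ONE pixel from T dropout passes."""
    mean_p = sum(samples) / len(samples)
    total = entropy(mean_p)  # predictive entropy of the averaged prediction
    aleatoric = sum(entropy(p) for p in samples) / len(samples)  # expected entropy
    epistemic = total - aleatoric  # mutual information between prediction and weights
    return total, aleatoric, epistemic

# Passes agree that the pixel is ambiguous: the uncertainty is all aleatoric.
agree = decompose_uncertainty([0.5, 0.5, 0.5, 0.5])

# Passes are individually confident but disagree: mostly epistemic.
disagree = decompose_uncertainty([0.05, 0.95, 0.05, 0.95])
```

Both pixels have the same predictive entropy, but only the second has a large epistemic term, which is the kind of distinction a spatial uncertainty map can expose.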

PPCR: Learning Pyramid Pixel Context Recalibration Module for Medical Image Classification

Mar 10, 2023

Abstract:Spatial attention mechanisms have been widely incorporated into deep convolutional neural networks (CNNs) via long-range dependency capturing, significantly lifting the performance in computer vision, but they may perform poorly in medical imaging. Unfortunately, existing efforts are often unaware that long-range dependency capturing has limitations in highlighting subtle lesion regions, neglecting to exploit the potential of multi-scale pixel context information to improve the representational capability of CNNs. In this paper, we propose a practical yet lightweight architectural unit, the Pyramid Pixel Context Recalibration (PPCR) module, which exploits multi-scale pixel context information to adaptively recalibrate pixel position in a pixel-independent manner. PPCR first designs a cross-channel pyramid pooling to aggregate multi-scale pixel context information, then eliminates the inconsistency among them by the well-designed pixel normalization, and finally estimates the per-pixel attention weight via a pixel context integration. PPCR can be flexibly plugged into modern CNNs with negligible overhead. Extensive experiments on five medical image datasets and CIFAR benchmarks empirically demonstrate the superiority and generalization of PPCR over state-of-the-art attention methods. The in-depth analyses explain the inherent behavior of PPCR in the decision-making process, improving the interpretability of CNNs.

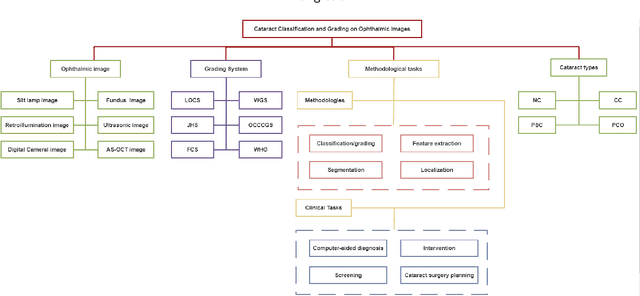

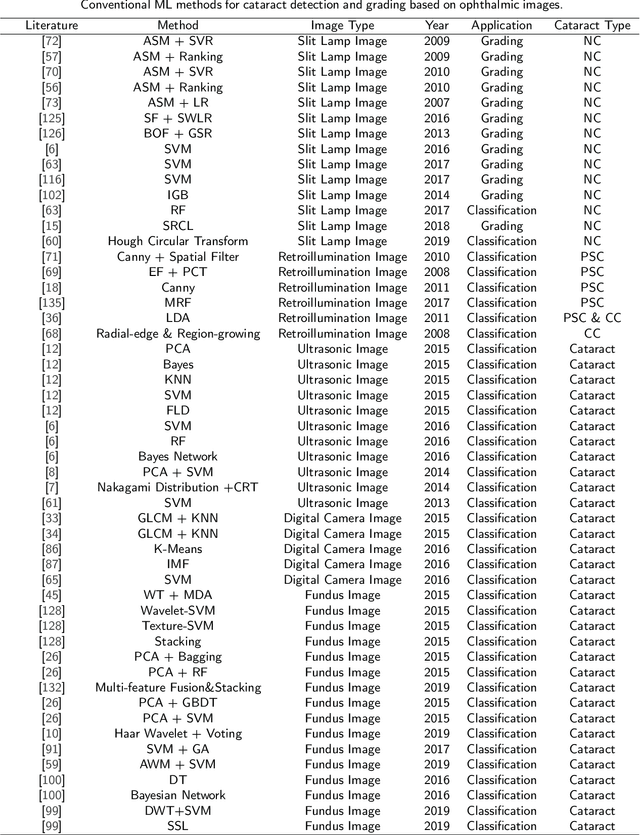

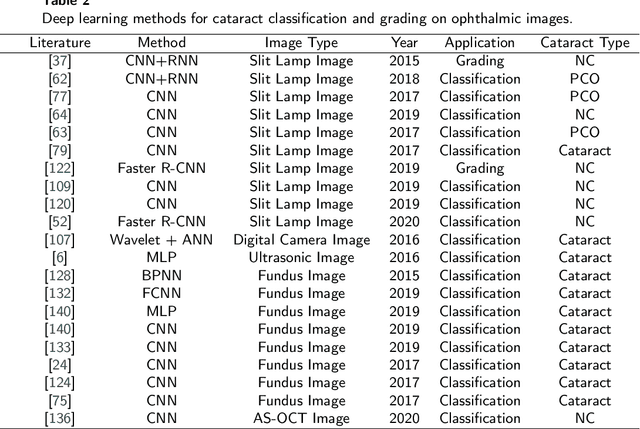

Machine Learning for Cataract Classification and Grading on Ophthalmic Imaging Modalities: A Survey

Dec 09, 2020

Abstract:Cataract is one of the leading causes of reversible visual impairment and blindness globally. Over the years, researchers have achieved significant progress in developing state-of-the-art artificial intelligence techniques for automatic cataract classification and grading, helping clinicians prevent and treat cataract in time. This paper provides a comprehensive survey of recent advances in machine learning for cataract classification and grading based on ophthalmic images. We summarize existing literature from two research directions: conventional machine learning techniques and deep learning techniques. This paper also provides insights into both the merits and limitations of existing works. In addition, we discuss several challenges of automatic cataract classification and grading based on machine learning techniques and present possible solutions to these challenges for future research.
