Richard Plesh

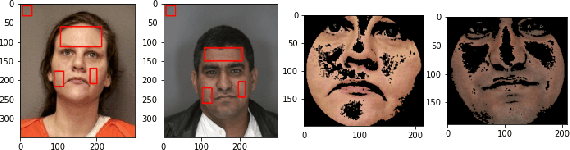

Generalizability and Application of the Skin Reflectance Estimate Based on Dichromatic Separation (SREDS)

Sep 03, 2023

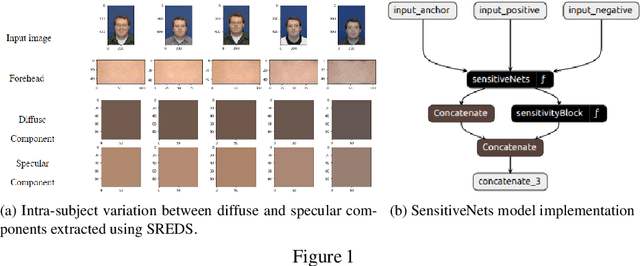

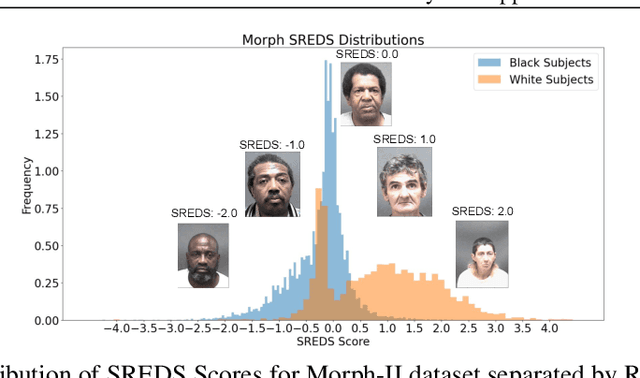

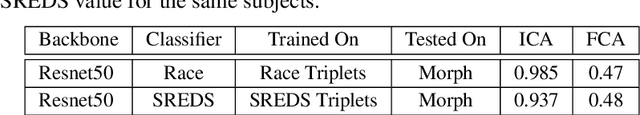

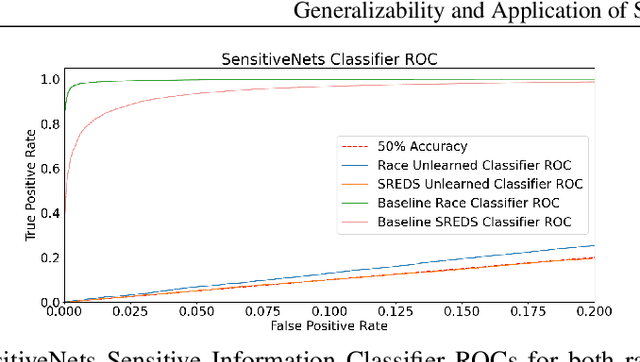

Abstract: Face recognition (FR) systems have become widely used and readily available in recent years. However, differential performance between certain demographics has been identified within popular FR models. Skin tone differences between demographics can be one of the factors contributing to the differential performance observed in face recognition models. Skin tone metrics provide an alternative to self-reported race labels when such labels are lacking or entirely unavailable, e.g., in large-scale face recognition datasets. In this work, we provide a further analysis of the generalizability of the Skin Reflectance Estimate based on Dichromatic Separation (SREDS) against other skin tone metrics and provide a use case for substituting SREDS scores for race labels in a privacy-preserving learning solution. Our findings suggest that SREDS consistently creates a skin tone metric with lower variability within each subject and that SREDS values can be utilized as an alternative to self-reported race labels with a minimal drop in performance. Finally, we provide a publicly available and open-source implementation of SREDS to help the research community, available at https://github.com/JosephDrahos/SREDS
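The "variability within each subject" criterion mentioned in the abstract can be illustrated with a minimal sketch. It assumes the metric yields one scalar per image and that variability is summarized as the per-subject standard deviation averaged over subjects; the function name, the sample values, and this choice of summary statistic are illustrative assumptions, not taken from the paper or the SREDS repository.

```python
from collections import defaultdict
from statistics import mean, pstdev


def intra_subject_variability(scores):
    """Average per-subject standard deviation of a scalar skin tone metric.

    scores: iterable of (subject_id, metric_value) pairs, one per image.
    Hypothetical helper for illustration only.
    """
    by_subject = defaultdict(list)
    for subject_id, value in scores:
        by_subject[subject_id].append(value)
    # Population standard deviation per subject, then mean across subjects.
    return mean(pstdev(values) for values in by_subject.values())


# Made-up values: two subjects, two images each.
samples = [("s1", 0.40), ("s1", 0.42), ("s2", 0.70), ("s2", 0.74)]
variability = intra_subject_variability(samples)
print(variability)
```

Under this summary, a metric that is more stable across images of the same person gives a smaller value, which is the sense in which one metric could be compared against another.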

Empirical Assessment of End-to-End Iris Recognition System Capacity

Mar 20, 2023

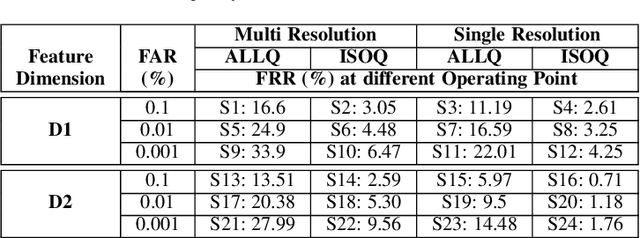

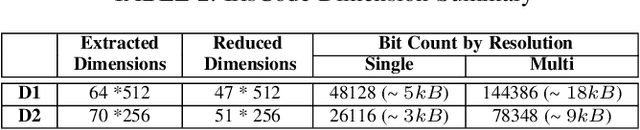

Abstract: Iris is an established modality in biometric recognition applications including consumer electronics, e-commerce, border security, forensics, and de-duplication of identity at a national scale. In light of the expanding usage of biometric recognition, identity clash (when templates from two different people match) is an important factor to consider in a system's deployment. This study explores system capacity estimation by empirically estimating the constrained capacity of an end-to-end iris recognition system (NIR systems with Daugman-based feature extraction) operating at an acceptable error rate, i.e., the number of subjects a system can resolve before encountering an error. We study the impact of six system parameters on an iris recognition system's constrained capacity: number of enrolled identities, image quality, template dimension, random feature elimination, filter resolution, and system operating point. In our assessment, we analyzed 13.2 million comparisons from 5158 unique identities for each of 24 different system configurations. This work provides a framework to better understand iris recognition system capacity as a function of biometric system configurations beyond the operating point, for large-scale applications.

GlassesGAN: Eyewear Personalization using Synthetic Appearance Discovery and Targeted Subspace Modeling

Oct 24, 2022

Abstract: We present GlassesGAN, a novel image editing framework for custom design of glasses that sets a new standard in terms of image quality, edit realism, and continuous multi-style edit capability. To facilitate the editing process with GlassesGAN, we propose a Targeted Subspace Modelling (TSM) procedure that, based on a novel mechanism for (synthetic) appearance discovery in the latent space of a pre-trained GAN generator, constructs an eyeglasses-specific (latent) subspace that the editing framework can utilize. To improve the reliability of our learned edits, we also introduce an appearance-constrained subspace initialization (SI) technique able to center the latent representation of a given input image in the well-defined part of the constructed subspace. We test GlassesGAN on three diverse datasets (CelebA-HQ, SiblingsDB-HQf, and MetFaces) and compare it against three state-of-the-art competitors, i.e., InterfaceGAN, GANSpace, and MaskGAN. Our experimental results show that GlassesGAN achieves photo-realistic, multi-style edits to eyeglasses while comparing favorably to its competitors. The source code is made freely available.

High Fidelity Fingerprint Generation: Quality, Uniqueness, and Privacy

May 21, 2021

Abstract: In this work, we utilize progressive growth-based Generative Adversarial Networks (GANs) to develop the Clarkson Fingerprint Generator (CFG). We demonstrate that the CFG is capable of generating realistic, high-fidelity, $512\times512$ pixel, full, plain impression fingerprints. Our results suggest that the fingerprints generated by the CFG are unique, diverse, and resemble the training dataset in terms of minutiae configuration and quality, while not revealing the underlying identities of the training data. We make the pre-trained CFG model and the synthetically generated dataset publicly available at https://github.com/keivanB/Clarkson_Finger_Gen

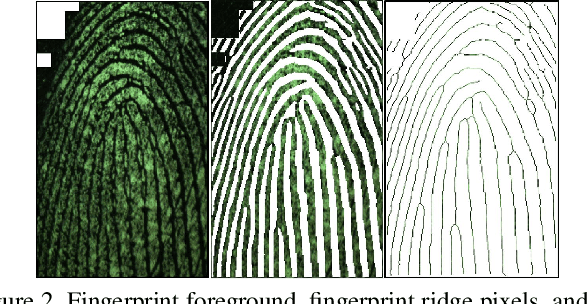

Fingerprint Presentation Attack Detection utilizing Time-Series, Color Fingerprint Captures

Apr 08, 2021

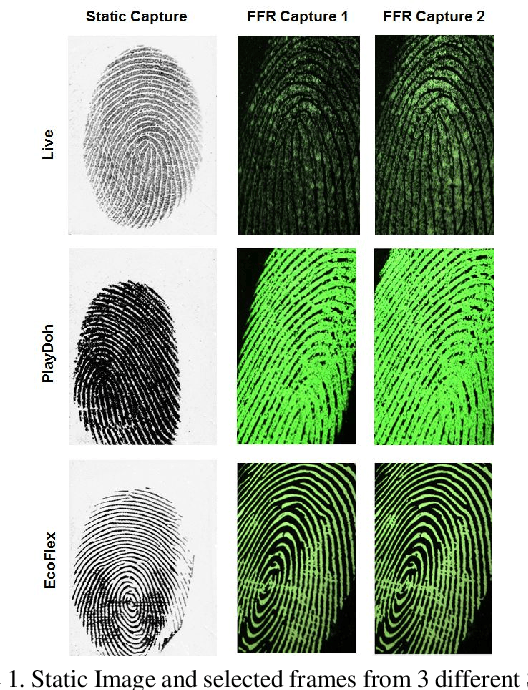

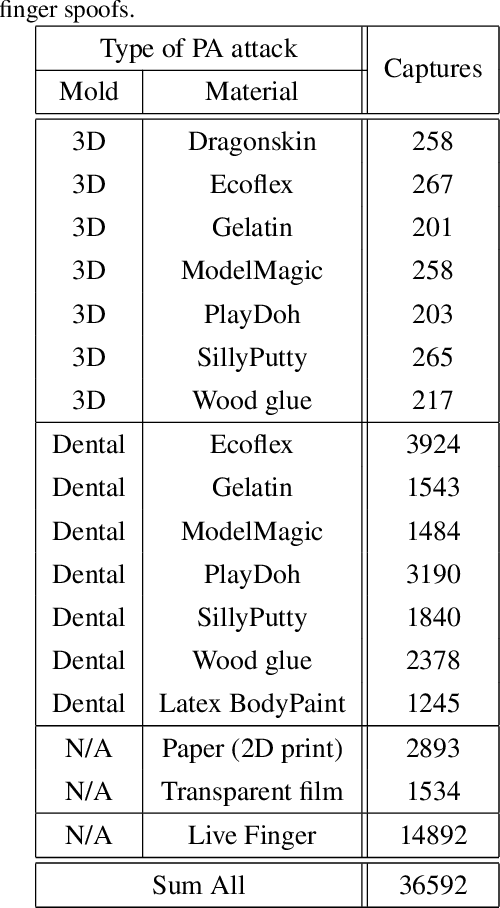

Abstract: Fingerprint capture systems can be fooled by widely accessible methods of spoofing the system with fake fingers, known as presentation attacks. As biometric recognition systems become more extensively relied upon at international borders and in consumer electronics, presentation attacks are becoming an increasingly serious issue. A robust solution is needed that can handle the increased variability and complexity of spoofing techniques. This paper demonstrates the viability of utilizing a sensor with time-series and color-sensing capabilities to improve the robustness of a traditional fingerprint sensor and introduces a comprehensive fingerprint dataset with over 36,000 image sequences and a state-of-the-art set of spoofing techniques. The specific sensor used in this research captures a traditional gray-scale static capture and a time-series color capture simultaneously. Two different methods for Presentation Attack Detection (PAD) are used to assess the benefit of a color dynamic capture. The first algorithm utilizes Static-Temporal Feature Engineering on the fingerprint capture to generate a classification decision. The second generates its classification decision using features extracted by way of the Inception V3 CNN trained on ImageNet. Classification performance is evaluated using features extracted exclusively from the static capture, exclusively from the dynamic capture, and on a fusion of the two feature sets. With both PAD approaches, we find that fusing the dynamic and static feature sets improves performance to a level that neither achieves individually.

* 8 pages, 3 figures, ICB-2019

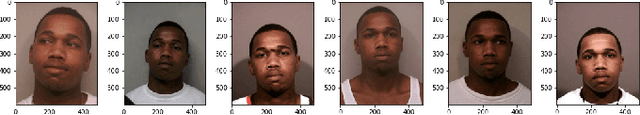

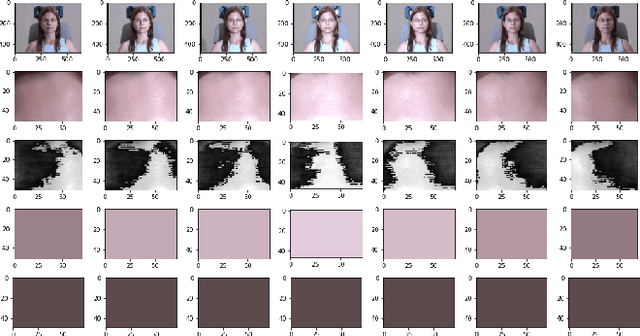

SREDS: A dichromatic separation based measure of skin color

Apr 07, 2021

Abstract: Face recognition (FR) systems are fast becoming ubiquitous. However, differential performance among certain demographics was identified in several widely used FR models. The skin tone of the subject is an important factor in addressing the differential performance. Previous work has used modeling methods to propose skin tone measures of subjects across different illuminations or utilized subjective labels of skin color and demographic information. However, such models heavily rely on consistent background and lighting for calibration, or utilize labeled datasets, which are time-consuming to generate or are unavailable. In this work, we have developed a novel and data-driven skin color measure capable of accurately representing subjects' skin tone from a single image, without requiring a consistent background or illumination. Our measure leverages the dichromatic reflection model in RGB space to decompose skin patches into diffuse and specular bases.
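For readers unfamiliar with the dichromatic reflection model, its usual textbook form in RGB space is sketched below. The symbols are chosen here for illustration and are not taken from the paper: $\mathbf{I}(x)$ is the observed RGB value at pixel $x$, $\mathbf{c}_d$ and $\mathbf{c}_s$ are the diffuse and specular color bases, and $m_d(x)$, $m_s(x)$ are non-negative per-pixel weights.

$$
\mathbf{I}(x) = m_d(x)\,\mathbf{c}_d + m_s(x)\,\mathbf{c}_s, \qquad m_d(x) \ge 0,\; m_s(x) \ge 0
$$

Under this reading, decomposing a skin patch amounts to estimating the two bases shared across the patch together with the weights at each pixel; how SREDS performs that estimation is not stated in the abstract.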
