Keivan Bahmani

Longitudinal Evaluation of Child Face Recognition and the Impact of Underlying Age

Aug 01, 2024

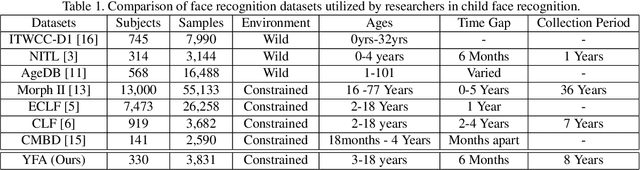

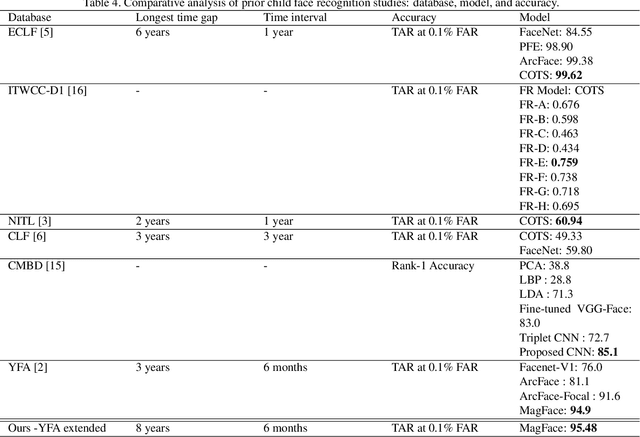

Abstract: The need for reliable identification of children in various emerging applications has sparked interest in leveraging child face recognition technology. This study introduces a longitudinal approach to evaluating enrollment and verification accuracy for child face recognition, focusing on the YFA database collected by the Clarkson University CITeR research group over an 8-year period at 6-month intervals.

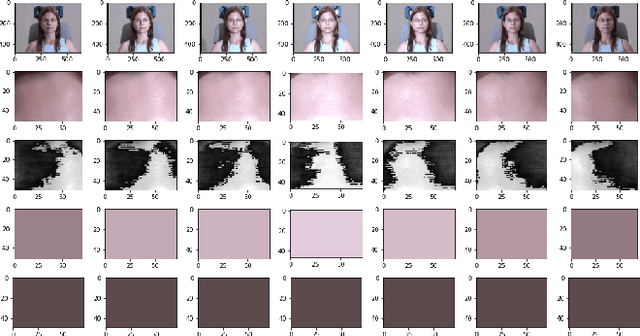

Generalizability and Application of the Skin Reflectance Estimate Based on Dichromatic Separation (SREDS)

Sep 03, 2023

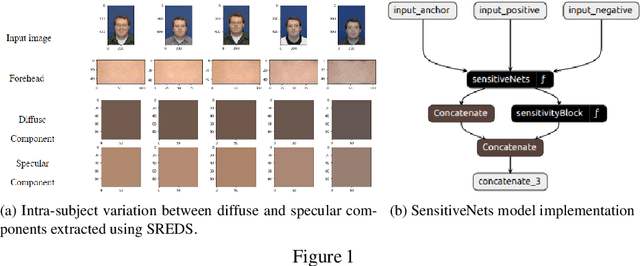

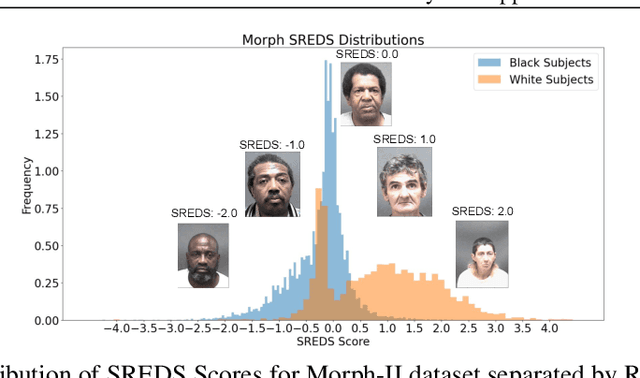

Abstract: Face recognition (FR) systems have become widely used and readily available in recent years. However, differential performance across certain demographics has been identified in popular FR models. Skin tone differences between demographics can be one of the factors contributing to this differential performance. Skin tone metrics provide an alternative to self-reported race labels when such labels are scarce or entirely unavailable, e.g., in large-scale face recognition datasets. In this work, we further analyze the generalizability of the Skin Reflectance Estimate based on Dichromatic Separation (SREDS) against other skin tone metrics and provide a use case in which SREDS scores replace race labels in a privacy-preserving learning solution. Our findings suggest that SREDS consistently produces a skin tone metric with lower within-subject variability, and that SREDS values can serve as an alternative to self-reported race labels with a minimal drop in performance. Finally, we provide a publicly available, open-source implementation of SREDS to help the research community, available at https://github.com/JosephDrahos/SREDS
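The claim that one skin tone metric has lower within-subject variability than another can be made concrete with a simple statistic. The sketch below assumes variability is summarized as the mean, over subjects, of the standard deviation of each subject's values across images; this is an illustrative choice rather than the paper's stated measure, and the function name is hypothetical.

```python
from collections import defaultdict
from statistics import mean, pstdev


def mean_within_subject_std(records):
    """Average, over subjects, of the spread of one skin tone metric.

    records: iterable of (subject_id, value) pairs, one per image.
    Subjects with a single image are skipped, since one value says
    nothing about how stable the metric is for that subject.
    (Hypothetical helper for illustration, not the SREDS code.)
    """
    by_subject = defaultdict(list)
    for subject_id, value in records:
        by_subject[subject_id].append(float(value))
    spreads = [pstdev(values) for values in by_subject.values() if len(values) >= 2]
    if not spreads:
        raise ValueError("need at least one subject with two or more images")
    return mean(spreads)


# Toy values for three subjects; a lower result means a more stable metric.
records = [("s1", 1.0), ("s1", 3.0), ("s2", 5.0), ("s2", 5.0), ("s3", 2.0)]
print(mean_within_subject_std(records))  # 0.5
```

Running the same function on SREDS values and on a competing metric, for the same images, gives two directly comparable numbers.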

Presentation Attack Detection with Advanced CNN Models for Noncontact-based Fingerprint Systems

Mar 09, 2023

Abstract: Touch-based fingerprint biometrics is one of the most popular biometric modalities, with applications in several fields. Problems associated with touch-based techniques, such as latent fingerprints and hygiene issues caused by many people touching the same surface, motivated the community to look for noncontact-based solutions. In the last few years, contactless fingerprint systems have been on the rise and in demand because they can turn any device with a camera into a fingerprint reader. Yet, before the benefits of noncontact-based methods can be fully utilized, the biometric community needs to resolve a few concerns, such as the resiliency of the system against presentation attacks. One of the major obstacles is the limited number of publicly available datasets, which contain inadequate spoof and live data. In this publication, we developed a presentation attack detection (PAD) dataset of more than 7,500 four-finger images, more than 14,000 manually segmented single-fingertip images, and 10,000 synthetic fingertips (deepfakes). The PAD dataset was collected from six different Presentation Attack Instruments (PAI) of three different difficulty levels according to FIDO protocols, with five different types of PAI materials and different smartphone cameras with manual focusing. We used DenseNet-121 and NasNetMobile models with our proposed dataset to develop PAD algorithms, achieving an attack presentation classification error rate (APCER) of 0.14% and a bona fide presentation classification error rate (BPCER) of 0.18%. We also report the test results of the models against unseen spoof types to replicate uncertain real-world testing scenarios.
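APCER and BPCER are the two error rates ISO/IEC 30107-3 uses for PAD, with APCER reported separately for each PAI species. The sketch below shows how they follow from classifier scores; it assumes the score is an attack likelihood and that a fixed threshold of 0.5 separates the classes, neither of which the abstract specifies, and the function names are hypothetical.

```python
def apcer_bpcer(attack_scores, bonafide_scores, threshold=0.5):
    """Error rates for a PAD classifier whose score is an attack likelihood.

    A presentation is classified as an attack when score >= threshold
    (assumed convention for this sketch).
    """
    # APCER: share of attack presentations wrongly accepted as bona fide.
    apcer = sum(s < threshold for s in attack_scores) / len(attack_scores)
    # BPCER: share of bona fide presentations wrongly rejected as attacks.
    bpcer = sum(s >= threshold for s in bonafide_scores) / len(bonafide_scores)
    return apcer, bpcer


def worst_case_apcer(attack_scores_by_pai, threshold=0.5):
    """APCER for each PAI species and the highest (worst-case) value among them."""
    per_pai = {
        pai: sum(s < threshold for s in scores) / len(scores)
        for pai, scores in attack_scores_by_pai.items()
    }
    return per_pai, max(per_pai.values())


print(apcer_bpcer([0.9, 0.8, 0.2, 0.95], [0.1, 0.3, 0.6, 0.05]))  # (0.25, 0.25)
```

Raising the threshold trades a lower BPCER for a higher APCER, so both rates should always be reported at the same operating point.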

Deep Age-Invariant Fingerprint Segmentation System

Mar 06, 2023

Abstract: Fingerprint-based identification systems achieve higher accuracy when a slap containing multiple fingerprints of a subject is used instead of a single fingerprint. However, segmenting or auto-localizing all fingerprints in a slap image is challenging due to the varying orientations of fingerprints, noisy backgrounds, and the small size of fingertip components. Slap images in real-world datasets in which one or more fingerprints are rotated make it hard for a biometric recognition system to localize and label the fingerprints automatically. Improper fingerprint localization and finger labeling errors lead to poor matching performance. In this paper, we introduce a method to generate arbitrarily angled bounding boxes using a deep learning-based algorithm that precisely localizes and labels fingerprints in both axis-aligned and over-rotated slap images. We built a fingerprint segmentation model named CRFSEG (Clarkson Rotated Fingerprint Segmentation Model) by updating the previously proposed CFSEG model, which was based on the traditional Faster R-CNN architecture [21]. CRFSEG improves upon the Faster R-CNN algorithm with arbitrarily angled bounding boxes, which allow it to perform better on challenging slap images. After training CRFSEG on a new dataset containing slap images collected from both adult and child subjects, our results suggest that the CRFSEG model is invariant across age groups and can successfully handle over-rotated slap images. On the Combined dataset, which contains both normal and rotated images of adult and child subjects, we achieved a matching accuracy of 97.17%, outperforming the state-of-the-art VeriFinger (94.25%) and NFSEG (80.58%) segmentation systems.
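An arbitrarily angled bounding box is commonly parameterized by a center, width, height, and rotation angle. The sketch below converts that parameterization into four corner points and, for comparison, computes the axis-aligned box that would be needed to enclose the same rotated fingertip. The (cx, cy, w, h, angle) convention and the function names are assumptions for illustration; the abstract does not say how CRFSEG encodes its boxes.

```python
import math


def rotated_box_corners(cx, cy, w, h, angle_deg):
    """Corners of a w x h box centered at (cx, cy), rotated by angle_deg.

    Positive angles rotate counter-clockwise in a y-up frame; in image
    coordinates (y pointing down) the same angle appears clockwise.
    """
    t = math.radians(angle_deg)
    cos_t, sin_t = math.cos(t), math.sin(t)
    corners = []
    for dx, dy in ((-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)):
        # Rotate the corner offset about the center, then translate back.
        corners.append((cx + dx * cos_t - dy * sin_t, cy + dx * sin_t + dy * cos_t))
    return corners


def enclosing_axis_aligned_box(corners):
    """Smallest axis-aligned (x0, y0, x1, y1) box containing the rotated corners."""
    xs, ys = zip(*corners)
    return min(xs), min(ys), max(xs), max(ys)


corners = rotated_box_corners(10, 5, 4, 2, 90)
print(enclosing_axis_aligned_box(corners))  # approximately (9, 3, 11, 7)
```

For a tilted finger, the enclosing axis-aligned box also covers background and parts of neighboring fingers, which is one reason an angled box can localize over-rotated fingertips more tightly.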

Face Recognition In Children: A Longitudinal Study

Apr 04, 2022

Abstract: The lack of high-fidelity, publicly available longitudinal face datasets of children is one of the main limiting factors in the development of face recognition systems for children. In this work, we introduce the Young Face Aging (YFA) dataset for analyzing the performance of face recognition systems over short age gaps in children. We expand on previous work by comparing YFA with several publicly available cross-age adult datasets to quantify the effects of short age gaps in adults and children. Our analysis confirms a statistically significant, matcher-independent decaying relationship between the match scores of the ArcFace-Focal, MagFace, and FaceNet matchers and the age gap between gallery and probe images in children, even at a short age gap of 6 months. However, our results indicate that the low verification performance reported in previous work might be due to the intra-class structure of the matcher and the lower quality of the samples. Our experiment using YFA and a state-of-the-art, quality-aware face matcher (MagFace) yields 98.3% and 94.9% TAR at 0.1% FAR over 6- and 36-month age gaps, respectively, suggesting that face recognition may be feasible for children over age gaps of up to three years.
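A TAR at 0.1% FAR figure is read off the genuine and impostor score distributions: choose the threshold that lets through at most 0.1% of impostor comparisons, then count the genuine comparisons above it. The sketch below assumes higher scores mean greater similarity; the function name is hypothetical. Running it separately on comparisons grouped by age gap (for example, 6 or 36 months) would give one TAR per gap.

```python
import math

import numpy as np


def tar_at_far(genuine, impostor, far=1e-3):
    """True accept rate at a target false accept rate.

    Higher scores mean more similar; a comparison is accepted when
    score > threshold. Returns (tar, threshold).
    """
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.sort(np.asarray(impostor, dtype=float))[::-1]  # highest first
    # How many impostor comparisons may be falsely accepted at this FAR.
    k = math.floor(far * len(impostor))
    # Using the (k+1)-th highest impostor score as the threshold means at most
    # k impostor scores lie strictly above it.
    threshold = impostor[min(k, len(impostor) - 1)]
    return float(np.mean(genuine > threshold)), float(threshold)


# Toy data: 1,000 impostor scores 0..999, so 0.1% FAR allows one false accept.
print(tar_at_far([999.5, 998.5, 500.0, 998.0], np.arange(1000)))  # (0.5, 998.0)
```

With tied impostor scores, the achieved FAR can fall below the target, so the returned threshold is conservative.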

Deep Slap Fingerprint Segmentation for Juveniles and Adults

Oct 06, 2021

Abstract: Many fingerprint recognition systems capture four fingerprints in one image. In such systems, the fingerprint processing pipeline must first segment each four-fingerprint slap into individual fingerprints. Most current fingerprint segmentation algorithms have been designed and evaluated using only adult fingerprint datasets. In this work, we developed a human-annotated in-house dataset of 15,790 slaps, of which 9,084 are adult samples and 6,706 are samples from children aged 4 to 12. We then used the dataset to evaluate the matching performance of NFSEG, a slap fingerprint segmentation system developed by NIST, on slaps from adult and juvenile subjects. Our results reveal lower NFSEG performance on slaps from juvenile subjects. Finally, we used our novel dataset to develop the Mask R-CNN-based Clarkson Fingerprint Segmentation (CFSEG) model. Our matching results using the VeriFinger fingerprint matcher indicate that CFSEG outperforms NFSEG on both adult and juvenile slaps. The CFSEG model is publicly available at https://github.com/keivanB/Clarkson_Finger_Segment
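Once a segmenter returns one box per fingertip, each box is cut out of the slap and passed on to the matcher. The sketch below assumes axis-aligned (x0, y0, x1, y1) pixel boxes and a NumPy image array; the function name and the `pad` margin are hypothetical and are not part of the CFSEG or NFSEG interfaces.

```python
import numpy as np


def crop_fingerprints(slap, boxes, pad=0):
    """Cut each detected fingertip out of a slap image.

    slap:  H x W (or H x W x C) image array.
    boxes: iterable of axis-aligned (x0, y0, x1, y1) pixel boxes, x1/y1 exclusive.
    pad:   extra margin in pixels, clipped at the image border.
    """
    h, w = slap.shape[:2]
    crops = []
    for x0, y0, x1, y1 in boxes:
        # Grow the box by `pad`, then clip it so the slice stays inside the image.
        x0, y0 = max(0, x0 - pad), max(0, y0 - pad)
        x1, y1 = min(w, x1 + pad), min(h, y1 + pad)
        crops.append(slap[y0:y1, x0:x1].copy())
    return crops


slap = np.arange(100).reshape(10, 10)
crops = crop_fingerprints(slap, [(0, 0, 2, 2), (8, 8, 12, 12)], pad=1)
print([c.shape for c in crops])  # [(3, 3), (3, 3)]
```

Copying each crop keeps the fingertip images independent of the original slap buffer, so later preprocessing of one crop cannot alter the others.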

High Fidelity Fingerprint Generation: Quality, Uniqueness, and Privacy

May 21, 2021

Abstract: In this work, we use progressive growth-based Generative Adversarial Networks (GANs) to develop the Clarkson Fingerprint Generator (CFG). We demonstrate that the CFG is capable of generating realistic, high-fidelity, 512×512-pixel, full, plain-impression fingerprints. Our results suggest that the fingerprints generated by the CFG are unique and diverse and resemble the training dataset in terms of minutiae configuration and quality, while not revealing the underlying identities of the training data. We make the pre-trained CFG model and the synthetically generated dataset publicly available at https://github.com/keivanB/Clarkson_Finger_Gen
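Progressive growing trains a GAN at a low resolution and then adds layers that double the output size, blending each new layer in gradually. The sketch below shows only that fade-in blend and a doubling schedule ending at 512×512; the starting resolution of 4 and the nearest-neighbor upsampling follow the general progressive-GAN recipe and are assumptions, not details the abstract gives about the CFG.

```python
import numpy as np


def upsample_nearest(image):
    """2x nearest-neighbor upsampling of an H x W x C array."""
    return image.repeat(2, axis=0).repeat(2, axis=1)


def faded_output(low_res_rgb, high_res_rgb, alpha):
    """Output while a new block fades in; alpha ramps from 0 to 1 during training."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    # alpha = 0 reproduces the previous stage; alpha = 1 uses only the new block.
    return (1.0 - alpha) * upsample_nearest(low_res_rgb) + alpha * high_res_rgb


def resolution_schedule(start=4, final=512):
    """Resolutions visited when each growth stage doubles the output size."""
    sizes = [start]
    while sizes[-1] < final:
        sizes.append(sizes[-1] * 2)
    return sizes


print(resolution_schedule())  # [4, 8, 16, 32, 64, 128, 256, 512]
```

Blending rather than switching abruptly keeps the already-trained low-resolution layers from being disrupted when a new, randomly initialized block is attached.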

Fingerprint Presentation Attack Detection utilizing Time-Series, Color Fingerprint Captures

Apr 08, 2021

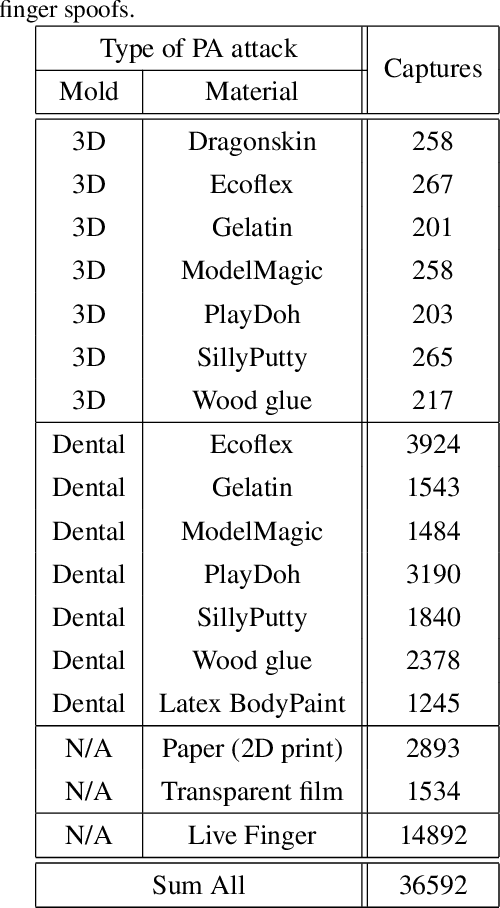

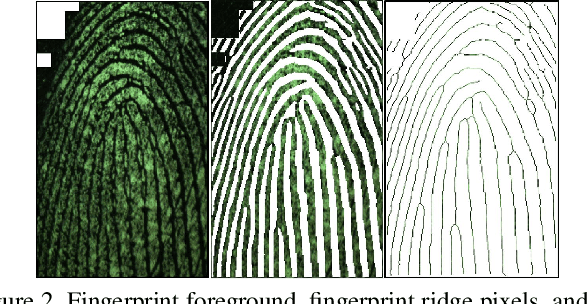

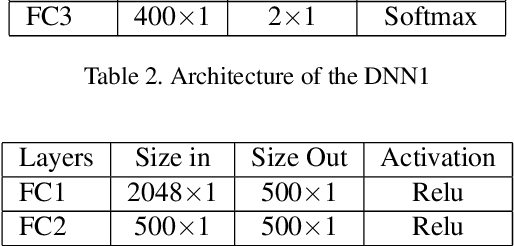

Abstract: Fingerprint capture systems can be fooled by fake fingers created with widely accessible methods; such spoofs are known as presentation attacks. As biometric recognition systems are relied upon more extensively at international borders and in consumer electronics, presentation attacks are becoming an increasingly serious issue. A robust solution is needed that can handle the growing variability and complexity of spoofing techniques. This paper demonstrates the viability of using a sensor with time-series and color-sensing capabilities to improve the robustness of a traditional fingerprint sensor, and introduces a comprehensive fingerprint dataset with over 36,000 image sequences and a state-of-the-art set of spoofing techniques. The sensor used in this research captures a traditional grayscale static capture and a time-series color capture simultaneously. Two different Presentation Attack Detection (PAD) methods are used to assess the benefit of a color dynamic capture. The first algorithm applies static-temporal feature engineering to the fingerprint capture to generate a classification decision. The second generates its classification decision using features extracted by an Inception V3 CNN trained on ImageNet. Classification performance is evaluated using features extracted exclusively from the static capture, exclusively from the dynamic capture, and from a fusion of the two feature sets. With both PAD approaches, we find that fusing the dynamic and static feature sets improves performance to a level that neither feature set achieves individually.
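The abstract does not say how the static and dynamic feature sets are fused. One common option is feature-level fusion, where each set is standardized and the two vectors are concatenated per capture before classification; the sketch below illustrates only that option, and the function names are hypothetical.

```python
import numpy as np


def standardize(features):
    """Z-score each column; constant columns become zero instead of dividing by 0."""
    mean = features.mean(axis=0)
    std = features.std(axis=0)
    return (features - mean) / np.where(std > 0, std, 1.0)


def fuse_features(static_feats, dynamic_feats):
    """Feature-level fusion: standardize each set, then concatenate per capture.

    For brevity the statistics are computed on the data passed in; in a real
    evaluation the mean and std would be fit on training data only.
    """
    static_feats = np.asarray(static_feats, dtype=float)
    dynamic_feats = np.asarray(dynamic_feats, dtype=float)
    if static_feats.shape[0] != dynamic_feats.shape[0]:
        raise ValueError("both feature sets need one row per capture")
    return np.concatenate([standardize(static_feats), standardize(dynamic_feats)], axis=1)


static = [[1, 2], [3, 2], [5, 2]]
dynamic = [[0, 1, 2, 3], [1, 1, 1, 1], [2, 0, 0, 0]]
print(fuse_features(static, dynamic).shape)  # (3, 6)
```

Standardizing first keeps a feature set with large raw magnitudes from dominating the fused vector, which matters when the two sets come from different extractors.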

* 8 pages, 3 figures, ICB-2019

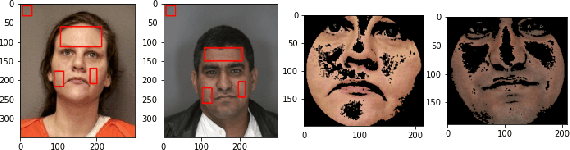

SREDS: A dichromatic separation based measure of skin color

Apr 07, 2021

Abstract: Face recognition (FR) systems are fast becoming ubiquitous. However, differential performance among certain demographics has been identified in several widely used FR models. The skin tone of the subject is an important factor in addressing this differential performance. Previous work has used modeling methods to propose skin tone measures of subjects across different illuminations, or has relied on subjective labels of skin color and demographic information. However, such models rely heavily on a consistent background and lighting for calibration, or on labeled datasets, which are time-consuming to generate or unavailable. In this work, we have developed a novel, data-driven skin color measure capable of accurately representing a subject's skin tone from a single image, without requiring a consistent background or illumination. Our measure leverages the dichromatic reflection model in RGB space to decompose skin patches into diffuse and specular bases.
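Under the dichromatic reflection model, each skin pixel is modeled as a weighted sum of a diffuse (body) color and a specular color that follows the illuminant. If both basis colors are known, the per-pixel weights can be recovered by least squares, as sketched below. How SREDS estimates the bases and turns them into a skin tone score is not described in this abstract; the known bases, the unconstrained least-squares solve, and the function name are assumptions for illustration.

```python
import numpy as np


def dichromatic_coefficients(pixels, diffuse_rgb, specular_rgb):
    """Per-pixel diffuse and specular weights under the dichromatic model.

    Solves pixel ~= m_d * diffuse_rgb + m_s * specular_rgb by least squares.
    pixels: N x 3 RGB array. Returns an N x 2 array of [m_d, m_s].
    Non-negativity of the weights is not enforced in this sketch.
    """
    basis = np.column_stack([diffuse_rgb, specular_rgb]).astype(float)  # 3 x 2
    rhs = np.asarray(pixels, dtype=float).T  # 3 x N, one column per pixel
    coeffs, _, _, _ = np.linalg.lstsq(basis, rhs, rcond=None)
    return coeffs.T


diffuse = [0.8, 0.5, 0.4]   # hypothetical skin body color
specular = [1.0, 1.0, 1.0]  # hypothetical white illuminant
pixels = [[2.1, 1.5, 1.3], [0.8, 0.5, 0.4]]
print(dichromatic_coefficients(pixels, diffuse, specular))  # approximately [[2, 0.5], [1, 0]]
```

Separating out the specular weight is what lets a skin tone estimate ignore highlights, which otherwise shift the apparent color of a skin patch toward the illuminant.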
