Rejwan Bin Sulaiman

Enhancing Bangla Fake News Detection Using Bidirectional Gated Recurrent Units and Deep Learning Techniques

Mar 31, 2024

Abstract:The rise of fake news has made effective detection methods increasingly important, including for languages other than English. This study addresses the challenges of Bangla, which is considered a low-resource language. To this end, a dataset of about 50,000 news items is proposed. Several deep learning models were tested on this dataset, including the bidirectional gated recurrent unit (GRU), long short-term memory (LSTM), the 1D convolutional neural network (CNN), and hybrid architectures. We assessed the efficacy of the models using recall, precision, F1 score, and accuracy. This was done through a large-scale application of the models. We carry out comprehensive trials to show the effectiveness of these models in identifying fake news in Bangla, with the Bidirectional GRU model reaching an accuracy of 99.16%. Our analysis highlights the importance of dataset balance and the need for continual improvement. This study contributes to the creation of Bangla fake news detection systems with limited resources, setting the stage for future improvements in the detection process.
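The four measures named in the abstract can be computed from the counts of a binary confusion matrix. The following is a minimal sketch only: the function name `classification_metrics` and the toy labels (1 = fake, 0 = real) are assumptions for illustration, not the authors' evaluation code or data.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn

    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when a class is never predicted.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical toy labels: 1 = fake news, 0 = real news.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

On an imbalanced dataset, accuracy alone can look high while recall on the minority class stays low, which is one reason the abstract's point about dataset balance matters.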

Comparative study of Deep Learning Models for Binary Classification on Combined Pulmonary Chest X-ray Dataset

Sep 16, 2023

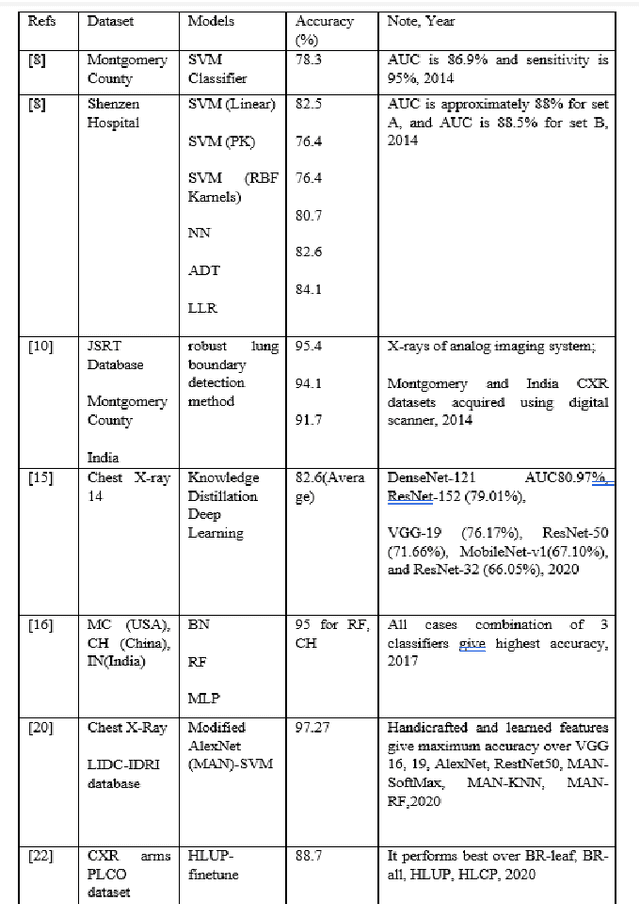

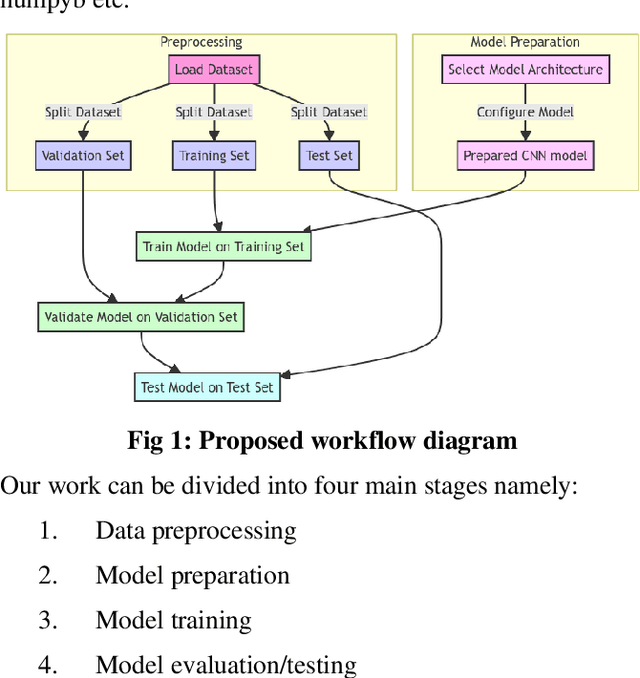

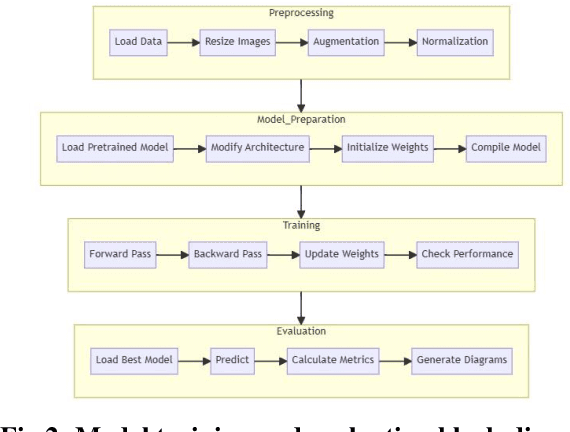

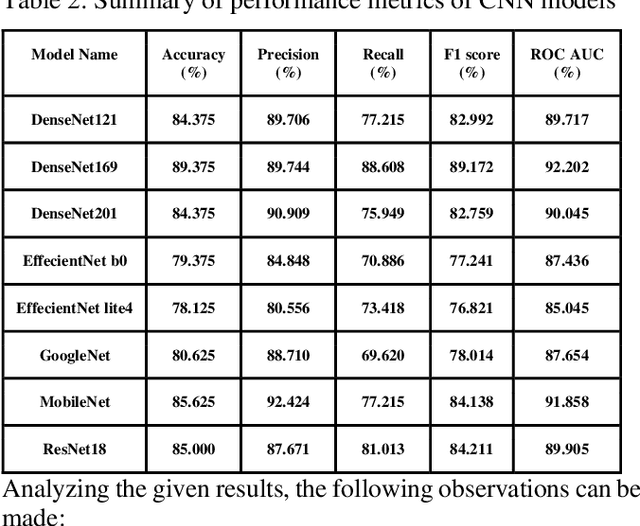

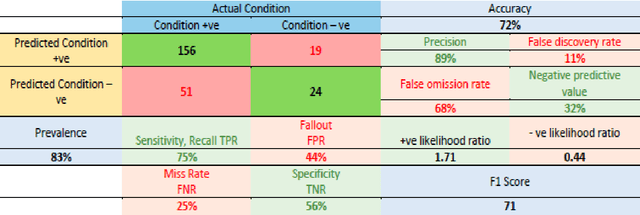

Abstract:CNN-based deep learning models for disease detection have become popular recently. We compared the binary classification performance of eight prominent deep learning models on a combined pulmonary chest X-ray dataset: DenseNet 121, DenseNet 169, DenseNet 201, EfficientNet b0, EfficientNet lite4, GoogleNet, MobileNet, and ResNet18. Despite the widespread application of these models to medical images in different fields, there remains a knowledge gap in determining their relative performance when applied to the same dataset, a gap this study aimed to address. The dataset combined Shenzhen, China (CH) and Montgomery, USA (MC) data. We trained the models for binary classification, calculated different performance measures for each, and compared them. All models were trained with the same training parameters to maintain a controlled comparison environment. At the end of the study, we found a distinct difference in performance among the models when applied to the pulmonary chest X-ray image dataset, where DenseNet169 performed with 89.38 percent and MobileNet with 92.2 percent precision. Keywords: Pulmonary, Deep Learning, Tuberculosis, Disease detection, X-ray

IoMT-Blockchain based Secured Remote Patient Monitoring Framework for Neuro-Stimulation Device

Aug 31, 2023

Abstract:The Internet of Medical Things (IoMT) in biomedical engineering is helping to improve the accuracy, dependability, and productivity of electronic equipment in the healthcare industry. With the rapid development of wearable IoMT devices, such as neuro-stimulation devices with a range of functions, real-time sensory data from patients can be delivered and subsequently analyzed. Data from the Internet of Things is gathered, analyzed, and stored in a single location. However, centralization can lead to single-point failure, data manipulation, privacy difficulties, and other challenges. Due to its decentralized nature, blockchain (BC) can alleviate these issues. This work investigates the viability of establishing a non-invasive remote neurostimulation system employing IoMT-based transcranial Direct Current Stimulation (tDCS). A hardware-based prototype tDCS device has been developed that can be operated over the internet using an Android application. Our suggested framework addresses the problems of IoMT-BC-based systems, meets the criteria of real-time remote patient monitoring systems, and incorporates best practices from the literature in the relevant fields.

Unleashing the Power of Extra-Tree Feature Selection and Random Forest Classifier for Improved Survival Prediction in Heart Failure Patients

Aug 09, 2023

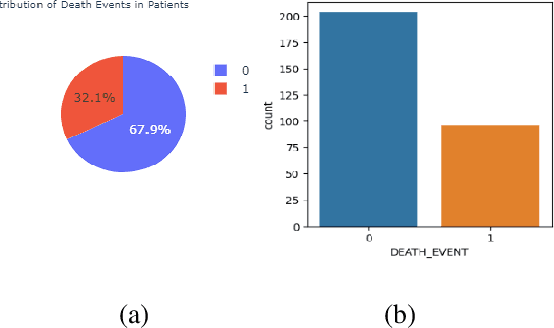

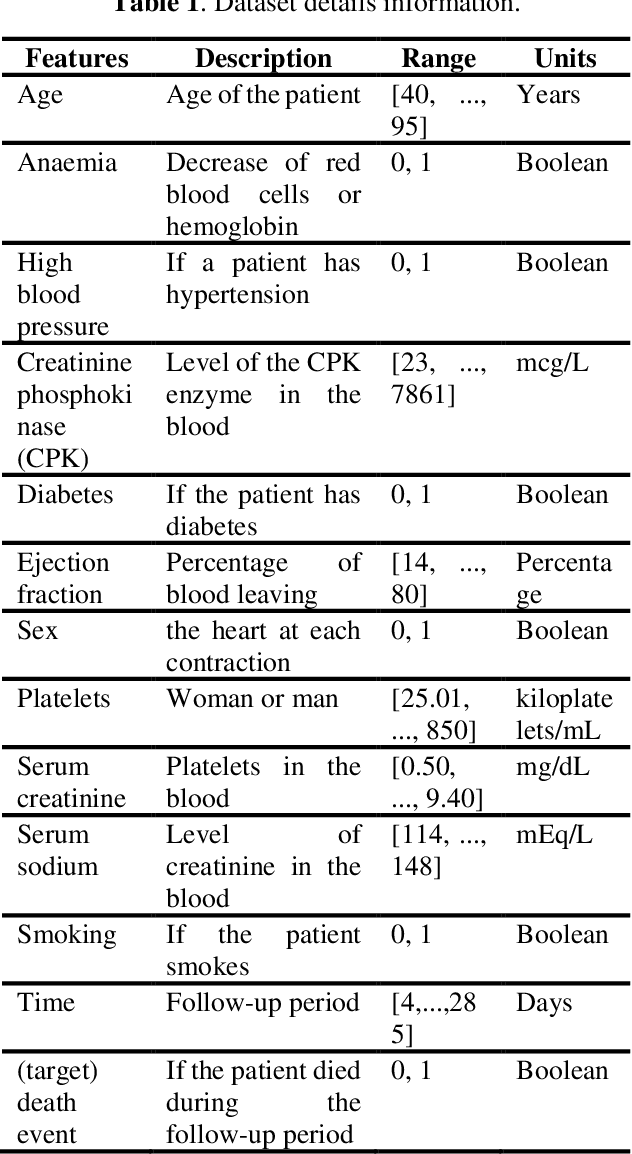

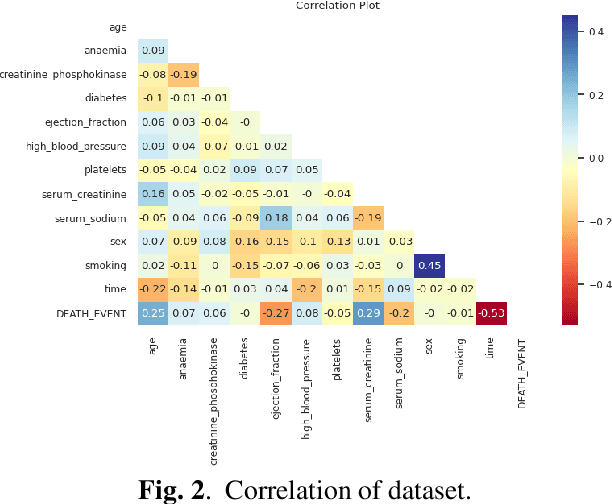

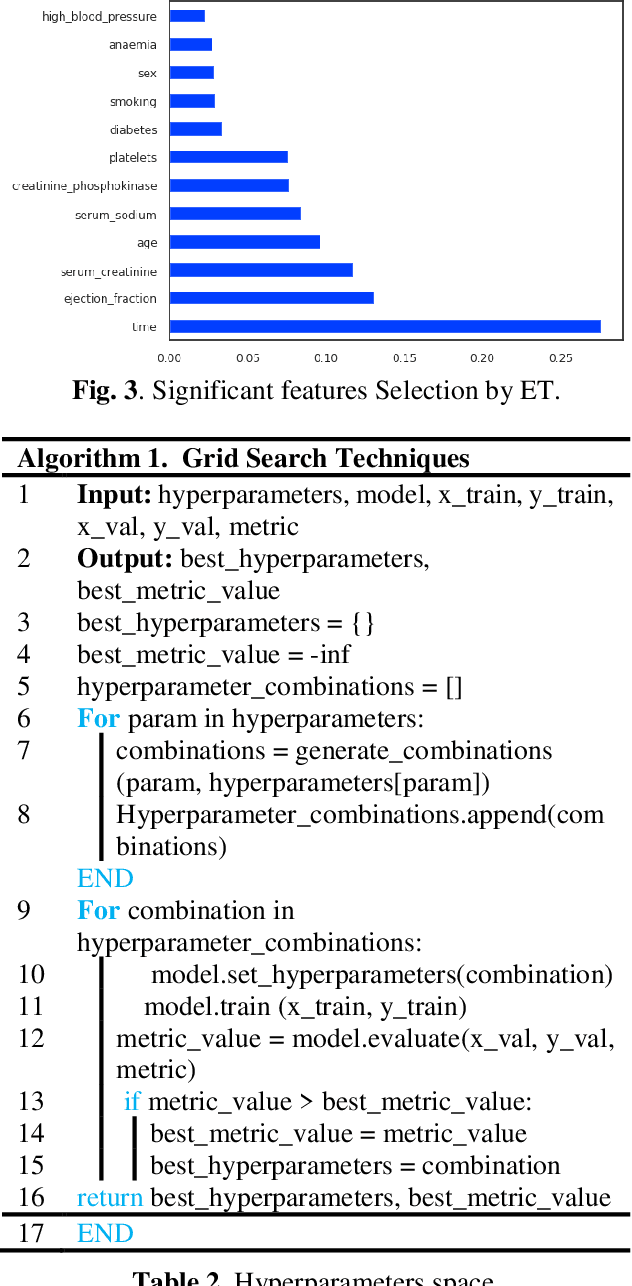

Abstract:Heart failure is a life-threatening condition that affects millions of people worldwide. The ability to accurately predict patient survival can aid in early intervention and improve patient outcomes. In this study, we explore the potential of utilizing data pre-processing techniques and the Extra-Tree (ET) feature selection method in conjunction with the Random Forest (RF) classifier to improve survival prediction in heart failure patients. By leveraging the strengths of ET feature selection, we aim to identify the most significant predictors associated with heart failure survival. Using the public UCL Heart failure (HF) survival dataset, we employ the ET feature selection algorithm to identify the most informative features. These features are then used as input for a grid search over RF hyperparameters. Finally, the tuned RF model was trained and evaluated using different metrics. The approach achieved 98.33% accuracy, the highest among existing work.

Comparative Analysis of Epileptic Seizure Prediction: Exploring Diverse Pre-Processing Techniques and Machine Learning Models

Aug 06, 2023

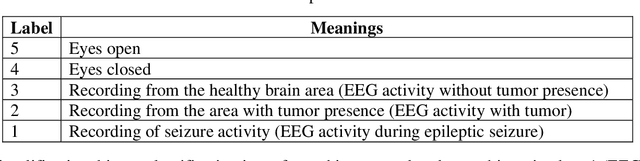

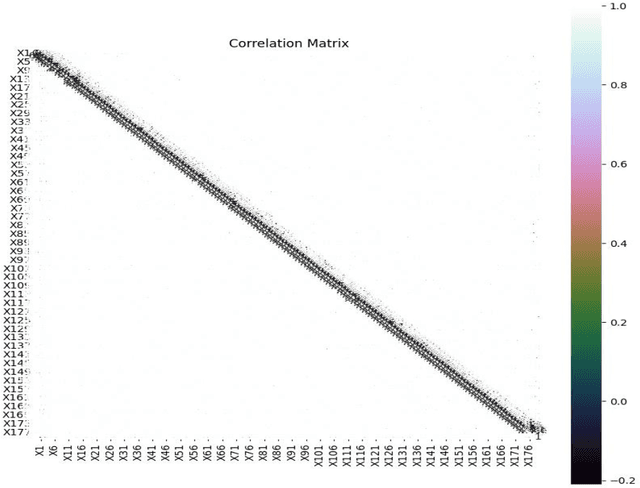

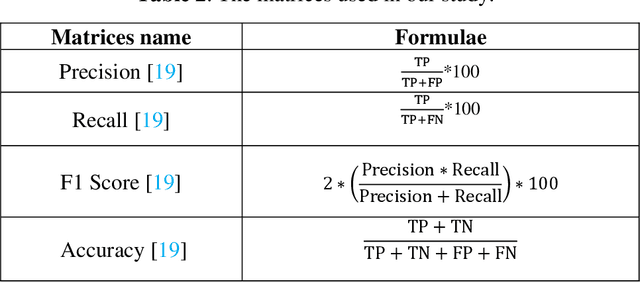

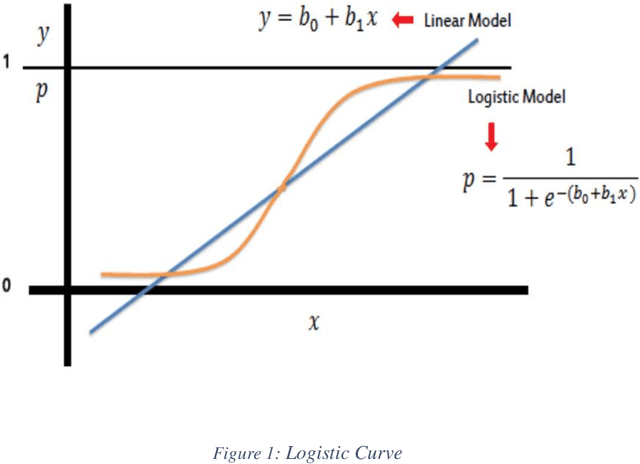

Abstract:Epilepsy is a prevalent neurological disorder characterized by recurrent and unpredictable seizures, necessitating accurate prediction for effective management and patient care. Applying machine learning (ML) to electroencephalogram (EEG) recordings, which provide valuable insights into brain activity during seizures, can make accurate and robust seizure prediction an indispensable component of relevant studies. In this research, we present a comprehensive comparative analysis of five machine learning models - Random Forest (RF), Decision Tree (DT), Extra Trees (ET), Logistic Regression (LR), and Gradient Boosting (GB) - for the prediction of epileptic seizures using EEG data. The dataset underwent meticulous preprocessing, including cleaning, normalization, outlier handling, and oversampling, ensuring data quality and facilitating accurate model training. These preprocessing techniques played a crucial role in enhancing the models' performance. The results of our analysis demonstrate the performance of each model in terms of accuracy. The LR classifier achieved an accuracy of 56.95%, while GB and DT both attained 97.17% accuracy. RF achieved a higher accuracy of 98.99%, while the ET model exhibited the best performance with an accuracy of 99.29%. Our findings reveal that the ET model not only outperformed the other models in the comparative analysis but also surpassed the state-of-the-art results from previous research. The superior performance of the ET model makes it a compelling choice for accurate and robust epileptic seizure prediction using EEG data.
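Two of the preprocessing steps listed in the abstract, normalization and oversampling, can be sketched in plain Python. The abstract does not say which variants were used, so min-max scaling and random duplication of minority-class rows are assumptions here, as are the function names and the toy feature rows.

```python
import random


def min_max_normalize(rows):
    """Scale every feature column to the range [0, 1]."""
    columns = list(zip(*rows))
    lows = [min(col) for col in columns]
    highs = [max(col) for col in columns]
    return [
        [(v - lo) / (hi - lo) if hi > lo else 0.0
         for v, lo, hi in zip(row, lows, highs)]
        for row in rows
    ]


def random_oversample(rows, labels, seed=0):
    """Duplicate random rows of smaller classes until all classes match the largest."""
    rng = random.Random(seed)
    by_class = {}
    for row, label in zip(rows, labels):
        by_class.setdefault(label, []).append(row)
    target = max(len(members) for members in by_class.values())

    out_rows, out_labels = [], []
    for label, members in by_class.items():
        extra = [rng.choice(members) for _ in range(target - len(members))]
        for row in members + extra:
            out_rows.append(row)
            out_labels.append(label)
    return out_rows, out_labels


# Hypothetical toy data: three non-seizure rows (0) and one seizure row (1).
features = [[0.0, 10.0], [2.0, 20.0], [4.0, 30.0], [1.0, 40.0]]
labels = [0, 0, 0, 1]

scaled = min_max_normalize(features)
balanced_rows, balanced_labels = random_oversample(scaled, labels)
print(balanced_labels.count(0), balanced_labels.count(1))
```

In practice, oversampling is usually applied to the training split only, so that duplicated rows do not leak into the evaluation data.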

PotatoPestNet: A CTInceptionV3-RS-Based Neural Network for Accurate Identification of Potato Pests

May 27, 2023

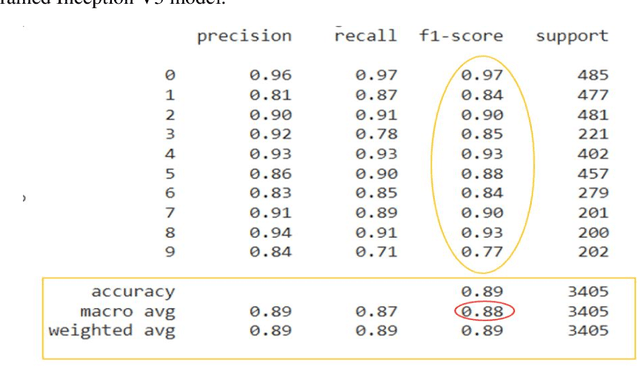

Abstract:Potatoes are the third-largest food crop globally, but their production frequently encounters difficulties because of aggressive pest infestations. The aim of this study is to investigate the various types and characteristics of these pests and propose an efficient PotatoPestNet AI-based automatic potato pest identification system. To accomplish this, we curated a reliable dataset consisting of eight types of potato pests. We leveraged the power of transfer learning by employing five customized, pre-trained transfer learning models: CMobileNetV2, CNASLargeNet, CXception, CDenseNet201, and CInceptionV3, in proposing a robust PotatoPestNet model to accurately classify potato pests. To improve the models' performance, we applied various augmentation techniques, incorporated a global average pooling layer, and implemented proper regularization methods. To further enhance the performance of the models, we utilized random search (RS) optimization for hyperparameter tuning. This optimization technique played a significant role in fine-tuning the models and achieving improved performance. We evaluated the models both visually and quantitatively, utilizing different evaluation metrics. The robustness of the models in handling imbalanced datasets was assessed using the Receiver Operating Characteristic (ROC) curve. Among the models, the Customized Tuned Inception V3 (CTInceptionV3) model, optimized through random search, demonstrated outstanding performance. It achieved the highest accuracy (91%), precision (91%), recall (91%), and F1-score (91%), showcasing its superior ability to accurately identify and classify potato pests.
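Random search, the tuning technique named in the abstract, samples hyperparameter combinations at random and keeps the best-scoring one. The sketch below is illustrative only: the search space, the parameter names, and `toy_objective` (a stand-in for validation accuracy after training) are hypothetical and are not taken from the paper.

```python
import random


def random_search(objective, space, n_trials=20, seed=0):
    """Sample n_trials random configurations and return the best one found."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        # Draw one value per hyperparameter, independently and uniformly.
        params = {name: rng.choice(values) for name, values in space.items()}
        score = objective(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical search space for a fine-tuned classification head.
space = {
    "learning_rate": [1e-4, 1e-3, 1e-2],
    "dropout": [0.2, 0.3, 0.5],
    "dense_units": [128, 256, 512],
}


def toy_objective(params):
    """Stand-in for validation accuracy; a real run would train and evaluate a model."""
    return (1.0
            - abs(params["learning_rate"] - 1e-3) * 10
            - abs(params["dropout"] - 0.3))


best_params, best_score = random_search(toy_objective, space, n_trials=30)
print(best_params, best_score)
```

Unlike grid search, the number of trials is fixed in advance and does not grow with the size of the search space, which is the usual reason for choosing it when each trial involves training a deep network.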

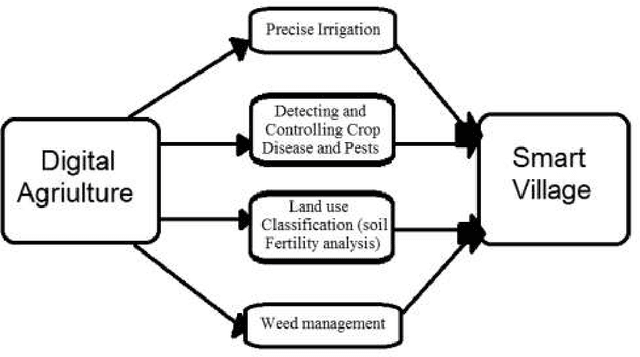

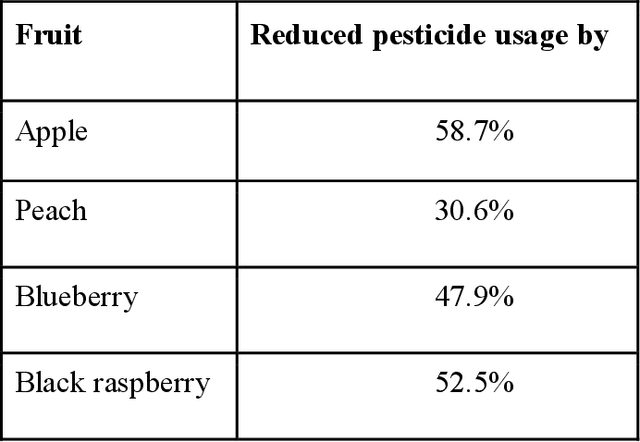

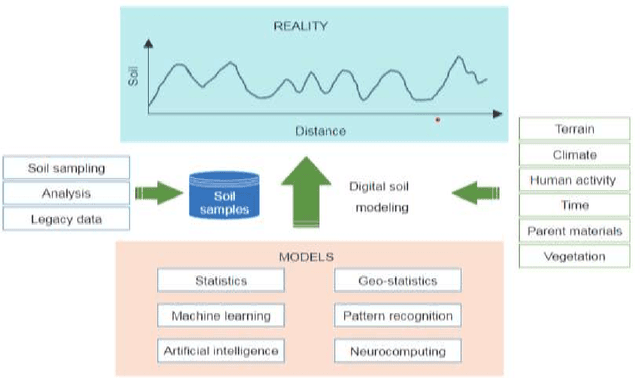

The Role of Digital Agriculture in Transforming Rural Areas into Smart Villages

Jan 07, 2023

Abstract:From the perspective of any nation, rural areas generally present a comparable set of problems, such as a lack of proper health care, education, living conditions, wages, and market opportunities. During the previous few decades, some nations have created and developed the concept of smart villages, which effectively addresses these issues. Digital agriculture has radically altered the landscape of traditional agriculture and has had a positive economic impact on farmers and others who live in rural regions by increasing agricultural production. In this chapter, we explore current issues in rural areas and the consequences of smart village applications, and then illustrate our concept of a smart village using recent examples of how emerging digital agriculture trends contribute to improving agricultural production.

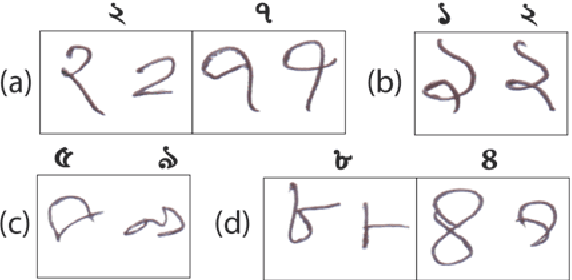

Efficient approach of using CNN based pretrained model in Bangla handwritten digit recognition

Sep 19, 2022

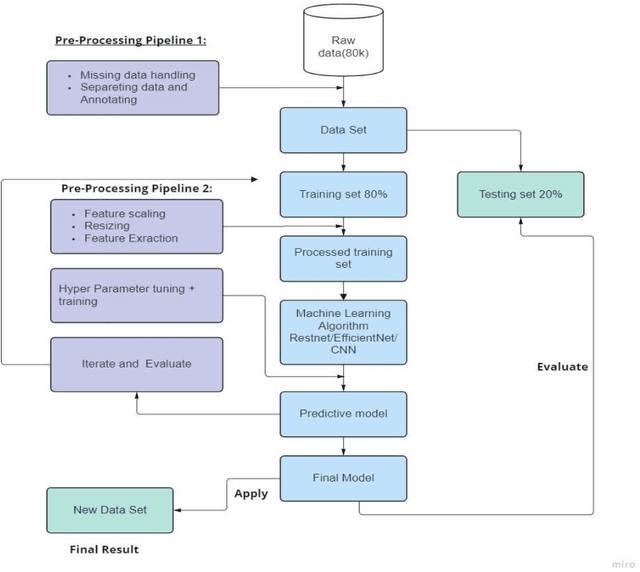

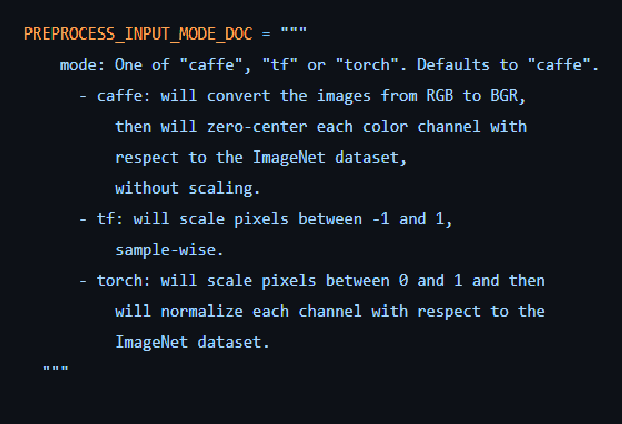

Abstract:Due to digitalization in everyday life, the need for automatically recognizing handwritten digits is increasing. Handwritten digit recognition is essential for numerous applications in various industries. Bengali ranks as the fifth largest language in the world, with 265 million speakers (native and non-native combined), and 4 percent of the world population speaks Bengali. Due to the complexity of Bengali writing in terms of variety in shape, size, and writing style, researchers have not yet achieved high accuracy using supervised machine learning algorithms. Moreover, few studies have been done on Bangla handwritten digit recognition (BHwDR). In this paper, we propose a novel handwritten digit recognition approach based on pre-trained CNN models, including ResNet-50, Inception-v3, and EfficientNetB0, on the NumtaDB dataset of 17 thousand instances with 10 classes. The results outperform other models to date, with 97% accuracy across the 10 digit classes. Furthermore, we compare the results of our model with other research studies and suggest directions for future study.

A novel approach to increase scalability while training machine learning algorithms using Bfloat 16 in credit card fraud detection

Jun 24, 2022

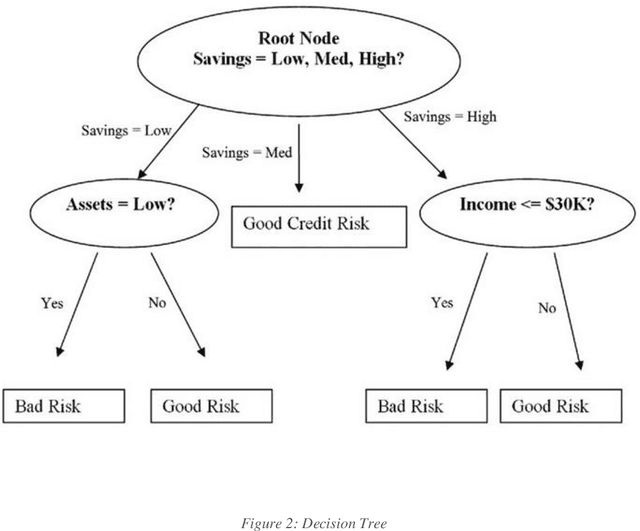

Abstract:The use of credit cards has become quite common these days as digital banking has become the norm. With this increase, credit card fraud has also become a huge problem and a source of loss for banks and customers alike. Conventional fraud detection systems are not able to detect fraud, since fraudsters keep emerging with new techniques. This creates the need for machine learning-based software to detect fraud. Currently available machine learning software focuses only on the accuracy of fraud detection and does not consider the cost or time needed to detect it. This research focuses on machine learning scalability for banks' credit card fraud detection systems. We have compared the existing machine learning algorithms and methods with the newly proposed technique. The goal is to show that using fewer bits for training a machine learning algorithm results in a more scalable system that reduces training time and is less costly to implement.
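The "fewer bits" idea behind Bfloat16 can be illustrated in plain Python: a bfloat16 value keeps the sign bit, the 8 exponent bits, and the top 7 mantissa bits of a 32-bit float, halving storage per value. This is a minimal sketch of the number format only, not the paper's training pipeline; the helper names are assumptions, and NaN and overflow handling are deliberately left out.

```python
import struct


def to_bfloat16_bits(x):
    """Round a float to bfloat16 and return its 16-bit pattern (NaN not handled)."""
    bits32 = struct.unpack("<I", struct.pack("<f", x))[0]
    # Round to nearest, ties to even, on the 16 low bits that are dropped.
    rounding_bias = 0x7FFF + ((bits32 >> 16) & 1)
    return ((bits32 + rounding_bias) >> 16) & 0xFFFF


def from_bfloat16_bits(bits16):
    """Expand a 16-bit bfloat16 pattern back to a Python float."""
    return struct.unpack("<f", struct.pack("<I", bits16 << 16))[0]


# Hypothetical transaction amount used only to show the precision loss.
amount = 3.14159
stored = to_bfloat16_bits(amount)
restored = from_bfloat16_bits(stored)
print(hex(stored), restored)
```

The restored value keeps the full float32 exponent range but only about two to three significant decimal digits, which is the trade-off between memory or bandwidth and precision that the abstract's scalability argument rests on.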

Efficiency Comparison of AI classification algorithms for Image Detection and Recognition in Real-time

Jun 12, 2022

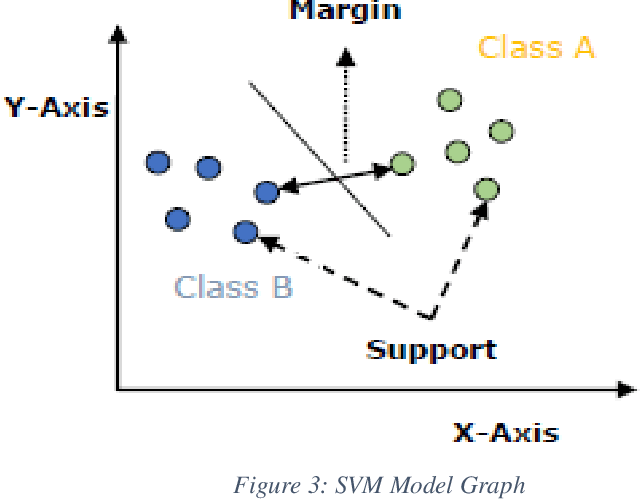

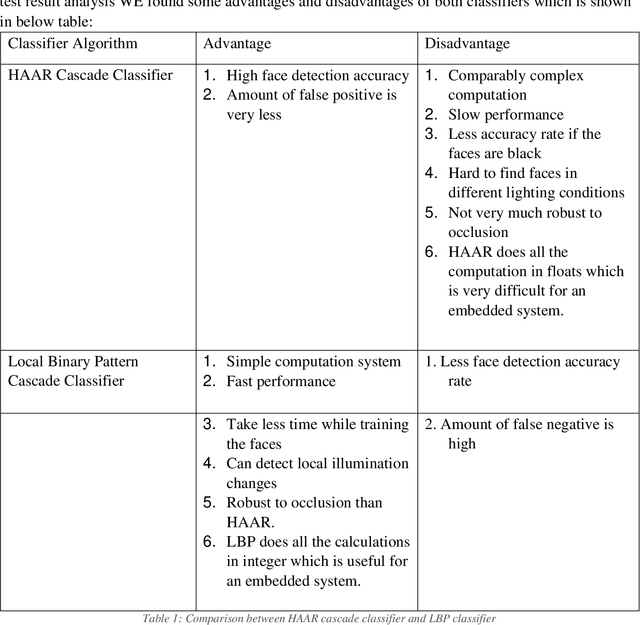

Abstract:Face detection and identification is one of the most difficult and frequently used tasks in Artificial Intelligence systems. The goal of this study is to present and compare the results of several face detection and recognition algorithms used in the system. The system begins with a training image of a human, then moves on to the test image, identifying the face, comparing it to the trained face, and finally classifying it using OpenCV classifiers. This research discusses the most effective and successful techniques used in the system, which are implemented using Python, OpenCV, and Matplotlib. The system may also be used in locations with CCTV, such as public spaces, shopping malls, and ATM booths.
