Rebeca P. Díaz-Redondo

Continual Learning at the Edge: An Agnostic IIoT Architecture

Dec 16, 2025

Abstract: The exponential growth of Internet-connected devices has presented challenges to traditional centralized computing systems due to latency and bandwidth limitations. Edge computing has evolved to address these difficulties by bringing computations closer to the data source. Additionally, traditional machine learning algorithms are not suitable for edge-computing systems, where data usually arrives in a dynamic and continual way. However, incremental learning offers a good solution for these settings. We introduce a new approach that applies the incremental learning philosophy within an edge-computing scenario for the industrial sector with a specific purpose: real-time quality control in a manufacturing system. By applying continual learning, we reduce the impact of catastrophic forgetting and provide an efficient and effective solution.

Realistic Urban Traffic Generator using Decentralized Federated Learning for the SUMO simulator

Jun 09, 2025

Abstract: Realistic urban traffic simulation is essential for sustainable urban planning and the development of intelligent transportation systems. However, generating high-fidelity, time-varying traffic profiles that accurately reflect real-world conditions, especially in large-scale scenarios, remains a major challenge. Existing methods often suffer from limited accuracy or scalability, or raise privacy concerns due to centralized data processing. This work introduces DesRUTGe (Decentralized Realistic Urban Traffic Generator), a novel framework that integrates Deep Reinforcement Learning (DRL) agents with the SUMO simulator to generate realistic 24-hour traffic patterns. A key innovation of DesRUTGe is its use of Decentralized Federated Learning (DFL), wherein each traffic detector and its corresponding urban zone function as an independent learning node. These nodes train local DRL models using minimal historical data and collaboratively refine their performance by exchanging model parameters with selected peers (e.g., geographically adjacent zones), without requiring a central coordinator. Evaluated using real-world data from the city of Barcelona, DesRUTGe outperforms standard SUMO-based tools such as RouteSampler, as well as other centralized learning approaches, by delivering more accurate and privacy-preserving traffic pattern generation.
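The peer-to-peer parameter exchange described in this abstract can be illustrated with a minimal sketch. It assumes plain synchronous neighbor averaging over flat parameter lists; the abstract does not specify DesRUTGe's actual aggregation rule, and the function name `neighbor_average_round`, the zone labels, and the topology below are all hypothetical.

```python
def neighbor_average_round(params, neighbors):
    """One synchronous round of decentralized averaging (illustrative only).

    params:    dict mapping node id -> list of floats (local model parameters)
    neighbors: dict mapping node id -> list of peer ids it exchanges with
    Each node replaces its parameters with the mean of its own parameters
    and those of its selected peers. No central coordinator is involved.
    """
    updated = {}
    for node, own in params.items():
        # Collect the node's own parameters plus those of its peers,
        # always reading from the pre-round values in `params`.
        group = [own] + [params[peer] for peer in neighbors.get(node, [])]
        updated[node] = [sum(values) / len(group) for values in zip(*group)]
    return updated


# Hypothetical three-zone chain: A - B - C (only adjacent zones exchange).
params = {"A": [0.0], "B": [3.0], "C": [6.0]}
neighbors = {"A": ["B"], "B": ["A", "C"], "C": ["B"]}
after_one_round = neighbor_average_round(params, neighbors)
```

Repeating such rounds pulls the nodes' parameters toward each other while each node only ever talks to its chosen peers.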

CO-DEFEND: Continuous Decentralized Federated Learning for Secure DoH-Based Threat Detection

Apr 02, 2025

Abstract: The use of DNS over HTTPS (DoH) tunneling by an attacker to hide malicious activity within encrypted DNS traffic poses a serious threat to network security, as it allows malicious actors to bypass traditional monitoring and intrusion detection systems while evading detection by conventional traffic analysis techniques. Machine Learning (ML) techniques can be used to detect DoH tunnels; however, their effectiveness relies on large datasets containing both benign and malicious traffic. Sharing such datasets across entities is challenging due to privacy concerns. In this work, we propose CO-DEFEND (Continuous Decentralized Federated Learning for Secure DoH-Based Threat Detection), a Decentralized Federated Learning (DFL) framework that enables multiple entities to collaboratively train a classification machine learning model while preserving data privacy and enhancing resilience against single points of failure. The proposed DFL framework, which is scalable and privacy-preserving, is based on a federation process that allows multiple entities to train their local models online, using incoming DoH flows in real time as they are processed by the entity. In addition, we adapt four classical machine learning algorithms, Support Vector Machines (SVM), Logistic Regression (LR), Decision Trees (DT), and Random Forest (RF), for federated scenarios, comparing their results with more computationally complex alternatives such as neural networks. Using the CIRA-CIC-DoHBrw-2020 dataset, we compare our proposed method with existing machine learning approaches to demonstrate its effectiveness in detecting malicious DoH tunnels and the benefits it brings.

Towards efficient compression and communication for prototype-based decentralized learning

Nov 14, 2024

Abstract: In prototype-based federated learning, the exchange of model parameters between clients and the master server is replaced by transmission of prototypes or quantized versions of the data samples to the aggregation server. A fully decentralized deployment of prototype-based learning, without a central aggregator of prototypes, is more robust to network failures and reacts faster to changes in the statistical distribution of the data, suggesting potential advantages and quick adaptation in dynamic learning tasks, e.g., when the data sources are IoT devices or when data is non-IID. In this paper, we consider the problem of designing a communication-efficient decentralized learning system based on prototypes. We address the challenge of prototype redundancy by leveraging a twofold data compression technique, i.e., sending update messages only if the prototypes are information-theoretically useful (via the Jensen-Shannon distance), and using clustering on the prototypes to compress the update messages used in the gossip protocol. We also use parallel instead of sequential gossiping, and present an analysis of its age-of-information (AoI). Our experimental results show that, with these improvements, the communications load can be substantially reduced without decreasing the convergence rate of the learning algorithm.
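The idea of sending an update only when it is information-theoretically useful can be sketched as a Jensen-Shannon distance gate. This is a minimal illustration, not the paper's implementation: it assumes each node summarizes its prototypes as a histogram of non-negative counts, and the function names and the `threshold` value are hypothetical.

```python
import math


def kl_divergence(p, q):
    """Kullback-Leibler divergence in bits; terms with p_i == 0 contribute 0."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_distance(p, q):
    """Jensen-Shannon distance (square root of the JS divergence, base 2).

    Inputs are non-negative weights of equal length; they are normalized
    to probability distributions first. The result lies in [0, 1].
    """
    total_p, total_q = sum(p), sum(q)
    p = [x / total_p for x in p]
    q = [x / total_q for x in q]
    mixture = [(a + b) / 2 for a, b in zip(p, q)]
    divergence = 0.5 * kl_divergence(p, mixture) + 0.5 * kl_divergence(q, mixture)
    return math.sqrt(max(divergence, 0.0))  # guard against tiny negative rounding


def should_send_update(last_sent, current, threshold=0.1):
    """Send only if the current summary differs enough from the last one sent."""
    return js_distance(last_sent, current) > threshold
```

With this gate, a node whose prototype summary has barely changed since its last message stays silent, which is the kind of redundancy the compression scheme targets.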

Byzantine-Robust Aggregation for Securing Decentralized Federated Learning

Sep 26, 2024

Abstract: Federated Learning (FL) emerges as a distributed machine learning approach that addresses privacy concerns by training AI models locally on devices. Decentralized Federated Learning (DFL) extends the FL paradigm by eliminating the central server, thereby enhancing scalability and robustness through the avoidance of a single point of failure. However, DFL faces significant challenges in optimizing security, as most Byzantine-robust algorithms proposed in the literature are designed for centralized scenarios. In this paper, we present a novel Byzantine-robust aggregation algorithm, coined WFAgg, to enhance the security of Decentralized Federated Learning environments. This proposal simultaneously handles adverse conditions and strengthens the robustness of dynamic decentralized topologies by employing multiple filters to identify and mitigate Byzantine attacks. Experimental results demonstrate the effectiveness of the proposed algorithm in maintaining model accuracy and convergence in the presence of various Byzantine attack scenarios, outperforming state-of-the-art centralized Byzantine-robust aggregation schemes (such as Multi-Krum or Clustering). These algorithms are evaluated on an IID image classification problem in both centralized and decentralized scenarios.
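For context on the baselines named in this abstract, the following is a minimal sketch of Multi-Krum, one of the centralized Byzantine-robust schemes WFAgg is compared against. It is not WFAgg, whose filters are not described here. The sketch assumes each update is a flat list of floats; the parameter names `num_byzantine` and `num_selected` are illustrative.

```python
def squared_distance(u, v):
    """Squared Euclidean distance between two flat parameter vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def multi_krum(updates, num_byzantine, num_selected):
    """Average the `num_selected` updates with the lowest Krum scores.

    The Krum score of an update is the sum of squared distances to its
    n - f - 2 nearest other updates, where n is the number of updates and
    f is the assumed number of Byzantine ones. Outliers get large scores.
    """
    n = len(updates)
    k = n - num_byzantine - 2
    if k < 1:
        raise ValueError("need at least num_byzantine + 3 updates")
    scores = []
    for i, u in enumerate(updates):
        dists = sorted(squared_distance(u, v)
                       for j, v in enumerate(updates) if j != i)
        scores.append(sum(dists[:k]))
    # Keep the indices of the best-scoring updates and average them.
    chosen = sorted(range(n), key=scores.__getitem__)[:num_selected]
    dim = len(updates[0])
    return [sum(updates[i][d] for i in chosen) / len(chosen) for d in range(dim)]


# Four similar updates and one obvious outlier (made-up values).
updates = [[1.0, 1.0], [1.1, 0.9], [0.9, 1.1], [1.0, 1.0], [100.0, -100.0]]
aggregate = multi_krum(updates, num_byzantine=1, num_selected=3)
```

In this toy input the outlier receives a very large score and is excluded, so the aggregate stays close to the honest updates.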

Overcoming Catastrophic Forgetting in Tabular Data Classification: A Pseudorehearsal-based approach

Jul 12, 2024

Abstract: Continual learning (CL) poses the important challenge of adapting to evolving data distributions without forgetting previously acquired knowledge while consolidating new knowledge. In this paper, we introduce a new methodology, coined Tabular-data Rehearsal-based Incremental Lifelong Learning framework (TRIL3), designed to address the phenomenon of catastrophic forgetting in tabular data classification problems. TRIL3 uses the prototype-based incremental generative model XuILVQ to generate synthetic data to preserve old knowledge and the DNDF algorithm, which was modified to run in an incremental way, to learn classification tasks for tabular data, without storing old samples. After different tests to obtain the adequate percentage of synthetic data and to compare TRIL3 with other available CL proposals, we can conclude that TRIL3 outperforms other options in the literature using only 50% of synthetic data.

Decentralised and collaborative machine learning framework for IoT

Dec 19, 2023

Abstract: Decentralised machine learning has recently been proposed as a potential solution to the security issues of the canonical federated learning approach. In this paper, we propose a decentralised and collaborative machine learning framework specially oriented to resource-constrained devices, which are common in IoT deployments. With this aim we propose the following building blocks. First, an incremental learning algorithm based on prototypes that was specifically implemented to work in low-performance computing elements. Second, two random-based protocols to exchange the local models among the computing elements in the network. Finally, two algorithmic approaches for prediction and prototype creation. This proposal was compared to a typical centralized incremental learning approach in terms of accuracy, training time and robustness with very promising results.

Discovering Geo-dependent Stories by Combining Density-based Clustering and Thread-based Aggregation techniques

Dec 18, 2023

Abstract: Citizens are actively interacting with their surroundings, especially through social media. Not only do shared posts give important information about what is happening (from the users' perspective), but also the metadata linked to these posts offer relevant data, such as the GPS-location in Location-based Social Networks (LBSNs). In this paper we introduce a global analysis of the geo-tagged posts in social media which supports (i) the detection of unexpected behavior in the city and (ii) the analysis of the posts to infer what is happening. The former is obtained by applying density-based clustering techniques, whereas the latter is a consequence of applying natural language processing. We have applied our methodology to a dataset obtained from Instagram activity in New York City for seven months, obtaining promising results. The developed algorithms require very low resources, being able to analyze millions of data-points on commodity hardware in less than one hour without applying complex parallelization techniques. Furthermore, the solution can be easily adapted to other geo-tagged data sources without extra effort.

* 11 pages, 12 figures, journal

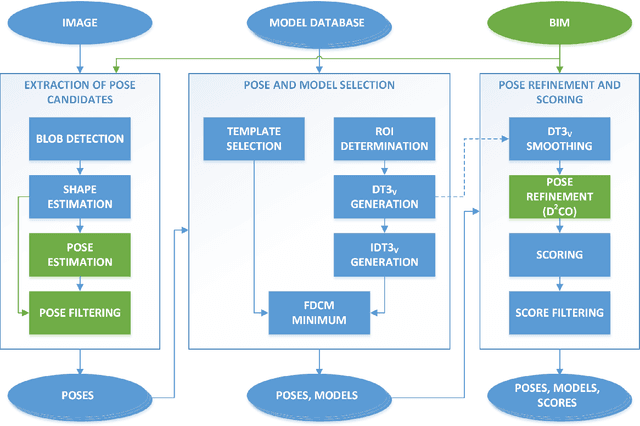

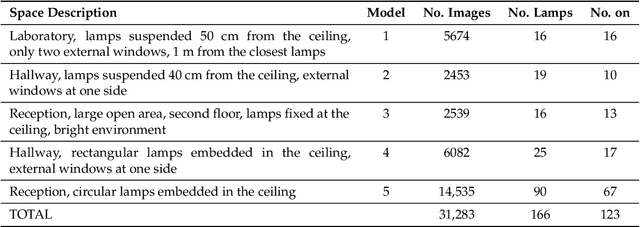

Use of BIM Data as Input and Output for Improved Detection of Lighting Elements in Buildings

Dec 18, 2023

Abstract: This paper introduces a complete method for the automatic detection, identification and localization of lighting elements in buildings, leveraging the available building information modeling (BIM) data of a building and feeding the BIM model with the new collected information, which is key for energy-saving strategies. The detection system is substantially improved over our previous work, with the following two main contributions: (i) a new refinement algorithm to provide a better detection rate and identification performance with comparable computational resources and (ii) a new plane estimation, filtering and projection step to leverage the BIM information earlier for lamps that are both hanging and embedded. The two modifications are thoroughly tested in five different case studies, yielding better results in terms of detection, identification and localization.

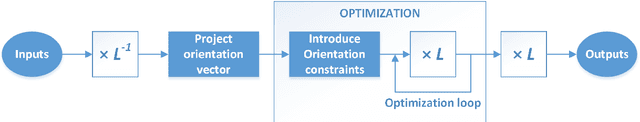

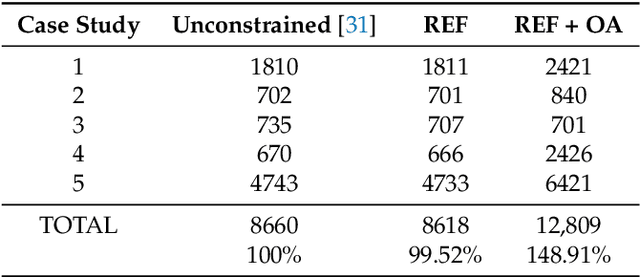

Orientation-Constrained System for Lamp Detection in Buildings Based on Computer Vision

Dec 18, 2023

Abstract: Computer vision is used in this work to detect lighting elements in buildings with the goal of improving the accuracy of previous methods to provide a precise inventory of the location and state of lamps. Using the framework developed in our previous works, we introduce two new modifications to enhance the system: first, a constraint on the orientation of the detected poses in the optimization methods for both the initial and the refined estimates based on the geometric information of the building information modelling (BIM) model; second, an additional reprojection error filtering step to discard the erroneous poses introduced with the orientation restrictions, keeping the identification and localization errors low while greatly increasing the number of detections. These enhancements are tested in five different case studies with more than 30,000 images, with results showing improvements in the number of detections, the percentage of correct model and state identifications, and the distance between detections and reference positions.
