Ranran Huang

Cross-layer Attention Network for Fine-grained Visual Categorization

Oct 17, 2022

Abstract: Learning discriminative representations for subtle localized details plays a significant role in Fine-grained Visual Categorization (FGVC). Compared to previous attention-based works, our work does not explicitly define or localize the part regions of interest; instead, we leverage the complementary properties of different stages of the network and build a mutual refinement mechanism between the mid-level feature maps and the top-level feature map through our proposed Cross-layer Attention Network (CLAN). Specifically, CLAN is composed of 1) the Cross-layer Context Attention (CLCA) module, which enhances the global context information in the intermediate feature maps with the help of the top-level feature map, thereby improving the expressive power of the middle layers, and 2) the Cross-layer Spatial Attention (CLSA) module, which takes advantage of the local attention in the mid-level feature maps to boost the feature extraction of local regions in the top-level feature map. Experimental results show our approach achieves state-of-the-art results on three publicly available fine-grained recognition datasets (CUB-200-2011, Stanford Cars and FGVC-Aircraft). Ablation studies and visualizations are provided to help understand our approach.

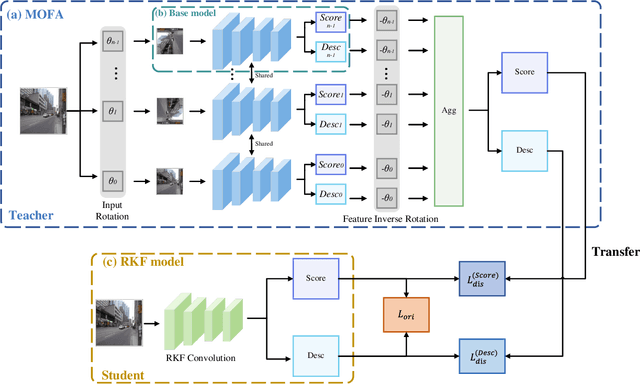

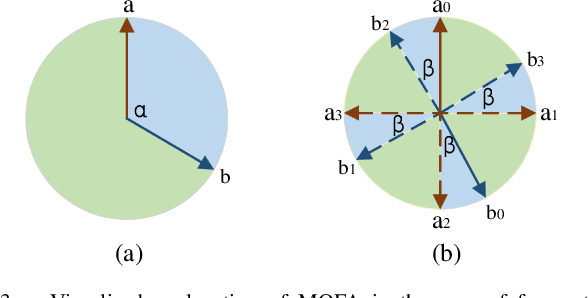

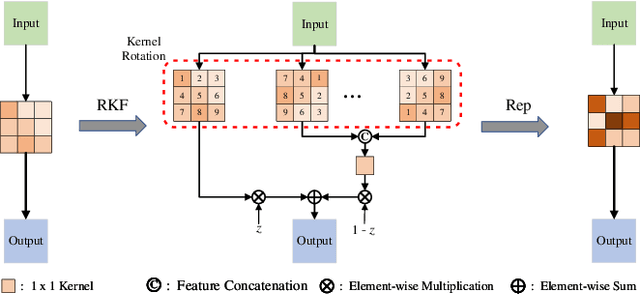

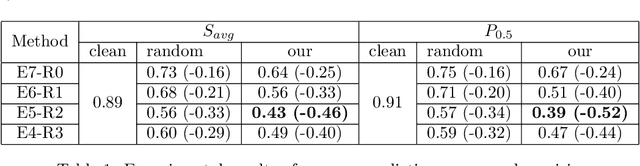

DRKF: Distilled Rotated Kernel Fusion for Efficiently Boosting Rotation Invariance in Image Matching

Sep 22, 2022

Abstract: Most existing learning-based image matching pipelines are designed for better feature detectors and descriptors that are robust to repeated textures, viewpoint changes, etc., while little attention has been paid to rotation invariance. As a consequence, these approaches usually perform worse than handcrafted algorithms when the data contains significant rotation, due to the lack of keypoint orientation prediction. To address the issue efficiently, an approach based on knowledge distillation is proposed for improving rotation robustness without extra computational cost. Specifically, building on the base model, we propose Multi-Oriented Feature Aggregation (MOFA), which is subsequently adopted as the teacher in the distillation pipeline. Moreover, Rotated Kernel Fusion (RKF) is applied to each convolution kernel of the student model to facilitate learning rotation-invariant features. Finally, experiments show that our proposals generalize successfully under various rotations without additional cost at the inference stage.
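The abstract does not say how RKF combines rotated kernels, so the following is only a rough sketch of one plausible reading: average a square kernel with its 90-degree rotations so that a single fused kernel is used at inference. The function names `rot90` and `fuse_rotated`, the choice of four right-angle rotations, and the use of a plain average are all assumptions made for illustration, not the paper's definition.

```python
def rot90(kernel):
    """Rotate a square 2-D kernel (list of rows) 90 degrees counter-clockwise."""
    n = len(kernel)
    return [[kernel[c][n - 1 - r] for c in range(n)] for r in range(n)]


def fuse_rotated(kernel):
    """Average a kernel with its 90/180/270-degree rotations.

    Assumption: fusion is a plain mean over four right-angle rotations.
    The fused kernel has the same shape as the input, so using it in a
    convolution costs no more than using the original kernel.
    """
    n = len(kernel)
    fused = [[0.0] * n for _ in range(n)]
    rotated = kernel
    for _ in range(4):
        for r in range(n):
            for c in range(n):
                fused[r][c] += rotated[r][c] / 4.0
        rotated = rot90(rotated)
    return fused


if __name__ == "__main__":
    k = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
    for row in fuse_rotated(k):
        print(row)
```

Under these assumptions the fused kernel is unchanged by a further 90-degree rotation, which is the sense in which such a kernel can be called rotation-invariant; invariance to arbitrary angles would need interpolation and is not covered here.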

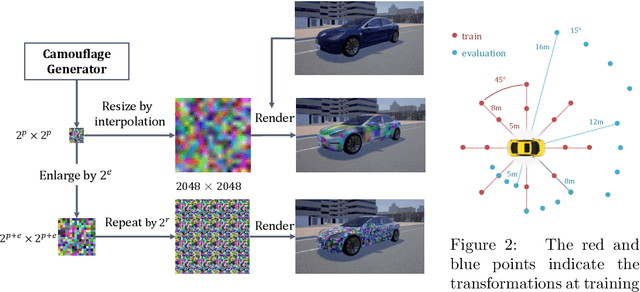

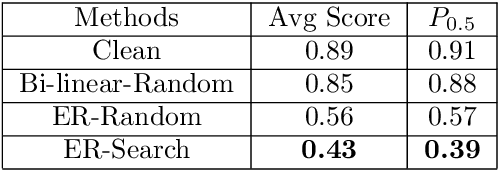

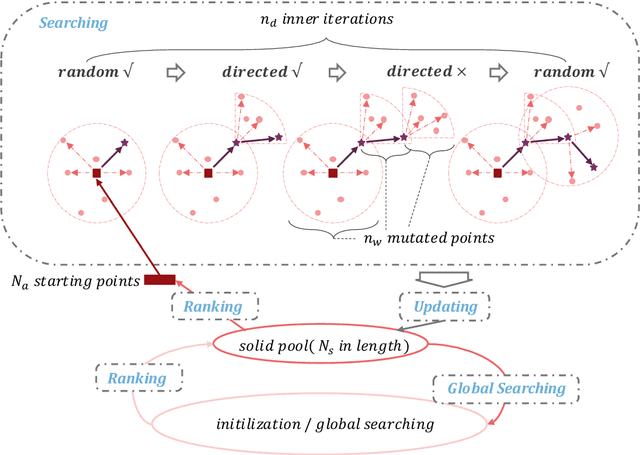

Physical Adversarial Attack on Vehicle Detector in the Carla Simulator

Aug 07, 2020

Abstract: In this paper, we tackle the issue of physical adversarial examples for object detectors in the wild. Specifically, we propose to generate adversarial patterns to be applied to a vehicle's surface so that it is not recognizable by detectors in the photo-realistic Carla simulator. Our approach contains two main techniques, an Enlarge-and-Repeat process and a Discrete Searching method, to craft mosaic-like adversarial vehicle textures without access to either the model weights of the detector or a differentiable rendering procedure. The experimental results demonstrate the effectiveness of our approach in the simulator.
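The abstract names the two techniques without giving details, so the sketch below only illustrates the general idea of a gradient-free search over a mosaic texture. It assumes that "Enlarge-and-Repeat" means upscaling each cell of a small pattern into a block and tiling the result, and that "Discrete Searching" can be approximated by changing one cell at a time and keeping the change only if a black-box score drops. The names `enlarge_and_repeat`, `discrete_search`, and `score_fn` are hypothetical; `score_fn` stands in for rendering the texture in the simulator and reading back the detector's confidence.

```python
import random


def enlarge_and_repeat(pattern, scale, repeats):
    """Upscale each cell to a scale x scale block, then tile the result
    repeats x repeats times (assumed meaning of Enlarge-and-Repeat)."""
    enlarged = []
    for row in pattern:
        wide = [cell for cell in row for _ in range(scale)]
        enlarged.extend(list(wide) for _ in range(scale))
    tiled_rows = [row * repeats for row in enlarged]
    return [list(row) for _ in range(repeats) for row in tiled_rows]


def discrete_search(score_fn, size, palette, steps, seed=0):
    """Greedy search over a size x size grid of palette entries.

    Only score_fn(pattern) is queried, so no detector weights and no
    gradients through the renderer are required. Lower score is better.
    """
    rng = random.Random(seed)
    pattern = [[rng.choice(palette) for _ in range(size)] for _ in range(size)]
    best = score_fn(pattern)
    for _ in range(steps):
        r, c = rng.randrange(size), rng.randrange(size)
        old = pattern[r][c]
        pattern[r][c] = rng.choice(palette)
        new = score_fn(pattern)
        if new < best:
            best = new            # keep the improving change
        else:
            pattern[r][c] = old   # revert otherwise
    return pattern, best


def toy_score(pattern):
    """Toy stand-in for detector confidence: the count of cells equal to 1."""
    return sum(cell == 1 for row in pattern for cell in row)


if __name__ == "__main__":
    pattern, score = discrete_search(toy_score, size=4, palette=[0, 1, 2], steps=200)
    texture = enlarge_and_repeat(pattern, scale=2, repeats=3)
    print(score, len(texture), len(texture[0]))
```

In a real setup each call to `score_fn` would be expensive, since it involves a simulator render and a detector query, so the number of steps and the size of the searched pattern would need to be kept small; searching a small pattern and then enlarging and repeating it is one way to keep that search space manageable.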
