Randall Ali

MoD-ART: Modal Decomposition of Acoustic Radiance Transfer

Dec 05, 2024

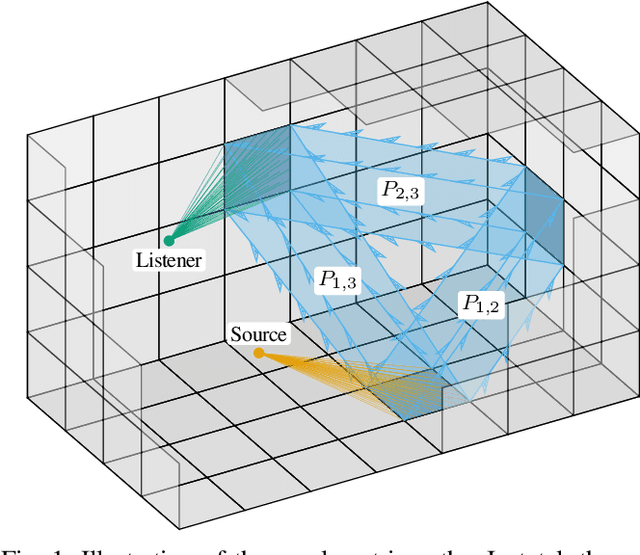

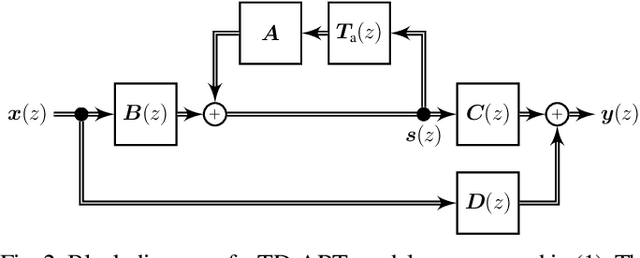

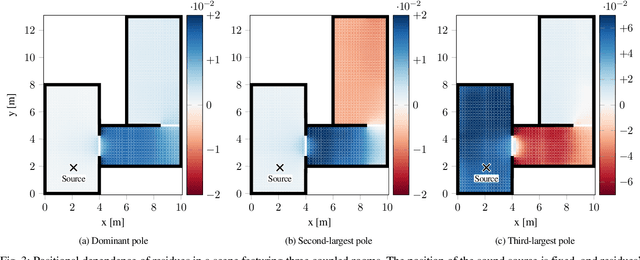

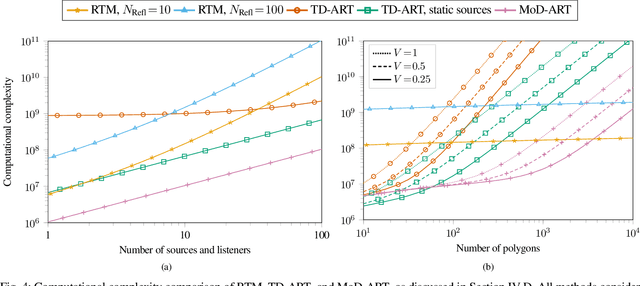

Abstract: Modeling late reverberation at interactive speeds is a challenging task when multiple sound sources and listeners are present in the same environment. This is especially problematic when the environment is geometrically complex and/or features uneven energy absorption (e.g. coupled volumes), because in such cases the late reverberation is dependent on the sound sources' and listeners' positions, and therefore must be adapted to their movements in real time. We present a novel approach to the task, named modal decomposition of Acoustic Radiance Transfer (MoD-ART), which can handle highly complex scenarios with efficiency. The approach is based on the geometrical acoustics method of Acoustic Radiance Transfer, from which we extract a set of energy decay modes and their positional relationships with sources and listeners. In this paper, we describe the physical and mathematical meaningfulness of MoD-ART, highlighting its advantages and applicability to different scenarios. Through an analysis of the method's computational complexity, we show that it compares very favourably with ray-tracing. We also present simulation results showing that MoD-ART can capture multiple decay slopes and flutter echoes.
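The idea of extracting energy decay modes from a transfer operator can be illustrated with a toy sketch. This is not the MoD-ART implementation: it assumes a hypothetical 2x2 energy transfer matrix `A` between two weakly coupled volumes, with every exchange taking one time step `dt`, whereas the actual method works on the full Acoustic Radiance Transfer model. Under that assumption, each eigenvalue of `A` scales the energy of one mode per step, which maps to a 60 dB decay time.

```python
import numpy as np

def decay_modes(transfer, dt):
    """Return the 60 dB decay time (seconds) of each mode, slowest first.

    Assumes energy evolves as e[n+1] = transfer @ e[n] with step dt, and that
    all eigenvalue magnitudes lie strictly between 0 and 1.
    """
    magnitudes = np.abs(np.linalg.eigvals(transfer))
    magnitudes = magnitudes[magnitudes > 1e-12]   # drop modes that vanish at once
    db_per_step = 10.0 * np.log10(magnitudes)     # energy change per step, in dB
    t60 = -60.0 * dt / db_per_step
    return np.sort(t60)[::-1]

# Hypothetical example: a reverberant volume weakly coupled to a damped one.
A = np.array([[0.90, 0.02],
              [0.02, 0.60]])
t60 = decay_modes(A, dt=0.01)
```

The two entries of `t60` differ markedly, which is the toy counterpart of the multiple decay slopes mentioned in the abstract.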

State-Space Estimation of Spatially Dynamic Room Impulse Responses using a Room Acoustic Model-based Prior

Nov 13, 2024

Abstract: The estimation of room impulse responses (RIRs) between static loudspeaker and microphone locations can be done using a number of well-established measurement and inference procedures. While these procedures assume a time-invariant acoustic system, time variations need to be considered for the case of spatially dynamic scenarios where loudspeakers and microphones are subject to movement. If the RIR is modeled using image sources, then movement implies that the distance to each image source varies over time, making the estimation of the spatially dynamic RIR particularly challenging. In this paper, we propose a procedure to estimate the early part of the spatially dynamic RIR between a stationary source and a microphone moving on a linear trajectory at constant velocity. The procedure is built upon a state-space model, where the state to be estimated represents the early RIR, the observation corresponds to a microphone recording in a spatially dynamic scenario, and time-varying distances to the image sources are incorporated into the state transition matrix obtained from static RIRs at the start and end point of the trajectory. The performance of the proposed approach is evaluated against state-of-the-art RIR interpolation and state-space estimation methods using simulations, demonstrating the potential of the proposed state-space model.

* 30 pages, 13 figures
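The state-space formulation described in this abstract can be sketched with a generic Kalman filter, in which the state is the RIR and each microphone sample is a scalar observation. This is a minimal illustration, not the authors' procedure: the transition matrix `A` is set to the identity as a placeholder (the paper derives it from image-source distances and static RIRs), and the names `kalman_rir_step`, `Q`, and `r` are assumptions introduced here.

```python
import numpy as np

def kalman_rir_step(h, P, A, Q, x, y, r):
    """One predict/update step for a scalar observation y = x @ h + noise."""
    # Predict: propagate the RIR estimate and its covariance.
    h_pred = A @ h
    P_pred = A @ P @ A.T + Q
    # Update: correct the prediction with the new microphone sample.
    innovation = y - x @ h_pred
    s = x @ P_pred @ x + r                 # innovation variance (scalar)
    gain = P_pred @ x / s
    h_new = h_pred + gain * innovation
    P_new = P_pred - np.outer(gain, x) @ P_pred
    return h_new, P_new

# Hypothetical test signal: a 4-tap RIR excited by white noise.
rng = np.random.default_rng(0)
L = 4
h_true = np.array([1.0, 0.5, -0.3, 0.1])
A = np.eye(L)                              # placeholder transition (assumption)
Q = 1e-8 * np.eye(L)
r = 1e-4
source = rng.standard_normal(500 + L)
h_est, P = np.zeros(L), np.eye(L)
for k in range(L, 500 + L):
    x = source[k - L + 1:k + 1][::-1]      # most recent source sample first
    y = x @ h_true + 0.01 * rng.standard_normal()
    h_est, P = kalman_rir_step(h_est, P, A, Q, x, y, r)
```

With a time-varying `A`, the same loop would track an RIR that changes along the trajectory; the quality of that tracking depends on how well `A` models the movement.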

Distributed Adaptive Norm Estimation for Blind System Identification in Wireless Sensor Networks

Mar 01, 2023

Abstract: Distributed signal-processing algorithms in (wireless) sensor networks often aim to decentralize processing tasks to reduce communication cost and computational complexity or avoid reliance on a single device (i.e., fusion center) for processing. In this contribution, we extend a distributed adaptive algorithm for blind system identification that relies on the estimation of a stacked network-wide consensus vector at each node, the computation of which requires either broadcasting or relaying of node-specific values (i.e., local vector norms) to all other nodes. The extended algorithm employs a distributed-averaging-based scheme to estimate the network-wide consensus norm value by only using the local vector norm provided by neighboring sensor nodes. We introduce an adaptive mixing factor between instantaneous and recursive estimates of these norms for adaptivity in a time-varying system. Simulation results show that the extension provides estimation results close to the optimal fully-connected-network or broadcasting case while reducing inter-node transmission significantly.
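The distributed-averaging step can be illustrated with a standard consensus iteration, in which each node repeatedly nudges its value toward those of its neighbors. This is a generic sketch rather than the algorithm from the paper: the ring topology, the `step` size, and the function name `consensus_average` are assumptions, and the adaptive mixing factor mentioned in the abstract is not modeled.

```python
def consensus_average(values, neighbors, step=0.3, iterations=200):
    """Estimate the network-wide mean using only neighbor-to-neighbor exchange.

    values:    one local quantity per node (e.g. a squared vector norm)
    neighbors: dict mapping node index -> list of neighbor indices (symmetric)
    step:      must stay below 1 / (maximum node degree) for convergence
    """
    x = list(values)
    for _ in range(iterations):
        # Every node moves toward its neighbors; the network sum is preserved.
        x = [xi + step * sum(x[j] - xi for j in neighbors[i])
             for i, xi in enumerate(x)]
    return x

# Hypothetical ring of five sensor nodes, each holding a local squared norm.
neighbors = {0: [4, 1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3, 0]}
local_sq_norms = [1.0, 4.0, 9.0, 16.0, 25.0]
estimates = consensus_average(local_sq_norms, neighbors)
```

Every entry of `estimates` approaches the mean of the local values, so each node obtains the network-wide quantity without broadcasting to all other nodes.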

MYRiAD: A Multi-Array Room Acoustic Database

Jan 30, 2023

Abstract: In the development of acoustic signal processing algorithms, their evaluation in various acoustic environments is of utmost importance. In order to advance evaluation in realistic and reproducible scenarios, several high-quality acoustic databases have been developed over the years. In this paper, we present another complementary database of acoustic recordings, referred to as the Multi-arraY Room Acoustic Database (MYRiAD). The MYRiAD database is unique in its diversity of microphone configurations suiting a wide range of enhancement and reproduction applications (such as assistive hearing, teleconferencing, or sound zoning), the acoustics of the two recording spaces, and the variety of contained signals including 1214 room impulse responses (RIRs), reproduced speech, music, and stationary noise, as well as recordings of live cocktail parties held in both rooms. The microphone configurations comprise a dummy head (DH) with in-ear omnidirectional microphones, two behind-the-ear (BTE) pieces equipped with 2 omnidirectional microphones each, 5 external omnidirectional microphones (XMs), and two concentric circular microphone arrays (CMAs) consisting of 12 omnidirectional microphones in total. The two recording spaces, namely the SONORA Audio Laboratory (SAL) and the Alamire Interactive Laboratory (AIL), have reverberation times of 2.1 s and 0.5 s, respectively. Audio signals were reproduced using 10 movable loudspeakers in the SAL and a built-in array of 24 loudspeakers in the AIL. MATLAB and Python scripts are included for accessing the signals as well as microphone and loudspeaker coordinates. The database is publicly available at [1].
