Marc Moonen

Sequential Processing Strategies in Fronthaul Constrained Cell-Free Massive MIMO Networks

Jan 28, 2026

Abstract:In a cell-free massive MIMO (CFmMIMO) network with a daisy-chain fronthaul, the amount of information that each access point (AP) needs to communicate with the next AP in the chain is determined by the location of the AP in the sequential fronthaul. Therefore, we propose two sequential processing strategies to combat the adverse effect of fronthaul compression on the sum of users' spectral efficiency (SE): 1) linearly increasing fronthaul capacity allocation among APs and 2) Two-Path users' signal estimation. The two strategies show superior performance in terms of sum SE compared to the equal fronthaul capacity allocation and Single-Path sequential signal estimation.
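To make the first strategy concrete, the following is a minimal sketch of a linearly increasing allocation of a fixed total fronthaul budget along the chain. It is an illustration only and not the allocation rule from the paper: the function name `linear_fronthaul_allocation`, the `last_to_first_ratio` parameter, and the assumption that the total budget is fixed are all hypothetical.

```python
def linear_fronthaul_allocation(total_capacity, num_aps, last_to_first_ratio=3.0):
    """Split a total fronthaul budget over a daisy chain so that the
    per-link capacity grows linearly from the first AP to the last.

    All names and the ratio parameter are illustrative assumptions,
    not quantities taken from the paper.
    """
    if num_aps < 1:
        raise ValueError("num_aps must be at least 1")
    if last_to_first_ratio <= 0:
        raise ValueError("last_to_first_ratio must be positive")
    if num_aps == 1:
        return [float(total_capacity)]

    # Unnormalized weights rise linearly from 1 (first AP) to the ratio (last AP).
    step = (last_to_first_ratio - 1.0) / (num_aps - 1)
    weights = [1.0 + step * index for index in range(num_aps)]

    # Scale the weights so the allocations add up to the total budget.
    scale = total_capacity / sum(weights)
    return [scale * weight for weight in weights]


if __name__ == "__main__":
    print(linear_fronthaul_allocation(120, 4, last_to_first_ratio=3.0))
```

Setting `last_to_first_ratio=1.0` recovers the equal allocation that the abstract uses as its baseline.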

Master-Assisted Distributed Uplink Operation for Cell-Free Massive MIMO Networks

Jan 27, 2026

Abstract:Cell-free massive multiple-input-multiple-output is considered a promising technology for the next generation of wireless communication networks. The main idea is to distribute a large number of access points (APs) in a geographical region to serve the user equipments (UEs) cooperatively. In the uplink, one of two types of operations is often adopted: centralized or distributed. In centralized operation, channel estimation and data decoding are performed at the central processing unit (CPU), whereas in distributed operation, channel estimation occurs at the APs and data detection at the CPU. In this paper, we propose a novel uplink operation, termed Master-Assisted Distributed Uplink Operation (MADUO), where each UE is assigned a master AP, which receives soft data estimates from the other APs and decodes the data using its local signals and the received data estimates. Numerical experiments demonstrate that the proposed operation performs comparably to the centralized operation and balances fronthaul signaling and computational complexity.

A Comparative Analysis of Generalised Echo and Interference Cancelling and Extended Multichannel Wiener Filtering for Combined Noise Reduction and Acoustic Echo Cancellation

Mar 05, 2025

Abstract:Two algorithms for combined acoustic echo cancellation (AEC) and noise reduction (NR) are analysed, namely the generalised echo and interference canceller (GEIC) and the extended multichannel Wiener filter (MWFext). Previously, these algorithms have been examined for linear echo paths, and assuming access to voice activity detectors (VADs) that separately detect desired speech and echo activity. However, algorithms implementing VADs may introduce detection errors. Therefore, in this paper, the previous analyses are extended by 1) modelling general nonlinear echo paths by means of the generalised Bussgang decomposition, and 2) modelling VAD error effects in each specific algorithm, thereby also allowing to model specific VAD assumptions. It is found and verified with simulations that, generally, the MWFext achieves a higher NR performance, while the GEIC achieves a more robust AEC performance.

Integrated Minimum Mean Squared Error Algorithms for Combined Acoustic Echo Cancellation and Noise Reduction

Dec 05, 2024

Abstract:In many speech recording applications, noise and acoustic echo corrupt the desired speech. Consequently, combined noise reduction (NR) and acoustic echo cancellation (AEC) is required. Generally, a cascade approach is followed, i.e., the AEC and NR are designed in isolation by selecting a separate signal model, formulating a separate cost function, and using a separate solution strategy. The AEC and NR are then cascaded one after the other, not accounting for their interaction. In this paper, however, an integrated approach is proposed to consider this interaction in a general multi-microphone/multi-loudspeaker setup. Therefore, a single signal model of either the microphone signal vector or the extended signal vector, obtained by stacking microphone and loudspeaker signals, is selected, a single mean squared error cost function is formulated, and a common solution strategy is used. Using the microphone signal model, a multichannel Wiener filter (MWF) is derived. Using the extended signal model, an extended MWF (MWFext) is derived, and several equivalent expressions are found, which nevertheless are interpretable as cascade algorithms. Specifically, the MWFext is shown to be equivalent to algorithms where the AEC precedes the NR (AEC-NR), the NR precedes the AEC (NR-AEC), and the extended NR (NRext) precedes the AEC and post-filter (PF) (NRext-AEC-PF). Under rank-deficiency conditions the MWFext is non-unique, such that this equivalence amounts to the expressions being specific, not necessarily minimum-norm solutions for this MWFext. The practical performances nonetheless differ due to non-stationarities and imperfect correlation matrix estimation, resulting in the AEC-NR and NRext-AEC-PF attaining best overall performance.

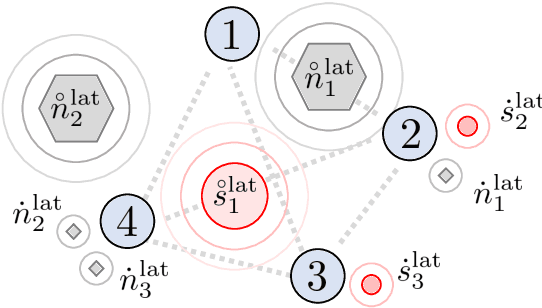

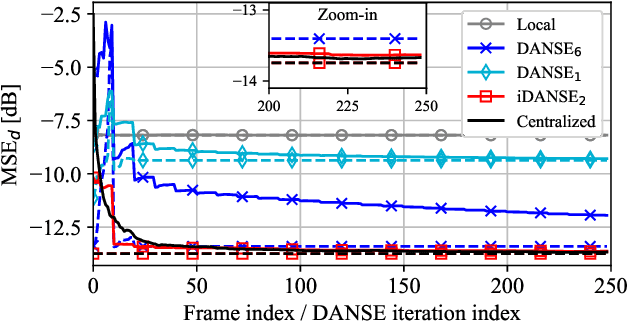

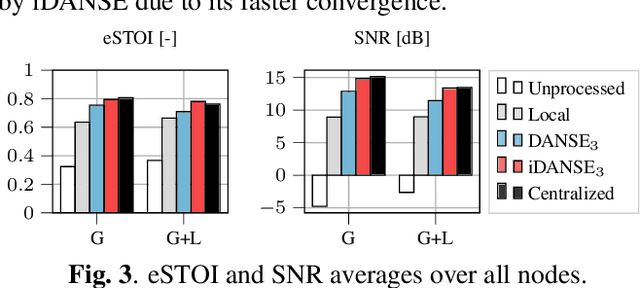

One-Shot Distributed Node-Specific Signal Estimation with Non-Overlapping Latent Subspaces in Acoustic Sensor Networks

Aug 07, 2024

Abstract:A one-shot algorithm called iterationless DANSE (iDANSE) is introduced to perform distributed adaptive node-specific signal estimation (DANSE) in a fully connected wireless acoustic sensor network (WASN) deployed in an environment with non-overlapping latent signal subspaces. The iDANSE algorithm matches the performance of a centralized algorithm in a single processing cycle while devices exchange fused versions of their multichannel local microphone signals. Key advantages of iDANSE over currently available solutions are its iterationless nature, which favors deployment in real-time applications, and the fact that devices can exchange fewer fused signals than the number of latent sources in the environment. The proposed method is validated in numerical simulations including a speech enhancement scenario.

Cell-free Massive MIMO with Sequential Fronthaul Architecture and Limited Memory Access Points

Jul 31, 2024

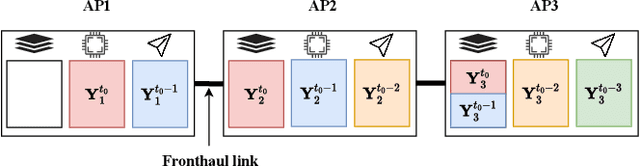

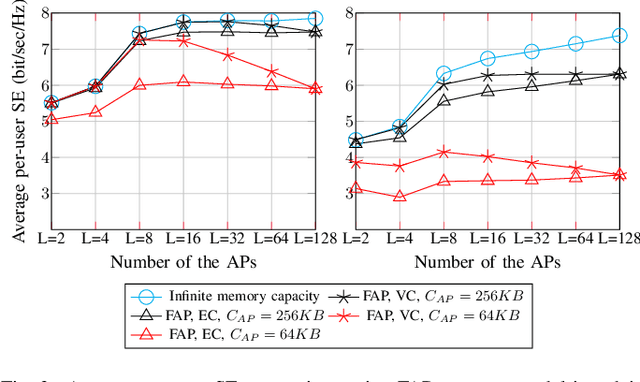

Abstract:Cell-free massive multiple-input multiple-output (CFmMIMO) is a paradigm that can improve users' spectral efficiency (SE) far beyond traditional cellular networks. Increased spatial diversity in CFmMIMO is achieved by spreading the antennas into small access points (APs), which cooperate to serve the users. Sequential fronthaul topologies in CFmMIMO, such as the daisy chain and multi-branch tree topology, have gained considerable attention recently. In such a processing architecture, each AP must store its received signal vector in the memory until it receives the relevant information from the previous AP in the sequence to refine the estimate of the users' signal vector in the uplink. In this paper, we adopt vector-wise and element-wise compression on the raw or pre-processed received signal vectors to store them in the memory. We investigate the impact of the limited memory capacity in the APs on the optimal number of APs. We show that with no memory constraint, having single-antenna APs is optimal, especially as the number of users grows. However, a limited memory at the APs restricts the depth of the sequential processing pipeline. Furthermore, we investigate the relation between the memory capacity at the APs and the rate of the fronthaul link.

Topology-Independent GEVD-Based Distributed Adaptive Node-Specific Signal Estimation in Ad-Hoc Wireless Acoustic Sensor Networks

Jul 19, 2024

Abstract:A low-rank approximation-based version of the topology-independent distributed adaptive node-specific signal estimation (TI-DANSE) algorithm is introduced, using a generalized eigenvalue decomposition (GEVD) for application in ad-hoc wireless acoustic sensor networks. This TI-GEVD-DANSE algorithm as well as the original TI-DANSE algorithm exhibit a non-strict convergence, which can lead to numerical instability over time, particularly in scenarios where the estimation of accurate spatial covariance matrices is challenging. An adaptive filter coefficient normalization strategy is proposed to mitigate this issue and enable the stable performance of TI-(GEVD-)DANSE. The method is validated in numerical simulations including dynamic acoustic scenarios, demonstrating the importance of the additional normalization.

Cascaded noise reduction and acoustic echo cancellation based on an extended noise reduction

Jun 13, 2024

Abstract:In many speech recording applications, the recorded desired speech is corrupted by both noise and acoustic echo, such that combined noise reduction (NR) and acoustic echo cancellation (AEC) is called for. A common cascaded design corresponds to NR filters preceding AEC filters. These NR filters aim at reducing the near-end room noise (and possibly partially the echo) and operate on the microphones only, consequently requiring the AEC filters to model both the echo paths and the NR filters. In this paper, however, we propose a design with extended NR (NRext) filters preceding AEC filters under the assumption of the echo paths being additive maps, thus preserving the addition operation. Here, the NRext filters aim at reducing both the near-end room noise and the far-end room noise component in the echo, and operate on both the microphones and loudspeakers. We show that the succeeding AEC filters remarkably become independent of the NRext filters, such that the AEC filters are only required to model the echo paths, improving the AEC performance. Further, the degrees of freedom in the NRext filters scale with the number of loudspeakers, which is not the case for the NR filters, resulting in an improved NR performance.

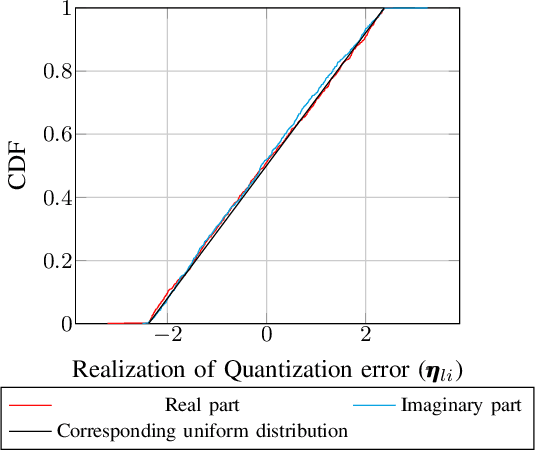

Joint Sequential Fronthaul Quantization and Hardware Complexity Reduction in Uplink Cell-Free Massive MIMO Networks

May 02, 2024

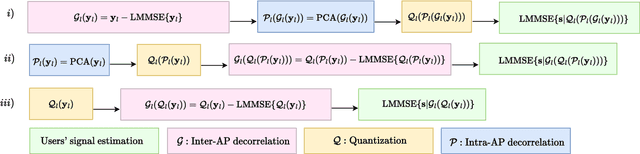

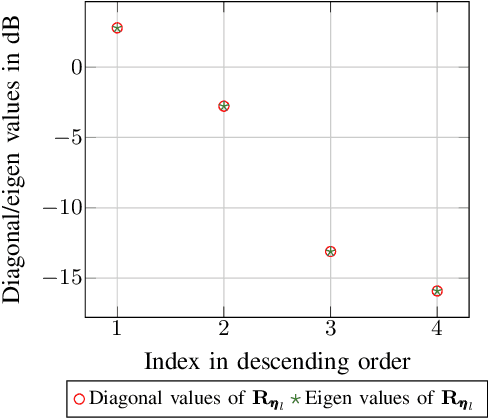

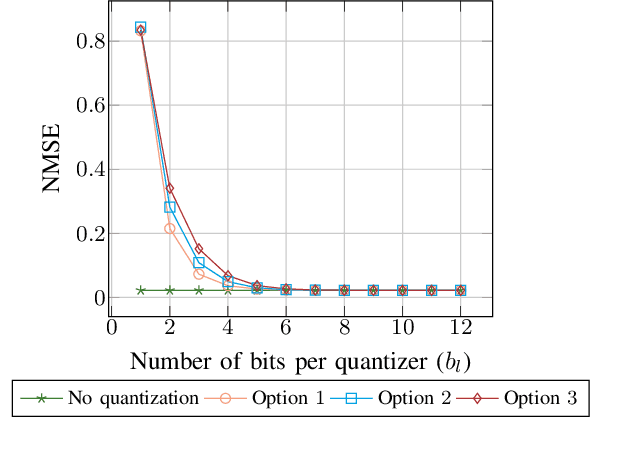

Abstract:Fronthaul quantization causes a significant distortion in cell-free massive MIMO networks. Due to the limited capacity of fronthaul links, information exchange among access points (APs) must be quantized significantly. Furthermore, the complexity of the multiplication operation in the base-band processing unit increases with the number of bits of the operands. Thus, quantizing the APs' signal vector reduces the complexity of signal estimation in the base-band processing unit. Most recent works consider the direct quantization of the received signal vectors at each AP without any pre-processing. However, the signal vectors received at different APs are mutually correlated (inter-AP correlation) and also have correlated dimensions (intra-AP correlation). Hence, cooperative quantization of the APs' fronthaul can help to efficiently use the quantization bits at each AP and further reduce the distortion imposed on the quantized vector at the APs. This paper considers a daisy chain fronthaul and three different processing sequences at each AP. We show that 1) de-correlating the received signal vector at each AP from the corresponding vectors of the previous APs (inter-AP de-correlation) and 2) de-correlating the dimensions of the received signal vector at each AP (intra-AP de-correlation) before quantization helps to use the quantization bits at each AP more efficiently than directly quantizing the received signal vector without any pre-processing and, consequently, improves the bit error rate (BER) and normalized mean square error (NMSE) of users' signal estimation.

Sequential Processing in Cell-free Massive MIMO Uplink with Limited Memory Access Points

Dec 09, 2023

Abstract:Cell-free massive multiple-input multiple-output (MIMO) is an emerging technology that will reshape the architecture of next-generation networks. This paper considers the sequential fronthaul, whereby the access points (APs) are connected in a daisy chain topology with multiple sequential processing stages. With this sequential processing in the uplink, each AP refines users' signal estimates received from the previous AP based on its own local received signal vector. While this processing architecture has been shown to achieve the same performance as centralized processing, the impact of the limited memory capacity at the APs on the store and forward processing architecture is yet to be analyzed. Thus, we model the received signal vector compression using rate-distortion theory to demonstrate the effect of limited memory capacity on the optimal number of APs in the daisy chain fronthaul. Without this memory constraint, more geographically distributed antennas alleviate the adverse effect of large-scale fading on the signal-to-interference-plus-noise-ratio (SINR). However, we show that in case of limited memory capacity at each AP, the memory capacity to store the received signal vectors at the final AP of this fronthaul becomes a limiting factor. In other words, we show that when deciding on the number of APs to distribute the antennas, there is an inherent trade-off between more macro-diversity and compression noise power on the stored signal vectors at the APs. Hence, the available memory capacity at the APs significantly influences the optimal number of APs in the fronthaul.
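As a rough illustration of why a memory limit translates into compression noise, the sketch below evaluates the textbook distortion-rate function of a real Gaussian source, D(R) = σ² · 2^(−2R), for a fixed memory budget spread over a stored vector. This is not the model used in the paper: the even split of bits over vector entries, the real-valued unit-variance source, and the function names are all assumptions made for the example.

```python
def gaussian_distortion(variance, bits_per_sample):
    """Distortion-rate function of a real Gaussian source:
    D(R) = variance * 2^(-2R), with R in bits per sample."""
    if variance < 0 or bits_per_sample < 0:
        raise ValueError("variance and bits_per_sample must be non-negative")
    return variance * 2.0 ** (-2.0 * bits_per_sample)


def per_entry_distortion(memory_bits, vector_length, variance=1.0):
    """Compression noise per stored entry when a fixed memory budget is
    shared evenly over all entries of the vector (an assumed policy)."""
    if vector_length < 1:
        raise ValueError("vector_length must be at least 1")
    return gaussian_distortion(variance, memory_bits / vector_length)


if __name__ == "__main__":
    # Same memory budget, longer stored vector: fewer bits per entry,
    # hence more compression noise on each entry.
    for length in (2, 4, 8):
        print(length, per_entry_distortion(memory_bits=8, vector_length=length))
```

Under these assumptions, storing more entries in the same memory raises the per-entry distortion exponentially, which gives a simple picture of how a memory budget can limit what an AP is able to hold while it waits for the previous AP in the chain.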
