Ralph Ewerth

Robust Fake News Detection using Large Language Models under Adversarial Sentiment Attacks

Jan 21, 2026

Abstract: Misinformation and fake news have become a pressing societal challenge, driving the need for reliable automated detection methods. Prior research has highlighted sentiment as an important signal in fake news detection, either by analyzing which sentiments are associated with fake news or by using sentiment and emotion features for classification. However, this poses a vulnerability, since adversaries can manipulate sentiment to evade detectors, especially with the advent of large language models (LLMs). A few studies have explored adversarial samples generated by LLMs, but they mainly focus on stylistic features such as the writing style of news publishers. Thus, the crucial vulnerability of sentiment manipulation remains largely unexplored. In this paper, we investigate the robustness of state-of-the-art fake news detectors under sentiment manipulation. We introduce AdSent, a sentiment-robust detection framework designed to ensure consistent veracity predictions across both original and sentiment-altered news articles. Specifically, we (1) propose controlled sentiment-based adversarial attacks using LLMs; (2) analyze the impact of sentiment shifts on detection performance, showing that changing the sentiment heavily impacts the performance of fake news detection models and indicating a bias towards classifying neutral articles as real and non-neutral articles as fake; and (3) introduce a novel sentiment-agnostic training strategy that enhances robustness against such perturbations. Extensive experiments on three benchmark datasets demonstrate that AdSent significantly outperforms competitive baselines in both accuracy and robustness, while also generalizing effectively to unseen datasets and adversarial scenarios.

Enhancing the Learning Experience: Using Vision-Language Models to Generate Questions for Educational Videos

May 03, 2025

Abstract: Web-based educational videos offer flexible learning opportunities and are becoming increasingly popular. However, improving user engagement and knowledge retention remains a challenge. Automatically generated questions can activate learners and support their knowledge acquisition. Further, they can help teachers and learners assess their understanding. While large language and vision-language models have been employed in various tasks, their application to question generation for educational videos remains underexplored. In this paper, we investigate the capabilities of current vision-language models for generating learning-oriented questions for educational video content. We assess (1) out-of-the-box models' performance; (2) fine-tuning effects on content-specific question generation; (3) the impact of different video modalities on question quality; and (4) in a qualitative study, question relevance, answerability, and difficulty levels of generated questions. Our findings delineate the capabilities of current vision-language models, highlighting the need for fine-tuning and addressing challenges in question diversity and relevance. We identify requirements for future multimodal datasets and outline promising research directions.

Verifying Cross-modal Entity Consistency in News using Vision-language Models

Jan 20, 2025

Abstract: The web has become a crucial source of information, but it is also used to spread disinformation, often conveyed through multiple modalities like images and text. The identification of inconsistent cross-modal information, in particular entities such as persons, locations, and events, is critical to detect disinformation. Previous works either identify out-of-context disinformation by assessing the consistency of images to the whole document, neglecting relations of individual entities, or focus on generic entities that are not relevant to news. So far, only a few approaches have addressed the task of validating entity consistency between images and text in news. However, the potential of large vision-language models (LVLMs) has not been explored yet. In this paper, we propose an LVLM-based framework for verifying Cross-modal Entity Consistency (LVLM4CEC), to assess whether persons, locations, and events in news articles are consistent across both modalities. We suggest effective prompting strategies for LVLMs for entity verification that leverage reference images crawled from the web. Moreover, we extend three existing datasets for the task of entity verification in news, providing manual ground-truth data. Our results show the potential of LVLMs for automating cross-modal entity verification, showing improved accuracy in identifying persons and events when using evidence images. Moreover, our method outperforms a baseline for location and event verification in documents. The datasets and source code are available on GitHub at https://github.com/TIBHannover/LVLM4CEC.

Unraveling the Impact of Visual Complexity on Search as Learning

Jan 09, 2025

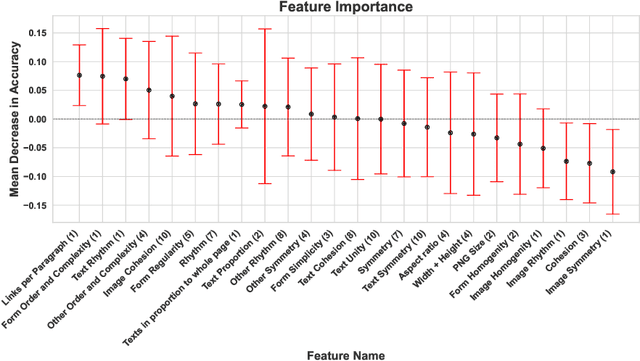

Abstract: Information search has become essential for learning and knowledge acquisition, offering broad access to information and learning resources. The visual complexity of web pages is known to influence search behavior, with previous work suggesting that searchers make evaluative judgments within the first second on a page. However, there is a significant gap in our understanding of how visual complexity impacts searches specifically conducted with a learning intent. This gap is particularly relevant for the development of optimized information retrieval (IR) systems that effectively support educational objectives. To address this research need, we model visual complexity and aesthetics via a diverse set of features, investigating their relationship with search behavior during learning-oriented web sessions. Our study utilizes a publicly available dataset from a lab study where participants learned about thunderstorm formation. Our findings reveal that while content relevance is the most significant predictor for knowledge gain, sessions with less visually complex pages are associated with higher learning success. This observation applies to features associated with the layout of web pages rather than to simpler features (e.g., number of images). The reported results shed light on the impact of visual complexity on learning-oriented searches, informing the design of more effective IR systems for educational contexts. To foster reproducibility, we release our source code (https://github.com/TIBHannover/sal_visual_complexity).

Saliency Detection in Educational Videos: Analyzing the Performance of Current Models, Identifying Limitations and Advancement Directions

Aug 08, 2024

Abstract: Identifying the regions of a learning resource that a learner pays attention to is crucial for assessing the material's impact and improving its design and related support systems. Saliency detection in videos addresses the automatic recognition of attention-drawing regions in single frames. In educational settings, the recognition of pertinent regions in a video's visual stream can enhance content accessibility and information retrieval tasks such as video segmentation, navigation, and summarization. Such advancements can pave the way for the development of advanced AI-assisted technologies that support learning with greater efficacy. However, this task becomes particularly challenging for educational videos due to the combination of unique characteristics such as text, voice, illustrations, animations, and more. To the best of our knowledge, there is currently no study that evaluates saliency detection approaches in educational videos. In this paper, we address this gap by evaluating four state-of-the-art saliency detection approaches for educational videos. We reproduce the original studies and explore the replication capabilities for general-purpose (non-educational) datasets. Then, we investigate the generalization capabilities of the models and evaluate their performance on educational videos. We conduct a comprehensive analysis to identify common failure scenarios and possible areas of improvement. Our experimental results show that educational videos remain a challenging context for generic video saliency detection models.

Multimodal Misinformation Detection using Large Vision-Language Models

Jul 19, 2024

Abstract: The increasing proliferation of misinformation and its alarming impact have motivated both industry and academia to develop approaches for misinformation detection and fact checking. Recent advances in large language models (LLMs) have shown remarkable performance in various tasks, but whether and how LLMs could help with misinformation detection remains relatively underexplored. Most existing state-of-the-art approaches either do not consider evidence and focus solely on claim-related features, or assume the evidence to be provided. Few approaches consider evidence retrieval as part of misinformation detection, and those that do rely on fine-tuning models. In this paper, we investigate the potential of LLMs for misinformation detection in a zero-shot setting. We incorporate an evidence retrieval component into the process, as it is crucial to gather pertinent information from various sources to determine the veracity of claims. To this end, we propose a novel re-ranking approach for multimodal evidence retrieval using both LLMs and large vision-language models (LVLMs). The retrieved evidence samples (images and texts) serve as the input for an LVLM-based approach for multimodal fact verification (LVLM4FV). To enable a fair evaluation, we address the issue of incomplete ground truth for evidence samples in an existing evidence retrieval dataset by annotating a more complete set of evidence samples for both image and text retrieval. Our experimental results on two datasets demonstrate the superiority of the proposed approach in both evidence retrieval and fact verification tasks, as well as better generalization capability across datasets compared to the supervised baseline.

On the Influence of Reading Sequences on Knowledge Gain during Web Search

Jan 10, 2024

Abstract: Nowadays, learning increasingly involves the usage of search engines and web resources. The related interdisciplinary research field, search as learning, aims to understand how people learn on the web. Previous work has investigated several feature classes to predict, for instance, the expected knowledge gain during web search. Among these, eye-tracking features have not been extensively studied so far. In this paper, we extend a previously used reading model from a line-based one to one that can detect reading sequences across multiple lines. We use publicly available study data from a web-based learning task to examine the relationship between our feature set and the participants' test scores. Our findings demonstrate that learners with higher knowledge gain spent significantly more time reading and processed more words in total. We also find evidence that faster reading at the expense of more backward regressions may be an indicator of better web-based learning. We make our code publicly available at https://github.com/TIBHannover/reading_web_search.

Classification of Visualization Types and Perspectives in Patents

Jul 19, 2023

Abstract: Due to the swift growth of patent applications each year, information and multimedia retrieval approaches that facilitate patent exploration and retrieval are of utmost importance. Different types of visualizations (e.g., graphs, technical drawings) and perspectives (e.g., side view, perspective) are used to visualize details of innovations in patents. The classification of these images enables a more efficient search and allows for further analysis. So far, datasets for image type classification miss some important visualization types for patents. Furthermore, related work does not make use of recent deep learning approaches including transformers. In this paper, we adopt state-of-the-art deep learning methods for the classification of visualization types and perspectives in patent images. We extend the CLEF-IP dataset for image type classification in patents to ten classes and provide manual ground truth annotations. In addition, we derive a set of hierarchical classes from a dataset that provides weakly-labeled data for image perspectives. Experimental results have demonstrated the feasibility of the proposed approaches. Source code, models, and dataset will be made publicly available.

Improving Generalization for Multimodal Fake News Detection

May 29, 2023

Abstract: The increasing proliferation of misinformation and its alarming impact have motivated both industry and academia to develop approaches for fake news detection. However, state-of-the-art approaches are usually trained on datasets of smaller size or with a limited set of specific topics. As a consequence, these models lack generalization capabilities and are not applicable to real-world data. In this paper, we propose three models that adopt and fine-tune state-of-the-art multimodal transformers for multimodal fake news detection. We conduct an in-depth analysis by manipulating the input data to explore the models' performance in realistic use cases on social media. Our study across multiple models demonstrates that these systems suffer significant performance drops on manipulated data. To reduce the bias and improve model generalization, we suggest training data augmentation to conduct more meaningful experiments for fake news detection on social media. The proposed data augmentation techniques enable models to generalize better and yield improved state-of-the-art results.

Predicting Knowledge Gain for MOOC Video Consumption

Dec 13, 2022

Abstract: Informal learning on the Web using search engines as well as more structured learning on MOOC platforms have become very popular in recent years. As a result of the vast amount of available learning resources, intelligent retrieval and recommendation methods are indispensable; this is also true for MOOC videos. However, the automatic assessment of this content with regard to predicting (potential) knowledge gain has not yet been addressed by previous work. In this paper, we investigate whether we can predict learning success after MOOC video consumption using 1) multimodal features covering slide and speech content, and 2) a wide range of text-based features describing the content of the video. In a comprehensive experimental setting, we test four different classifiers and various feature subset combinations. We conduct a detailed feature importance analysis to gain insights into which modality benefits knowledge gain prediction the most.

* 13 pages, 1 figure, 3 tables
