Rainer Lienhart

Multi-Agent Causal Reasoning System for Error Pattern Rule Automation in Vehicles

Feb 03, 2026

Abstract: Modern vehicles generate thousands of different discrete events known as Diagnostic Trouble Codes (DTCs). Automotive manufacturers use Boolean combinations of these codes, called error patterns (EPs), to characterize system faults and ensure vehicle safety. Yet EP rules are still handcrafted by domain experts, a process that is expensive and prone to errors as vehicle complexity grows. This paper introduces CAREP (Causal Automated Reasoning for Error Patterns), a multi-agent system that automates the generation of EP rules from high-dimensional event sequences of DTCs. CAREP combines a causal discovery agent that identifies potential DTC-EP relations, a contextual information agent that integrates metadata and descriptions, and an orchestrator agent that synthesizes candidate Boolean rules together with interpretable reasoning traces. Evaluation on a large-scale automotive dataset with over 29,100 unique DTCs and 474 error patterns demonstrates that CAREP can automatically and accurately discover the unknown EP rules, outperforming LLM-only baselines while providing transparent causal explanations. By uniting practical causal discovery and agent-based reasoning, CAREP represents a step toward fully automated fault diagnostics, enabling scalable, interpretable, and cost-efficient vehicle maintenance.
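To make the notion of an error pattern as a Boolean combination of DTCs concrete, here is a minimal sketch. The DTC identifiers and the rule itself are hypothetical placeholders chosen for illustration; they are not taken from the paper or its dataset.

```python
from typing import Set


def ep_rule(dtcs: Set[str]) -> bool:
    """Hypothetical EP rule: (DTC_A AND DTC_B) AND NOT DTC_C.

    `dtcs` is the set of trouble codes observed for one vehicle.
    The code names are illustrative assumptions only.
    """
    return "DTC_A" in dtcs and "DTC_B" in dtcs and "DTC_C" not in dtcs


# Two observed code sets: the first satisfies the rule, the second does not.
fires = ep_rule({"DTC_A", "DTC_B", "DTC_D"})
blocked = ep_rule({"DTC_A", "DTC_B", "DTC_C"})
```

A rule of this shape is what a domain expert would otherwise write by hand for each error pattern.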

TRACE: Scalable Amortized Causal Discovery from Single Sequences via Autoregressive Density Estimation

Feb 01, 2026

Abstract: We study causal discovery from a single observed sequence of discrete events generated by a stochastic process, as encountered in vehicle logs, manufacturing systems, or patient trajectories. This regime is particularly challenging due to the absence of repeated samples, high dimensionality, and long-range temporal dependencies of the single observation during inference. We introduce TRACE, a scalable framework that repurposes autoregressive models as pretrained density estimators for conditional mutual information estimation. TRACE infers the summary causal graph between event types in a sequence, scaling linearly with the event vocabulary and supporting delayed causal effects, while being fully parallel on GPUs. We establish its theoretical identifiability under imperfect autoregressive models. Experiments demonstrate robust performance across different baselines and varying vocabulary sizes including an application to root-cause analysis in vehicle diagnostics with over 29,100 event types.
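For reference, the quantity named in the abstract, conditional mutual information, has the following textbook definition for discrete variables $X$, $Y$, $Z$. This is the standard form only; how TRACE estimates it from an autoregressive model is not specified in the abstract and is not shown here.

$$
I(X; Y \mid Z) = \sum_{x, y, z} p(x, y, z) \, \log \frac{p(x, y \mid z)}{p(x \mid z) \, p(y \mid z)}
$$

It is zero exactly when $X$ and $Y$ are conditionally independent given $Z$, which is why it is commonly used as a dependence test in causal discovery.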

Transforming Vehicle Diagnostics: A Multimodal Approach to Error Patterns Prediction

Feb 01, 2026

Abstract: Accurately diagnosing and predicting vehicle malfunctions is crucial for maintenance and safety in the automotive industry. While modern diagnostic systems primarily rely on sequences of vehicular Diagnostic Trouble Codes (DTCs) registered in On-Board Diagnostic (OBD) systems, they often overlook valuable contextual information such as raw sensory data (e.g., temperature, humidity, and pressure). This contextual data, crucial for domain experts to classify vehicle failures, introduces unique challenges due to its complexity and the noisy nature of real-world data. This paper presents BiCarFormer: the first multimodal approach to multi-label sequence classification of error codes into error patterns that integrates DTC sequences and environmental conditions. BiCarFormer is a bidirectional Transformer model tailored for vehicle event sequences, employing embedding fusions and a co-attention mechanism to capture the relationships between diagnostic codes and environmental data. Experimental results on a challenging real-world automotive dataset with 22,137 error codes and 360 error patterns demonstrate that our approach significantly improves classification performance compared to models that rely solely on DTC sequences and traditional sequence models. This work highlights the importance of incorporating contextual environmental information for more accurate and robust vehicle diagnostics, hence reducing maintenance costs and enhancing automation processes in the automotive industry.

MMMS: Multi-Modal Multi-Surface Interactive Segmentation

Sep 16, 2025

Abstract: In this paper, we present a method to interactively create segmentation masks on the basis of user clicks. We pay particular attention to the segmentation of multiple surfaces that are simultaneously present in the same image. Since these surfaces may be heavily entangled and adjacent, we also present a novel extended evaluation metric that accounts for the challenges of this scenario. Additionally, the presented method is able to use multi-modal inputs to facilitate the segmentation task. At the center of this method is a network architecture which takes as input an RGB image, a number of non-RGB modalities, an erroneous mask, and encoded clicks. Based on this input, the network predicts an improved segmentation mask. We design our architecture such that it adheres to two conditions: (1) The RGB backbone is only available as a black-box. (2) To reduce the response time, we want our model to integrate the interaction-specific information after the image feature extraction and the multi-modal fusion. We refer to the overall task as Multi-Modal Multi-Surface interactive segmentation (MMMS). We are able to show the effectiveness of our multi-modal fusion strategy. Using additional modalities, our system reduces the NoC@90 by up to 1.28 clicks per surface on average on DeLiVER and up to 1.19 on MFNet. On top of this, we are able to show that our RGB-only baseline achieves competitive, and in some cases even superior performance when tested in a classical, single-mask interactive segmentation scenario.
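The NoC@90 figure reported above is commonly defined as the number of clicks needed to reach an IoU of 0.90, capped at a click budget. The sketch below implements that classical single-mask form as an illustration. It is not the paper's extended multi-surface metric, and the function names and the budget of 20 clicks are assumptions.

```python
from typing import Sequence


def noc_at(ious_per_click: Sequence[float],
           threshold: float = 0.90,
           max_clicks: int = 20) -> int:
    """Return the 1-based index of the first click whose IoU reaches
    `threshold`; return `max_clicks` if the threshold is never reached
    within the budget (assumed convention)."""
    for click, iou in enumerate(ious_per_click[:max_clicks], start=1):
        if iou >= threshold:
            return click
    return max_clicks


def mean_noc(samples: Sequence[Sequence[float]],
             threshold: float = 0.90,
             max_clicks: int = 20) -> float:
    """Average NoC over a collection of per-sample IoU trajectories."""
    return sum(noc_at(s, threshold, max_clicks) for s in samples) / len(samples)


# IoU after each successive click for two hypothetical masks.
trajectories = [[0.50, 0.80, 0.92, 0.95], [0.91]]
average = mean_noc(trajectories)  # (3 + 1) / 2
```

Under this definition, a reduction of 1.28 means that on average about one fewer click per surface is needed to reach the target IoU.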

Coling-UniA at SciVQA 2025: Few-Shot Example Retrieval and Confidence-Informed Ensembling for Multimodal Large Language Models

Jul 03, 2025

Abstract: This paper describes our system for the SciVQA 2025 Shared Task on Scientific Visual Question Answering. Our system employs an ensemble of two Multimodal Large Language Models and various few-shot example retrieval strategies. The model and few-shot setting are selected based on the figure and question type. We also select answers based on the models' confidence levels. On the blind test data, our system ranks third out of seven with an average F1 score of 85.12 across ROUGE-1, ROUGE-L, and BERTS. Our code is publicly available.

CoPa-SG: Dense Scene Graphs with Parametric and Proto-Relations

Jun 26, 2025

Abstract: 2D scene graphs provide a structural and explainable framework for scene understanding. However, current work still struggles with the lack of accurate scene graph data. To overcome this data bottleneck, we present CoPa-SG, a synthetic scene graph dataset with highly precise ground truth and exhaustive relation annotations between all objects. Moreover, we introduce parametric and proto-relations, two new fundamental concepts for scene graphs. The former provides a much more fine-grained representation than its traditional counterpart by enriching relations with additional parameters such as angles or distances. The latter encodes hypothetical relations in a scene graph and describes how relations would form if new objects are placed in the scene. Using CoPa-SG, we compare the performance of various scene graph generation models. We demonstrate how our new relation types can be integrated in downstream applications to enhance planning and reasoning capabilities.

HOIverse: A Synthetic Scene Graph Dataset With Human Object Interactions

Jun 24, 2025

Abstract: When humans and robotic agents coexist in an environment, scene understanding becomes crucial for the agents to carry out various downstream tasks like navigation and planning. Hence, an agent must be capable of localizing and identifying actions performed by the human. Current research lacks reliable datasets for performing scene understanding within indoor environments where humans are also a part of the scene. Scene Graphs enable us to generate a structured representation of a scene or an image to perform visual scene understanding. To tackle this, we present HOIverse, a synthetic dataset at the intersection of scene graph and human-object interaction, consisting of accurate and dense relationship ground truths between humans and surrounding objects along with corresponding RGB images, segmentation masks, depth images and human keypoints. We compute parametric relations between various pairs of objects and human-object pairs, resulting in accurate and unambiguous relation definitions. In addition, we benchmark our dataset on state-of-the-art scene graph generation models to predict parametric relations and human-object interactions. Through this dataset, we aim to accelerate research in the field of scene understanding involving people.

Towards Ball Spin and Trajectory Analysis in Table Tennis Broadcast Videos via Physically Grounded Synthetic-to-Real Transfer

Apr 28, 2025

Abstract: Analyzing a player's technique in table tennis requires knowledge of the ball's 3D trajectory and spin. While the spin is not directly observable in standard broadcasting videos, we show that it can be inferred from the ball's trajectory in the video. We present a novel method to infer the initial spin and 3D trajectory from the corresponding 2D trajectory in a video. Without ground truth labels for broadcast videos, we train a neural network solely on synthetic data. Due to our choice of input data representation, physically correct synthetic training data, and targeted augmentations, the network naturally generalizes to real data. Notably, these simple techniques are sufficient to achieve generalization. No real data at all is required for training. To the best of our knowledge, we are the first to present a method for spin and trajectory prediction in simple monocular broadcast videos, achieving an accuracy of 92.0% in spin classification and a 2D reprojection error of 0.19% of the image diagonal.
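A reprojection error expressed as a percentage of the image diagonal can be computed as sketched below. This is an assumed reading of the metric (mean Euclidean pixel distance between predicted and reference 2D points, divided by the diagonal length); the abstract does not spell out the exact protocol, and the function name and inputs are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def reprojection_error_pct(pred: Sequence[Point],
                           ref: Sequence[Point],
                           width: int,
                           height: int) -> float:
    """Mean 2D distance between predicted and reference points,
    as a percentage of the image diagonal (assumed definition)."""
    diagonal = math.hypot(width, height)
    dists = [math.hypot(px - rx, py - ry)
             for (px, py), (rx, ry) in zip(pred, ref)]
    return 100.0 * (sum(dists) / len(dists)) / diagonal


# One point that is 5 px off in a 300x400 image (diagonal 500 px).
error = reprojection_error_pct([(0.0, 0.0)], [(3.0, 4.0)], 300, 400)
```

Normalizing by the diagonal makes the number comparable across videos of different resolutions.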

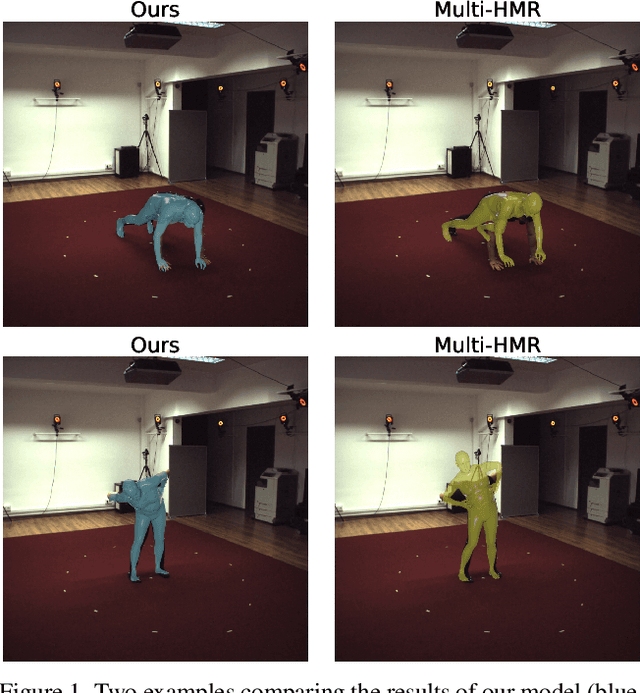

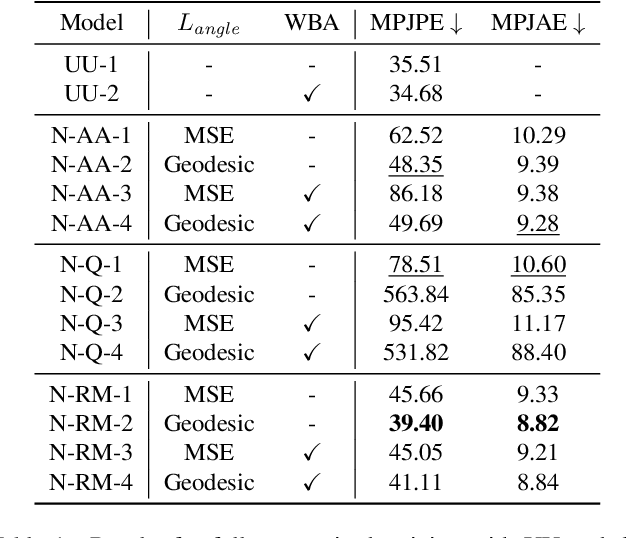

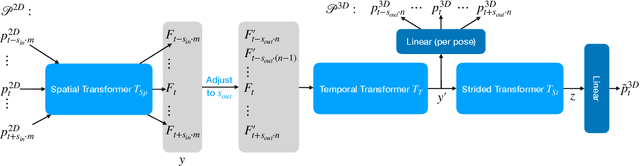

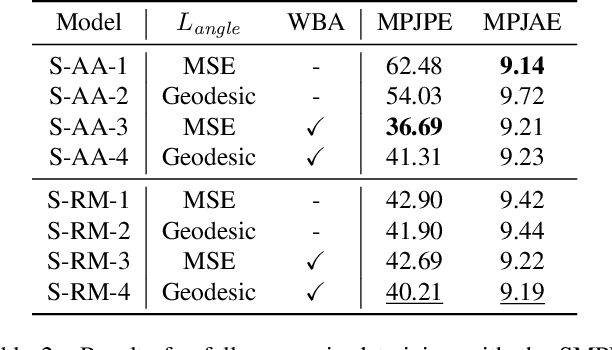

Efficient 2D to Full 3D Human Pose Uplifting including Joint Rotations

Apr 14, 2025

Abstract: In sports analytics, accurately capturing both the 3D locations and rotations of body joints is essential for understanding an athlete's biomechanics. While Human Mesh Recovery (HMR) models can estimate joint rotations, they often exhibit lower accuracy in joint localization compared to 3D Human Pose Estimation (HPE) models. Recent work addressed this limitation by combining a 3D HPE model with inverse kinematics (IK) to estimate both joint locations and rotations. However, IK is computationally expensive. To overcome this, we propose a novel 2D-to-3D uplifting model that directly estimates 3D human poses, including joint rotations, in a single forward pass. We investigate multiple rotation representations, loss functions, and training strategies, both with and without access to ground truth rotations. Our models achieve state-of-the-art accuracy in rotation estimation, are 150 times faster than the IK-based approach, and surpass HMR models in joint localization precision.

On the Importance of Conditioning for Privacy-Preserving Data Augmentation

Apr 08, 2025

Abstract: Latent diffusion models can be used as a powerful augmentation method to artificially extend datasets for enhanced training. To the human eye, these augmented images look very different from the originals. Previous work has suggested using this data augmentation technique for data anonymization. However, we show that latent diffusion models that are conditioned on features like depth maps or edges to guide the diffusion process are not suitable as a privacy-preserving method. We use a contrastive learning approach to train a model that can correctly identify people out of a pool of candidates. Moreover, we demonstrate that anonymization using conditioned diffusion models is susceptible to black-box attacks. We attribute the success of the described methods to the conditioning of the latent diffusion model in the anonymization process. The diffusion model is instructed to produce similar edges for the anonymized images. Hence, a model can learn to recognize these patterns for identification.