Daniel Kienzle

MMMS: Multi-Modal Multi-Surface Interactive Segmentation

Sep 16, 2025

Abstract:In this paper, we present a method to interactively create segmentation masks on the basis of user clicks. We pay particular attention to the segmentation of multiple surfaces that are simultaneously present in the same image. Since these surfaces may be heavily entangled and adjacent, we also present a novel extended evaluation metric that accounts for the challenges of this scenario. Additionally, the presented method is able to use multi-modal inputs to facilitate the segmentation task. At the center of this method is a network architecture which takes as input an RGB image, a number of non-RGB modalities, an erroneous mask, and encoded clicks. Based on this input, the network predicts an improved segmentation mask. We design our architecture such that it adheres to two conditions: (1) The RGB backbone is only available as a black-box. (2) To reduce the response time, we want our model to integrate the interaction-specific information after the image feature extraction and the multi-modal fusion. We refer to the overall task as Multi-Modal Multi-Surface interactive segmentation (MMMS). We are able to show the effectiveness of our multi-modal fusion strategy. Using additional modalities, our system reduces the NoC@90 by up to 1.28 clicks per surface on average on DeLiVER and up to 1.19 on MFNet. On top of this, we are able to show that our RGB-only baseline achieves competitive, and in some cases even superior performance when tested in a classical, single-mask interactive segmentation scenario.

Towards Ball Spin and Trajectory Analysis in Table Tennis Broadcast Videos via Physically Grounded Synthetic-to-Real Transfer

Apr 28, 2025

Abstract:Analyzing a player's technique in table tennis requires knowledge of the ball's 3D trajectory and spin. While the spin is not directly observable in standard broadcast videos, we show that it can be inferred from the ball's trajectory in the video. We present a novel method to infer the initial spin and 3D trajectory from the corresponding 2D trajectory in a video. Without ground truth labels for broadcast videos, we train a neural network solely on synthetic data. Owing to our choice of input data representation, physically correct synthetic training data, and targeted augmentations, the network naturally generalizes to real data. Notably, these simple techniques are sufficient to achieve generalization. No real data at all is required for training. To the best of our knowledge, we are the first to present a method for spin and trajectory prediction in simple monocular broadcast videos, achieving an accuracy of 92.0% in spin classification and a 2D reprojection error of 0.19% of the image diagonal.
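To make the "percent of the image diagonal" unit concrete, here is a minimal sketch of how such a normalized 2D reprojection error could be computed. The function name and the choice of averaging per-point Euclidean distances are assumptions for illustration; the abstract does not specify the exact definition used in the paper.

```python
import math
from typing import Sequence, Tuple

Point2D = Tuple[float, float]


def reprojection_error_percent(
    projected: Sequence[Point2D],
    observed: Sequence[Point2D],
    width: float,
    height: float,
) -> float:
    """Mean pixel distance between reprojected and observed 2D points,
    expressed as a percentage of the image diagonal (assumed definition)."""
    if len(projected) != len(observed) or not observed:
        raise ValueError("point lists must be non-empty and of equal length")
    diagonal = math.hypot(width, height)
    mean_px = sum(math.dist(p, o) for p, o in zip(projected, observed)) / len(observed)
    return 100.0 * mean_px / diagonal


# Toy example: a 3x4 image has a diagonal of 5 pixels.
error = reprojection_error_percent([(0, 0), (1, 0)], [(0, 0), (0, 0)], width=3, height=4)
```

Normalizing by the diagonal makes the number comparable across videos with different resolutions.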

Efficient 2D to Full 3D Human Pose Uplifting including Joint Rotations

Apr 14, 2025

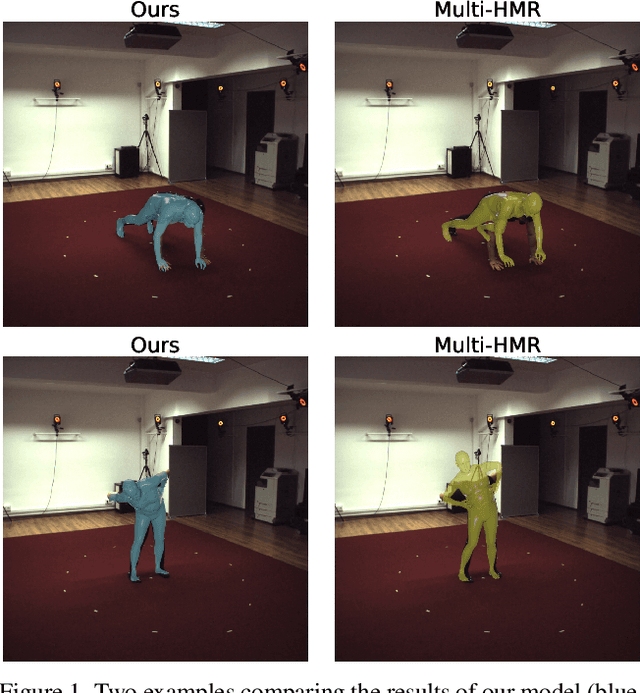

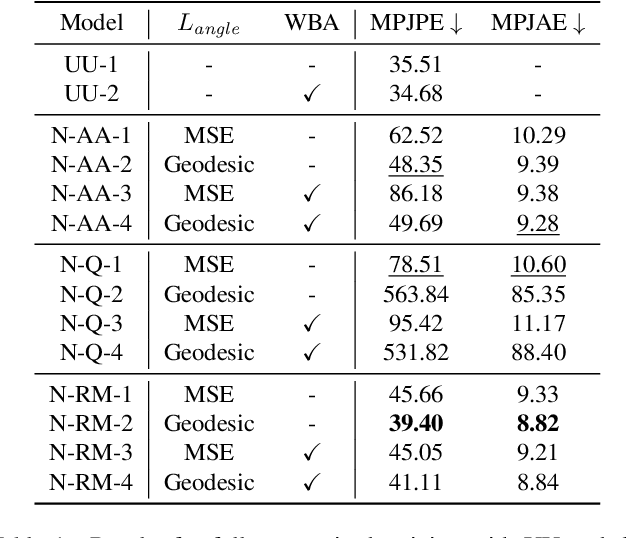

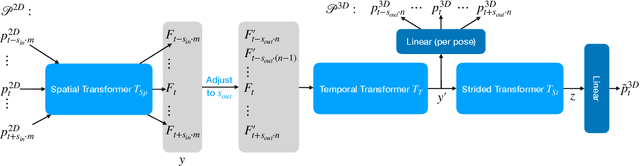

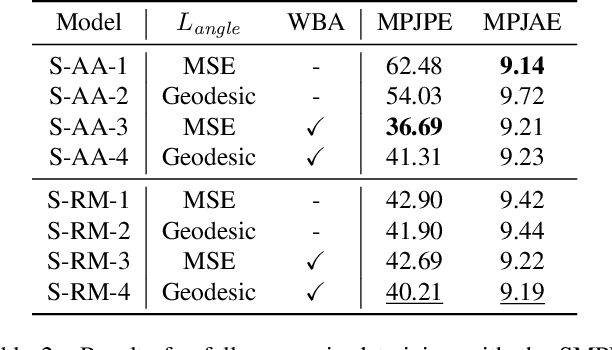

Abstract:In sports analytics, accurately capturing both the 3D locations and rotations of body joints is essential for understanding an athlete's biomechanics. While Human Mesh Recovery (HMR) models can estimate joint rotations, they often exhibit lower accuracy in joint localization compared to 3D Human Pose Estimation (HPE) models. Recent work addressed this limitation by combining a 3D HPE model with inverse kinematics (IK) to estimate both joint locations and rotations. However, IK is computationally expensive. To overcome this, we propose a novel 2D-to-3D uplifting model that directly estimates 3D human poses, including joint rotations, in a single forward pass. We investigate multiple rotation representations, loss functions, and training strategies - both with and without access to ground truth rotations. Our models achieve state-of-the-art accuracy in rotation estimation, are 150 times faster than the IK-based approach, and surpass HMR models in joint localization precision.

SkipClick: Combining Quick Responses and Low-Level Features for Interactive Segmentation in Winter Sports Contexts

Jan 14, 2025

Abstract:In this paper, we present a novel architecture for interactive segmentation in winter sports contexts. The field of interactive segmentation deals with the prediction of high-quality segmentation masks by informing the network about the object's position with the help of user guidance. In our case, the guidance consists of click prompts. For this task, we first present a baseline architecture which is specifically geared towards responding quickly after each click. Afterwards, we motivate and describe a number of architectural modifications which improve the performance when tasked with segmenting winter sports equipment on the WSESeg dataset. With regard to the average NoC@85 metric on the WSESeg classes, we outperform SAM and HQ-SAM by 2.336 and 7.946 clicks, respectively. When applied to the HQSeg-44k dataset, our system delivers state-of-the-art results with a NoC@90 of 6.00 and NoC@95 of 9.89. In addition, we test our model on a novel dataset containing masks for humans during skiing.

Leveraging Anthropometric Measurements to Improve Human Mesh Estimation and Ensure Consistent Body Shapes

Sep 27, 2024

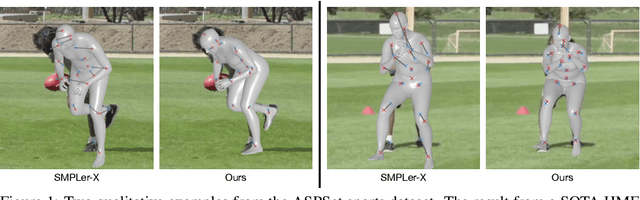

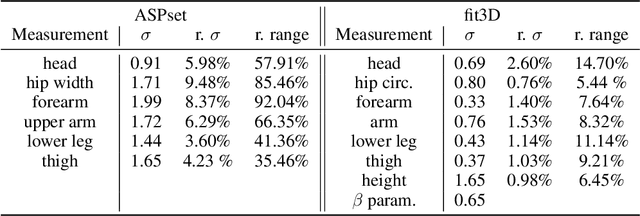

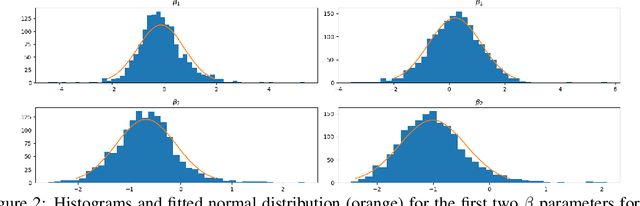

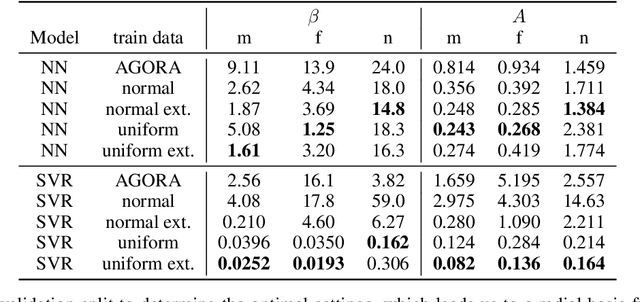

Abstract:The basic body shape of a person does not change within a single video. However, most SOTA human mesh estimation (HME) models output a slightly different body shape for each video frame, which results in inconsistent body shapes for the same person. In contrast, we leverage anthropometric measurements of the kind tailors have been taking from humans for centuries. We create a model called A2B that converts such anthropometric measurements to body shape parameters of human mesh models. Moreover, we find that finetuned SOTA 3D human pose estimation (HPE) models outperform HME models regarding the precision of the estimated keypoints. We show that applying inverse kinematics (IK) to the results of such a 3D HPE model and combining the resulting body pose with the A2B body shape leads to superior and consistent human meshes for challenging datasets like ASPset or fit3D, where we can lower the MPJPE by over 30 mm compared to SOTA HME models. Further, replacing the HME models' estimates of the body shape parameters with A2B model results not only increases the performance of these HME models, but also leads to consistent body shapes.
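For readers unfamiliar with MPJPE (mean per-joint position error), the following is a minimal sketch of the metric for a single pose. It assumes both poses are already in the same coordinate frame and in millimetres; root alignment or Procrustes alignment, which some evaluation protocols apply first, is deliberately left out. The function name is chosen for illustration only.

```python
import math
from typing import Sequence, Tuple

Joint3D = Tuple[float, float, float]


def mpjpe(predicted: Sequence[Joint3D], ground_truth: Sequence[Joint3D]) -> float:
    """Average Euclidean distance between corresponding 3D joints."""
    if len(predicted) != len(ground_truth) or not ground_truth:
        raise ValueError("poses must be non-empty and have the same number of joints")
    distances = [math.dist(p, g) for p, g in zip(predicted, ground_truth)]
    return sum(distances) / len(distances)


# Toy example with two joints: one exact, one off by a 3-4-5 triangle.
pose_error = mpjpe([(0, 0, 0), (3, 4, 0)], [(0, 0, 0), (0, 0, 0)])
```

In this toy case the per-joint errors are 0 and 5, so the mean is 2.5 in whatever unit the coordinates use.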

A Fair Ranking and New Model for Panoptic Scene Graph Generation

Jul 12, 2024

Abstract:In panoptic scene graph generation (PSGG), models retrieve interactions between objects in an image which are grounded by panoptic segmentation masks. Previous evaluations on panoptic scene graphs have been subject to an erroneous evaluation protocol where multiple masks for the same object can lead to multiple relation distributions per mask-mask pair. This can be exploited to increase the final score. We correct this flaw and provide a fair ranking over a wide range of existing PSGG models. The observed scores for existing methods increase by up to 7.4 mR@50 for all two-stage methods, while dropping by up to 19.3 mR@50 for all one-stage methods, highlighting the importance of a correct evaluation. Contrary to recent publications, we show that existing two-stage methods are competitive with one-stage methods. Building on this, we introduce the Decoupled SceneFormer (DSFormer), a novel two-stage model that outperforms all existing scene graph models by a large margin of +11 mR@50 and +10 mNgR@50 on the corrected evaluation, thus setting a new SOTA. As a core design principle, DSFormer encodes subject and object masks directly into feature space.
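The mR@50 scores above are mean-recall values. As a rough illustration of the idea only, the sketch below computes recall per predicate class among the top-K ranked triplets and then averages over classes. It is a simplification under stated assumptions: triplets are matched by exact label equality rather than by mask overlap, and everything is pooled in one list instead of being aggregated over a dataset. It is not the corrected protocol from the paper, and the function name is hypothetical.

```python
from collections import defaultdict
from typing import Dict, Sequence, Tuple

Triplet = Tuple[str, str, str]  # (subject, predicate, object)


def mean_recall_at_k(
    ground_truth: Sequence[Triplet],
    ranked_predictions: Sequence[Triplet],
    k: int,
) -> float:
    """Average of per-predicate recall among the top-k predictions."""
    top_k = set(ranked_predictions[:k])
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for triplet in ground_truth:
        predicate = triplet[1]
        totals[predicate] += 1
        if triplet in top_k:
            hits[predicate] += 1
    if not totals:
        raise ValueError("ground_truth must not be empty")
    return sum(hits[p] / totals[p] for p in totals) / len(totals)


gt = [("person", "riding", "horse"), ("person", "on", "grass"), ("dog", "on", "grass")]
preds = [("person", "riding", "horse"), ("dog", "on", "grass"), ("person", "on", "horse")]
score = mean_recall_at_k(gt, preds, k=2)
```

Here "riding" is fully recalled and "on" is half recalled, so the class average is 0.75. Averaging per class is what keeps rare predicates from being drowned out by frequent ones.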

WSESeg: Introducing a Dataset for the Segmentation of Winter Sports Equipment with a Baseline for Interactive Segmentation

Jul 12, 2024

Abstract:In this paper, we introduce a new dataset containing instance segmentation masks for ten different categories of winter sports equipment, called WSESeg (Winter Sports Equipment Segmentation). Furthermore, we carry out interactive segmentation experiments on this dataset to explore possibilities for efficient further labeling. The SAM and HQ-SAM models are conceptualized as foundation models for performing user-guided segmentation. In order to measure their claimed generalization capability, we evaluate them on WSESeg. Since interactive segmentation offers the benefit of creating easily exploitable ground truth data at test time, we test various online adaptation methods to explore the potential for improvement without having to fine-tune the models explicitly. Our experiments show that our adaptation methods drastically reduce the Failure Rate (FR) and Number of Clicks (NoC) metrics, which generally means that better interactive segmentation results are reached faster.
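The NoC and FR metrics mentioned above can be sketched as follows, using the convention common in the interactive segmentation literature: NoC@t is the number of clicks needed until the IoU first reaches threshold t, capped at a click budget, and FR is the fraction of instances that never reach t within that budget. The budget of 20 clicks and the function names are assumptions for illustration, not details taken from the abstract.

```python
from typing import List, Sequence, Tuple


def noc_at(ious_per_click: Sequence[float], threshold: float, max_clicks: int = 20) -> int:
    """Clicks needed until IoU first reaches the threshold (capped at max_clicks)."""
    for click_number, iou in enumerate(ious_per_click[:max_clicks], start=1):
        if iou >= threshold:
            return click_number
    return max_clicks


def summarize(
    instances: List[Sequence[float]], threshold: float, max_clicks: int = 20
) -> Tuple[float, float]:
    """Return (mean NoC, failure rate) over a list of per-instance IoU curves."""
    if not instances:
        raise ValueError("instances must not be empty")
    nocs = [noc_at(ious, threshold, max_clicks) for ious in instances]
    failures = sum(
        1 for ious in instances
        if not any(iou >= threshold for iou in ious[:max_clicks])
    )
    return sum(nocs) / len(nocs), failures / len(instances)


# Two toy instances: the first reaches 0.9 IoU at click 3, the second never does.
mean_noc, failure_rate = summarize([[0.5, 0.8, 0.92], [0.3, 0.4]], threshold=0.9, max_clicks=5)
```

With a budget of 5 clicks, the failed instance counts as 5 clicks, giving a mean NoC@90 of 4.0 and a failure rate of 0.5.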

Segformer++: Efficient Token-Merging Strategies for High-Resolution Semantic Segmentation

May 23, 2024

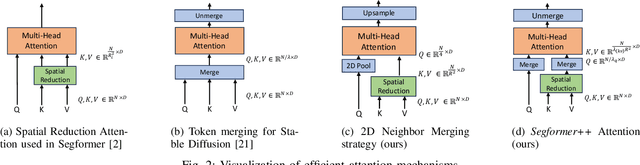

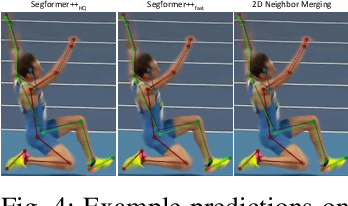

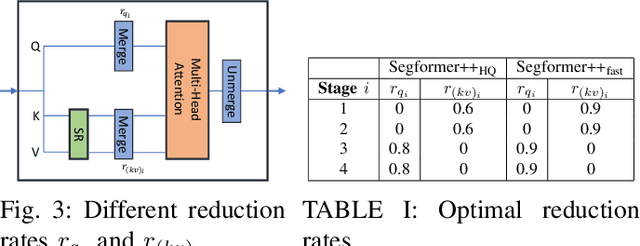

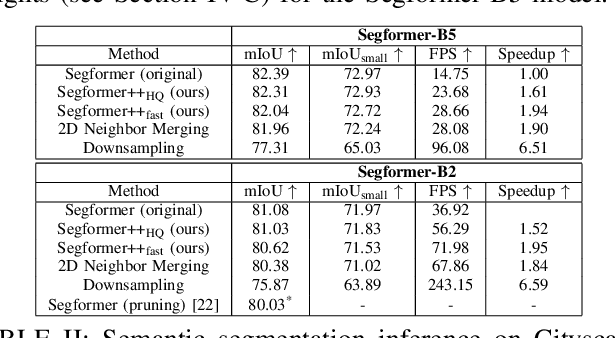

Abstract:Utilizing transformer architectures for semantic segmentation of high-resolution images is hindered by the attention's quadratic computational complexity in the number of tokens. A solution to this challenge involves decreasing the number of tokens through token merging, which has exhibited remarkable enhancements in inference speed, training efficiency, and memory utilization for image classification tasks. In this paper, we explore various token merging strategies within the framework of the Segformer architecture and perform experiments on multiple semantic segmentation and human pose estimation datasets. Notably, without model re-training, we achieve, for example, an inference acceleration of 61% on the Cityscapes dataset while maintaining the mIoU performance. Consequently, this paper facilitates the deployment of transformer-based architectures on resource-constrained devices and in real-time applications.
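The quadratic dependence on the token count is what makes merging attractive. The short sketch below only counts entries of the attention score matrix before and after merging; it is a back-of-the-envelope illustration with hypothetical function names, not the merging strategy from the paper, and it does not reproduce the reported 61% figure, since other layers scale linearly with the token count.

```python
def attention_pair_count(num_tokens: int) -> int:
    """Number of query-key pairs in one full self-attention score matrix."""
    return num_tokens * num_tokens


def relative_attention_cost(num_tokens: int, merged_away: int) -> float:
    """Attention cost after removing `merged_away` tokens, relative to the original."""
    if not 0 <= merged_away < num_tokens:
        raise ValueError("merged_away must be in [0, num_tokens)")
    remaining = num_tokens - merged_away
    return attention_pair_count(remaining) / attention_pair_count(num_tokens)


# Halving 1024 tokens leaves one quarter of the query-key pairs.
ratio = relative_attention_cost(1024, 512)
```

Halving the tokens therefore cuts the score-matrix work to a quarter, which is why the benefit grows with image resolution.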

Towards Learning Monocular 3D Object Localization From 2D Labels using the Physical Laws of Motion

Oct 26, 2023

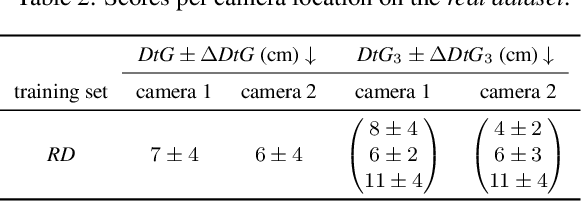

Abstract:We present a novel method for precise 3D object localization in single images from a single calibrated camera using only 2D labels. No expensive 3D labels are needed. Thus, instead of using 3D labels, our model is trained with easy-to-annotate 2D labels along with the physical knowledge of the object's motion. Given this information, the model can infer the latent third dimension, even though it has never seen this information during training. Our method is evaluated on both synthetic and real-world datasets, and we are able to achieve a mean distance error of just 6 cm in our experiments on real data. The results indicate the method's potential as a step towards learning 3D object location estimation, where collecting 3D data for training is not feasible.

Haystack: A Panoptic Scene Graph Dataset to Evaluate Rare Predicate Classes

Sep 05, 2023

Abstract:Current scene graph datasets suffer from strong long-tail distributions of their predicate classes. Due to a very low number of some predicate classes in the test sets, no reliable metrics can be retrieved for the rarest classes. We construct a new panoptic scene graph dataset and a set of metrics that are designed as a benchmark for the predictive performance, especially on rare predicate classes. To construct the new dataset, we propose a model-assisted annotation pipeline that efficiently finds rare predicate classes that are hidden in a large set of images like needles in a haystack. Contrary to prior scene graph datasets, Haystack contains explicit negative annotations, i.e. annotations that a given relation does not have a certain predicate class. Negative annotations are especially helpful in the field of scene graph generation and open up a whole new set of possibilities to improve current scene graph generation models. Haystack is 100% compatible with existing panoptic scene graph datasets and can easily be integrated with existing evaluation pipelines. Our dataset and code can be found here: https://lorjul.github.io/haystack/. It includes annotation files and easy-to-use scripts and utilities to help with integrating our dataset into existing work.
