Rahul Baburajan

Phase retrieval for Fourier Ptychography under varying amount of measurements

May 09, 2018

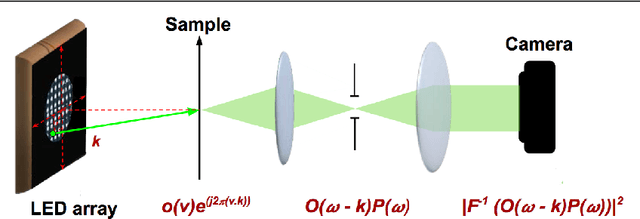

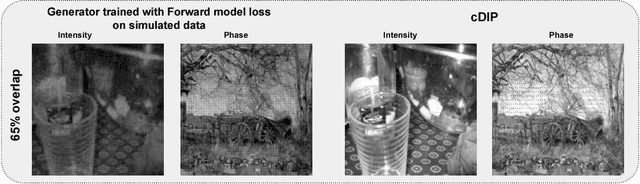

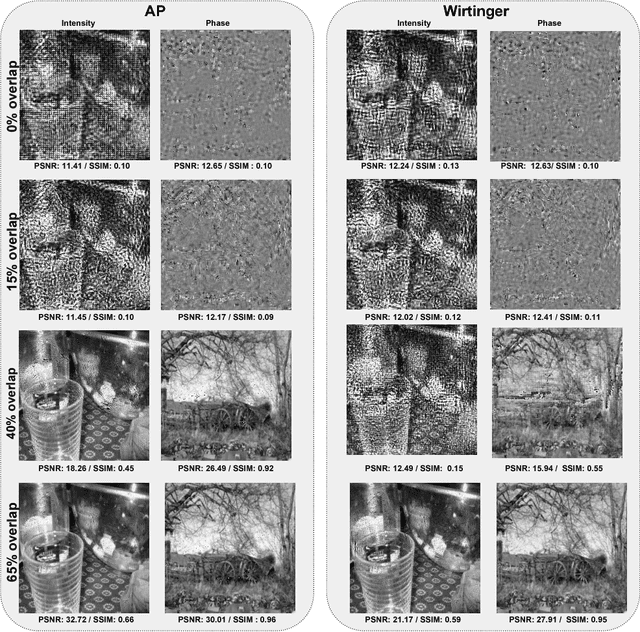

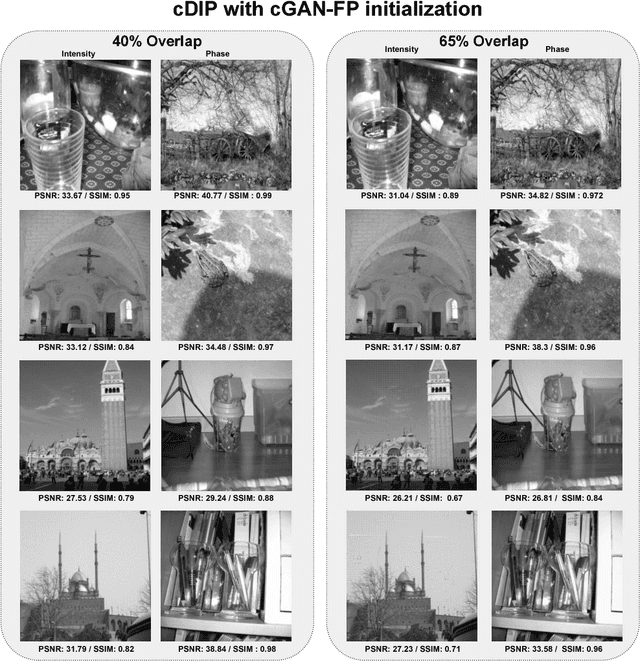

Abstract: Fourier ptychography is a recently proposed imaging technique that yields high-resolution images by computationally overcoming the diffraction blur of an optical system. At the crux of this method is the phase retrieval algorithm, which computationally stitches together low-resolution images taken under varying illumination angles of a coherent light source. However, the traditional iterative phase retrieval technique relies heavily on its initialization and also needs substantial overlap in the Fourier domain between successively captured low-resolution images, which increases both acquisition time and data volume. We show that an autoencoder-based architecture can be adaptively trained for phase retrieval both under low overlap, where traditional techniques fail completely, and at higher levels of overlap. For the low-overlap case, we show that a supervised deep learning technique using an autoencoder generator is a good choice for solving the Fourier ptychography problem. For the high-overlap case, we show that optimizing the generator to reduce the forward model error is an appropriate choice. Using simulations for the challenging case of uncorrelated phase and amplitude, we show that our method outperforms many of the previously proposed Fourier ptychography phase retrieval techniques.
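To make the measurement process behind this abstract concrete, here is a minimal NumPy sketch of a commonly used Fourier ptychography forward model: each illumination angle selects a shifted, pupil-limited window of the object's spectrum, and the camera records only the intensity of the resulting low-resolution field. This is an illustration under stated assumptions, not the paper's implementation; the function names (`circular_pupil`, `fp_forward`), the binary circular pupil, the grid sizes, and the integer spectrum shifts are all hypothetical choices.

```python
import numpy as np

def circular_pupil(n, radius):
    """Binary low-pass pupil (assumed ideal and circular) on an n x n frequency grid."""
    yy, xx = np.mgrid[:n, :n] - n // 2
    return (xx**2 + yy**2 <= radius**2).astype(float)

def fp_forward(obj, shifts, pupil):
    """Simulate low-resolution intensity images, one per illumination shift.

    obj    : complex high-resolution object (square array)
    shifts : list of (dy, dx) integer offsets of the pupil in the spectrum,
             standing in for the illumination angles
    pupil  : square pupil mask, smaller than obj
    """
    n_hi, n_lo = obj.shape[0], pupil.shape[0]
    spectrum = np.fft.fftshift(np.fft.fft2(obj))  # centred object spectrum
    centre, half = n_hi // 2, n_lo // 2
    images = []
    for dy, dx in shifts:
        r0, c0 = centre + dy - half, centre + dx - half
        # Pupil-limited window of the spectrum seen under this illumination
        patch = spectrum[r0:r0 + n_lo, c0:c0 + n_lo] * pupil
        field = np.fft.ifft2(np.fft.ifftshift(patch))
        images.append(np.abs(field) ** 2)  # the sensor records intensity only
    return np.stack(images)

# Example with uncorrelated random amplitude and phase (illustrative sizes)
rng = np.random.default_rng(0)
amplitude = rng.uniform(0.5, 1.0, size=(64, 64))
phase = rng.uniform(-np.pi, np.pi, size=(64, 64))
obj = amplitude * np.exp(1j * phase)
pupil = circular_pupil(16, radius=6)
shifts = [(dy, dx) for dy in (-8, 0, 8) for dx in (-8, 0, 8)]
measurements = fp_forward(obj, shifts, pupil)
```

In this sketch the spacing of `shifts` relative to the pupil radius controls how much neighbouring spectrum windows overlap, which is the quantity the abstract varies. Because only `np.abs(field) ** 2` is kept, the phase has to be recovered computationally from the stack of measurements.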

Solving Inverse Computational Imaging Problems using Deep Pixel-level Prior

Apr 24, 2018

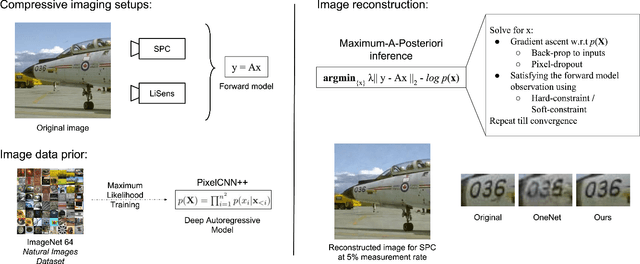

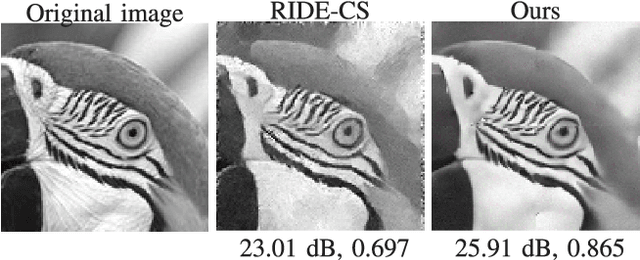

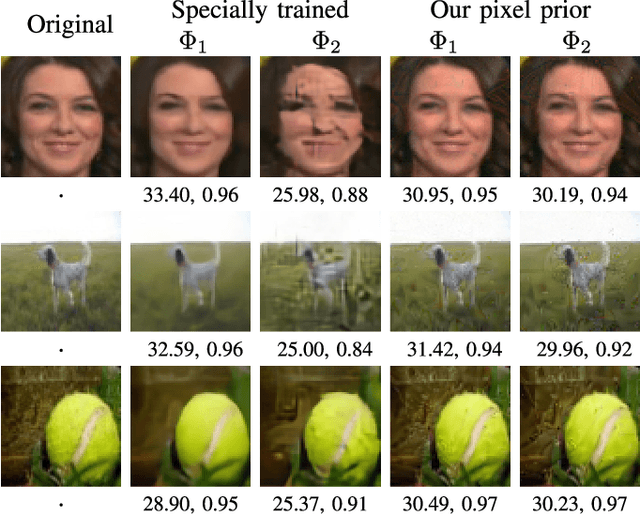

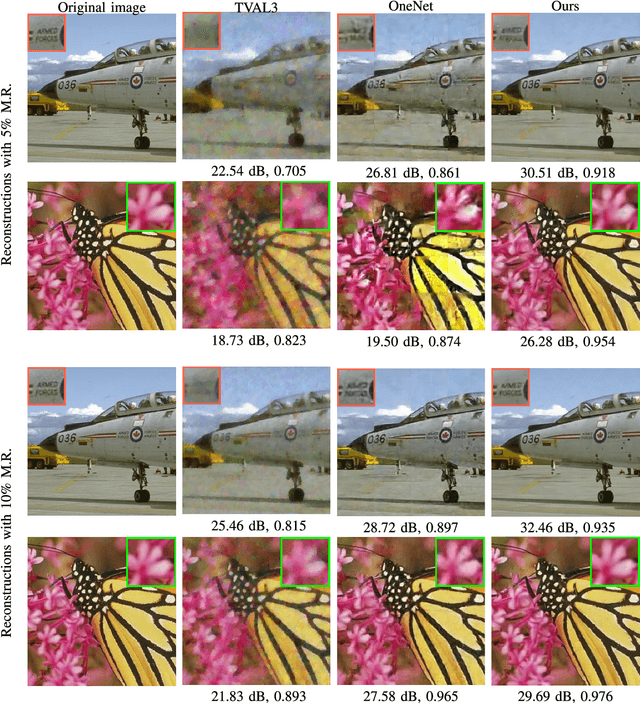

Abstract: Signal reconstruction is a challenging aspect of computational imaging, as it often involves solving ill-posed inverse problems. Recently, deep feed-forward neural networks have led to state-of-the-art results in solving various inverse imaging problems. However, being task-specific, these networks have to be learned separately for each inverse problem. A more flexible approach would be to learn a deep generative model once and then use it as a signal prior for solving various inverse problems. We show that, among the various state-of-the-art deep generative models, autoregressive models are especially suitable for this purpose, for the following reasons. First, they explicitly model pixel-level dependencies and hence are better at reconstructing low-level details such as texture patterns and edges. Second, they provide an explicit expression for the image prior, which can then be used for MAP-based inference along with the forward model. Third, they can model long-range dependencies in images, which makes them ideal for handling global multiplexing as encountered in various compressive imaging systems. We demonstrate the efficacy of our proposed approach in solving three computational imaging problems: Single Pixel Camera (SPC), LiSens and FlatCam. For both real and simulated cases, we obtain better reconstructions than the state-of-the-art methods in terms of perceptual and quantitative metrics.
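The MAP-based inference mentioned in this abstract can be written out as follows. This is a generic sketch, not the paper's exact objective: the linear forward operator $A$, the additive Gaussian noise with variance $\sigma^2$, and the pixel ordering $i = 1, \dots, N$ are assumptions introduced here for illustration.

$$
\hat{x} = \arg\max_{x} \; \log p(y \mid x) + \log p(x),
\qquad
p(x) = \prod_{i=1}^{N} p\left(x_i \mid x_1, \dots, x_{i-1}\right)
$$

Under the assumed model $y = Ax + n$ with $n \sim \mathcal{N}(0, \sigma^2 I)$, this becomes

$$
\hat{x} = \arg\min_{x} \; \frac{1}{2\sigma^2} \lVert y - Ax \rVert_2^2 \; - \sum_{i=1}^{N} \log p\left(x_i \mid x_1, \dots, x_{i-1}\right)
$$

The first term ties the estimate to the measurements through the forward model, and the second is the explicit log-prior that an autoregressive model supplies as a product of per-pixel conditionals. Only $A$ changes between imaging systems, so the same learned prior can in principle be reused across problems.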
