Honey Gupta

Pyramidal Edge-maps based Guided Thermal Super-resolution

Mar 13, 2020

Abstract: Thermal imaging is a robust sensing technique, but its consumer applicability is limited by the high cost of thermal sensors. Nevertheless, low-resolution thermal cameras are relatively affordable and are usually accompanied by a high-resolution visible-range camera. This visible-range image can be used as a guide to reconstruct a high-resolution thermal image using guided super-resolution (GSR) techniques. However, the difference in wavelength range of the input images makes this task challenging. Improper processing can introduce artifacts such as blur and ghosting, mainly due to texture and content mismatch. To this end, we propose a novel algorithm for guided super-resolution that explicitly tackles the texture mismatch caused by multimodality. We propose a two-stage network that combines information from a low-resolution thermal image and a high-resolution visible image with the help of multi-level edge extraction and integration. The first stage of our network extracts edge-maps from the visual image at different pyramidal levels, and the second stage integrates these edge-maps into our proposed super-resolution network at appropriate layers. Extracting and integrating edges at different scales simplifies the task of GSR, as it provides information ranging from texture level to object level in a progressive manner. Using multi-level edges also allows us to adjust the contribution of the visual image directly at test time, which provides controllability. We perform multiple experiments and show that our method performs better than existing state-of-the-art guided super-resolution methods both quantitatively and qualitatively.
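As a rough illustration of multi-level edge extraction with test-time control, the sketch below builds a small image pyramid and computes one edge map per level. It is not the paper's method: the abstract describes a learned edge-extraction stage, whereas this sketch swaps in hand-written 2x2 average pooling and forward-difference gradients. The function names and the `weights` parameter are hypothetical, introduced here only for illustration.

```python
def downsample2x(img):
    """Halve the resolution by averaging each 2x2 block (sides must be even)."""
    h, w = len(img), len(img[0])
    return [[(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4.0
             for x in range(0, w, 2)]
            for y in range(0, h, 2)]


def edge_map(img):
    """Gradient magnitude from forward differences; border pixels are replicated."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            gx = img[y][min(x + 1, w - 1)] - img[y][x]
            gy = img[min(y + 1, h - 1)][x] - img[y][x]
            row.append((gx * gx + gy * gy) ** 0.5)
        out.append(row)
    return out


def pyramidal_edge_maps(img, levels, weights=None):
    """Return one edge map per pyramid level, finest level first.

    `weights` (hypothetical) scales each level's edge map, mimicking the idea
    of adjusting the visible image's contribution at test time.
    """
    weights = weights or [1.0] * levels
    maps = []
    current = img
    for level in range(levels):
        edges = edge_map(current)
        maps.append([[weights[level] * v for v in row] for row in edges])
        if level < levels - 1:
            current = downsample2x(current)
    return maps
```

Fine levels respond to texture-scale detail and coarse levels respond only to larger structures, so setting a level's weight to zero removes that scale of guidance without touching the others.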

Unsupervised Single Image Underwater Depth Estimation

May 28, 2019

Abstract: Depth estimation from a single underwater image is a highly ill-posed and challenging problem. Due to the absence of large generalized underwater depth datasets and the difficulty in obtaining ground-truth depth-maps, supervised learning techniques such as direct depth regression cannot be used. In this paper, we propose an unsupervised method for depth estimation from a single underwater image taken 'in the wild' by using haze as a cue for depth. Our approach is based on indirect depth-map estimation, where we learn the mapping functions between unpaired RGB-D terrestrial images and arbitrary underwater images to estimate the required depth-map. The proposed method is based on the principles of cycle-consistent learning and uses dense-block based auto-encoders as generator networks. We evaluate and compare our method both quantitatively and qualitatively on various underwater images with diverse attenuation and scattering conditions, and show that our method produces state-of-the-art results for unsupervised depth estimation from a single underwater image.
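To see why haze can act as a depth cue, it helps to look at the standard single-scattering haze model, in which transmission decays exponentially with distance. The abstract does not state which image-formation model the paper uses, so the sketch below is an assumption for illustration only; it also ignores the wavelength dependence of underwater attenuation, and the parameter names (`beta`, `airlight`) are chosen here, not taken from the paper.

```python
import math


def transmission(depth, beta):
    """Fraction of scene light that survives a path of length `depth`.

    `beta` is an assumed scalar attenuation coefficient.
    """
    return math.exp(-beta * depth)


def hazy_pixel(clear, depth, beta, airlight):
    """Observed intensity: attenuated scene radiance plus scattered background light."""
    t = transmission(depth, beta)
    return clear * t + airlight * (1.0 - t)


def depth_from_transmission(t, beta):
    """Invert the exponential decay to recover depth from transmission."""
    return -math.log(t) / beta
```

Under this model, distant pixels drift towards the background light, so the amount of haze at a pixel grows monotonically with its depth. A learned mapping between hazy underwater images and terrestrial RGB-D images can exploit that relationship without needing `beta` explicitly.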

Phase retrieval for Fourier Ptychography under varying amount of measurements

May 09, 2018

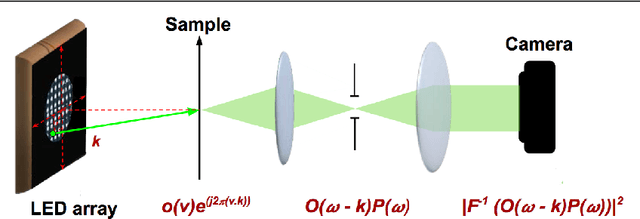

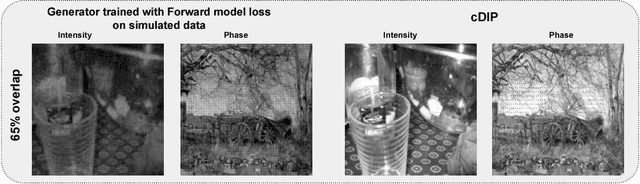

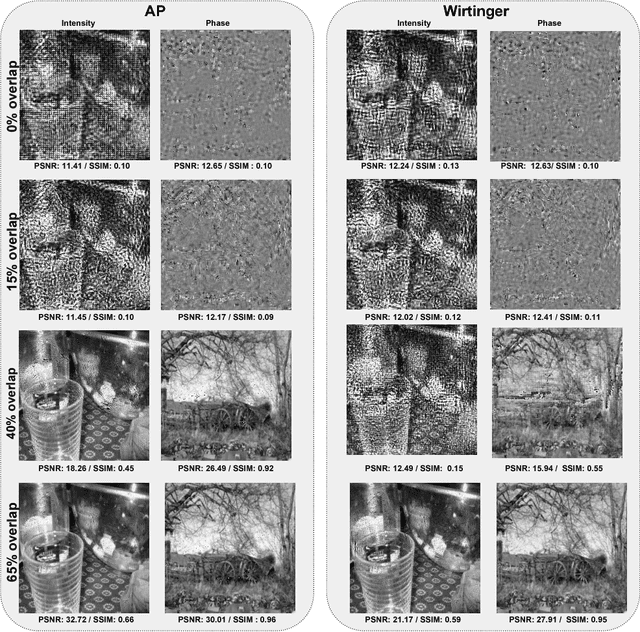

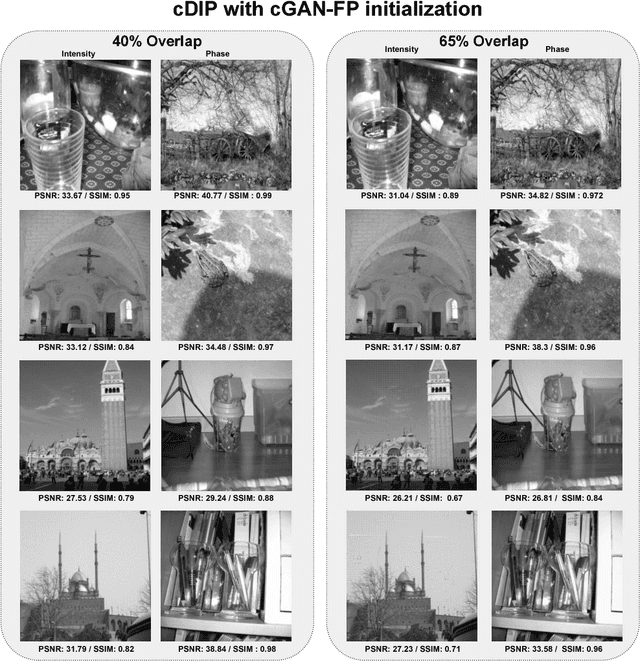

Abstract: Fourier ptychography is a recently proposed imaging technique that yields high-resolution images by computationally transcending the diffraction blur of an optical system. At the crux of this method is the phase retrieval algorithm, which is used for computationally stitching together low-resolution images taken under varying illumination angles of a coherent light source. However, the traditional iterative phase retrieval technique relies heavily on the initialization and also needs a substantial amount of overlap in the Fourier domain between successively captured low-resolution images, which increases the acquisition time and the amount of data. We show that an auto-encoder based architecture can be adaptively trained for phase retrieval both under low overlap, where traditional techniques completely fail, and at higher levels of overlap. For the low-overlap case, we show that a supervised deep learning technique using an autoencoder generator is a good choice for solving the Fourier ptychography problem. For the high-overlap case, we show that optimizing the generator to reduce the forward model error is an appropriate choice. Using simulations for the challenging case of uncorrelated phase and amplitude, we show that our method outperforms many of the previously proposed Fourier ptychography phase retrieval techniques.
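The forward model error mentioned in the abstract can be illustrated with a toy one-dimensional version of the Fourier ptychography measurement process. This is a minimal sketch under stated assumptions, not the paper's implementation: each illumination angle is modelled as a band of the object's spectrum centred on a different frequency index, the pupil is an ideal rectangular window, and the band is not cropped to a smaller grid. All function names and parameters are hypothetical.

```python
import cmath


def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform; adequate for a toy signal."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        s = sum(x[t] * cmath.exp(sign * 2j * cmath.pi * t * k / n) for t in range(n))
        out.append(s / n if inverse else s)
    return out


def low_res_intensity(obj, shift, half_width):
    """One measurement: keep frequencies within `half_width` of `shift`,
    transform back, and record intensity only (the phase is lost)."""
    n = len(obj)
    spectrum = dft(obj)
    band = [spectrum[k] if min((k - shift) % n, (shift - k) % n) <= half_width else 0j
            for k in range(n)]
    return [abs(v) ** 2 for v in dft(band, inverse=True)]


def forward_model(obj, shifts, half_width):
    """Simulate one intensity measurement per illumination shift."""
    return [low_res_intensity(obj, s, half_width) for s in shifts]


def forward_error(estimate, measurements, shifts, half_width):
    """Sum of squared differences between predicted and measured intensities."""
    predicted = forward_model(estimate, shifts, half_width)
    return sum((p - m) ** 2
               for pred, meas in zip(predicted, measurements)
               for p, m in zip(pred, meas))
```

With shifts spaced two bins apart and `half_width=1`, neighbouring bands share one frequency bin, which is the kind of Fourier-domain overlap the abstract refers to. Phase retrieval then amounts to finding a complex `estimate` that drives `forward_error` towards zero.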
