Rânik Guidolini

Bio-Inspired Foveated Technique for Augmented-Range Vehicle Detection Using Deep Neural Networks

Oct 02, 2019

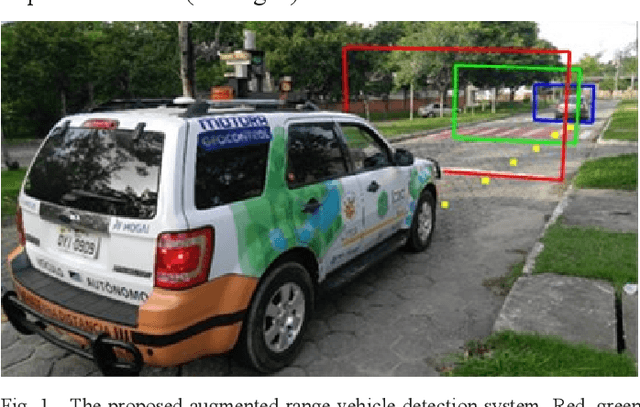

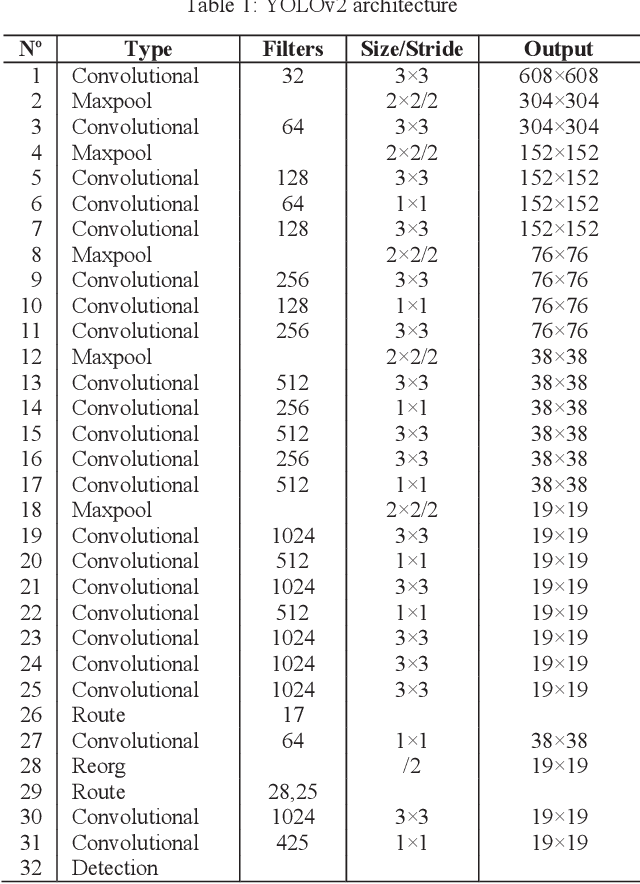

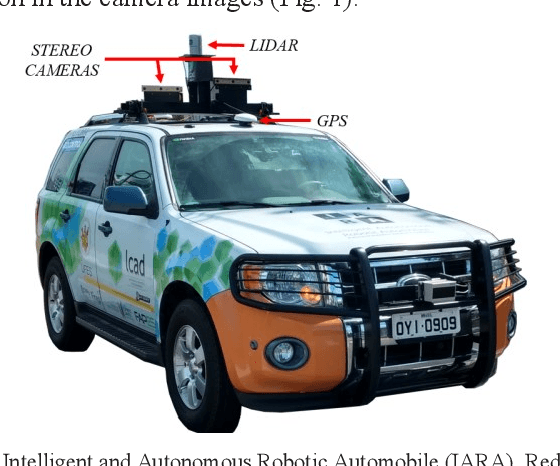

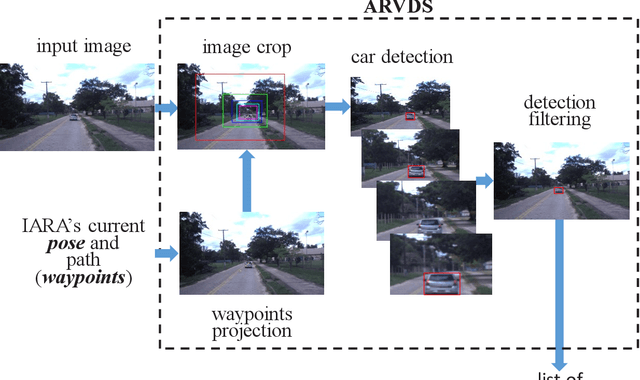

Abstract: We propose a bio-inspired foveated technique to detect cars in a long-range camera view using a deep convolutional neural network (DCNN) for the IARA self-driving car. The DCNN receives as input (i) an image, which is captured by a camera installed on IARA's roof; and (ii) crops of the image, which are centered on the waypoints computed by IARA's path planner and whose sizes increase with the distance from IARA. We employ an overlap filter to discard detections of the same car in different crops of the same image based on the percentage of overlap of the detections' bounding boxes. We evaluated the performance of the proposed augmented-range vehicle detection system (ARVDS) using the hardware and software infrastructure available in the IARA self-driving car. Using IARA, we captured thousands of images of real traffic situations containing cars at long range. Experimental results show that ARVDS increases the Average Precision (AP) of long-range car detection from 29.51% (using a single whole image) to 63.15%.
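The overlap filter mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not say how the overlap percentage is defined, so the code below makes several assumptions: boxes are already mapped back to full-image pixel coordinates, overlap is measured as intersection area over the area of the smaller box, the higher-scoring detection is kept, and the 0.5 threshold is arbitrary. The names `overlap_fraction` and `overlap_filter` are hypothetical and are not taken from the paper.

```python
from typing import List, Tuple

# (x_min, y_min, x_max, y_max) in full-image pixel coordinates (assumed layout)
Box = Tuple[float, float, float, float]
Detection = Tuple[Box, float]  # (bounding box, confidence score)


def overlap_fraction(a: Box, b: Box) -> float:
    """Intersection area divided by the area of the smaller box (assumed metric)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    smaller = min(area_a, area_b)
    return (inter_w * inter_h) / smaller if smaller > 0 else 0.0


def overlap_filter(detections: List[Detection], threshold: float = 0.5) -> List[Detection]:
    """Keep the highest-scoring detection among boxes that overlap too much."""
    kept: List[Detection] = []
    # Visit detections from most to least confident.
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        if all(overlap_fraction(box, k[0]) < threshold for k in kept):
            kept.append((box, score))
    return kept


# The same car seen in the whole image and in a crop, plus a second car.
detections = [
    ((100.0, 100.0, 200.0, 200.0), 0.9),
    ((110.0, 105.0, 205.0, 198.0), 0.7),
    ((400.0, 100.0, 450.0, 150.0), 0.8),
]
filtered = overlap_filter(detections)
```

Dividing by the smaller box's area, rather than by the union, is one plausible choice here because a crop-level detection of a distant car may be fully contained in a looser whole-image box; the paper may define the percentage differently.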

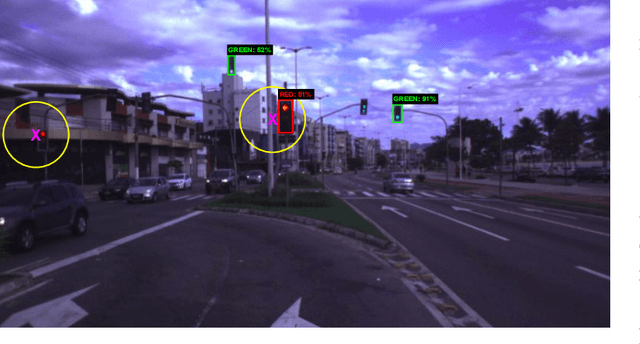

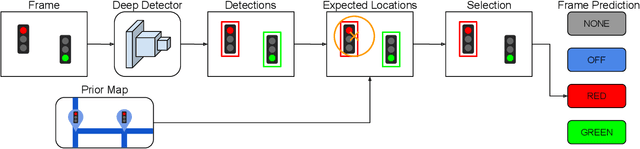

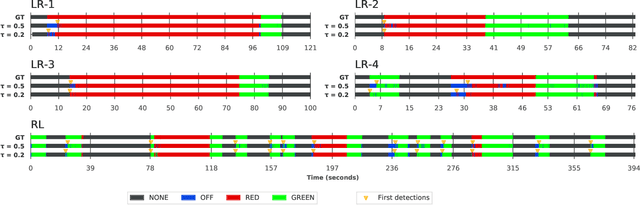

Traffic Light Recognition Using Deep Learning and Prior Maps for Autonomous Cars

Jun 04, 2019

Abstract: Autonomous terrestrial vehicles must be capable of perceiving traffic lights and recognizing their current states to share the streets with human drivers. Most of the time, human drivers can easily identify the relevant traffic lights. To give autonomous cars the same ability, a common solution is to integrate recognition with prior maps. However, an additional solution is required to detect and recognize the traffic lights themselves. Deep learning techniques have shown great performance and generalization power, including in traffic-related problems. Motivated by the advances in deep learning, recent works have leveraged state-of-the-art deep detectors to locate (and further recognize) traffic lights from 2D camera images. However, none of them combines the power of deep learning-based detectors with prior maps to recognize the state of the relevant traffic lights. Based on that, this work proposes to integrate the power of deep learning-based detection with the prior maps used by our car platform IARA (acronym for Intelligent Autonomous Robotic Automobile) to recognize the relevant traffic lights of predefined routes. The process is divided into two phases: an offline phase for map construction and traffic light annotation; and an online phase for traffic light recognition and identification of the relevant ones. The proposed system was evaluated on five test cases (routes) in the city of Vitória, each case being composed of a video sequence and a prior map with the relevant traffic lights for the route. Results showed that the proposed technique is able to correctly identify the relevant traffic light along the trajectory.

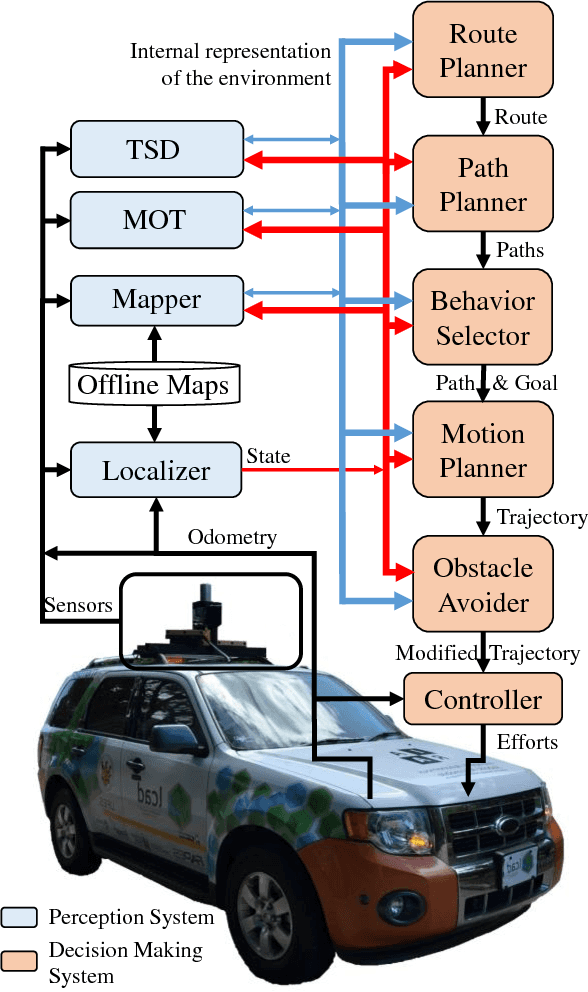

Self-Driving Cars: A Survey

Jan 14, 2019

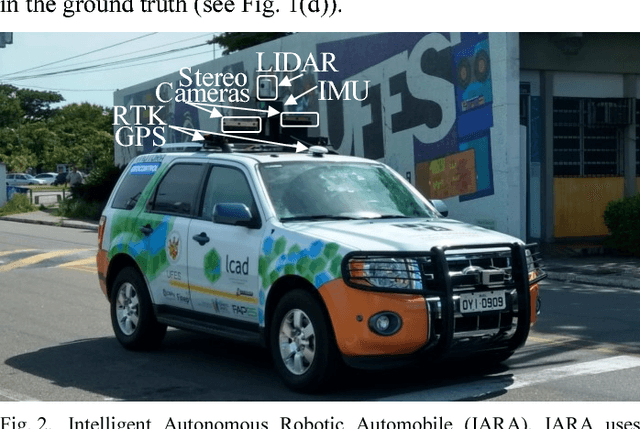

Abstract: We survey research on self-driving cars published in the literature, focusing on autonomous cars developed since the DARPA challenges that are equipped with an autonomy system that can be categorized as SAE level 3 or higher. The architecture of the autonomy system of self-driving cars is typically organized into the perception system and the decision-making system. The perception system is generally divided into many subsystems responsible for tasks such as self-driving-car localization, static obstacle mapping, moving obstacle detection and tracking, road mapping, and traffic signalization detection and recognition, among others. The decision-making system is likewise commonly partitioned into many subsystems responsible for tasks such as route planning, path planning, behavior selection, motion planning, and control. In this survey, we present the typical architecture of the autonomy system of self-driving cars. We also review research on relevant methods for perception and decision making. Furthermore, we present a detailed description of the architecture of the autonomy system of UFES's car, IARA. Finally, we list prominent autonomous research cars developed by technology companies and reported in the media.

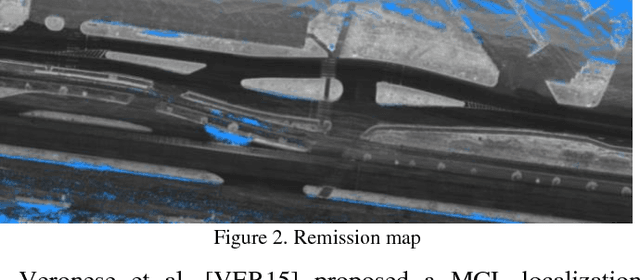

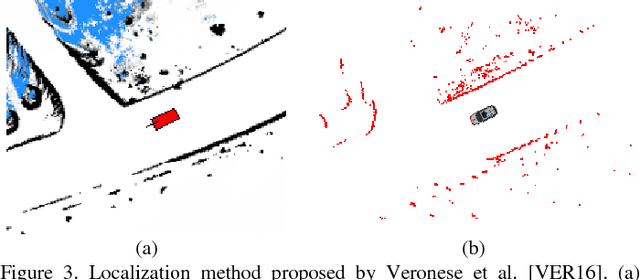

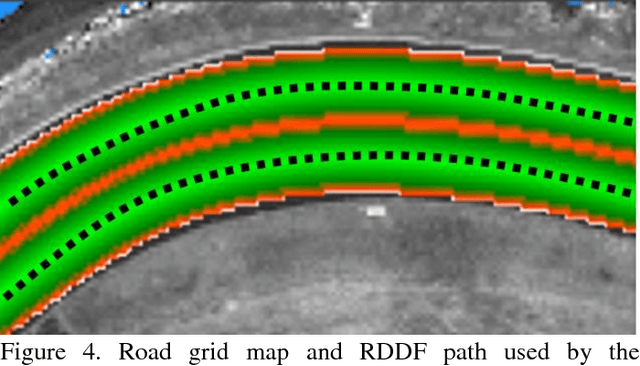

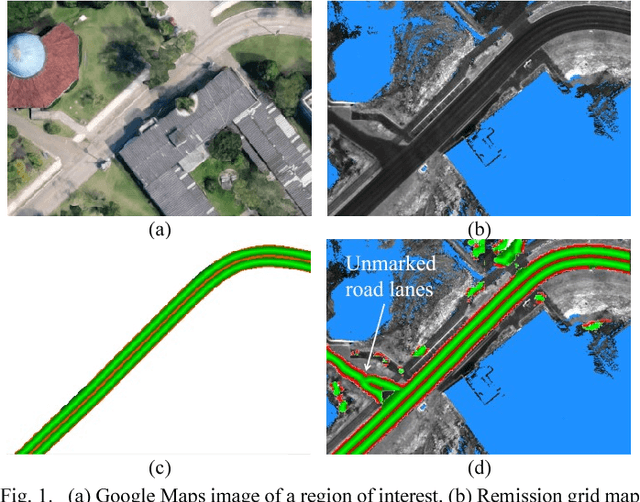

Mapping Road Lanes Using Laser Remission and Deep Neural Networks

Apr 27, 2018

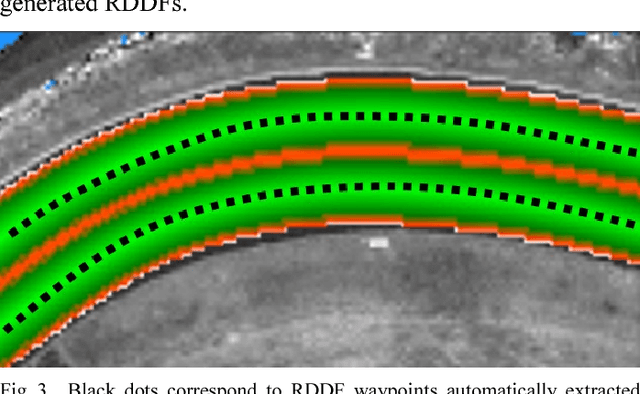

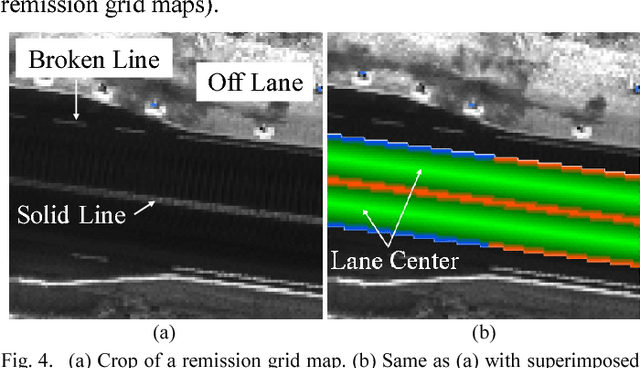

Abstract: We propose the use of deep neural networks (DNN) for solving the problem of inferring the position and relevant properties of lanes of urban roads with poor or absent horizontal signalization, in order to allow the operation of autonomous cars in such situations. We take a segmentation approach to the problem and use the Efficient Neural Network (ENet) DNN for segmenting LiDAR remission grid maps into road maps. We represent road maps using what we call road grid maps. Road grid maps are square matrices, and each element of these matrices represents a small square region of real-world space. The value of each element is a code associated with the semantics of the road map. Our road grid maps contain all information about the roads' lanes required for building the Road Definition Data Files (RDDFs) that are necessary for the operation of our autonomous car, IARA (Intelligent Autonomous Robotic Automobile). We have built a dataset of tens of kilometers of manually marked road lanes and used part of it to train ENet to segment road grid maps from remission grid maps. After being trained, ENet achieved an average segmentation accuracy of 83.7%. We have tested the use of inferred road grid maps in the real world using IARA on a 3.7 km stretch of urban roads, and it has shown performance equivalent to that of IARA's previous subsystem, which uses a manually generated RDDF.
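The road grid map representation described in the abstract (a square matrix whose elements encode the semantics of small square regions of the world) can be sketched as follows. This is only an illustration under assumed values: the class name `RoadGridMap`, the 0.25 m cell size, the map origin, and the codes `OFF_ROAD` and `LANE` are hypothetical and do not come from the paper, which does not list its actual codes or resolution here.

```python
import math

import numpy as np

OFF_ROAD = 0  # hypothetical semantic code: cell is not part of a lane
LANE = 1      # hypothetical semantic code: cell belongs to a lane


class RoadGridMap:
    """Square matrix of semantic codes; each cell covers a small square of the world."""

    def __init__(self, size_cells: int, resolution_m: float, origin_xy=(0.0, 0.0)):
        self.resolution_m = resolution_m  # side length of one cell, in meters
        self.origin_xy = origin_xy        # world coordinates of the map corner
        self.cells = np.full((size_cells, size_cells), OFF_ROAD, dtype=np.uint8)

    def world_to_cell(self, x: float, y: float):
        """Convert world coordinates (meters) to (row, col) matrix indices."""
        col = math.floor((x - self.origin_xy[0]) / self.resolution_m)
        row = math.floor((y - self.origin_xy[1]) / self.resolution_m)
        size = self.cells.shape[0]
        if not (0 <= row < size and 0 <= col < size):
            raise IndexError("point lies outside the grid map")
        return row, col

    def set_code(self, x: float, y: float, code: int) -> None:
        self.cells[self.world_to_cell(x, y)] = code

    def code_at(self, x: float, y: float) -> int:
        return int(self.cells[self.world_to_cell(x, y)])


# A 100 x 100 map with 0.25 m cells covers a 25 m x 25 m area.
grid = RoadGridMap(size_cells=100, resolution_m=0.25)
grid.set_code(1.1, 2.3, LANE)
```

Under this view, a segmentation network's job is to predict one such code per cell from the corresponding cell of a remission grid map with the same geometry.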
