Qinghua Zeng

Semi-Aerodynamic Model Aided Invariant Kalman Filtering for UAV Full-State Estimation

Oct 03, 2023

Abstract: Because of its state-trajectory-independent properties, invariant Kalman filtering (InEKF) has attracted widespread attention in the research community for its significantly improved state estimation accuracy and convergence under disturbance. In this paper, we formulate the full-source data fusion navigation problem for fixed-wing unmanned aerial vehicles (UAVs) within a framework based on error state right-invariant extended Kalman filtering (ES-RIEKF) on Lie groups. We merge measurements from a multi-rate onboard sensor network on UAVs to achieve real-time estimation of pose, air flow angles, and wind speed. Detailed derivations are provided, and the algorithm's convergence and accuracy improvements over established methods such as the Error State EKF (ES-EKF) and the Nonlinear Complementary Filter (NCF) are demonstrated using real-flight data from UAVs. Additionally, we introduce a semi-aerodynamic model fusion framework that relies solely on ground-measurable parameters. We design and train a Long Short-Term Memory (LSTM) deep network to achieve drift-free prediction of the UAV's angle of attack (AOA) and side-slip angle (SA) using easily obtainable onboard data such as control surface deflections, thereby significantly reducing dependency on GNSS or complicated aerodynamic model parameters. Further, we validate the algorithm's robustness under GNSS-denied conditions, where flight data shows that the maximum positioning error stays within 30 meters over a 130-second denial period. To the best of our knowledge, this study is the first to apply ES-RIEKF to full-source navigation for fixed-wing UAVs, aiming to provide engineering references for designers. Our MATLAB/Simulink implementations will be open-sourced.

Small Object Detection via Coarse-to-fine Proposal Generation and Imitation Learning

Aug 18, 2023

Abstract: The past few years have witnessed the immense success of object detection, yet current state-of-the-art detectors still struggle with size-limited instances. Concretely, the well-known challenge of low overlaps between the priors and object regions leads to a constrained sample pool for optimization, and the paucity of discriminative information further aggravates the recognition. To alleviate these issues, we propose CFINet, a two-stage framework tailored for small object detection based on the Coarse-to-fine pipeline and Feature Imitation learning. First, we introduce Coarse-to-fine RPN (CRPN) to ensure sufficient and high-quality proposals for small objects through a dynamic anchor selection strategy and cascade regression. Then, we equip the conventional detection head with a Feature Imitation (FI) branch that, in an imitation manner, improves the region representations of the size-limited instances that perplex the model. Moreover, an auxiliary imitation loss following the supervised contrastive learning paradigm is devised to optimize this branch. When integrated with Faster RCNN, CFINet achieves state-of-the-art performance on the large-scale small object detection benchmarks SODA-D and SODA-A, underscoring its superiority over the baseline detector and other mainstream detection approaches.

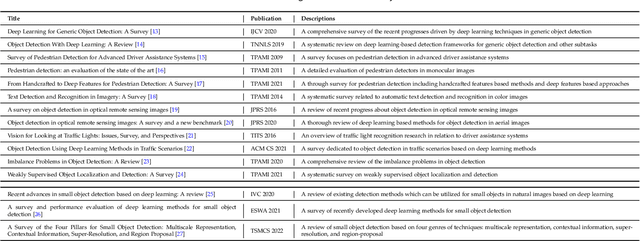

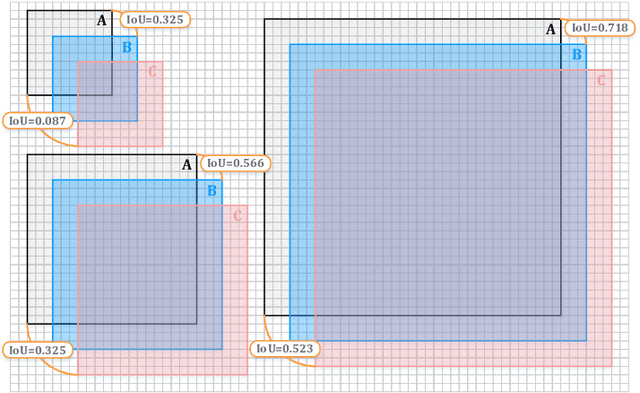

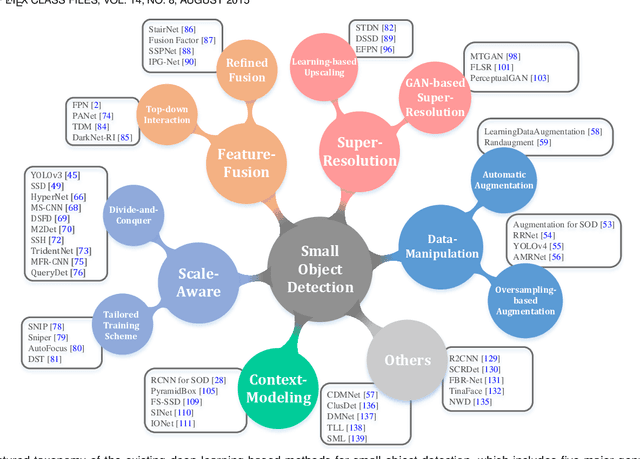

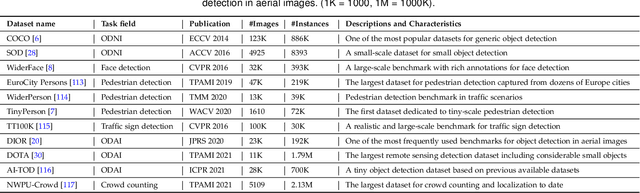

Towards Large-Scale Small Object Detection: Survey and Benchmarks

Jul 31, 2022

Abstract: With the rise of deep convolutional neural networks, object detection has achieved prominent advances in recent years. However, such prosperity cannot mask the unsatisfactory state of Small Object Detection (SOD), one of the notoriously challenging tasks in computer vision, owing to the poor visual appearance and noisy representation caused by the intrinsic structure of small targets. In addition, the lack of large-scale datasets for benchmarking small object detection methods remains a bottleneck. In this paper, we first conduct a thorough review of small object detection. Then, to catalyze the development of SOD, we construct two large-scale Small Object Detection dAtasets (SODA), SODA-D and SODA-A, which focus on the Driving and Aerial scenarios respectively. SODA-D includes 24704 high-quality traffic images and 277596 instances of 9 categories. For SODA-A, we harvest 2510 high-resolution aerial images and annotate 800203 instances over 9 classes. The proposed datasets, to our knowledge, are the first attempt at large-scale benchmarks with a vast collection of exhaustively annotated instances tailored for multi-category SOD. Finally, we evaluate the performance of mainstream methods on SODA. We expect that the released benchmarks will facilitate the development of SOD and spawn more breakthroughs in this field. Datasets and code will be available soon at: https://shaunyuan22.github.io/SODA.
