Qianli Hu

Hybrid Reinforcement Learning Framework for Mixed-Variable Problems

May 30, 2024Abstract:Optimization problems characterized by both discrete and continuous variables are common across various disciplines, presenting unique challenges due to their complex solution landscapes and the difficulty of navigating mixed-variable spaces effectively. To Address these challenges, we introduce a hybrid Reinforcement Learning (RL) framework that synergizes RL for discrete variable selection with Bayesian Optimization for continuous variable adjustment. This framework stands out by its strategic integration of RL and continuous optimization techniques, enabling it to dynamically adapt to the problem's mixed-variable nature. By employing RL for exploring discrete decision spaces and Bayesian Optimization to refine continuous parameters, our approach not only demonstrates flexibility but also enhances optimization performance. Our experiments on synthetic functions and real-world machine learning hyperparameter tuning tasks reveal that our method consistently outperforms traditional RL, random search, and standalone Bayesian optimization in terms of effectiveness and efficiency.

A cost-reducing partial labeling estimator in text classification problem

Jun 10, 2019

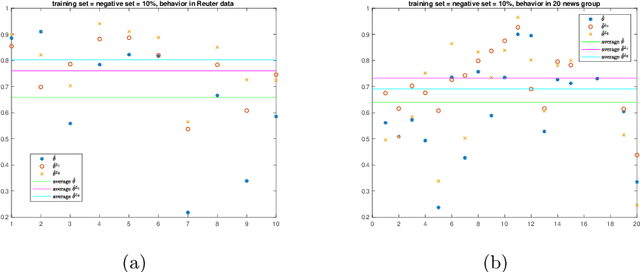

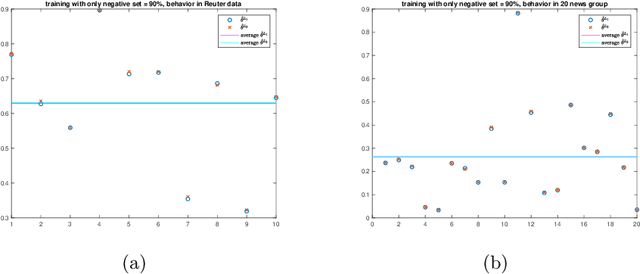

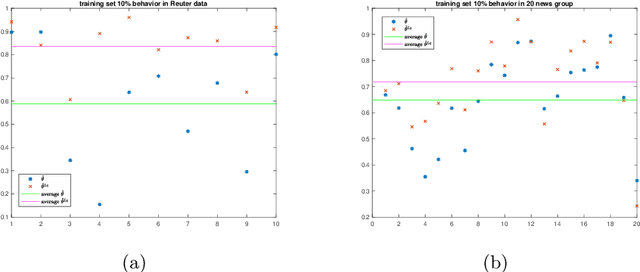

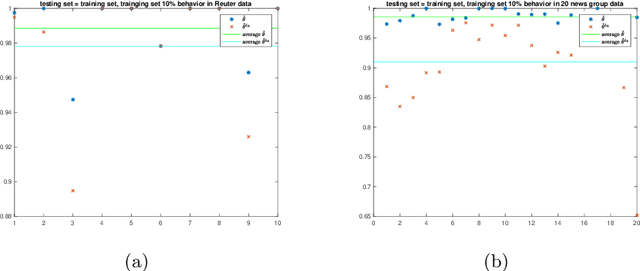

Abstract:We propose a new approach to address the text classification problems when learning with partial labels is beneficial. Instead of offering each training sample a set of candidate labels, we assign negative-oriented labels to the ambiguous training examples if they are unlikely fall into certain classes. We construct our new maximum likelihood estimators with self-correction property, and prove that under some conditions, our estimators converge faster. Also we discuss the advantages of applying one of our estimator to a fully supervised learning problem. The proposed method has potential applicability in many areas, such as crowdsourcing, natural language processing and medical image analysis.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge