Qi Kuang

Variance Control for Distributional Reinforcement Learning

Jul 30, 2023

Abstract: Although distributional reinforcement learning (DRL) has been widely examined in the past few years, very few studies investigate the validity of the obtained Q-function estimator in the distributional setting. To fully understand how the approximation errors of the Q-function affect the whole training process, we perform an error analysis and show theoretically how to reduce both the bias and the variance of the error terms. With this new understanding, we construct a new estimator, Quantiled Expansion Mean (QEM), and introduce a new DRL algorithm (QEMRL) from the statistical perspective. We extensively evaluate our QEMRL algorithm on a variety of Atari and MuJoCo benchmark tasks and demonstrate that QEMRL achieves significant improvement over baseline algorithms in terms of sample efficiency and convergence performance.

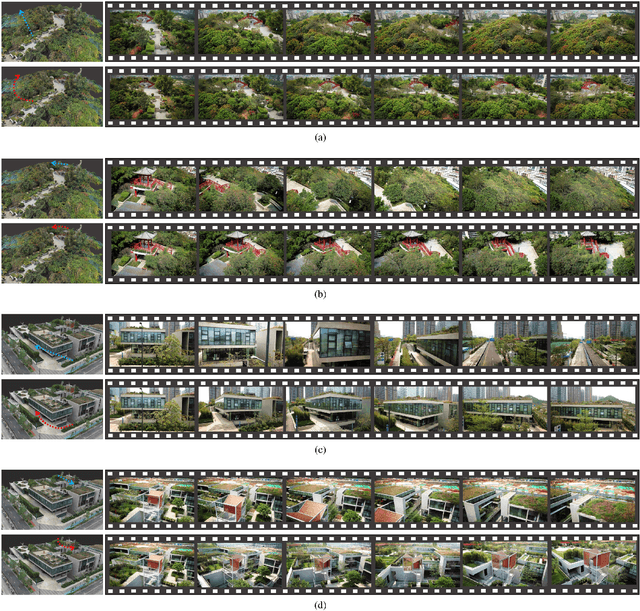

Aesthetics Driven Autonomous Time-Lapse Photography Generation by Virtual and Real Robots

Aug 22, 2022

Abstract: Time-lapse photography is employed in movies and promotional films because it can convey the passage of time within a short duration and strengthen visual appeal. However, because it takes a long time and requires stable shooting, it is a great challenge for the photographer. In this article, we propose a time-lapse photography system with virtual and real robots. To help users shoot time-lapse videos efficiently, we first parameterize time-lapse photography and propose a parameter optimization method. For different parameters, different aesthetic models, including image and video aesthetic quality assessment networks, are used to generate optimal parameters. Then we propose a time-lapse photography interface that lets users view and adjust parameters and use virtual robots to conduct virtual photography in a three-dimensional scene. The system can also export the parameters and provide them to real robots so that the time-lapse videos can be filmed in the real world. In addition, we propose a time-lapse photography aesthetic assessment method that can automatically evaluate the aesthetic quality of a time-lapse video. The experimental results show that our method can efficiently obtain time-lapse videos. We also conduct a user study. The results show that our system achieves results similar to those of professional photographers and is more efficient.

Non-decreasing Quantile Function Network with Efficient Exploration for Distributional Reinforcement Learning

May 14, 2021

Abstract: Although distributional reinforcement learning (DRL) has been widely examined in the past few years, there are two open questions people are still trying to address. One is how to ensure the validity of the learned quantile function; the other is how to efficiently utilize the distribution information. This paper attempts to provide some new perspectives to encourage future in-depth studies in these two fields. We first propose a non-decreasing quantile function network (NDQFN) to guarantee the monotonicity of the obtained quantile estimates and then design a general exploration framework called distributional prediction error (DPE) for DRL, which utilizes the entire distribution of the quantile function. In this paper, we not only discuss the theoretical necessity of our method but also show the performance gain it achieves in practice by comparing it with several competitors on Atari 2600 games, especially on some hard-exploration games.
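
The monotonicity requirement mentioned in the abstract above can be illustrated with a small sketch. This is not the NDQFN architecture from the paper; it only shows one generic way to make a sequence of quantile estimates non-decreasing by construction: start from a base value and add strictly positive increments. The function names `softplus` and `monotone_quantiles`, and the choice of softplus to produce positive increments, are assumptions made for illustration.

```python
import math
from typing import List


def softplus(x: float) -> float:
    # Numerically stable softplus, log(1 + exp(x)); the result is always > 0.
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def monotone_quantiles(base: float, raw_increments: List[float]) -> List[float]:
    # Hypothetical helper: turn unconstrained outputs (e.g. from a network head)
    # into quantile estimates that increase with the quantile level.
    quantiles = [base]
    for raw in raw_increments:
        # Each step adds a positive amount, so the sequence cannot decrease.
        quantiles.append(quantiles[-1] + softplus(raw))
    return quantiles


# Unconstrained example outputs; some are negative on purpose.
raw_outputs = [-2.0, 0.5, -0.1, 3.0]
quantiles = monotone_quantiles(-1.0, raw_outputs)
print(quantiles)
```

Because every increment is positive regardless of the sign of the raw output, the quantile crossing problem cannot occur in this construction, at the cost of constraining how the estimates are parameterized.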

Deep Multimodality Learning for UAV Video Aesthetic Quality Assessment

Nov 04, 2020

Abstract: Despite the growing number of unmanned aerial vehicles (UAVs) and aerial videos, there is a paucity of studies focusing on the aesthetics of aerial videos that can provide valuable information for improving the aesthetic quality of aerial photography. In this article, we present a method of deep multimodality learning for UAV video aesthetic quality assessment. More specifically, a multistream framework is designed to exploit aesthetic attributes from multiple modalities, including spatial appearance, drone camera motion, and scene structure. A novel, specially designed motion stream network is proposed for this new multistream framework. We construct a dataset with 6,000 UAV video shots captured by drone cameras. Our model can judge whether a UAV video was shot by professional photographers or amateurs and can also classify the scene type. The experimental results reveal that our method outperforms video classification methods and traditional SVM-based methods for video aesthetics. In addition, we present three application examples using the proposed method: UAV video grading, professional segment detection, and aesthetic-based UAV path planning.
