Pravin Nagar

Making Third Person Techniques Recognize First-Person Actions in Egocentric Videos

Oct 17, 2019

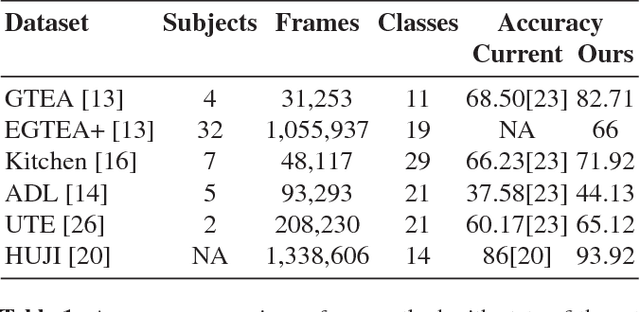

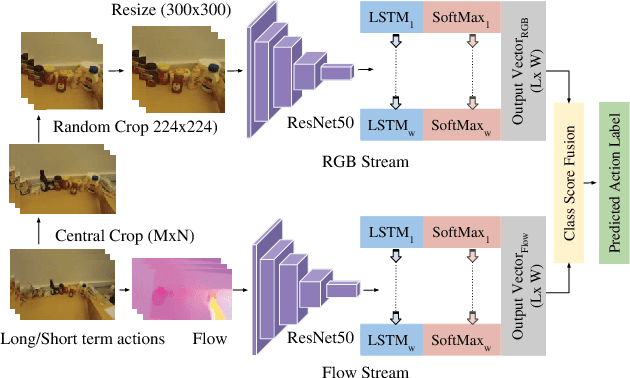

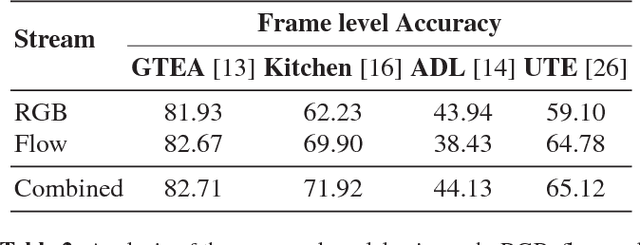

Abstract: We focus on first-person action recognition from egocentric videos. Unlike in the third-person domain, researchers have divided first-person actions into two categories, those involving hand-object interactions and those without, and have developed separate techniques for each category. Further, it has been argued that the traditional cues used for third-person action recognition do not suffice, and egocentric-specific features, such as head motion and handled objects, have been used for such actions. Unlike the state-of-the-art approaches, we show that a regular two-stream Convolutional Neural Network (CNN) with a Long Short-Term Memory (LSTM) architecture, having separate streams for objects and motion, can generalize to all categories of first-person actions. The proposed approach unifies the features learned across all action categories, making the architecture much more practical. As an important observation, we note that objects visible in egocentric videos appear much smaller than objects in ImageNet. We show that the performance of the proposed model improves after cropping and resizing frames to make the size of the objects comparable to the size of ImageNet's objects. Our experiments on the standard datasets GTEA, EGTEA Gaze+, HUJI, ADL, UTE, and Kitchen show that our model significantly outperforms various state-of-the-art techniques.
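The crop-and-resize preprocessing mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not state the crop region, crop ratio, interpolation method, or target resolution, so the center crop, the `crop_fraction` parameter, the nearest-neighbor sampling, and the 224-pixel output below are all assumptions chosen for illustration, and `center_crop_and_resize` is a hypothetical helper name, not the authors' implementation.

```python
import numpy as np


def center_crop_and_resize(frame, crop_fraction=0.5, out_size=224):
    """Crop the central region of a frame and resize it to out_size x out_size.

    Assumptions (not taken from the paper): the crop is centered,
    crop_fraction is the kept fraction of each side, and resizing uses
    nearest-neighbor sampling to avoid extra dependencies.
    """
    if not 0.0 < crop_fraction <= 1.0:
        raise ValueError("crop_fraction must be in (0, 1]")

    height, width = frame.shape[:2]
    crop_h = max(1, int(round(height * crop_fraction)))
    crop_w = max(1, int(round(width * crop_fraction)))
    top = (height - crop_h) // 2
    left = (width - crop_w) // 2
    crop = frame[top:top + crop_h, left:left + crop_w]

    # Map each output pixel to its nearest source pixel in the crop.
    rows = np.arange(out_size) * crop_h // out_size
    cols = np.arange(out_size) * crop_w // out_size
    return crop[rows[:, None], cols]


# Usage: a 480x640 RGB frame becomes a 224x224 input in which objects
# near the center appear roughly twice as large as in the original.
frame = np.zeros((480, 640, 3), dtype=np.uint8)
resized = center_crop_and_resize(frame, crop_fraction=0.5, out_size=224)
```

Cropping before resizing enlarges the objects relative to the frame, which is the effect the abstract attributes the improvement to. A production pipeline would more likely use bilinear interpolation from an image library.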

U-SegNet: Fully Convolutional Neural Network based Automated Brain tissue segmentation Tool

Jun 12, 2018

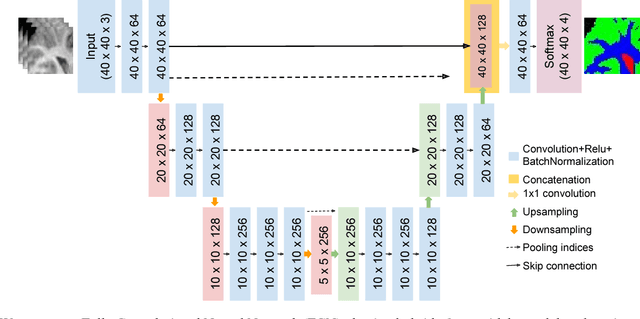

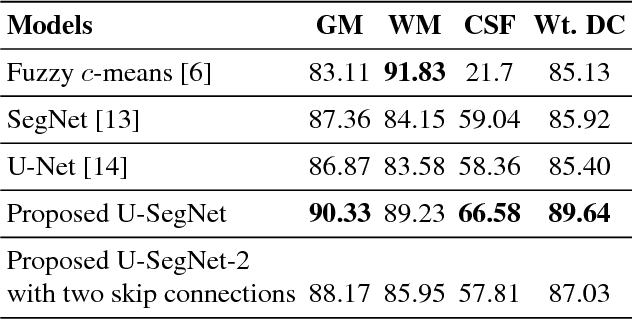

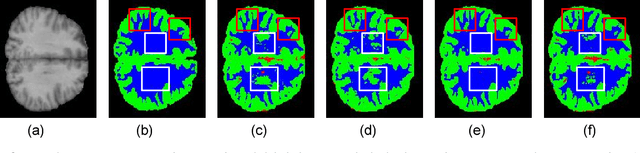

Abstract: Automated segmentation of brain tissue into white matter (WM), gray matter (GM), and cerebrospinal fluid (CSF) from magnetic resonance images (MRI) is helpful in the diagnosis of neurological disorders such as epilepsy, Alzheimer's disease, and multiple sclerosis. However, thin GM structures at the periphery of the cortex and smooth transitions at tissue boundaries, such as between GM and WM or between WM and CSF, make it difficult to build a reliable segmentation tool. This paper proposes a Fully Convolutional Neural Network (FCN) tool that is a hybrid of two widely used deep learning segmentation architectures, SegNet and U-Net, for improved brain tissue segmentation. We propose a skip connection, inspired by U-Net, in the SegNet architecture to incorporate fine multiscale information for better tissue boundary identification. We show that the proposed U-SegNet architecture improves segmentation performance, as measured by average Dice ratio, to 89.74% on the widely used IBSR dataset, which consists of T1-weighted MRI volumes of 18 subjects.
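For readers unfamiliar with the metric, the average Dice ratio reported above can be sketched as follows. The standard definition for one tissue class is 2|P ∩ T| / (|P| + |T|), where P and T are the predicted and ground-truth voxel sets. The integer label encoding (1 = CSF, 2 = GM, 3 = WM), the function names, and the convention of returning 1.0 when a class is absent from both maps are assumptions made here for illustration; the abstract does not specify how the authors average across classes or subjects.

```python
import numpy as np


def dice_ratio(pred, truth, label):
    """Dice ratio for one class: 2 * |P and T| / (|P| + |T|)."""
    pred_mask = pred == label
    truth_mask = truth == label
    denom = pred_mask.sum() + truth_mask.sum()
    if denom == 0:
        # Assumed convention: a class absent from both maps counts as a perfect match.
        return 1.0
    overlap = np.logical_and(pred_mask, truth_mask).sum()
    return 2.0 * overlap / denom


def average_dice(pred, truth, labels):
    """Unweighted mean of the per-class Dice ratios."""
    return float(np.mean([dice_ratio(pred, truth, label) for label in labels]))


# Toy 2x2 label maps with assumed labels 1 = CSF, 2 = GM, 3 = WM.
pred = np.array([[1, 1], [2, 3]])
truth = np.array([[1, 2], [2, 3]])
score = average_dice(pred, truth, labels=[1, 2, 3])
```

In the toy example one voxel is mislabeled, so classes 1 and 2 each score 2/3 while class 3 scores 1, giving an average of 7/9.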
