Pravakar Roy

Apple Counting using Convolutional Neural Networks

Aug 24, 2022

Abstract: Estimating accurate and reliable fruit and vegetable counts from images in real-world settings, such as orchards, is a challenging problem that has received significant recent attention. Estimating fruit counts before harvest provides useful information for logistics planning. While considerable progress has been made toward fruit detection, estimating the actual counts remains challenging. In practice, fruits are often clustered together. Therefore, methods that only detect fruits fail to offer general solutions to estimate accurate fruit counts. Furthermore, in horticultural studies, rather than a single yield estimate, finer information such as the distribution of the number of apples per cluster is desirable. In this work, we formulate fruit counting from images as a multi-class classification problem and solve it by training a Convolutional Neural Network. We first evaluate the per-image accuracy of our method and compare it with a state-of-the-art method based on Gaussian Mixture Models over four test datasets. Even though the parameters of the Gaussian Mixture Model-based method are specifically tuned for each dataset, our network outperforms it in three out of four datasets with a maximum of 94% accuracy. Next, we use the method to estimate the yield for two datasets for which we have ground truth. Our method achieved 96-97% accuracy. For additional details please see our video here: https://www.youtube.com/watch?v=Le0mb5P-SYc.
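The counting-as-classification idea can be illustrated with a minimal sketch. It assumes (this is not stated in the abstract) that the network assigns each image a class label k meaning "k apples in this image", so that a total count is the sum of the predicted labels and the per-cluster distribution is a histogram of them. The function names `aggregate_counts` and `per_image_accuracy` are hypothetical, and the classifier itself is not shown.

```python
from collections import Counter


def aggregate_counts(predicted_classes):
    """Turn per-image class labels into a total count and a histogram.

    Assumption: label k means "k apples in this image".
    """
    total = sum(predicted_classes)
    distribution = dict(Counter(predicted_classes))
    return total, distribution


def per_image_accuracy(predicted, ground_truth):
    """Fraction of images whose predicted class equals the true class."""
    if len(predicted) != len(ground_truth):
        raise ValueError("predicted and ground_truth must have equal length")
    if not predicted:
        raise ValueError("need at least one image")
    correct = sum(p == g for p, g in zip(predicted, ground_truth))
    return correct / len(predicted)


if __name__ == "__main__":
    # Made-up labels for five images, for illustration only.
    labels = [1, 2, 0, 3, 2]
    print(aggregate_counts(labels))
    print(per_image_accuracy(labels, [1, 2, 1, 3, 2]))
```

Under this assumption, the same predictions give both the yield estimate and the finer per-cluster distribution mentioned in the abstract.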

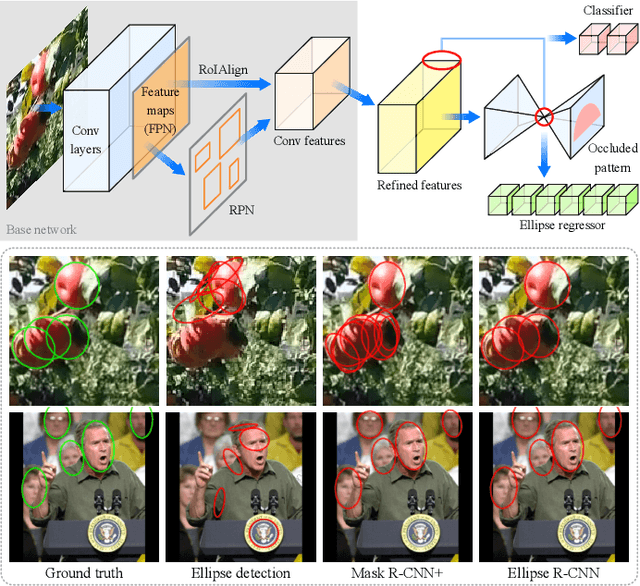

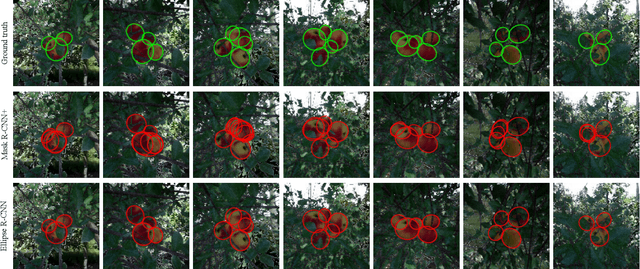

Ellipse R-CNN: Learning to Infer Elliptical Object from Clustering and Occlusion

Jan 30, 2020

Abstract: Images of heavily occluded objects in cluttered scenes, such as fruit clusters in trees, are hard to segment. To further retrieve the 3D size and 6D pose of each individual object in such cases, bounding boxes are not reliable from multiple views since only a small portion of the object's geometry is captured. We introduce the first CNN-based ellipse detector, called Ellipse R-CNN, to represent and infer occluded objects as ellipses. We first propose a robust and compact ellipse regression based on the Mask R-CNN architecture for elliptical object detection. Our method can infer the parameters of multiple elliptical objects even when they are occluded by other neighboring objects. For better occlusion handling, we exploit refined feature regions for the regression stage, and integrate the U-Net structure for learning different occlusion patterns to compute the final detection score. The correctness of ellipse regression is validated through experiments performed on synthetic data of clustered ellipses. We further quantitatively and qualitatively demonstrate that our approach outperforms the state-of-the-art model (i.e., Mask R-CNN followed by ellipse fitting) and its three variants on both synthetic and real datasets of occluded and clustered elliptical objects.
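To make "representing an object as an ellipse" concrete, here is a minimal geometric sketch. It assumes the common five-parameter form (center, two semi-axes, rotation angle); the abstract does not say which parameterization Ellipse R-CNN regresses, so the `Ellipse` class and its fields are hypothetical and only illustrate the representation, not the detector.

```python
import math
from dataclasses import dataclass


@dataclass
class Ellipse:
    """Five-parameter ellipse (assumed form, not taken from the paper)."""
    cx: float     # center x
    cy: float     # center y
    a: float      # semi-axis along the ellipse's own x direction
    b: float      # semi-axis along the ellipse's own y direction
    theta: float  # rotation in radians, counter-clockwise

    def contains(self, x: float, y: float) -> bool:
        """Return True if the point (x, y) lies inside or on the ellipse."""
        dx, dy = x - self.cx, y - self.cy
        c, s = math.cos(self.theta), math.sin(self.theta)
        # Rotate the point into the ellipse's axis-aligned frame.
        u = dx * c + dy * s
        v = -dx * s + dy * c
        return (u / self.a) ** 2 + (v / self.b) ** 2 <= 1.0

    def area(self) -> float:
        """Area of the full ellipse, including any occluded part."""
        return math.pi * self.a * self.b
```

Unlike a pixel mask, such a representation describes the whole object even when part of it is hidden, which is why it suits occluded fruit.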

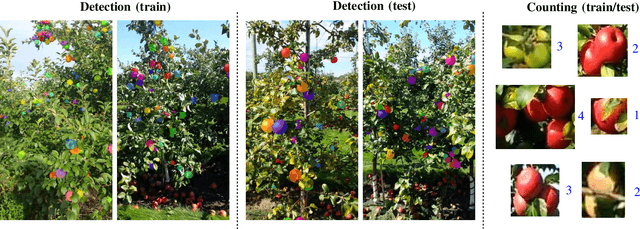

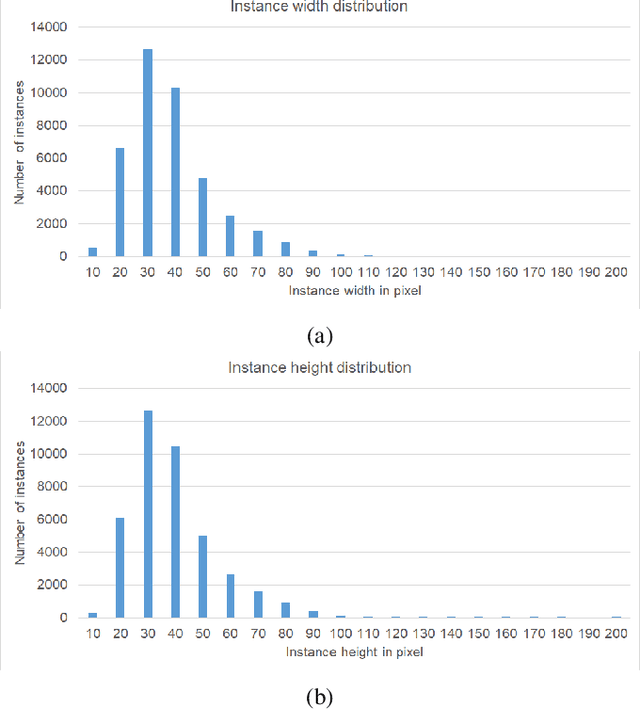

MinneApple: A Benchmark Dataset for Apple Detection and Segmentation

Sep 13, 2019

Abstract: In this work, we present a new dataset to advance the state-of-the-art in fruit detection, segmentation, and counting in orchard environments. While there has been significant recent interest in solving these problems, the lack of a unified dataset has made it difficult to compare results. We hope to enable direct comparisons by providing a large variety of high-resolution images acquired in orchards, together with human annotations of the fruit on trees. The fruits are labeled using polygonal masks for each object instance to aid in precise object detection, localization, and segmentation. Additionally, we provide data for patch-based counting of clustered fruits. Our dataset contains over 41,000 annotated object instances in 1000 images. We present a detailed overview of the dataset together with baseline performance analysis for bounding box detection, segmentation, and fruit counting as well as representative results for yield estimation. We make this dataset publicly available and host a CodaLab challenge to encourage comparison of results on a common dataset. To download the data and learn more about MinneApple please see the project website: http://rsn.cs.umn.edu/index.php/MinneApple. Up-to-date information is available online.

Semantics-Aware Image to Image Translation and Domain Transfer

Apr 03, 2019

Abstract: Image-to-image translation is the problem of transferring an image from a source domain to a target domain. We present a new method to transfer the underlying semantics of an image even when there are geometric changes across the two domains. Specifically, we present a Generative Adversarial Network (GAN) that can transfer semantic information presented as segmentation masks. Our main technical contribution is an encoder-decoder based generator architecture that jointly encodes the image and its underlying semantics and translates both simultaneously to the target domain. Additionally, we propose object transfiguration and cross-domain semantic consistency losses that preserve the underlying semantic label maps. We demonstrate the effectiveness of our approach in multiple object transfiguration and domain transfer tasks through qualitative and quantitative experiments. The results show that our method is better at transferring image semantics than state-of-the-art image-to-image translation methods.

A Comparative Study of Fruit Detection and Counting Methods for Yield Mapping in Apple Orchards

Mar 06, 2019

Abstract: We present new methods for apple detection and counting based on recent deep learning approaches and compare them with state-of-the-art results based on classical methods. Our goal is to quantify performance improvements by neural network-based methods compared to methods based on classical approaches. Additionally, we introduce a complete system for counting apples in an entire row. This task is challenging as it requires tracking fruits in images from both sides of the row. We evaluate the performance of three fruit detection methods and two fruit counting methods on six datasets. Results indicate that the classical detection approach still outperforms the deep learning-based methods in the majority of the datasets. For fruit counting, though, the deep learning-based approach performs better for all of the datasets. Combining the classical detection method with the neural network-based counting approach, we achieve remarkable yield accuracies ranging from 95.56% to 97.83%.

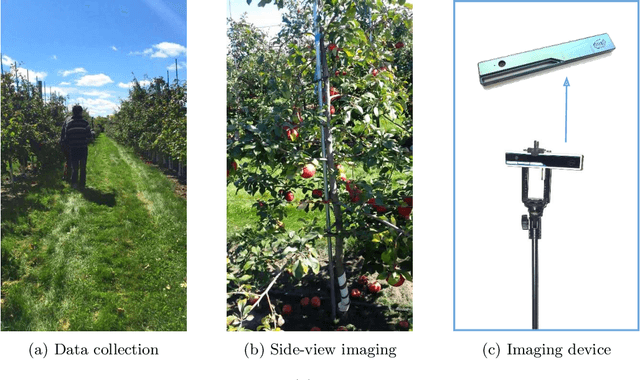

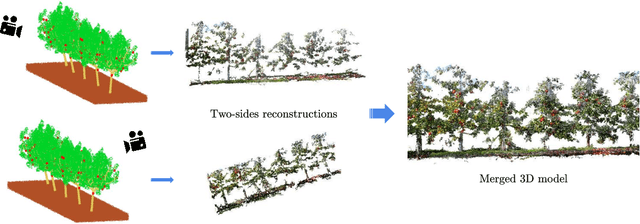

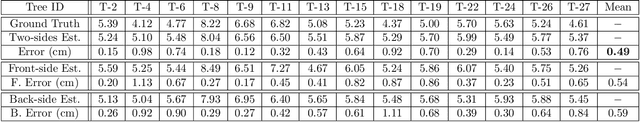

Semantic Mapping for Orchard Environments by Merging Two-Sides Reconstructions of Tree Rows

Jan 11, 2019

Abstract: Measuring semantic traits for phenotyping is an essential but labor-intensive activity in horticulture. Researchers often rely on manual measurements which may not be accurate for tasks such as measuring tree volume. To improve the accuracy of such measurements and to automate the process, we consider the problem of building coherent three-dimensional (3D) reconstructions of orchard rows. Even though 3D reconstructions of side views can be obtained using standard mapping techniques, merging the two side views is difficult due to the lack of overlap between the two partial reconstructions. Our first main contribution in this paper is a novel method that utilizes global features and semantic information to obtain an initial solution aligning the two sides. Our mapping approach then refines the 3D model of the entire tree row by integrating semantic information that is common to both sides and extracted using our novel robust detection and fitting algorithms. Next, we present a vision system to measure semantic traits from the optimized 3D model that is built from the RGB or RGB-D data captured using only a camera. Specifically, we show how canopy volume, trunk diameter, tree height and fruit count can be automatically obtained in real orchard environments. The experimental results from multiple datasets quantitatively demonstrate the high accuracy and robustness of our method.

Vision-Based Preharvest Yield Mapping for Apple Orchards

Aug 13, 2018

Abstract: We present an end-to-end computer vision system for mapping yield in an apple orchard using images captured from a single camera. Our proposed system is platform independent and does not require any specific lighting conditions. Our main technical contributions are 1) a semi-supervised clustering algorithm that utilizes colors to identify apples and 2) an unsupervised clustering method that utilizes spatial properties to estimate fruit counts from apple clusters having arbitrarily complex geometry. Additionally, we utilize camera motion to merge the counts across multiple views. We verified the performance of our algorithms by conducting multiple field trials on three tree rows consisting of 252 trees at the University of Minnesota Horticultural Research Center. Results indicate that the detection method achieves an F1-measure of 0.95-0.97 for multiple color varieties and lighting conditions. The counting method achieves an accuracy of 89%-98%. Additionally, we report merged fruit counts from both sides of the tree rows. Our yield estimation method achieves an overall accuracy of 91.98%-94.81% across different datasets.
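For readers unfamiliar with the reported metrics, the following sketch shows how an F1-measure is computed from detection outcomes. The count-accuracy formula is an assumption: the abstract does not define how accuracy is measured, so `count_accuracy` uses one common choice (one minus the relative error). Both function names are hypothetical.

```python
def f1_measure(true_pos, false_pos, false_neg):
    """Standard F1: harmonic mean of precision and recall."""
    denom = 2 * true_pos + false_pos + false_neg
    if denom == 0:
        raise ValueError("F1 is undefined when there are no detections or objects")
    return 2 * true_pos / denom


def count_accuracy(estimated, actual):
    """Assumed definition: 1 - |estimated - actual| / actual."""
    if actual <= 0:
        raise ValueError("actual count must be positive")
    return 1.0 - abs(estimated - actual) / actual


if __name__ == "__main__":
    # Made-up numbers, for illustration only.
    print(f1_measure(true_pos=95, false_pos=5, false_neg=5))
    print(count_accuracy(estimated=94, actual=100))
```

Under this assumed definition, over- and under-counting by the same amount are penalized equally.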
