Semantic Mapping for Orchard Environments by Merging Two-Sides Reconstructions of Tree Rows


Jan 11, 2019

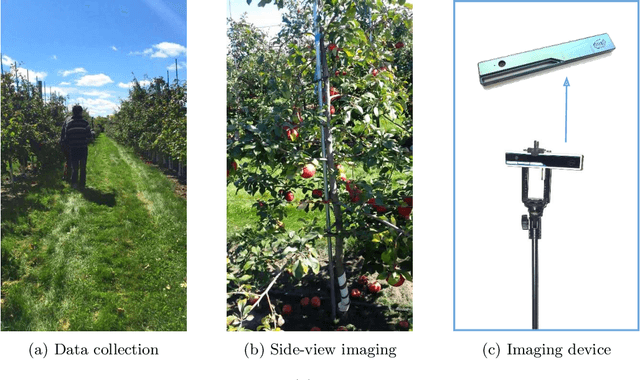

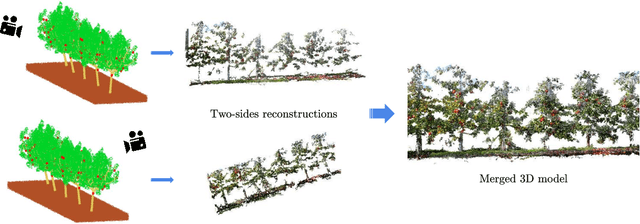

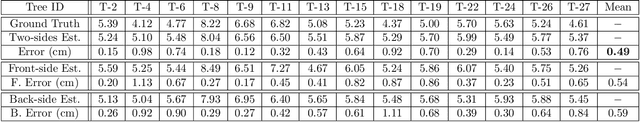

Measuring semantic traits for phenotyping is an essential but labor-intensive activity in horticulture. Researchers often rely on manual measurements, which may not be accurate for tasks such as measuring tree volume. To improve the accuracy of such measurements and to automate the process, we consider the problem of building coherent three-dimensional (3D) reconstructions of orchard rows. Although 3D reconstructions of each side view can be obtained using standard mapping techniques, merging the two side views is difficult because of the lack of overlap between the two partial reconstructions. Our first main contribution is a novel method that uses global features and semantic information to obtain an initial solution aligning the two sides. Our mapping approach then refines the 3D model of the entire tree row by integrating semantic information common to both sides, extracted using our novel robust detection and fitting algorithms. Next, we present a vision system that measures semantic traits from the optimized 3D model, which is built from RGB or RGB-D data captured using only a camera. Specifically, we show how canopy volume, trunk diameter, tree height, and fruit count can be obtained automatically in real orchard environments. Experimental results from multiple datasets quantitatively demonstrate the high accuracy and robustness of our method.
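As a rough illustration of how traits such as canopy volume and tree height could be read off a merged 3D model, the sketch below uses simple voxel occupancy on a point cloud. This is not the method described in the paper, whose details are not given here. The function names, the `voxel_size` parameter, and the assumption that the z axis points up with a known ground height are all hypothetical choices made for the example.

```python
import math
from typing import Iterable, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in meters; z assumed to point up


def canopy_volume_voxels(points: Iterable[Point], voxel_size: float = 0.1) -> float:
    """Approximate volume as (number of occupied voxels) * (voxel volume).

    Hypothetical helper: a coarse occupancy estimate, not the paper's algorithm.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    # Map each point to the integer index of the voxel that contains it.
    occupied = {
        (
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        )
        for x, y, z in points
    }
    return len(occupied) * voxel_size ** 3


def tree_height(points: Iterable[Point], ground_z: float = 0.0) -> float:
    """Height of the highest point above an assumed, already known ground level."""
    return max(z for _, _, z in points) - ground_z


# Tiny synthetic cloud: two points share a voxel, two fall in other voxels.
cloud = [
    (0.05, 0.05, 0.05),
    (0.06, 0.04, 0.07),
    (0.15, 0.05, 0.05),
    (0.05, 0.05, 1.95),
]
volume = canopy_volume_voxels(cloud, voxel_size=0.1)
height = tree_height(cloud, ground_z=0.0)
```

The estimate depends strongly on `voxel_size`: a coarse grid overestimates the volume of a sparse canopy, while a very fine grid undercounts wherever the reconstruction has gaps.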
