Philippe Zanne

ICube

Spatiotemporal modeling of grip forces captures proficiency in manual robot control

Mar 03, 2023

Abstract: This paper builds on our previous work by exploiting Artificial Intelligence to predict individual grip force variability in manual robot control. Grip forces were recorded from various loci in the dominant and non-dominant hands of individuals by means of wearable wireless sensor technology. Statistical analyses bring to the fore skill-specific temporal variations in thousands of grip forces of a complete novice and a highly proficient expert in manual robot control. A brain-inspired neural network model that uses the output metric of a Self-Organizing Map with unsupervised winner-take-all learning was run on the sensor output from both hands of each user. The neural network metric expresses the difference between an input representation and its model representation at any given moment in time t, and reliably captures the differences between novice and expert performance in terms of grip force variability. Functionally motivated spatiotemporal analysis of individual average grip forces, computed for time windows of constant size in the output of a restricted number of task-relevant sensors in the dominant (preferred) hand, reveals finger-specific synergies reflecting robotic task skill. The analyses pave the way towards real-time grip force monitoring, which would permit tracking the evolution of task skill in trainees or identifying individual proficiency levels in human-robot interaction in environmental contexts of high sensory uncertainty. Parsimonious Artificial Intelligence (AI) assistance will contribute to the outcome of new types of surgery, in particular single-port approaches such as NOTES (Natural Orifice Transluminal Endoscopic Surgery) and SILS (Single Incision Laparoscopic Surgery).
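The metric described above, the distance between an input and its model representation at time t, is of the kind usually called the SOM quantization error (QE). The sketch below is a minimal, hypothetical illustration in pure Python, assuming each input is a vector of simultaneous grip force readings from several sensors. The map size, learning rate, epoch count and the names `train_som` and `quantization_error` are illustrative assumptions, not the study's model or parameters; the neighbourhood update of a full SOM is omitted to keep only the winner-take-all step.

```python
import math
import random
from typing import List

Vector = List[float]


def euclidean(a: Vector, b: Vector) -> float:
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def best_matching_unit(prototypes: List[Vector], x: Vector) -> int:
    """Index of the prototype closest to x (the 'winner')."""
    return min(range(len(prototypes)), key=lambda i: euclidean(prototypes[i], x))


def train_som(data: List[Vector], n_units: int = 4, epochs: int = 20,
              lr: float = 0.5, seed: int = 0) -> List[Vector]:
    """Hypothetical sketch of unsupervised winner-take-all training.

    Only the winning unit moves toward each input; a full SOM would
    also update the winner's grid neighbours, which is omitted here.
    """
    rng = random.Random(seed)
    # Initialise prototypes from randomly chosen input samples.
    prototypes = [list(v) for v in rng.sample(data, n_units)]
    for epoch in range(epochs):
        rate = lr * (1.0 - epoch / epochs)  # linearly decaying learning rate
        for x in rng.sample(data, len(data)):  # one shuffled pass over the data
            w = prototypes[best_matching_unit(prototypes, x)]
            for d in range(len(w)):
                w[d] += rate * (x[d] - w[d])
    return prototypes


def quantization_error(prototypes: List[Vector], x: Vector) -> float:
    """QE at one time step: distance from input x to its winner's prototype."""
    return euclidean(prototypes[best_matching_unit(prototypes, x)], x)
```

Evaluated sample by sample on a sensor stream, the QE values form a time series whose variability could then be compared between users.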

Data Stream Stabilization for Optical Coherence Tomography Volumetric Scanning

Dec 02, 2021

Abstract: Optical Coherence Tomography (OCT) is an emerging medical imaging modality for luminal organ diagnosis. The non-constant rotation speed of the optical components in the OCT catheter tip causes rotational distortion in OCT volumetric scanning. Improving the scanning process can partially reduce this instability. To further correct the rotational distortion in the OCT image, a volumetric data stabilization algorithm is proposed. The algorithm first estimates the Non-Uniform Rotational Distortion (NURD) for each B-scan using a Convolutional Neural Network (CNN). A correlation map between two successive B-scans is computed and provided as input to the CNN. To solve the problem of cumulative error in iterative frame stream processing, we deploy an overall rotation estimation between the reference orientation and the actual OCT image orientation. We train the network with synthetic OCT videos, generated by intentionally adding rotational distortion to real OCT images. In this article, we discuss the proposed method in two different scanning modes: the first is a conventional pullback mode, in which the optical components move along the protection sheath, and the second is a self-designed scanning mode, in which the catheter is globally translated by an external actuator. The efficiency and robustness of the proposed method are evaluated with synthetic scans as well as real scans in both scanning modes.

* 11 pages, 5 figures
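As a much simpler, classical stand-in for the CNN-based estimator (explicitly not the paper's method), the rotational offset between two successive B-scans in polar form can be estimated by circular cross-correlation along the angular axis. This sketch assumes each B-scan is a list of A-lines indexed by angle, and it models the distortion as a single global rotation rather than the non-uniform, per-A-line NURD the paper addresses. The function names are hypothetical; `rotate` mimics the idea of generating synthetic distortion from real frames.

```python
import random
from typing import List

BScan = List[List[float]]  # BScan[angle_index][depth_index]


def rotate(bscan: BScan, k: int) -> BScan:
    """Synthetic rotation: circularly shift the A-lines by k angular steps."""
    n = len(bscan)
    return [list(bscan[(i - k) % n]) for i in range(n)]


def correlation_profile(ref: BScan, cur: BScan) -> List[float]:
    """Score for every candidate shift s: sum_i <cur[i], ref[i - s]>."""
    n = len(ref)
    scores = []
    for s in range(n):
        total = 0.0
        for i in range(n):
            total += sum(a * b for a, b in zip(cur[i], ref[(i - s) % n]))
        scores.append(total)
    return scores


def estimate_rotation(ref: BScan, cur: BScan) -> int:
    """Shift (in A-line steps) that best aligns cur with ref."""
    scores = correlation_profile(ref, cur)
    return max(range(len(scores)), key=scores.__getitem__)


def track_orientation(frames: List[BScan]) -> List[int]:
    """Orientation of each frame relative to the first, by chaining pairwise estimates.

    Chaining propagates any single-step error to all later frames (the
    cumulative error the abstract mentions); the paper counters this with an
    overall rotation estimate against a reference orientation.
    """
    n = len(frames[0])
    angles = [0]
    for prev, cur in zip(frames, frames[1:]):
        angles.append((angles[-1] + estimate_rotation(prev, cur)) % n)
    return angles
```

On real images, a normalized (mean-subtracted) correlation would typically be more robust than the raw inner product used here.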

Deep Reinforcement Learning for the Control of Robotic Manipulation: A Focussed Mini-Review

Feb 08, 2021

Abstract: Deep learning has provided new ways of manipulating, processing and analyzing data. It can sometimes achieve results comparable to, or surpassing, human expert performance, and has become a source of inspiration in the era of artificial intelligence. Another subfield of machine learning, reinforcement learning, tries to find an optimal behavior strategy through interactions with the environment. Combining deep learning and reinforcement learning makes it possible to resolve critical issues related to the dimensionality and scalability of data in tasks with sparse reward signals, such as robotic manipulation and control tasks, which neither method can resolve when applied on its own. In this paper, we present recent significant progress in deep reinforcement learning algorithms that try to tackle the problems arising in robotic manipulation control, such as sample efficiency and generalization. Despite these continuous improvements, the challenges of learning robust and versatile manipulation skills for robots with deep reinforcement learning are still far from resolved for real-world applications.

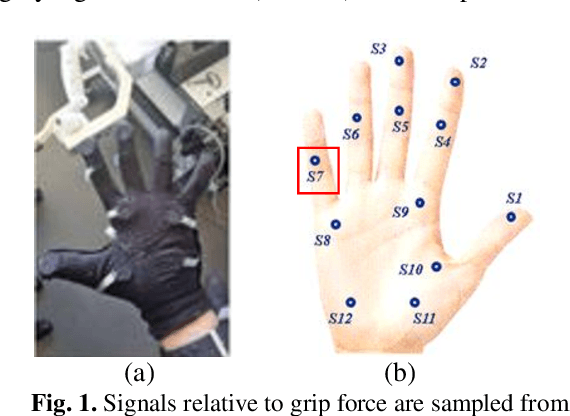
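To make "learning through interactions with the environment" concrete, the sketch below shows tabular Q-learning, the value update that deep Q-networks approximate with a neural network, on a hypothetical five-state corridor with a single sparse reward at the far end. The environment, hyperparameters and function names are illustrative assumptions and are not taken from the review.

```python
import random
from typing import List, Tuple

N_STATES = 5          # states 0..4; state 4 is the terminal goal
ACTIONS = (-1, +1)    # move left or move right


def step(state: int, action: int) -> Tuple[int, float, bool]:
    """Deterministic corridor: reward 1.0 only when the goal is reached."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if nxt == N_STATES - 1 else 0.0
    return nxt, reward, nxt == N_STATES - 1


def q_learning(episodes: int = 200, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.2, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            # Epsilon-greedy selection; ties are broken at random.
            if rng.random() < epsilon or q[s][0] == q[s][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[s][0] > q[s][1] else 1
            s2, r, done = step(s, ACTIONS[a])
            # Bellman target: no bootstrap from the terminal state.
            target = r if done else r + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])
            s = s2
    return q


def greedy_policy(q: List[List[float]]) -> List[int]:
    """Best action in each non-terminal state."""
    return [ACTIONS[0] if qs[0] > qs[1] else ACTIONS[1] for qs in q[:-1]]
```

The reward is zero everywhere except at the goal, so value information has to propagate backwards from the goal over many episodes; this is a small-scale view of the sample-efficiency problem the review discusses.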

Wearable Sensors for Spatio-Temporal Grip Force Profiling

Jan 16, 2021

Abstract: Wearable biosensor technology enables real-time, convenient, and continuous monitoring of users' behavioral signals. These include signals related to body motion, body temperature, biological or biochemical markers, and individual grip forces, which are studied in this paper. A four-step, image-guided and robot-assisted pick-and-drop precision task has been designed for exploiting a wearable wireless sensor glove system. Individual spatio-temporal grip forces are analyzed on the basis of thousands of individual sensor data, collected from different locations on the dominant and non-dominant hands of each of three users in ten successive task sessions. Statistical comparisons reveal specific differences between the grip force profiles of the individual users as a function of task skill level (expertise) and time.
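Grip force profiles of this kind are often summarized before statistical comparison, for example as average forces over time windows of constant size, as in the first abstract of this list. A minimal sketch, assuming each sensor delivers an ordered list of force samples and that windows do not overlap; the window size, sensor names and function names are hypothetical.

```python
from statistics import mean
from typing import Dict, List


def windowed_means(samples: List[float], window: int) -> List[float]:
    """Mean force in consecutive, non-overlapping windows of `window` samples.

    A trailing partial window is dropped so that every value
    summarizes the same number of samples.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    n_full = len(samples) // window
    return [mean(samples[i * window:(i + 1) * window]) for i in range(n_full)]


def profile(sensors: Dict[str, List[float]], window: int) -> Dict[str, List[float]]:
    """Apply windowed_means to every sensor of one hand in one session."""
    return {name: windowed_means(x, window) for name, x in sensors.items()}
```

Profiles computed this way for each user, hand and session could then be compared statistically across skill levels and sessions.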

Correlating grip force signals from multiple sensors highlights prehensile control strategies in a complex task-user system

Nov 12, 2020

Abstract: Wearable sensor systems with transmitting capabilities are currently employed for the biometric screening of exercise activities and other performance data. Such technology is generally wireless and enables the noninvasive monitoring of signals to track and trace user behaviors in real time. Examples include signals related to hand and finger movements or to force control, reflected by individual grip force data. As will be shown here, these signals translate directly into task-, skill-, and hand-specific (dominant versus non-dominant hand) grip force profiles for different measurement loci in the fingers and palm of the hand. The present study draws on thousands of such sensor data recorded from multiple spatial locations. The individual grip force profiles of a highly proficient left-handed expert, a right-handed user trained with the dominant hand, and a right-handed novice performing an image-guided, robot-assisted precision task with the dominant or the non-dominant hand are analyzed. The step-by-step statistical approach follows Tukey's detective work principle, guided by explicit functional assumptions relating to somatosensory receptive field organization in the human brain. Correlation analyses in terms of Pearson product-moment coefficients reveal skill-specific differences in covariation patterns in the individual grip force profiles. These can be functionally mapped to global-to-local coding principles in the brain networks that govern grip force control and its optimization with a specific task expertise. Implications for the real-time monitoring of grip forces and performance training in complex task-user systems are brought forward.
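The Pearson product-moment coefficient between two sensors' grip force series x and y is r = cov(x, y) / (s_x s_y), which ranges from -1 to 1. The sketch below computes it from scratch and builds a sensor-by-sensor correlation table of the kind such covariation analyses rely on; the sensor names and data are hypothetical.

```python
import math
from typing import Dict, List, Tuple


def pearson_r(x: List[float], y: List[float]) -> float:
    """Pearson product-moment correlation of two equal-length series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two series of equal length >= 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation undefined for a constant series")
    return sxy / math.sqrt(sxx * syy)


def correlation_matrix(sensors: Dict[str, List[float]]) -> Dict[Tuple[str, str], float]:
    """r for every ordered pair of sensors, keyed by (name_a, name_b)."""
    names = sorted(sensors)
    return {(a, b): pearson_r(sensors[a], sensors[b]) for a in names for b in names}
```

On Python 3.10 and later, `statistics.correlation` from the standard library computes the same coefficient.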

Sensors for expert grip force profiling: towards benchmarking manual control of a robotic device for surgical tool movements

Nov 12, 2020

Abstract: STRAS (Single access Transluminal Robotic Assistant for Surgeons) is a new robotic system for application to intraluminal surgical procedures. Preclinical testing of STRAS has recently demonstrated major advantages of the system in comparison with classic procedures. Benchmark methods that establish objective criteria for expertise now need to be worked out to effectively train surgeons on this new system in the near future. STRAS consists of three cable-driven subsystems: one endoscope serving as a guide, and two flexible instruments. The flexible instruments have three degrees of freedom and can be teleoperated by a single user via two specially designed master interfaces. In this study, small force sensors sewn into a wearable glove to ergonomically fit the master handles of the robotic system were employed to monitor the forces applied by an expert and by a trainee who was a complete novice during all the steps of surgical task execution in a simulator task, a four-step pick-and-drop. Grip force profiles are analyzed sensor by sensor to bring to the fore specific differences in handgrip force profiles at specific sensor locations on anatomically relevant parts of the fingers and hand controlling the master-slave system.

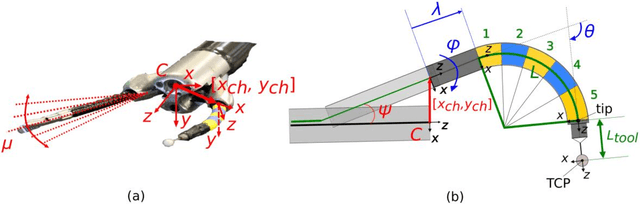

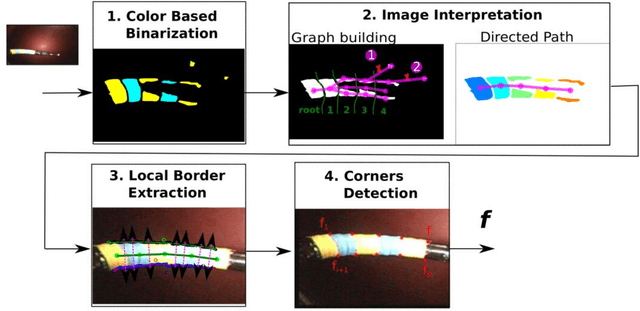

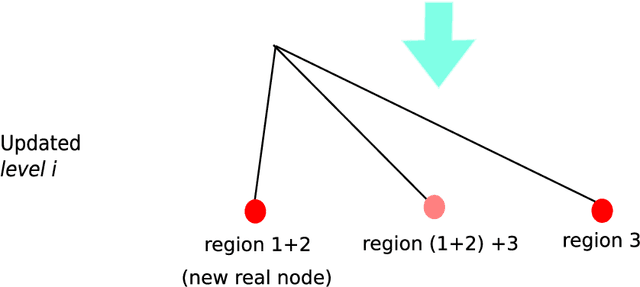

An adaptive and fully automatic method for estimating the 3D position of bendable instruments using endoscopic images

Nov 29, 2019

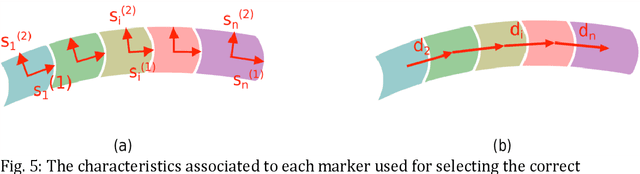

Abstract: Background. Flexible bendable instruments are key tools for performing surgical endoscopy. Being able to measure the 3D position of such instruments can be useful for various tasks, such as automatically controlling robotized instruments and analyzing motions. Methods. We propose an automatic method to infer the 3D pose of a single-bending-section instrument, using only the images provided by a monocular camera embedded at the tip of the endoscope. The proposed method relies on colored markers attached to the bending section. The image of the instrument is segmented using a graph-based method, and the corners of the markers are extracted by detecting the color transition along Bézier curves fitted on edge points. These features are accurately located and then used to estimate the 3D pose of the instrument using an adaptive model that takes into account the mechanical play between the instrument and its housing channel. Results. The feature extraction method provides good localization of marker corners in images of the in vivo environment despite sensor saturation due to strong lighting. The RMS error on the estimation of the tip position of the instrument in laboratory experiments was 2.1, 1.96, and 3.18 mm in the x, y, and z directions, respectively. Qualitative analysis of in vivo images shows the ability to correctly estimate the 3D position of the instrument tip during real motions. Conclusions. The proposed method provides an automatic and accurate estimation of the 3D position of the tip of a bendable instrument in realistic conditions, where standard approaches fail.
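A per-axis RMS error such as the one reported above is the square root of the mean squared difference between estimated and reference tip coordinates, computed separately along x, y and z. A short sketch with hypothetical positions in millimetres (not the study's data); the function name is illustrative.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in mm


def rms_per_axis(estimated: Sequence[Point],
                 reference: Sequence[Point]) -> Tuple[float, ...]:
    """Root-mean-square error along x, y and z separately."""
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("need equally many (and at least one) estimated and reference points")
    n = len(estimated)
    return tuple(
        math.sqrt(sum((e[k] - r[k]) ** 2 for e, r in zip(estimated, reference)) / n)
        for k in range(3)
    )
```

Reporting the three axes separately exposes anisotropic errors; for a monocular camera, the depth direction is often the least well constrained.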
