Christophe Doignon

ICube

An adaptive and fully automatic method for estimating the 3D position of bendable instruments using endoscopic images

Nov 29, 2019

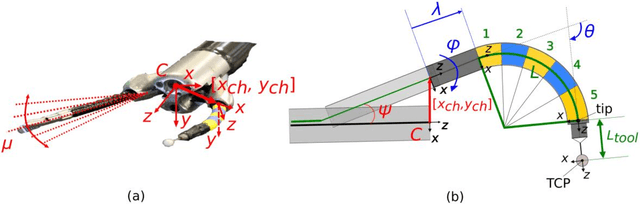

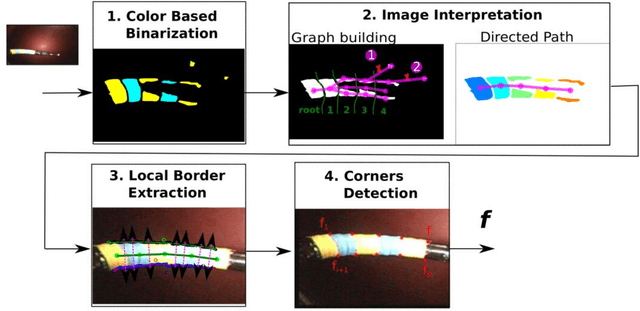

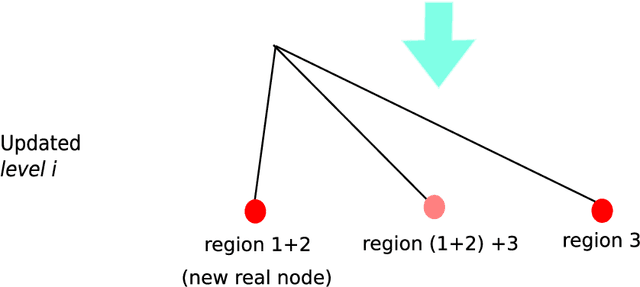

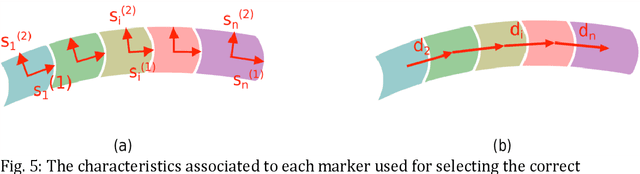

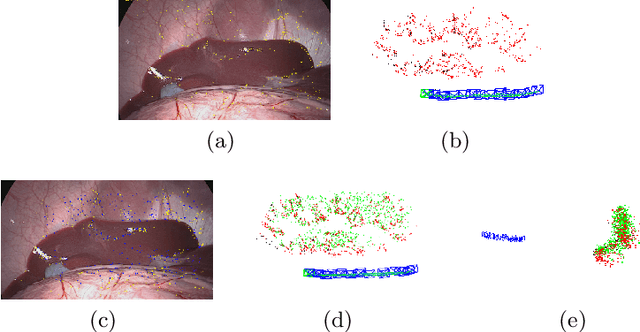

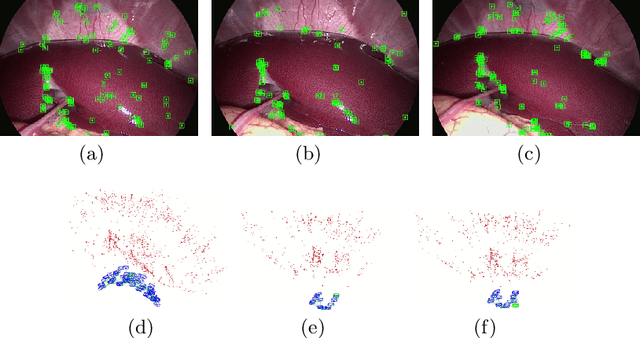

Abstract: Background. Flexible bendable instruments are key tools for performing surgical endoscopy. Being able to measure the 3D position of such instruments can be useful for various tasks, such as automatically controlling robotized instruments and analyzing motions. Methods. We propose an automatic method to infer the 3D pose of a single-bending-section instrument, using only the images provided by a monocular camera embedded at the tip of the endoscope. The proposed method relies on colored markers attached to the bending section. The image of the instrument is segmented using a graph-based method, and the corners of the markers are extracted by detecting the color transition along Bézier curves fitted to edge points. These features are accurately located and then used to estimate the 3D pose of the instrument with an adaptive model that accounts for the mechanical play between the instrument and its housing channel. Results. The feature extraction method localizes marker corners well in in vivo images despite sensor saturation due to strong lighting. The RMS error on the estimated tip position of the instrument in laboratory experiments was 2.1, 1.96, and 3.18 mm in the x, y, and z directions, respectively. Qualitative analysis of in vivo images shows that the method correctly estimates the 3D position of the instrument tip during real motions. Conclusions. The proposed method provides an automatic and accurate estimate of the 3D position of the tip of a bendable instrument in realistic conditions, where standard approaches fail.
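As an illustration of how a per-axis RMS error such as the one reported in this abstract can be computed, here is a minimal sketch. It assumes that estimated and reference tip positions are available as paired (x, y, z) tuples in millimetres; the function name `per_axis_rmse` and the sample values are hypothetical and are not taken from the paper.

```python
import math


def per_axis_rmse(estimated, reference):
    """Return the RMS error along x, y and z for paired 3D positions.

    Both arguments are sequences of (x, y, z) tuples of equal length.
    This is an illustrative helper, not the authors' evaluation code.
    """
    if len(estimated) != len(reference) or len(estimated) == 0:
        raise ValueError("need two non-empty sequences of equal length")

    squared_sums = [0.0, 0.0, 0.0]
    for est, ref in zip(estimated, reference):
        for axis in range(3):
            diff = est[axis] - ref[axis]
            squared_sums[axis] += diff * diff

    n = len(estimated)
    # Root of the mean squared difference, one value per axis.
    return tuple(math.sqrt(s / n) for s in squared_sums)


# Hypothetical positions in mm, for demonstration only.
estimated_tips = [(1.0, 0.0, 0.0), (-1.0, 0.0, 2.0)]
reference_tips = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
rmse_x, rmse_y, rmse_z = per_axis_rmse(estimated_tips, reference_tips)
```

Reporting the error per axis, rather than as a single Euclidean distance, makes it visible when one direction is estimated less accurately than the others.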

SLAM based Quasi Dense Reconstruction For Minimally Invasive Surgery Scenes

May 25, 2017

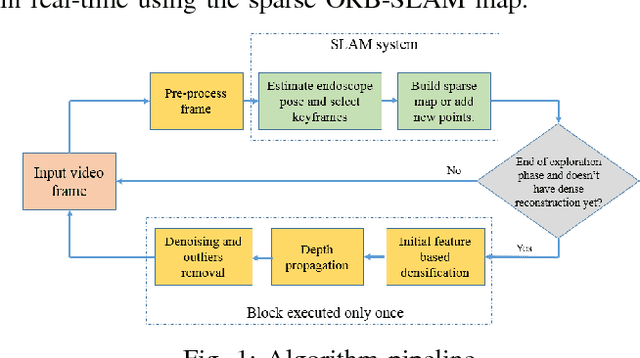

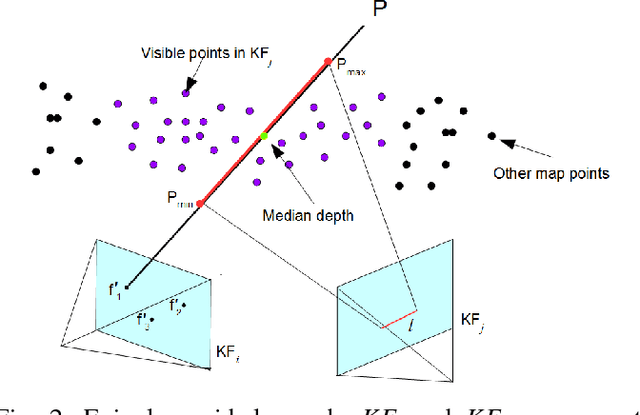

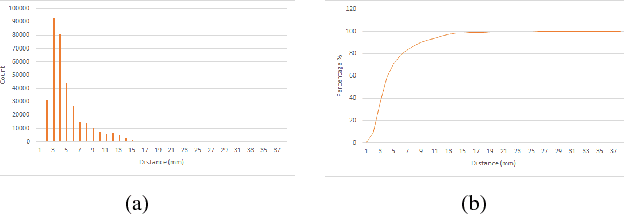

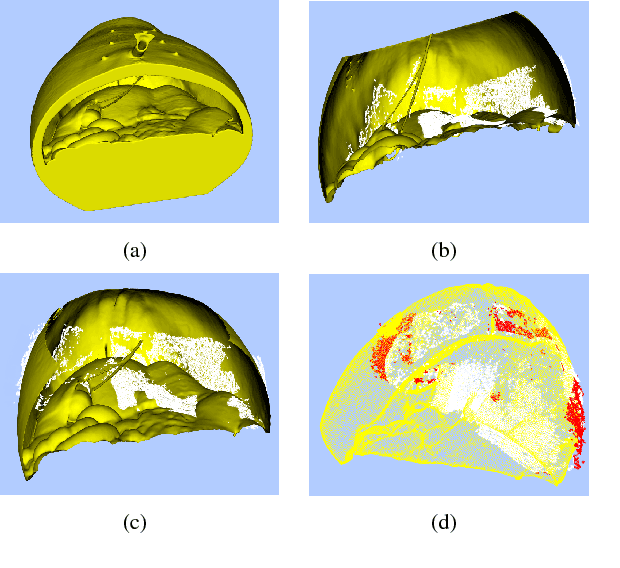

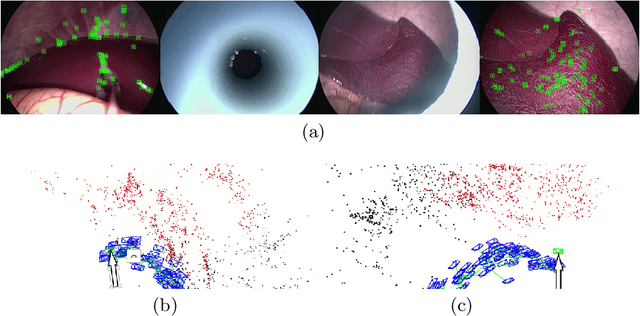

Abstract: Recovering surgical scene structure in laparoscopic surgery is a crucial step for surgical guidance and augmented reality applications. In this paper, a quasi-dense reconstruction algorithm for surgical scenes is proposed. It is based on a state-of-the-art SLAM system and exploits the initial exploration phase that the surgeon typically performs at the beginning of the surgery. We show how to convert the sparse SLAM map into a quasi-dense scene reconstruction, using pairs of keyframe images and correlation-based featureless patch matching. We have validated the approach with a live porcine experiment using Computed Tomography as ground truth, yielding a Root Mean Squared Error of 4.9 mm.
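To make the idea of correlation-based patch matching concrete, the sketch below scores candidate patches with zero-mean normalized cross-correlation (ZNCC) and searches along one image row. The abstract does not state which correlation measure or search strategy the authors use, so ZNCC, the single-row search, and the helper names `zncc`, `extract_patch` and `best_match_in_row` are all assumptions made for illustration.

```python
import math


def zncc(patch_a, patch_b):
    """Zero-mean normalized cross-correlation of two equal-size patches."""
    a = [v for row in patch_a for v in row]
    b = [v for row in patch_b for v in row]
    if len(a) != len(b) or not a:
        raise ValueError("patches must be non-empty and of equal size")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0.0:
        return 0.0  # a constant patch carries no usable texture
    return sum(x * y for x, y in zip(da, db)) / denom


def extract_patch(image, row, col, half):
    """Square patch of side 2 * half + 1 centred on (row, col)."""
    return [r[col - half:col + half + 1]
            for r in image[row - half:row + half + 1]]


def best_match_in_row(template, image, row, half):
    """Slide along one row and return (column, score) of the best ZNCC."""
    best_col, best_score = None, -1.0
    for col in range(half, len(image[0]) - half):
        score = zncc(template, extract_patch(image, row, col, half))
        if score > best_score:
            best_col, best_score = col, score
    return best_col, best_score


# Hypothetical 3x6 grayscale image, for demonstration only.
image = [
    [1, 2, 9, 4, 0, 7],
    [3, 8, 1, 6, 2, 5],
    [7, 0, 5, 3, 9, 1],
]
# Simulate a brightness and contrast change between the two keyframes.
template = [[2 * v + 5 for v in r] for r in extract_patch(image, 1, 3, 1)]
col, score = best_match_in_row(template, image, row=1, half=1)
```

ZNCC is invariant to affine changes in intensity, which is why the rescaled template above still matches its source location. That property is useful when lighting varies between views, as it often does in endoscopic images.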

ORBSLAM-based Endoscope Tracking and 3D Reconstruction

Aug 29, 2016

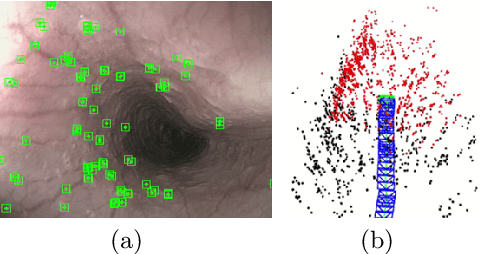

Abstract: We aim to track the endoscope location inside the surgical scene and provide a 3D reconstruction, in real time, from the sole input of the image sequence captured by the monocular endoscope. This information offers new possibilities for developing surgical navigation and augmented reality applications. The main benefit of this approach is that it requires no extra tracking elements, which can disturb the surgeon's performance in clinical routine. Our first contribution is to exploit ORBSLAM, one of the best-performing monocular SLAM algorithms, to estimate both the endoscope location and the 3D structure of the surgical scene. However, the reconstructed 3D map poorly describes textureless soft-organ surfaces such as the liver. Our second contribution is to extend ORBSLAM so that it can reconstruct a semi-dense map of soft organs. Experimental results on in vivo pigs show robust endoscope tracking even with organ deformations and partial instrument occlusions. They also show the reconstruction density and the accuracy against a ground-truth surface obtained from CT.
