Rongrong Liu

Spatiotemporal modeling of grip forces captures proficiency in manual robot control

Mar 03, 2023

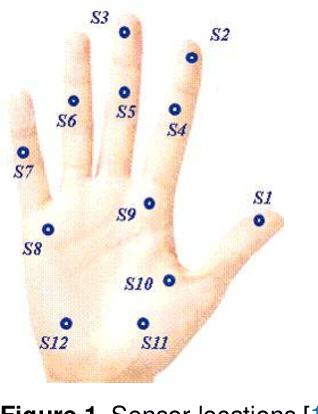

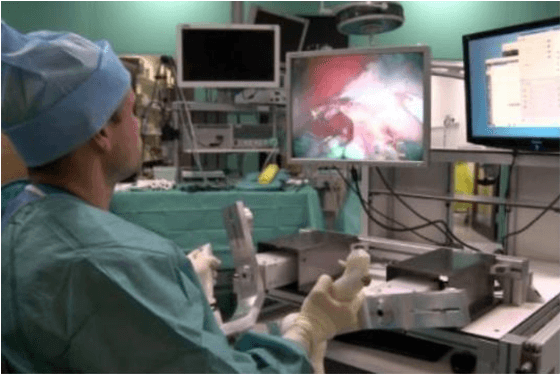

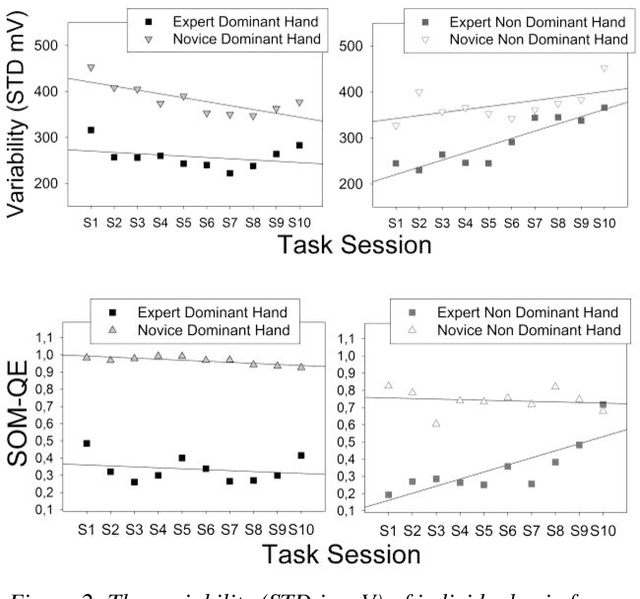

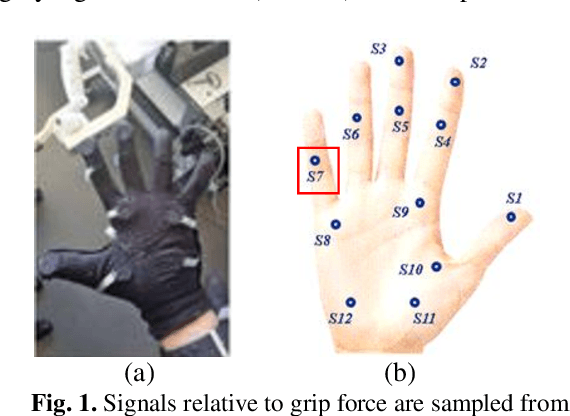

Abstract: This paper builds on our previous work by exploiting Artificial Intelligence to predict individual grip force variability in manual robot control. Grip forces were recorded from various loci in the dominant and non-dominant hands of individuals by means of wearable wireless sensor technology. Statistical analyses bring to the fore skill-specific temporal variations in thousands of grip forces of a complete novice and a highly proficient expert in manual robot control. A brain-inspired neural network model that uses the output metric of a Self-Organizing Map with unsupervised winner-take-all learning was run on the sensor output from both hands of each user. The neural network metric expresses the difference between an input representation and its model representation at any given moment in time t and reliably captures the differences between novice and expert performance in terms of grip force variability. Functionally motivated spatiotemporal analysis of individual average grip forces, computed for time windows of constant size in the output of a restricted number of task-relevant sensors in the dominant (preferred) hand, reveals finger-specific synergies reflecting robotic task skill. The analyses lead the way towards grip force monitoring in real time to permit tracking task skill evolution in trainees, or identifying individual proficiency levels in human-robot interaction in environmental contexts of high sensory uncertainty. Parsimonious Artificial Intelligence (AI) assistance will contribute to the outcome of new types of surgery, in particular single-port approaches such as NOTES (Natural Orifice Transluminal Endoscopic Surgery) and SILS (Single Incision Laparoscopic Surgery).
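The abstract does not give the model's equations, so the following is only a minimal sketch of the general idea: a small Self-Organizing Map trained with winner-take-all updates, scored by the mean distance between each input and its best-matching unit (a metric commonly called quantization error, assumed here to be the kind of output metric meant). The function names, the two-dimensional toy inputs, and all parameter values are illustrative assumptions, not the authors' implementation.

```python
import math
import random


def best_matching_unit(weights, x):
    """Index of the unit whose weight vector is closest to input x."""
    return min(range(len(weights)), key=lambda k: math.dist(weights[k], x))


def train_som(data, n_units=4, epochs=50, lr0=0.5, seed=0):
    """Winner-take-all training: only the best-matching unit is updated."""
    rng = random.Random(seed)
    # Initialize each unit from a randomly chosen input vector.
    weights = [list(rng.choice(data)) for _ in range(n_units)]
    for epoch in range(epochs):
        lr = lr0 * (1.0 - epoch / epochs)  # linearly decaying learning rate
        for x in data:
            win = best_matching_unit(weights, x)
            # Move the winning unit toward the input.
            weights[win] = [w + lr * (xi - w) for w, xi in zip(weights[win], x)]
    return weights


def quantization_error(weights, data):
    """Mean distance between each input and its best-matching unit."""
    total = sum(math.dist(weights[best_matching_unit(weights, x)], x) for x in data)
    return total / len(data)


# Hypothetical toy inputs: each vector stands for two sensor readings at time t.
steady = [[1.0, 1.0] for _ in range(20)]
variable = [[float(i % 5), float((i * 3) % 7)] for i in range(35)]

print(quantization_error(train_som(steady), steady))
print(quantization_error(train_som(variable), variable))
```

In this toy setting the more variable input stream yields the larger error, which is the sense in which such a metric can track variability in a signal.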

Making Sense of Complex Sensor Data Streams

Jun 17, 2021

Abstract: This concept paper draws from our previous research on individual grip force data collected from biosensors placed on specific anatomical locations in the dominant and non-dominant hands of operators performing a robot-assisted precision grip task for minimally invasive endoscopic surgery. The specificity of the robotic system on the one hand, and that of the 2D image-guided task performed in a real-world 3D space on the other, constrain the individual hand and finger movements during task performance in a unique way. Our previous work showed task-specific characteristics of operator expertise in terms of specific grip force profiles, which we were able to detect in thousands of highly variable individual data. This concept paper is focused on two complementary data analysis strategies that allow achieving such a goal. In contrast with other sensor data analysis strategies aimed at minimizing variance in the data, it is necessary in this case to decipher the meaning of the full extent of intra- and inter-individual variance in the sensor data by using the appropriate statistical analyses, as shown in the first part of this paper. Then, it is explained how the computation of individual spatio-temporal grip force profiles permits detecting expertise-specific differences between individual users. It is concluded that these two analytic strategies are complementary. They enable drawing meaning from thousands of biosensor data reflecting human grip performance and its evolution with training, while fully taking into account their considerable inter- and intra-individual variability.

Surgical task expertise detected by a self-organizing neural network map

Jun 03, 2021

Abstract: Individual grip force profiling of bimanual simulator task performance of experts and novices using a robotic control device designed for endoscopic surgery permits defining benchmark criteria that tell true expert task skills from the skills of novices or trainee surgeons. Grip force variability in a true expert and a complete novice executing a robot-assisted surgical simulator task reveals statistically significant differences as a function of task expertise. Here we show that the skill-specific differences in local grip forces are predicted by the output metric of a Self-Organizing neural network Map (SOM) with a bio-inspired functional architecture that maps the functional connectivity of somatosensory neural networks in the primate brain.

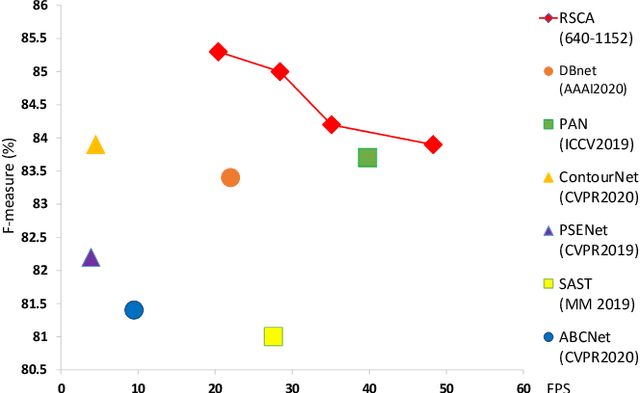

RSCA: Real-time Segmentation-based Context-Aware Scene Text Detection

May 26, 2021

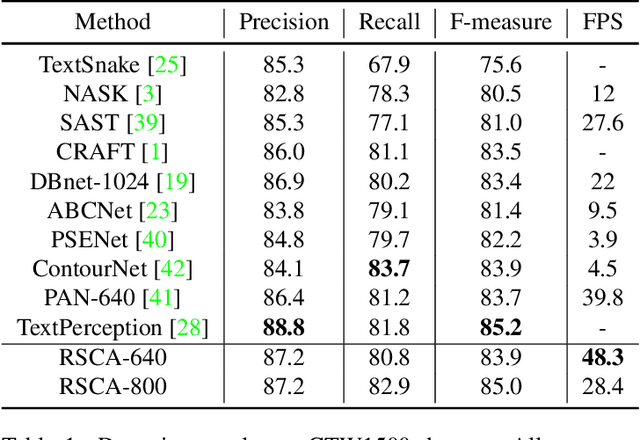

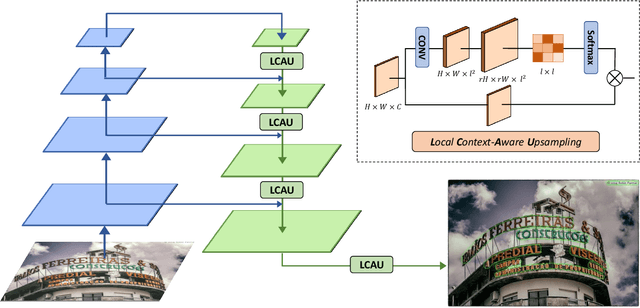

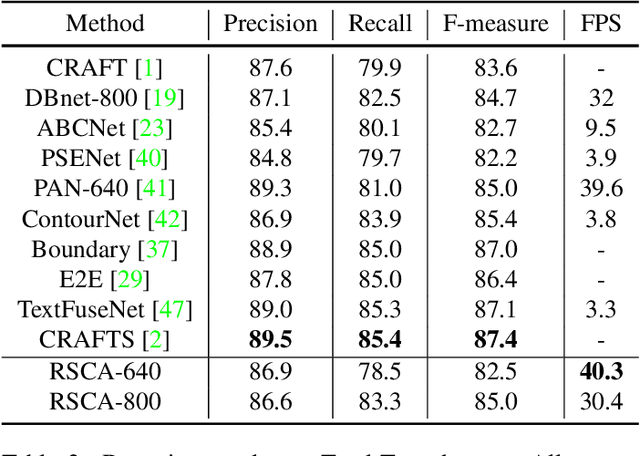

Abstract: Segmentation-based scene text detection methods have been widely adopted for arbitrary-shaped text detection recently, since they make accurate pixel-level predictions on curved text instances and can facilitate real-time inference without time-consuming processing on anchors. However, current segmentation-based models are unable to learn the shapes of curved texts and often require complex label assignments or repeated feature aggregations for more accurate detection. In this paper, we propose RSCA: a Real-time Segmentation-based Context-Aware model for arbitrary-shaped scene text detection, which sets a strong baseline for scene text detection with two simple yet effective strategies: Local Context-Aware Upsampling and Dynamic Text-Spine Labeling, which model local spatial transformation and simplify label assignments, respectively. Based on these strategies, RSCA achieves state-of-the-art performance in both speed and accuracy, without complex label assignments or repeated feature aggregations. We conduct extensive experiments on multiple benchmarks to validate the effectiveness of our method. RSCA-640 reaches 83.9% F-measure at 48.3 FPS on the CTW1500 dataset.
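For readers unfamiliar with the reported metric: the F-measure is conventionally the harmonic mean of precision $P$ and recall $R$. The abstract does not state the underlying precision and recall, so the definition below is the standard one and is assumed, not confirmed, to match the paper's evaluation protocol.

$$
F = \frac{2PR}{P + R}
$$

As an illustration with hypothetical values that are not taken from the paper, $P = 0.80$ and $R = 0.90$ give $F = 1.44 / 1.70 \approx 0.847$.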

Deep Reinforcement Learning for the Control of Robotic Manipulation: A Focussed Mini-Review

Feb 08, 2021

Abstract: Deep learning has provided new ways of manipulating, processing and analyzing data. It may sometimes achieve results comparable to, or surpassing, human expert performance, and has become a source of inspiration in the era of artificial intelligence. Reinforcement learning, another subfield of machine learning, tries to find an optimal behavior strategy through interactions with the environment. Combining deep learning and reinforcement learning permits resolving critical issues relative to the dimensionality and scalability of data in tasks with sparse reward signals, such as robotic manipulation and control tasks, that neither method permits resolving when applied on its own. In this paper, we present recent significant progress of deep reinforcement learning algorithms, which try to tackle the problems that arise in their application to robotic manipulation control, such as sample efficiency and generalization. Despite these continuous improvements, the challenges of learning robust and versatile manipulation skills for robots with deep reinforcement learning are currently still far from being resolved for real-world applications.
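To make "finding an optimal behavior strategy through interactions with the environment" under a sparse reward concrete, here is a minimal sketch of tabular Q-learning on a hypothetical five-state chain. It is deliberately not deep reinforcement learning: deep variants replace the table `q` with a neural network so that large state spaces become tractable. The environment, the function names, and the hyperparameters are illustrative assumptions and do not come from the review.

```python
import random

N_STATES = 5      # states 0..4; state 4 is the terminal goal
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Deterministic chain with a sparse reward: 1.0 only on reaching the goal."""
    nxt = max(state - 1, 0) if action == 0 else state + 1
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def q_learning(episodes=200, alpha=0.5, gamma=0.9, seed=0):
    """Learn action values from uniformly random exploration (off-policy)."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = rng.choice(ACTIONS)
            nxt, reward, done = step(state, action)
            # Bootstrapped target: immediate reward plus discounted best next value.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
    return q


q_table = q_learning()
# Greedy action for each non-terminal state.
greedy_policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(greedy_policy)
```

Even in this tiny example the reward is only observed at the goal, and its value has to propagate backwards through the table over many episodes, which hints at why sample efficiency is a central concern in robotic manipulation.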

Wearable Sensors for Spatio-Temporal Grip Force Profiling

Jan 16, 2021

Abstract: Wearable biosensor technology enables real-time, convenient, and continuous monitoring of users' behavioral signals. These include signals relative to body motion, body temperature, biological or biochemical markers, and individual grip forces, which are studied in this paper. A four-step pick-and-drop, image-guided and robot-assisted precision task has been designed for exploiting a wearable wireless sensor glove system. Individual spatio-temporal grip forces are analyzed on the basis of thousands of individual sensor data, collected from different locations on the dominant and non-dominant hands of each of three users in ten successive task sessions. Statistical comparisons reveal specific differences between grip force profiles of the individual users as a function of task skill level (expertise) and time.
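One simple way to turn raw per-sensor recordings into a spatio-temporal profile is to average each sensor's signal over consecutive windows of fixed size. The sketch below assumes that approach; the sensor labels, the sample values, and the window length are hypothetical, and the abstract does not specify the authors' actual processing.

```python
def windowed_means(samples, window):
    """Average consecutive, non-overlapping windows of `window` samples."""
    if window <= 0:
        raise ValueError("window must be positive")
    n_full = len(samples) // window  # a trailing partial window is dropped
    return [
        sum(samples[i * window:(i + 1) * window]) / window
        for i in range(n_full)
    ]


def grip_profile(recording, window):
    """Map each sensor label to its sequence of window averages."""
    return {sensor: windowed_means(values, window) for sensor, values in recording.items()}


# Hypothetical recording: two sensor locations, seven samples each.
recording = {
    "sensor_a": [1, 2, 3, 4, 5, 6, 7],
    "sensor_b": [10, 10, 10, 20, 20, 20, 30],
}
profile = grip_profile(recording, window=3)
print(profile)
```

Profiles built this way can then be compared across users, sessions, or sensor locations with whatever statistical test suits the data.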
