Philip T. Jackson

Camera Bias in a Fine Grained Classification Task

Jul 16, 2020

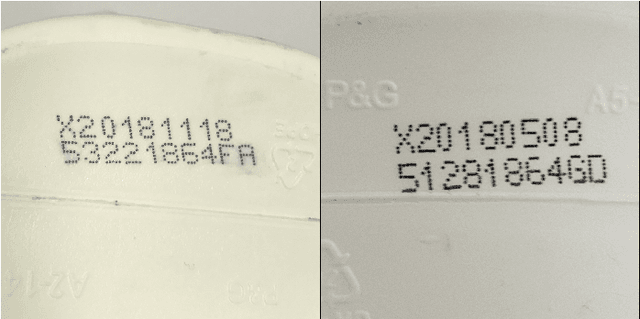

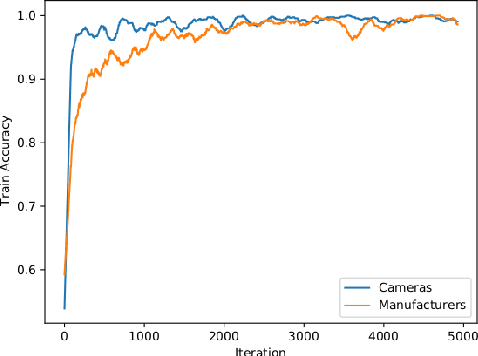

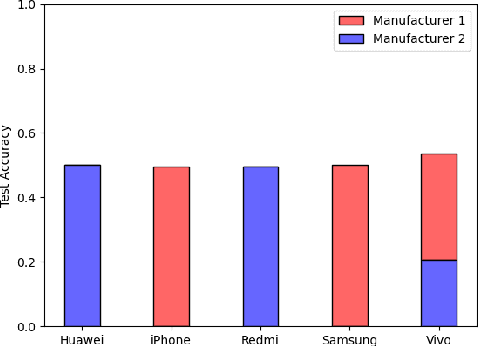

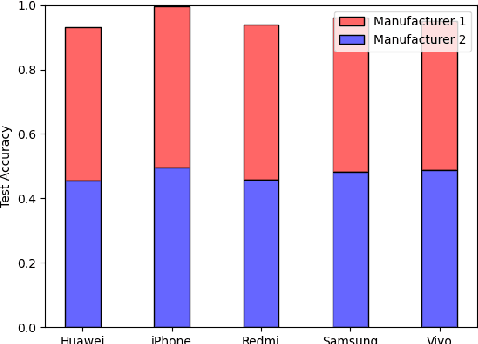

Abstract: We show that correlations between the camera used to acquire an image and the class label of that image can be exploited by convolutional neural networks (CNNs), resulting in a model that "cheats" at an image classification task by recognizing which camera took the image and inferring the class label from the camera. We show that models trained on a dataset with camera/label correlations do not generalize well to images in which those correlations are absent, nor to images from unencountered cameras. Furthermore, we investigate which visual features they are exploiting for camera recognition. Our experiments present evidence against the importance of global color statistics, lens deformation and chromatic aberration, and in favor of high-frequency features, which may be introduced by image processing algorithms built into the cameras.
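To make the "cheating" risk concrete, the following is a minimal sketch (not taken from the paper) of one way to check how strongly camera identity predicts the class label in a dataset. The function name `camera_only_accuracy` and its inputs are hypothetical; it simply reports the accuracy of a rule that predicts, for every image, the most common label seen for that image's camera.

```python
from collections import Counter, defaultdict


def camera_only_accuracy(cameras, labels):
    """In-sample accuracy of predicting each label from the camera alone.

    cameras: sequence of camera identifiers, one per image (assumed metadata).
    labels:  sequence of class labels, one per image.
    """
    if len(cameras) != len(labels):
        raise ValueError("cameras and labels must have the same length")
    if len(labels) == 0:
        raise ValueError("at least one image is required")

    # Count how often each label occurs for each camera.
    per_camera = defaultdict(Counter)
    for camera, label in zip(cameras, labels):
        per_camera[camera][label] += 1

    # A camera-only rule gets the majority label right for each camera.
    correct = sum(max(counts.values()) for counts in per_camera.values())
    return correct / len(labels)
```

A value close to 1.0 means the label can be almost fully inferred from the camera, so a model could score well without looking at the object at all. A value close to the majority-class rate suggests little camera/label correlation. The number is computed on the same data it is measured on, so it is best read as a rough diagnostic rather than a held-out estimate.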

Phenotypic Profiling of High Throughput Imaging Screens with Generic Deep Convolutional Features

Mar 15, 2019

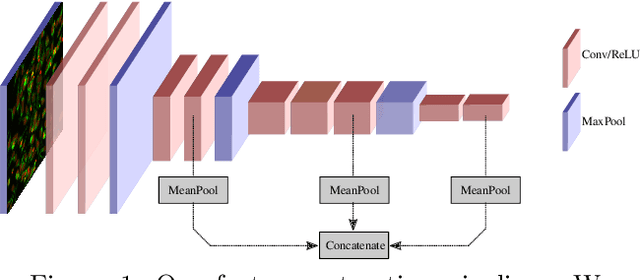

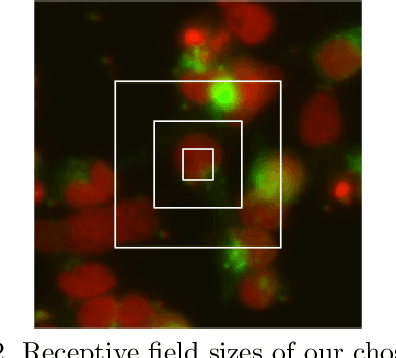

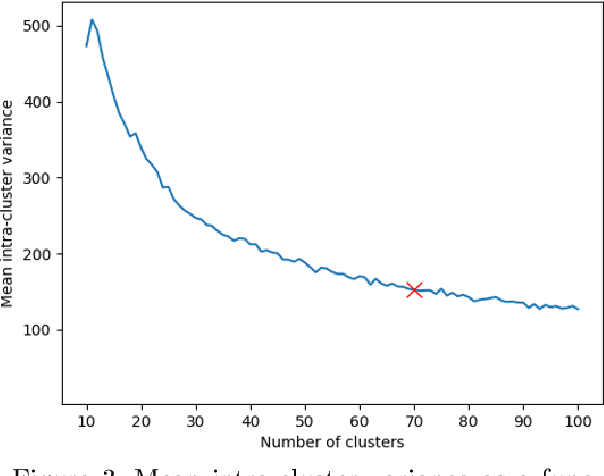

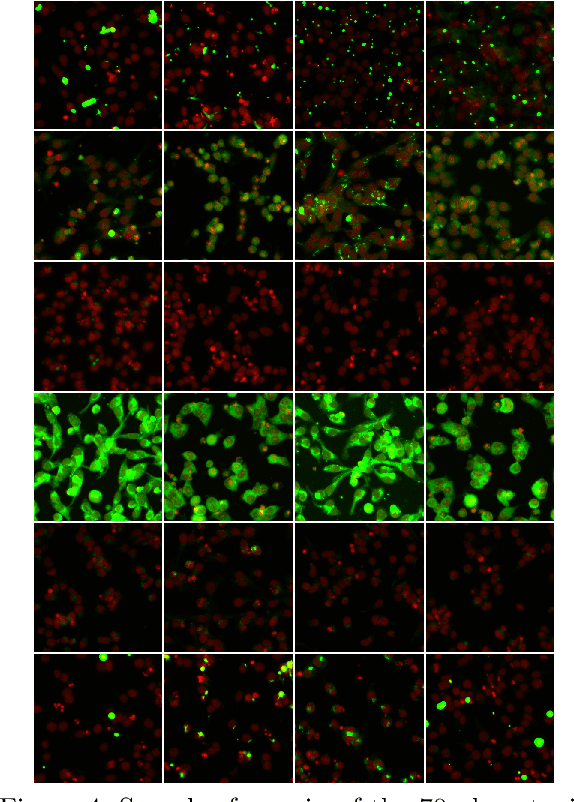

Abstract: While deep learning has seen many recent applications to drug discovery, most have focused on predicting activity or toxicity directly from chemical structure. Phenotypic changes exhibited in cellular images are also indications of the mechanism of action (MoA) of chemical compounds. In this paper, we show how pre-trained convolutional image features can be used to assist scientists in discovering interesting chemical clusters for further investigation. Our method reduces the dimensionality of raw fluorescent-stained images from a high throughput imaging (HTI) screen, producing an embedding space that groups together images with similar cellular phenotypes. Running standard unsupervised clustering on this embedding space yields a set of distinct phenotypic clusters. This allows scientists to further select and focus on interesting clusters for downstream analyses. We validate the consistency of our embedding space qualitatively with t-SNE visualizations, and quantitatively by measuring embedding variance among images that are known to be similar. Our results suggest that the proposed workflow, which combines deep learning features with clustering, is useful and can lead to robust HTI screening and compound triage.
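The embed-then-cluster workflow described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the paper's pipeline: it assumes the pre-trained CNN features have already been extracted into a NumPy array with one row per image, and it swaps in plain PCA and a basic k-means as stand-ins for whatever dimensionality reduction and "standard unsupervised clustering" the authors used. The function names `pca_embed` and `kmeans` are hypothetical.

```python
import numpy as np


def pca_embed(features, n_components):
    """Project feature vectors onto their top principal components."""
    centered = features - features.mean(axis=0)
    # Rows of vt are the principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T


def kmeans(points, k, n_iter=50):
    """Basic Lloyd's k-means with deterministic farthest-point initialization."""
    # Start from the first point, then repeatedly add the point that is
    # farthest from all centers chosen so far.
    centers = [points[0]]
    for _ in range(1, k):
        dist = np.min(
            [np.sum((points - c) ** 2, axis=1) for c in centers], axis=0
        )
        centers.append(points[np.argmax(dist)])
    centers = np.array(centers, dtype=float)

    for _ in range(n_iter):
        sq_dist = np.sum((points[:, None, :] - centers[None, :, :]) ** 2, axis=2)
        labels = np.argmin(sq_dist, axis=1)
        new_centers = centers.copy()
        for j in range(k):
            members = points[labels == j]
            if len(members) > 0:  # keep the old center if a cluster empties
                new_centers[j] = members.mean(axis=0)
        if np.allclose(new_centers, centers):
            break
        centers = new_centers

    # Final assignment against the final centers.
    sq_dist = np.sum((points[:, None, :] - centers[None, :, :]) ** 2, axis=2)
    return np.argmin(sq_dist, axis=1), centers
```

In use, one would call `pca_embed` on the feature matrix and pass the result to `kmeans`, then inspect which compounds fall into each cluster. The number of components and clusters are free parameters here and would need to be chosen for the screen at hand.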

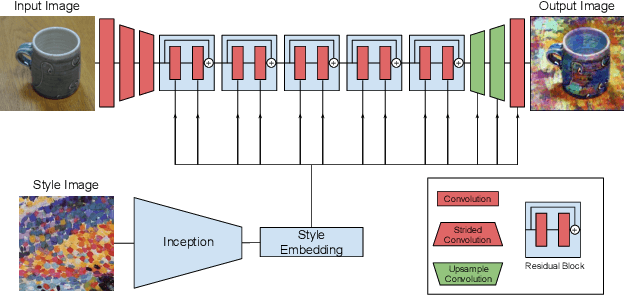

Style Augmentation: Data Augmentation via Style Randomization

Sep 14, 2018

Abstract: We introduce style augmentation, a new form of data augmentation based on random style transfer, for improving the robustness of convolutional neural networks (CNNs) on both classification and regression tasks. During training, our style augmentation randomizes texture, contrast and color, while preserving shape and semantic content. This is accomplished by adapting an arbitrary style transfer network to perform style randomization, by sampling input style embeddings from a multivariate normal distribution instead of inferring them from a style image. In addition to standard classification experiments, we investigate the effect of style augmentation (and data augmentation generally) on domain transfer tasks. We find that data augmentation significantly improves robustness to domain shift, and can be used as a simple, domain-agnostic alternative to domain adaptation. Comparing style augmentation against a mix of seven traditional augmentation techniques, we find that it can be readily combined with them to improve network performance. We validate the efficacy of our technique with domain transfer experiments in classification and monocular depth estimation, illustrating consistent improvements in generalization.
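The sampling step described above, drawing a style embedding from a multivariate normal distribution rather than computing it from a style image, might look roughly like this. It is a sketch under assumptions: the abstract does not say how the distribution's parameters are obtained, so estimating the mean and covariance from a collection of existing style embeddings is an assumption here, and the names `fit_style_distribution` and `sample_style_embedding` are hypothetical. The style transfer network itself is not shown.

```python
import numpy as np


def fit_style_distribution(embeddings):
    """Estimate mean and covariance from style embeddings.

    embeddings: array of shape (n_styles, dim) with dim > 1, assumed to come
    from a style encoder applied to a set of style images.
    """
    mean = embeddings.mean(axis=0)
    cov = np.cov(embeddings, rowvar=False)  # columns are embedding dimensions
    return mean, cov


def sample_style_embedding(mean, cov, rng):
    """Draw one random style embedding from N(mean, cov)."""
    return rng.multivariate_normal(mean, cov)
```

During training, each sampled embedding would be fed to the style transfer network in place of an embedding inferred from a style image, so every training image receives a different random style. Passing an explicit `np.random.default_rng(seed)` keeps the augmentation reproducible.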
