Style Augmentation: Data Augmentation via Style Randomization

Sep 14, 2018

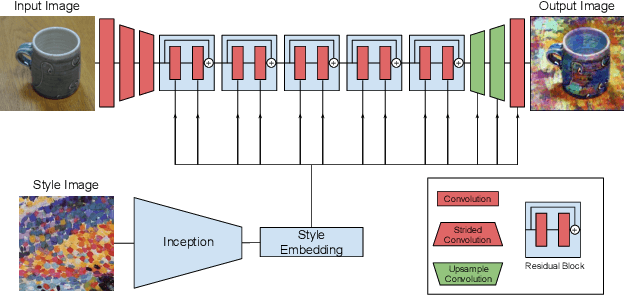

We introduce style augmentation, a new form of data augmentation based on random style transfer, for improving the robustness of convolutional neural networks (CNNs) on both classification and regression tasks. During training, style augmentation randomizes texture, contrast, and color while preserving shape and semantic content. We accomplish this by adapting an arbitrary style transfer network to perform style randomization: input style embeddings are sampled from a multivariate normal distribution instead of being inferred from a style image. In addition to standard classification experiments, we investigate the effect of style augmentation (and data augmentation generally) on domain transfer tasks. We find that data augmentation significantly improves robustness to domain shift and can be used as a simple, domain-agnostic alternative to domain adaptation. Comparing style augmentation against a mix of seven traditional augmentation techniques, we find that it can be readily combined with them to improve network performance. We validate the efficacy of our technique with domain transfer experiments in classification and monocular depth estimation, which show consistent improvements in generalization.
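
The sampling step described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the embedding dimension, the function names, and the randomly generated stand-in for reference embeddings are all assumptions made for illustration. It only shows how a multivariate normal could be fitted to a set of style embeddings and then sampled, in place of inferring an embedding from a style image.

```python
import numpy as np

# Hypothetical embedding size; the abstract does not state the real value.
EMBEDDING_DIM = 100


def fit_style_distribution(embeddings):
    """Estimate the mean and covariance of a set of style embeddings.

    `embeddings` has shape (num_styles, embedding_dim).
    """
    mean = embeddings.mean(axis=0)
    cov = np.cov(embeddings, rowvar=False)  # columns are the variables
    return mean, cov


def sample_style_embeddings(mean, cov, batch_size, rng):
    """Draw one random style embedding per training image."""
    return rng.multivariate_normal(mean, cov, size=batch_size)


rng = np.random.default_rng(0)

# Stand-in data (assumption): in practice these would be embeddings that a
# style predictor network produced for a collection of style images.
reference_embeddings = rng.normal(size=(1000, EMBEDDING_DIM))

mean, cov = fit_style_distribution(reference_embeddings)
styles = sample_style_embeddings(mean, cov, batch_size=8, rng=rng)
print(styles.shape)  # (8, 100)
```

Each sampled row would then be passed to the style transfer network in place of an embedding computed from a style image, so that every training batch is rendered in a freshly randomized style.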
