Philip Pratt

Self-Supervised Siamese Learning on Stereo Image Pairs for Depth Estimation in Robotic Surgery

May 17, 2017

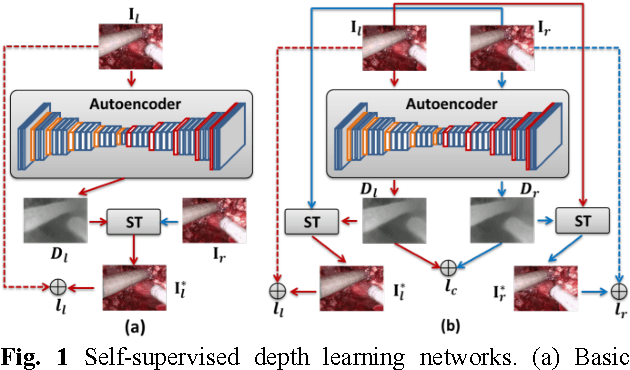

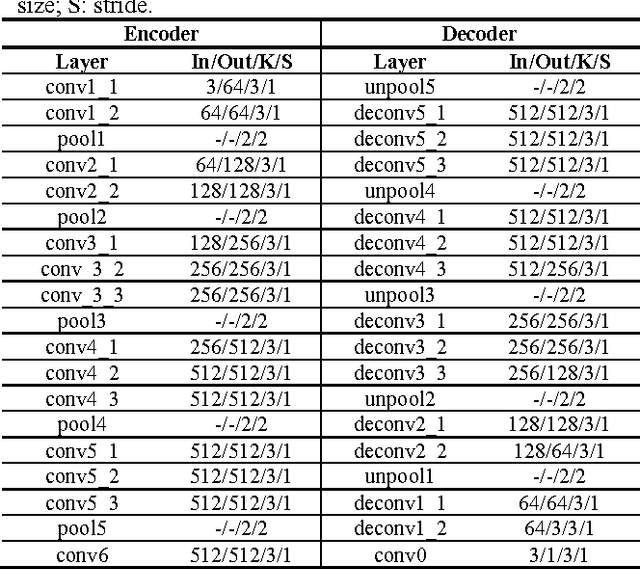

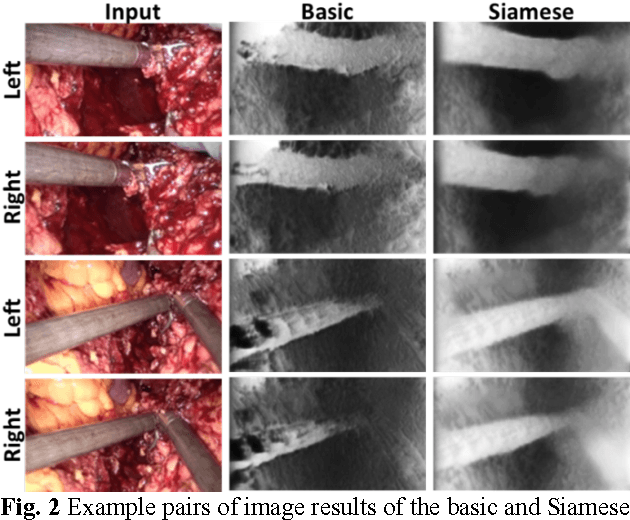

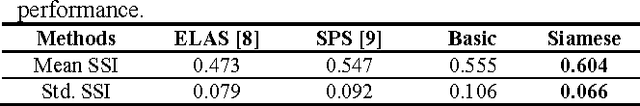

Abstract: Robotic surgery has become a powerful tool for performing minimally invasive procedures, providing advantages in dexterity, precision, and 3D vision over traditional surgery. One popular robotic system is the da Vinci surgical platform, which allows preoperative information to be incorporated into live procedures using Augmented Reality (AR). Scene depth estimation is a prerequisite for AR, as accurate registration requires 3D correspondences between preoperative and intraoperative organ models. In the past decade, there has been much progress on depth estimation for surgical scenes, for example using monocular or binocular laparoscopes [1,2]. More recently, advances in deep learning have enabled depth estimation via Convolutional Neural Networks (CNNs) [3], but training requires a large image dataset with ground truth depths. Inspired by [4], we propose a deep learning framework for surgical scene depth estimation that uses self-supervision for scalable data acquisition. Our framework consists of an autoencoder for depth prediction and a differentiable spatial transformer for training the autoencoder on stereo image pairs without ground truth depths. Validation was conducted on stereo videos collected in robotic partial nephrectomy.
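The abstract gives no implementation details, so the following is only a minimal NumPy sketch of the general idea behind training on stereo pairs without ground truth: a predicted disparity map is used to resample one view into the other, and the reconstruction error serves as the training signal. The function names, the grayscale input, the linear interpolation, and the sign convention (a left-image pixel at column x appears at column x - d in the right image) are all assumptions made for illustration, not the authors' method. In an actual training setup the same sampling would be written in a framework that propagates gradients through it.

```python
import numpy as np

def warp_right_to_left(right, disparity):
    """Reconstruct the left view by sampling the right image at x - d(y, x).

    right:     (H, W) grayscale image (assumption: rectified stereo pair)
    disparity: (H, W) non-negative horizontal disparity for the left view
    """
    h, w = disparity.shape
    # Source column in the right image for every left-image pixel.
    xs = np.arange(w, dtype=np.float64)[None, :] - disparity
    xs = np.clip(xs, 0.0, w - 1.0)          # clamp samples that fall outside the image
    x0 = np.floor(xs).astype(int)           # left neighbour column
    x1 = np.minimum(x0 + 1, w - 1)          # right neighbour column
    frac = xs - x0                          # linear interpolation weight
    rows = np.arange(h)[:, None]
    return (1.0 - frac) * right[rows, x0] + frac * right[rows, x1]

def photometric_l1(left, right, disparity):
    """Mean absolute error between the left image and its reconstruction."""
    return float(np.mean(np.abs(left - warp_right_to_left(right, disparity))))
```

Because the loss depends only on the two images and the predicted disparity, no ground truth depth is needed; a lower value indicates that the disparity map explains the stereo pair better.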

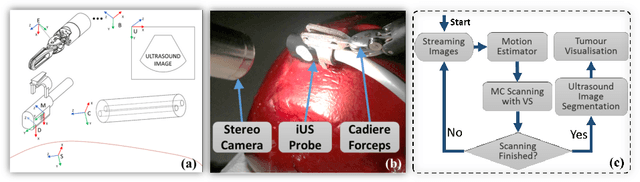

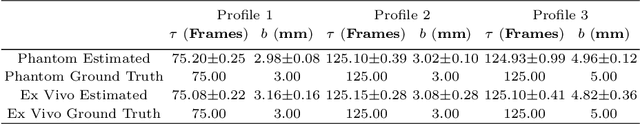

Motion-Compensated Autonomous Scanning for Tumour Localisation using Intraoperative Ultrasound

May 16, 2017

Abstract: Intraoperative ultrasound facilitates localisation of tumour boundaries during minimally invasive procedures. Autonomous ultrasound scanning systems have recently been proposed to improve scanning accuracy and reduce surgeons' cognitive load. However, current methods mainly consider static scanning environments, typically with the probe pressing against the tissue surface. In this work, a motion-compensated autonomous ultrasound scanning system using the da Vinci Research Kit (dVRK) is proposed. An optimal scanning trajectory is generated considering both the tissue surface shape and the ultrasound transducer dimensions. A robust vision-based approach is proposed to learn the underlying tissue motion characteristics. The learned motion model is then incorporated into the visual servoing framework. The proposed system has been validated in both phantom and ex vivo experiments, using ground truth motion data for comparison.
