Petteri Nurmi

Thermal Dissipation Resulting from Everyday Interactions as a Sensing Modality -- The MIDAS Touch

Dec 06, 2022

Abstract: We contribute MIDAS as a novel sensing solution for characterizing everyday objects using thermal dissipation. MIDAS takes advantage of the fact that anytime a person touches an object it results in heat transfer. By capturing and modeling the dissipation of the transferred heat, e.g., through the decrease in the captured thermal radiation, MIDAS can characterize the object and determine its material. We validate MIDAS through extensive empirical benchmarks and demonstrate that MIDAS offers an innovative sensing modality that can recognize a wide range of materials with up to 83% accuracy and generalize to variations in the people interacting with objects. We also demonstrate that MIDAS can detect thermal dissipation through objects, up to 2 mm thickness, and support analysis of multiple objects that are interacted with
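To make the idea of "modeling the dissipation of the transferred heat" concrete, here is a minimal sketch. It is not the model used in MIDAS: it assumes the touched spot cools exponentially toward ambient temperature (Newton's law of cooling), and the function name `fit_decay_rate` and all numbers are hypothetical. The fitted decay rate is the kind of quantity that could serve as a feature for telling materials apart.

```python
import math

def fit_decay_rate(times, temps, ambient):
    """Fit T(t) = ambient + dT0 * exp(-k * t) by linear least squares
    on ln(T - ambient). Returns (k, dT0).

    Assumption: exponential cooling toward a known ambient temperature.
    """
    # Keep only samples that are still warmer than ambient (log must be defined).
    pts = [(t, math.log(T - ambient)) for t, T in zip(times, temps) if T > ambient]
    n = len(pts)
    if n < 2:
        raise ValueError("need at least two samples above ambient")
    mean_t = sum(t for t, _ in pts) / n
    mean_y = sum(y for _, y in pts) / n
    sxx = sum((t - mean_t) ** 2 for t, _ in pts)
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in pts)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_t
    return -slope, math.exp(intercept)

# Synthetic, noise-free trace: 3 degrees above a 22 degree ambient, k = 0.4 per second.
times = [0.5 * i for i in range(20)]
temps = [22.0 + 3.0 * math.exp(-0.4 * t) for t in times]
k, dT0 = fit_decay_rate(times, temps, ambient=22.0)
```

On real thermal-camera data the trace would be noisy and the ambient level would itself have to be estimated, so a robust or nonlinear fit would likely be needed.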

Low-Cost Outdoor Air Quality Monitoring and In-Field Sensor Calibration

Feb 05, 2020

Abstract: The significance of air pollution and the problems associated with it is fueling deployments of air quality monitoring stations worldwide. The most common approach for air quality monitoring is to rely on environmental monitoring stations, which unfortunately are very expensive both to acquire and to maintain. Hence, environmental monitoring stations are typically deployed sparsely, resulting in limited spatial resolution for measurements. Recently, low-cost air quality sensors have emerged as an alternative that can improve the granularity of monitoring. The use of low-cost air quality sensors, however, presents several challenges: they suffer from cross-sensitivities between different ambient pollutants; they can be affected by external factors such as traffic, weather changes, and human behavior; and their accuracy degrades over time. The accuracy of low-cost sensors can be improved through periodic re-calibration, with machine-learning-based calibration in particular showing great promise because it can calibrate sensors in the field. In this article, we survey the rapidly growing research landscape of low-cost sensor technologies for air quality monitoring and their calibration using machine learning techniques. We also identify open research challenges and present directions for future research.
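As an illustration of in-field calibration, the sketch below fits a multiple linear regression that maps a low-cost sensor's raw reading, together with temperature and humidity, onto readings from a co-located reference station. This is one simple baseline and not a method taken from the survey; the function names, the choice of covariates, and the synthetic data are all assumptions.

```python
import numpy as np

def _design(raw, temperature, humidity):
    # Columns: intercept, raw sensor reading, temperature, relative humidity.
    raw = np.asarray(raw, dtype=float)
    return np.column_stack([
        np.ones_like(raw),
        raw,
        np.asarray(temperature, dtype=float),
        np.asarray(humidity, dtype=float),
    ])

def fit_calibration(raw, temperature, humidity, reference):
    """Least-squares coefficients mapping sensor inputs to reference values."""
    coef, *_ = np.linalg.lstsq(
        _design(raw, temperature, humidity),
        np.asarray(reference, dtype=float),
        rcond=None,
    )
    return coef

def apply_calibration(coef, raw, temperature, humidity):
    return _design(raw, temperature, humidity) @ coef

# Synthetic co-location data: the raw signal is biased and depends on weather.
rng = np.random.default_rng(0)
reference = rng.uniform(5, 60, size=200)      # hypothetical "true" concentration
temperature = rng.uniform(-5, 30, size=200)
humidity = rng.uniform(20, 95, size=200)
raw = 1.5 * reference + 0.2 * temperature - 0.1 * humidity + 4.0

coef = fit_calibration(raw, temperature, humidity, reference)
calibrated = apply_calibration(coef, raw, temperature, humidity)
```

Because sensor accuracy drifts over time, a model like this would need to be refitted periodically against fresh reference data.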

Towards Using Unlabeled Data in a Sparse-coding Framework for Human Activity Recognition

Jul 23, 2014

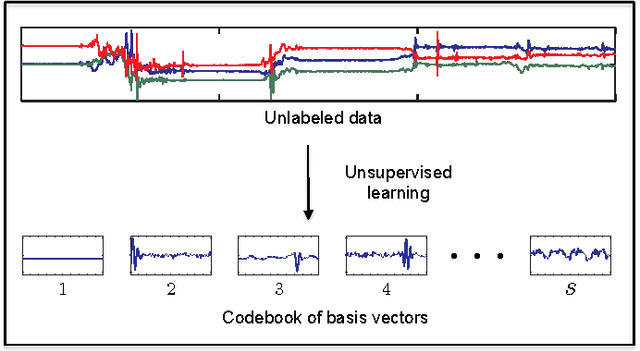

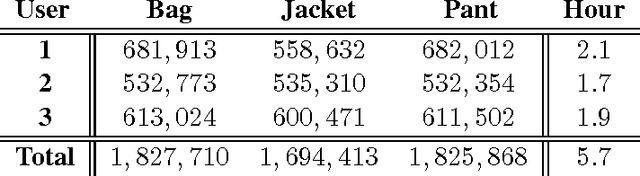

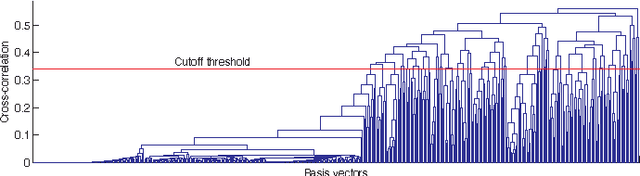

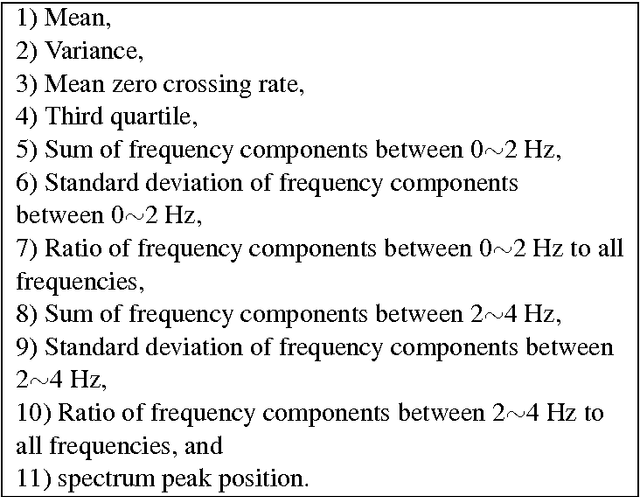

Abstract: We propose a sparse-coding framework for activity recognition in ubiquitous and mobile computing that alleviates two fundamental problems of current supervised learning approaches. (i) It automatically derives a compact, sparse and meaningful feature representation of sensor data that does not rely on prior expert knowledge and generalizes extremely well across domain boundaries. (ii) It exploits unlabeled sample data for bootstrapping effective activity recognizers, i.e., it substantially reduces the amount of ground truth annotation required for model estimation. Such unlabeled data is trivial to obtain, e.g., through contemporary smartphones carried by users as they go about their everyday activities. Based on the self-taught learning paradigm, we automatically derive an over-complete set of basis vectors from unlabeled data that captures inherent patterns present within activity data. Features are then extracted by projecting raw sensor data onto the feature space defined by this over-complete set of basis vectors. Given these learned feature representations, classification backends are trained using small amounts of labeled training data. We study the new approach in detail using two datasets which differ in terms of the recognition tasks and sensor modalities. Primarily we focus on a transportation mode analysis task, a popular task in mobile-phone based sensing. The sparse-coding framework significantly outperforms the state-of-the-art in supervised learning approaches. Furthermore, we demonstrate the great practical potential of the new approach by successfully evaluating its generalization capabilities across both domain and sensor modalities by considering the popular Opportunity dataset. Our feature learning approach outperforms state-of-the-art approaches to analyzing activities in daily living.
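The pipeline described in the abstract (learn an over-complete basis from unlabeled windows, encode data as sparse codes, train a classifier on a few labeled examples) can be sketched roughly as follows. This is an illustrative toy, not the authors' implementation: it uses ISTA for the sparse codes, a least-squares basis update, a nearest-centroid classifier as a stand-in for the unspecified "classification backends", and synthetic sinusoid windows in place of real sensor data. All names and parameter values are assumptions.

```python
import numpy as np

def soft_threshold(x, lam):
    # Proximal operator of the L1 norm: shrinks values toward zero.
    return np.sign(x) * np.maximum(np.abs(x) - lam, 0.0)

def sparse_codes(X, D, lam=0.3, n_iter=50):
    # ISTA for min_A 0.5*||X - A D||^2 + lam*||A||_1 with the basis D fixed.
    step = 1.0 / (np.linalg.norm(D @ D.T, 2) + 1e-12)
    A = np.zeros((X.shape[0], D.shape[0]))
    for _ in range(n_iter):
        grad = (A @ D - X) @ D.T
        A = soft_threshold(A - step * grad, step * lam)
    return A

def learn_dictionary(X, n_atoms, lam=0.3, n_rounds=15, seed=0):
    # Alternate between sparse coding and a least-squares basis update.
    rng = np.random.default_rng(seed)
    D = rng.standard_normal((n_atoms, X.shape[1]))
    D /= np.linalg.norm(D, axis=1, keepdims=True)
    for _ in range(n_rounds):
        A = sparse_codes(X, D, lam)
        D = np.linalg.lstsq(A, X, rcond=None)[0]
        # Project each basis vector back into the unit ball.
        D /= np.maximum(np.linalg.norm(D, axis=1, keepdims=True), 1.0)
    return D

def make_windows(n, freq, rng, width=8):
    # Toy "sensor windows": noisy sinusoids with random phase.
    t = np.arange(width)
    phase = rng.uniform(0, 2 * np.pi, size=(n, 1))
    return np.sin(freq * t + phase) + 0.05 * rng.standard_normal((n, width))

rng = np.random.default_rng(1)

# Step 1: learn an over-complete basis (16 atoms for 8-dim windows) without labels.
unlabeled = np.vstack([make_windows(100, 0.5, rng), make_windows(100, 1.5, rng)])
D = learn_dictionary(unlabeled, n_atoms=16)

# Step 2: encode a small labeled set and compute one centroid per class.
labeled_X = np.vstack([make_windows(5, 0.5, rng), make_windows(5, 1.5, rng)])
labeled_y = np.array([0] * 5 + [1] * 5)
codes = sparse_codes(labeled_X, D)
centroids = np.stack([codes[labeled_y == c].mean(axis=0) for c in (0, 1)])

def predict(X):
    # Step 3: classify new windows by the nearest class centroid in code space.
    A = sparse_codes(X, D)
    dists = np.linalg.norm(A[:, None, :] - centroids[None, :, :], axis=2)
    return dists.argmin(axis=1)

predictions = predict(make_windows(20, 0.5, rng))
```

The key design point is that the expensive step, learning the basis, needs no labels; only the final classifier uses annotated data, which is why a handful of labeled windows can suffice.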
