Peixian Zhuang

Tree-Mamba: A Tree-Aware Mamba for Underwater Monocular Depth Estimation

Jul 10, 2025

Abstract:Underwater Monocular Depth Estimation (UMDE) is a critical task that aims to estimate high-precision depth maps from underwater degraded images caused by light absorption and scattering effects in marine environments. Recently, Mamba-based methods have achieved promising performance across various vision tasks; however, they struggle with the UMDE task because their inflexible state scanning strategies fail to model the structural features of underwater images effectively. Meanwhile, existing UMDE datasets usually contain unreliable depth labels, leading to incorrect object-depth relationships between underwater images and their corresponding depth maps. To overcome these limitations, we develop a novel tree-aware Mamba method, dubbed Tree-Mamba, for estimating accurate monocular depth maps from underwater degraded images. Specifically, we propose a tree-aware scanning strategy that adaptively constructs a minimum spanning tree based on feature similarity. The spatial topological features among the tree nodes are then flexibly aggregated through bottom-up and top-down traversals, enabling stronger multi-scale feature representation capabilities. Moreover, we construct an underwater depth estimation benchmark (called BlueDepth), which consists of 38,162 underwater image pairs with reliable depth labels. This benchmark serves as a foundational dataset for training existing deep learning-based UMDE methods to learn accurate object-depth relationships. Extensive experiments demonstrate the superiority of the proposed Tree-Mamba over several leading methods in both qualitative results and quantitative evaluations with competitive computational efficiency. Code and dataset will be available at https://wyjgr.github.io/Tree-Mamba.html.
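The minimum-spanning-tree step mentioned in the abstract can be illustrated with a small sketch. This is not the authors' implementation: it assumes a 4-connected pixel grid, Euclidean distance between feature vectors as the edge weight, and Kruskal's algorithm, none of which the abstract specifies. The function name `grid_mst` is hypothetical, and the bottom-up and top-down aggregation is not shown.

```python
import math


def grid_mst(features):
    """Build a minimum spanning tree over an H x W grid of feature vectors.

    Assumptions (not from the paper): 4-connected neighbours, Euclidean
    feature distance as edge weight, Kruskal's algorithm with union-find.
    Returns a list of (weight, node_u, node_v) with nodes numbered row-major.
    """
    h, w = len(features), len(features[0])

    def dist(a, b):
        return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

    # Collect edges between horizontally and vertically adjacent cells.
    edges = []
    for r in range(h):
        for c in range(w):
            u = r * w + c
            if c + 1 < w:
                edges.append((dist(features[r][c], features[r][c + 1]), u, u + 1))
            if r + 1 < h:
                edges.append((dist(features[r][c], features[r + 1][c]), u, u + w))

    parent = list(range(h * w))

    def find(x):
        # Union-find lookup with path halving.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    tree = []
    for weight, u, v in sorted(edges):
        ru, rv = find(u), find(v)
        if ru != rv:  # keep the edge only if it joins two components
            parent[ru] = rv
            tree.append((weight, u, v))
    return tree


# Toy 2 x 3 grid with two homogeneous regions (feature 0 and feature 5).
toy = [[[0.0], [0.0], [5.0]],
       [[0.0], [5.0], [5.0]]]
mst = grid_mst(toy)
```

In this toy grid the tree links cells inside each region with zero-cost edges and crosses the region boundary exactly once, which is the property that lets a tree-based scan follow image structure rather than a fixed raster order.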

MGD-SAM2: Multi-view Guided Detail-enhanced Segment Anything Model 2 for High-Resolution Class-agnostic Segmentation

Mar 31, 2025

Abstract:Segment Anything Models (SAMs), as vision foundation models, have demonstrated remarkable performance across various image analysis tasks. Despite their strong generalization capabilities, SAMs encounter challenges in fine-grained detail segmentation for high-resolution class-independent segmentation (HRCS), due to the limitations in the direct processing of high-resolution inputs and low-resolution mask predictions, and the reliance on accurate manual prompts. To address these limitations, we propose MGD-SAM2, which integrates SAM2 with multi-view feature interaction between a global image and local patches to achieve precise segmentation. MGD-SAM2 incorporates the pre-trained SAM2 with four novel modules: the Multi-view Perception Adapter (MPAdapter), the Multi-view Complementary Enhancement Module (MCEM), the Hierarchical Multi-view Interaction Module (HMIM), and the Detail Refinement Module (DRM). Specifically, we first introduce MPAdapter to adapt the SAM2 encoder for enhanced extraction of local details and global semantics in HRCS images. Then, MCEM and HMIM are proposed to further exploit local texture and global context by aggregating multi-view features within and across multiple scales. Finally, DRM is designed to generate gradually restored high-resolution mask predictions, compensating for the loss of fine-grained details resulting from directly upsampling the low-resolution prediction maps. Experimental results demonstrate the superior performance and strong generalization of our model on multiple high-resolution and normal-resolution datasets. Code will be available at https://github.com/sevenshr/MGD-SAM2.

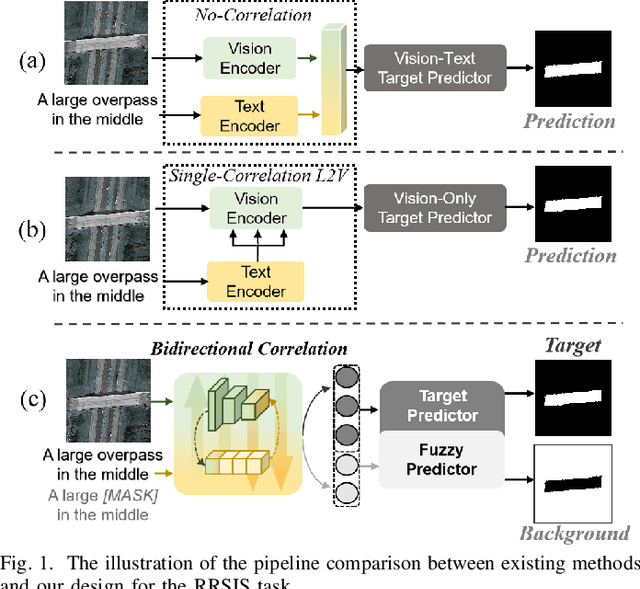

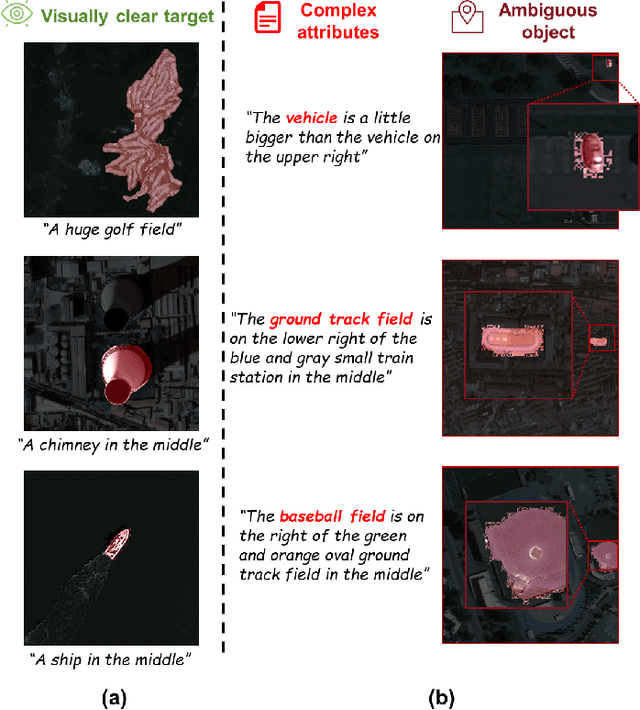

Referring Remote Sensing Image Segmentation via Bidirectional Alignment Guided Joint Prediction

Feb 12, 2025

Abstract:Referring Remote Sensing Image Segmentation (RRSIS) is critical for ecological monitoring, urban planning, and disaster management, requiring precise segmentation of objects in remote sensing imagery guided by textual descriptions. This task is uniquely challenging due to the considerable vision-language gap, the high spatial resolution and broad coverage of remote sensing imagery with diverse categories and small targets, and the presence of clustered, unclear targets with blurred edges. To tackle these issues, we propose a novel framework designed to bridge the vision-language gap, enhance multi-scale feature interaction, and improve fine-grained object differentiation. Specifically, the framework introduces: (1) the Bidirectional Spatial Correlation (BSC) for improved vision-language feature alignment, (2) the Target-Background TwinStream Decoder (T-BTD) for precise distinction between targets and non-targets, and (3) the Dual-Modal Object Learning Strategy (D-MOLS) for robust multimodal feature reconstruction. Extensive experiments on the benchmark datasets RefSegRS and RRSIS-D demonstrate that the framework achieves state-of-the-art performance. Specifically, it improves the overall IoU (oIoU) by 3.76 percentage points (80.57) and 1.44 percentage points (79.23) on the two datasets, respectively. Additionally, it outperforms previous methods in the mean IoU (mIoU) by 5.37 percentage points (67.95) and 1.84 percentage points (66.04), effectively addressing the core challenges of RRSIS with enhanced precision and robustness.
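The abstract reports both oIoU and mIoU without defining them. The sketch below assumes the definitions commonly used in referring segmentation: oIoU divides the summed intersections by the summed unions over all samples, while mIoU averages the per-sample IoU. Masks are represented as sets of pixel indices for brevity, and the function name `overall_and_mean_iou` is hypothetical.

```python
def overall_and_mean_iou(pairs):
    """Compute (oIoU, mIoU) for a non-empty list of (pred, gt) pixel-index sets.

    Assumed definitions (not stated in the abstract):
      oIoU = sum of intersections / sum of unions across all samples
      mIoU = mean of the per-sample IoU values
    A sample whose prediction and ground truth are both empty counts as IoU 1.
    """
    total_inter = 0
    total_union = 0
    per_sample = []
    for pred, gt in pairs:
        inter = len(pred & gt)
        union = len(pred | gt)
        total_inter += inter
        total_union += union
        per_sample.append(inter / union if union else 1.0)
    oiou = total_inter / total_union if total_union else 1.0
    miou = sum(per_sample) / len(per_sample)
    return oiou, miou


# One large target segmented perfectly, one small target half missed.
large = (set(range(100)), set(range(100)))
small = ({200}, {200, 201})
oiou, miou = overall_and_mean_iou([large, small])
```

Here oIoU is 101/102 while mIoU is 0.75: the pooled metric is dominated by the large target, whereas the averaged metric weights the small target equally. That difference is one reason both numbers are usually reported for imagery with many small targets.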

Dual High-Order Total Variation Model for Underwater Image Restoration

Jul 20, 2024

Abstract:Underwater images are typically characterized by color cast, haze, blurring, and uneven illumination due to the selective absorption and scattering that occur when light propagates through water, which limits their practical applications. Underwater image enhancement and restoration (UIER) is one crucial way to improve the visual quality of underwater images. However, most existing UIER methods concentrate on enhancing contrast and dehazing and rarely pay attention to the local illumination differences within the image caused by illumination variations, thus introducing undesirable artifacts and unnatural color. To address this issue, an effective variational framework is proposed based on an extended underwater image formation model (UIFM). Technically, dual high-order regularizations are integrated into the variational model to acquire smoothed local ambient illuminance and structure-revealed reflectance in a unified manner. In our proposed framework, weight-factor-based color compensation is combined with color balance to compensate for the attenuated color channels and remove the color cast. In particular, robust local ambient illuminance is acquired by performing local patch brightest pixel estimation and an improved gamma correction. Additionally, we design an iterative optimization algorithm relying on the alternating direction method of multipliers (ADMM) to accelerate the solution of the proposed variational model. Extensive experiments on three real-world underwater image datasets demonstrate that the proposed method outperforms several state-of-the-art methods with regard to visual quality and quantitative assessments. Moreover, the proposed method can also be extended to outdoor image dehazing, low-light image enhancement, and some high-level vision tasks. The code is available at https://github.com/Hou-Guojia/UDHTV.

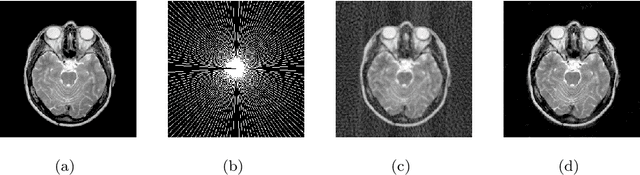

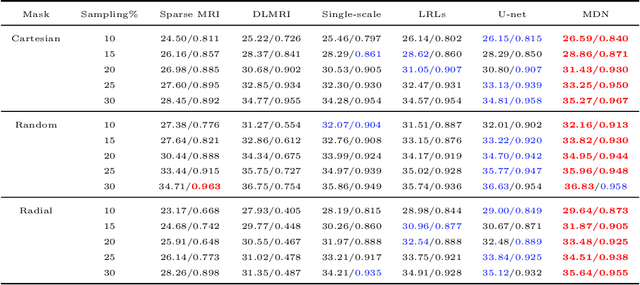

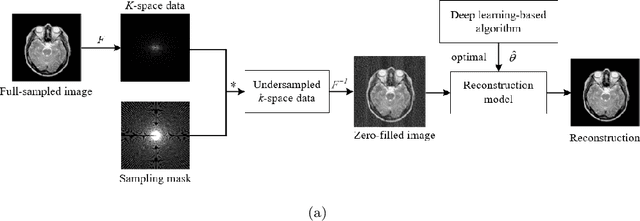

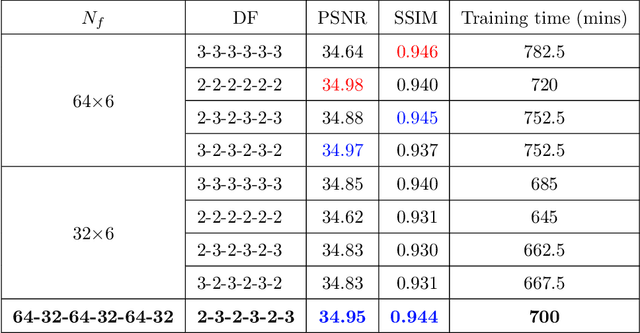

Compressed Sensing MRI via a Multi-scale Dilated Residual Convolution Network

Jun 11, 2019

Abstract:Magnetic resonance imaging (MRI) reconstruction is an active inverse problem which can be addressed by conventional compressed sensing (CS) MRI algorithms that exploit the sparse nature of MRI in an iterative optimization-based manner. However, iterative optimization-based CSMRI methods have two main drawbacks: they are time-consuming and limited in model capacity. Meanwhile, one main challenge for recent deep learning-based CSMRI is the trade-off between model performance and network size. To address the above issues, we develop a new multi-scale dilated network for MRI reconstruction with high speed and outstanding performance. Compared with standard convolutional kernels that have the same receptive fields, dilated convolutions reduce network parameters by using smaller kernels while expanding the receptive fields of those kernels to capture almost the same information. To maintain the abundance of features, we present global and local residual learning to extract more image edges and details. Then we utilize concatenation layers to fuse multi-scale features and residual learning for better reconstruction. Compared with several non-deep and deep learning CSMRI algorithms, the proposed method yields better reconstruction accuracy and noticeable visual improvements. In addition, we test the model in a noisy setting to verify its stability, and then extend the proposed model to an MRI super-resolution task.
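The parameter saving from dilated convolutions described above follows from simple arithmetic, sketched here. The helper names are hypothetical, biases are ignored, and the 64-channel layer is an illustrative choice rather than a configuration taken from the paper.

```python
def effective_kernel_size(kernel_size, dilation):
    """Spatial extent covered by one dilated kernel along each axis.

    A k-tap kernel with dilation d spans k + (k - 1) * (d - 1) positions.
    """
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def conv2d_weight_count(kernel_size, in_channels, out_channels):
    """Number of weights in a square 2D convolution layer (bias excluded)."""
    return kernel_size * kernel_size * in_channels * out_channels


# A 3x3 kernel with dilation 2 covers the same 5x5 window as a dense 5x5 kernel.
span = effective_kernel_size(3, 2)
dilated_weights = conv2d_weight_count(3, 64, 64)  # dilation adds no weights
dense_weights = conv2d_weight_count(5, 64, 64)
```

For this hypothetical layer the dilated 3x3 kernel uses 36,864 weights against 102,400 for the dense 5x5 kernel, at the cost of sampling the window sparsely, which matches the abstract's wording that dilated kernels obtain "almost" the same information.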
